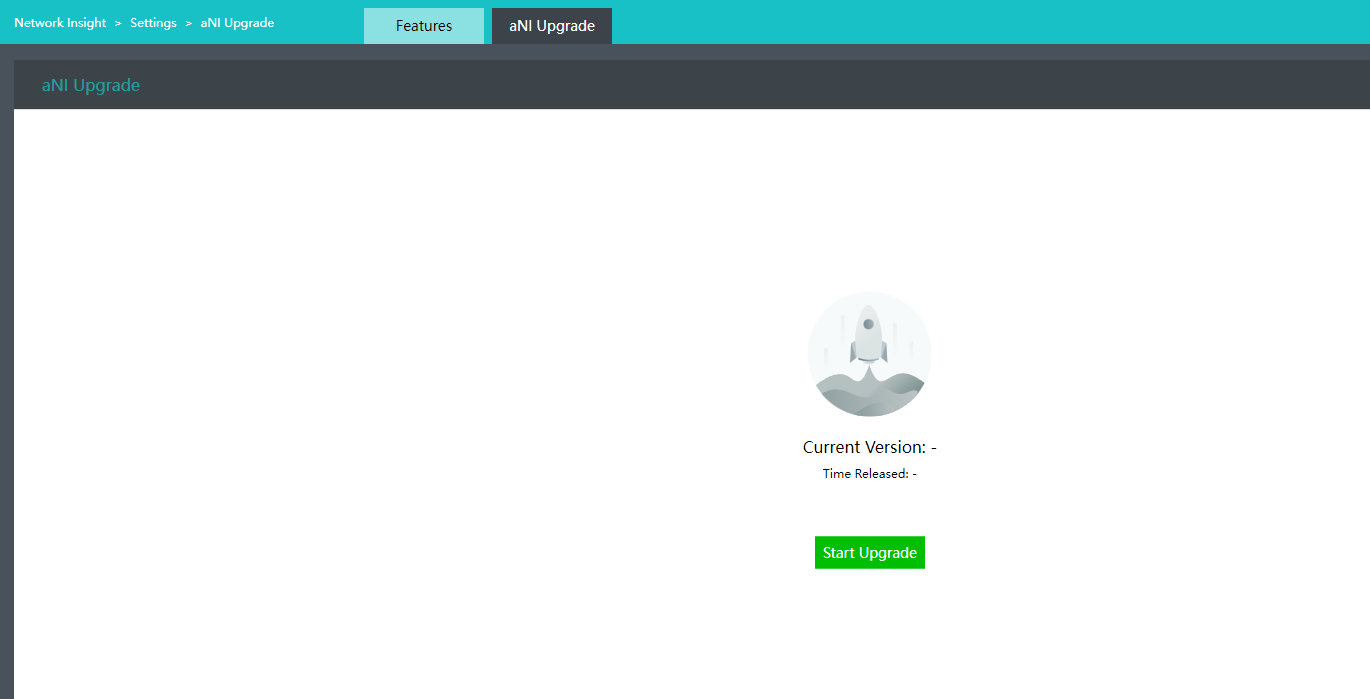

【HCI】User Manual_V6.8.0

Product Description

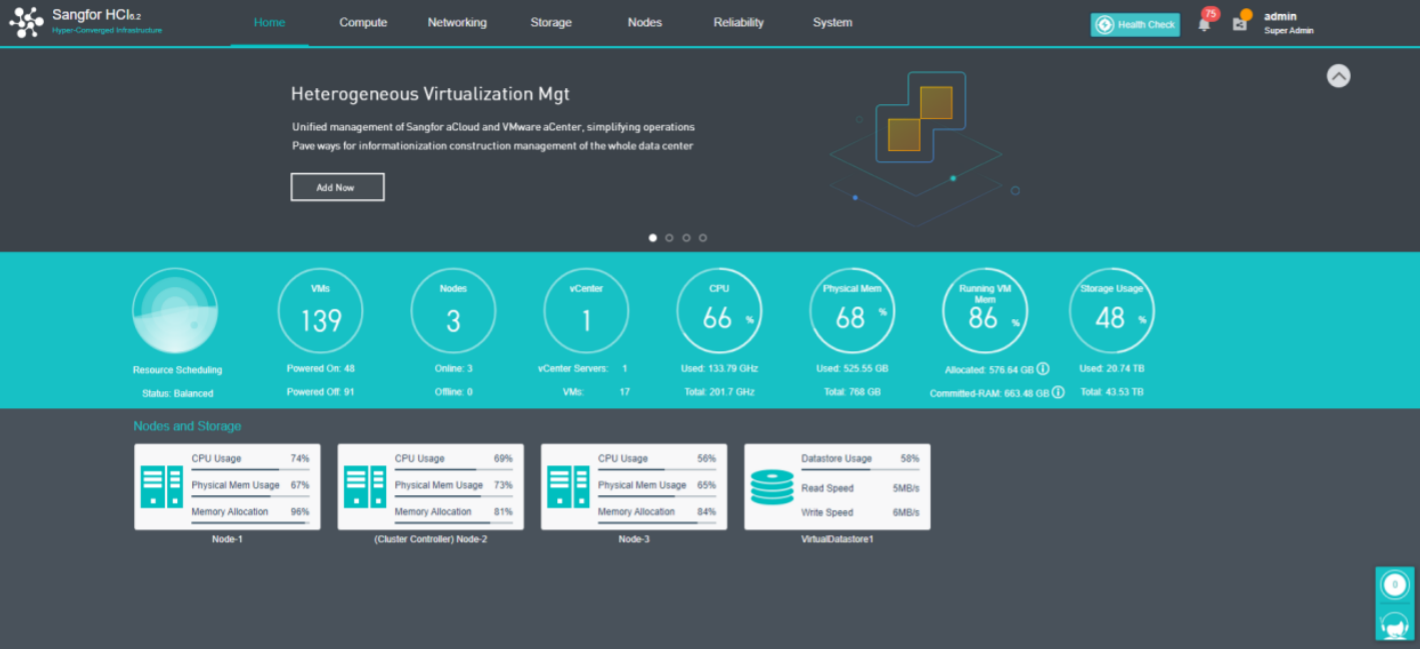

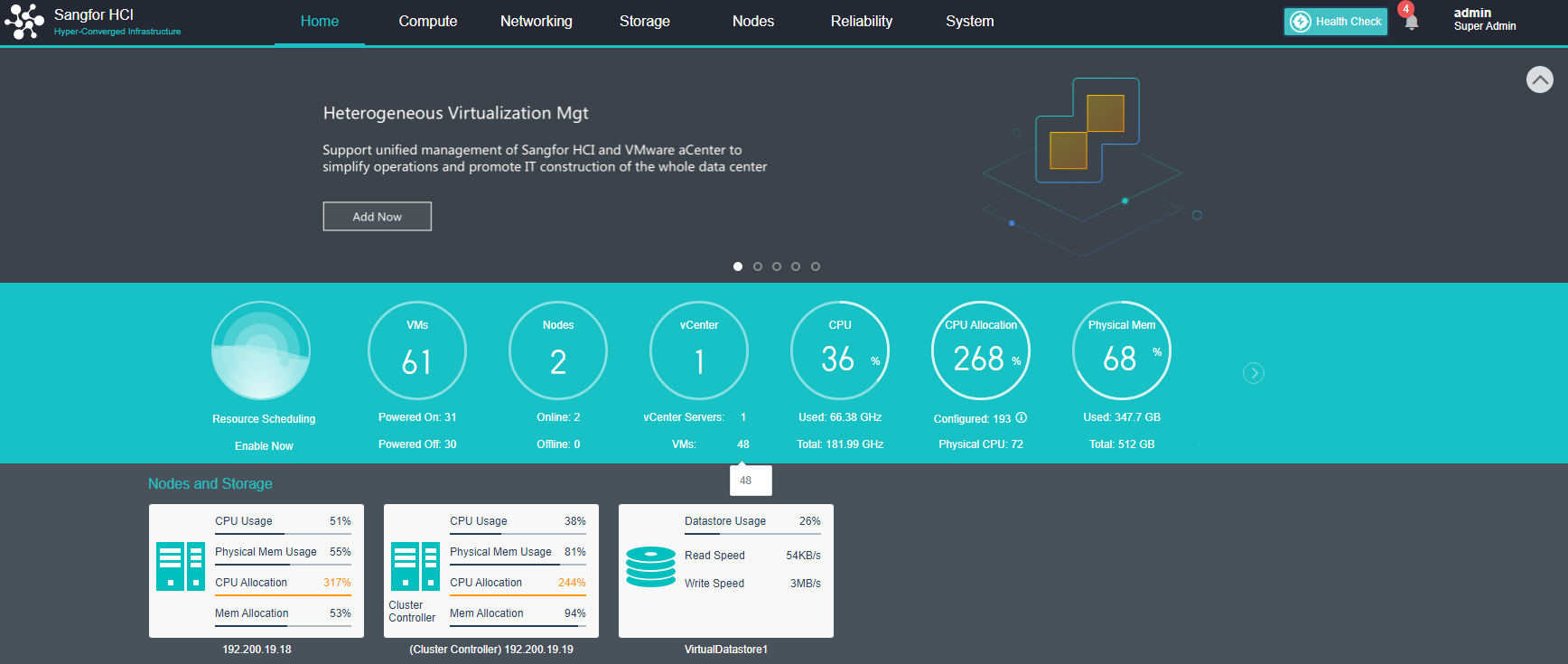

HCI (Hyper-Converged Infrastructure) is a product based on innovative hyper-converged technology. It has complete IT infrastructure service capability and operation and maintenance management service capability and can carry core database, ERP, financial system, production system, and other enterprise key business applications.

Unlike the traditional cloud, we are convinced that the hyper-converged is more portable and flexible. It helps users quickly build a business-driven cloud computing data center, pools users’ IT resources, make IT use service, automates its operation and maintenance, and makes key businesses easily put on the cloud. It is the preferred solution for governments and enterprises to put their businesses on the cloud.

This chapter mainly introduces and explains Sangfor HCI products in detail from different aspects, such as product introduction, product architecture, and key features.

Product Introduction

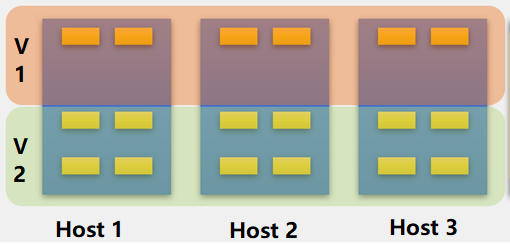

HCI takes resources such as computing, network, and storage as basic components to form a technical architecture that is selected and predefined according to system requirements. The specific implementation method is generally to integrate software virtualization technology (including virtualization of computing, network, storage, and security) into the same set of cell nodes (x86 servers). Each set of cell nodes can be aggregated through the network to realize modular seamless horizontal expansion (scale-out) to build a unified resource pool. We are convinced that the HCI architecture based on HCI can replace the heavy and complex traditional cloud infrastructure and realize the minimalism of cloud architecture.

Relying on HCI technology, HCI simplifies the data center into two kinds of equipment: x86 server and switch, which reduces the initial investment cost and the cost of learning to use the equipment. Smooth capacity expansion is realized by accessing the SCP cloud computing platform to support the demand for high-performance services. HCI has a built-in P2V migration tool, which can realize one-click migration of applications to the cloud and improve its innovation efficiency. HCI ensures data reliability through CDP technology, data multi-copy technology, virtual machine backup technology, application data backup, network behavior management, and other technologies. It has unique optimization technology for key applications, supports the stable operation of key businesses of Oracle RAC cluster, SQL server AlwaysOn cluster, Kingdee, UFIDA, and ERP software, and can meet the needs of the ultra-high reliable business. The built-in firewall, WAF, cloud antivirus, and other business applications running on the cloud platform make them have a perfect security protection system, meet the security and compliance requirements, and effectively prevent East-West security threats in the data center. HCI has global resource management capability. It deploys and configures what you draw is what you get, reduces application deployment time, fault location, and repair time, and can master the use method of the platform without special training.

Product Architecture

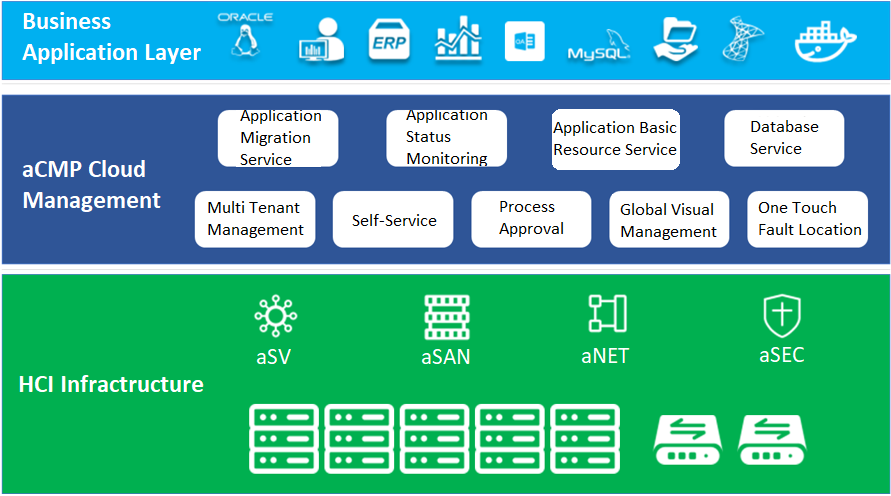

Sangfor HCI architecture consists of two parts: HCI and SCP. Based on the HCI architecture, users can build business systems based on their needs.

Sangfor HCI is based on HCI architecture, takes virtualization technology as the core, and uses components such as computing virtualization (aSV), storage virtualization (aSAN), network virtualization (aNET), and security virtualization (aSEC) to integrate computing, storage, network, and other virtual resources into a standard x86 server to form a benchmark architecture unit. Moreover, multiple sets of unit devices can be aggregated through the network to realize modular seamless scale-out and form a unified resource pool.

SCP provides the management and resource allocation capabilities of HCI and third-party resource pools. Reduces the difficulty of platform operation and maintenance and reduces the operation workload through automatic operation and maintenance tools. With the help of its self-service and process management, it improves the agility and rapid response ability of users’ IT services to improve its management level and service efficiency. Through the combination of the measurement function of the cloud computing management platform, fine-grained measurement and statistics are carried out on the IT services and resources used by each tenant to help enterprises and organizations calculate the costs and benefits of the Department.

HCI has four main components: aSV (server virtualization), aNET (network virtualization), aSAN (storage virtualization), and aSEC (security virtualization and NFV). aSV is the kernel of the whole HCI and is a required option. aNET, aSAN and aSEC can be selected from three or all according to specific requirements.

aSV is the computing virtualization component in the HCI architecture solution and the core component in the whole HCI architecture. The computing resource virtualization technology presents the standard virtual machine to the end-user through the general x86 server through the aSV component. These virtual machines are like a series of products produced by the same manufacturer. They have a series of hardware configurations and use the same drivers.

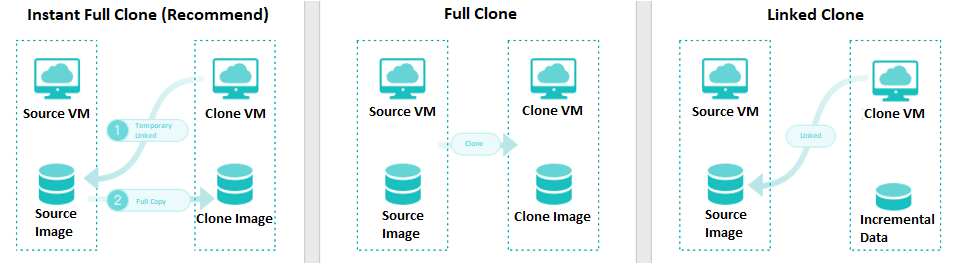

aSAN is a self-developed distributed storage system. It uses virtualization technology to "pool" the local hard disk in the general x86 server in the cluster’s virtual datastore to realize the unified integration, management, and scheduling of server storage resources. Finally, it provides the upper layer with NFS / iSCSI storage interface for virtual machines to freely allocate and use the storage space in the resource pool according to their storage requirements.

aNET is a network virtualization component in the HCI architecture solution. It uses Overlay to build the second tier and realize tenant isolation between business systems. Through NFV, all functional network resources (including basic routing switching, security, and application delivery) required in the network can be allocated and flexibly scheduled on demand to realize network virtualization in HCI architecture.

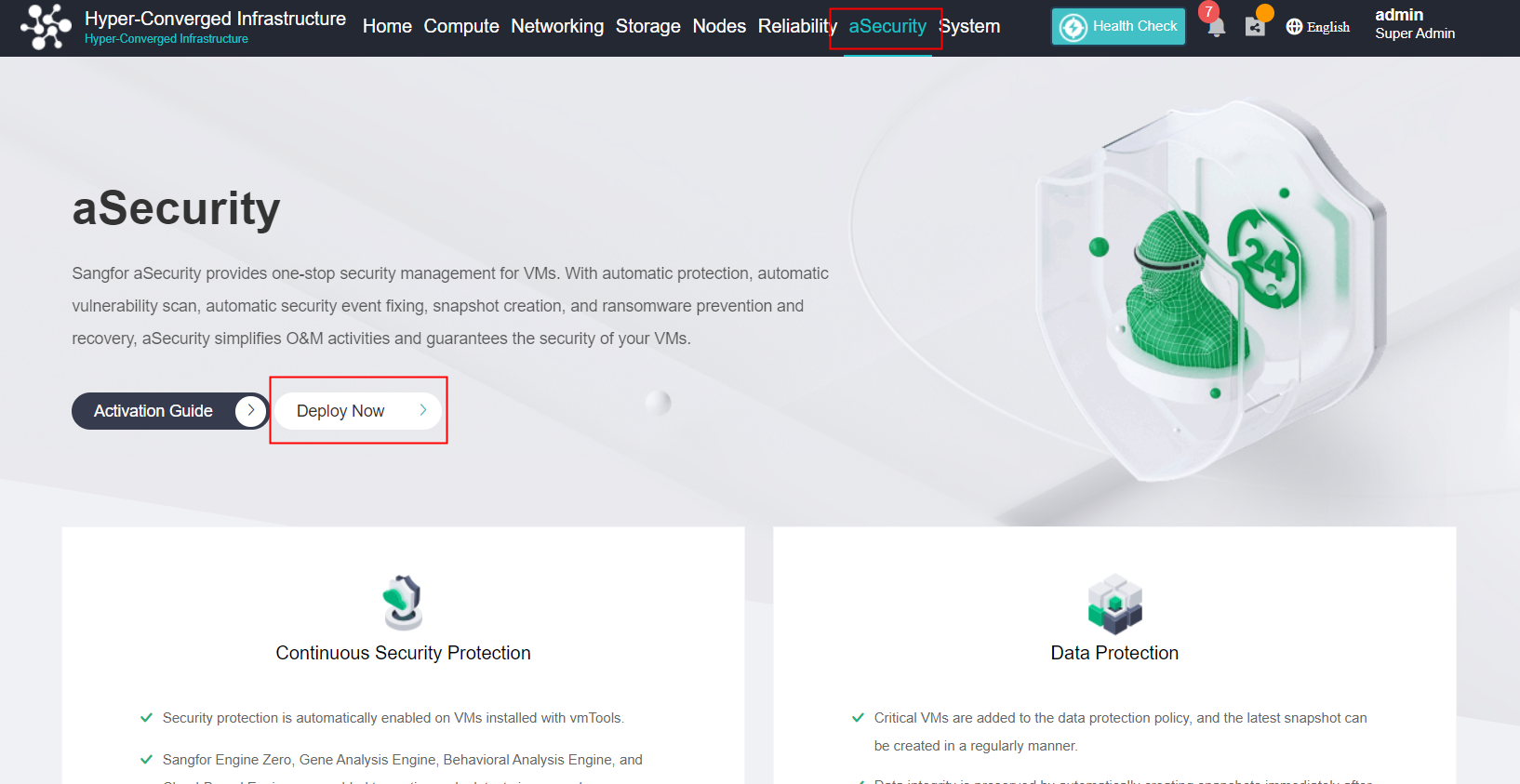

aSEC is a trusted security virtualization and NFV (including: vAC, vAD, vAF, vSSL VPN, vWOC, VDAS). It will be convinced that the existing network devices (SSL, WOC, ad, AF, AC, DAS) will be virtualized and provided separately in the form of templates.

Key Characteristics

Key Features of SCP

-

SCP

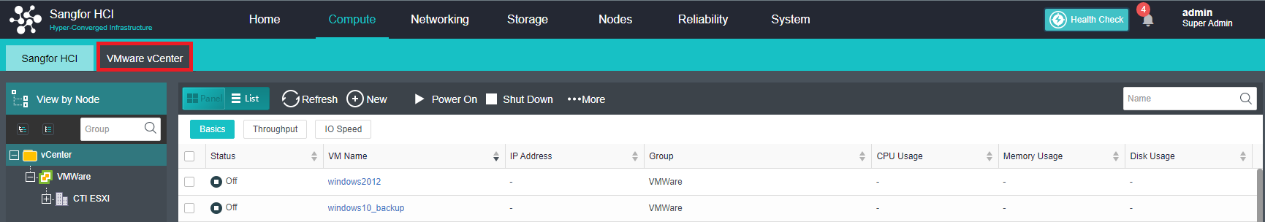

Sangfor SCP can provide rich management functions, including hosting HCI clusters and VMware vCenter, supporting unified licensing for multiple HCI clusters, and supporting multi-tenant management and independent service management. In terms of security, support tenants to configure their own distributed firewall policies. In terms of disaster recovery, SCP integrates the reliability center, which can provide users with a complete remote disaster recovery scheme at the virtual machine level.

-

Application Migration Service

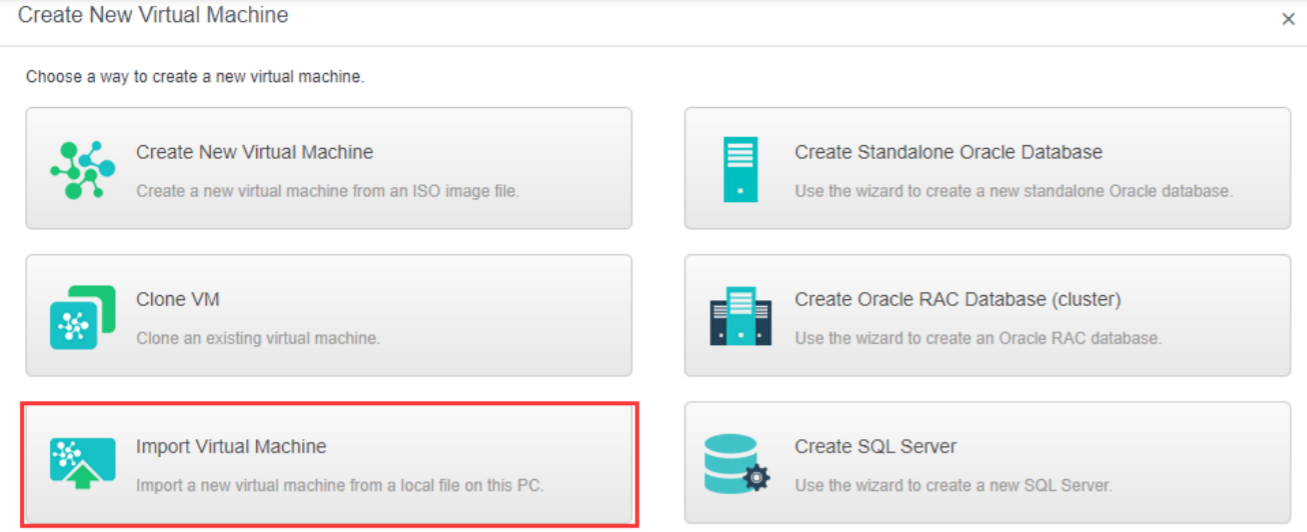

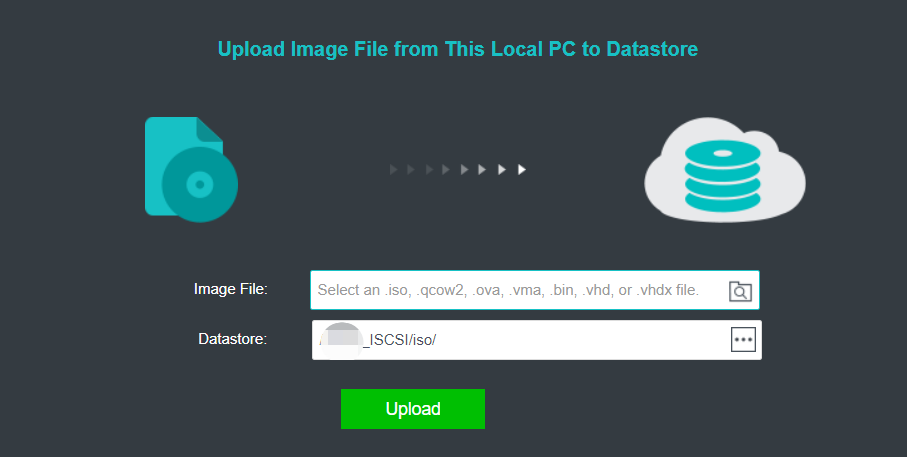

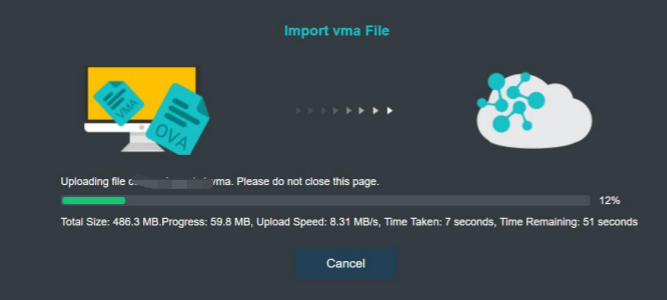

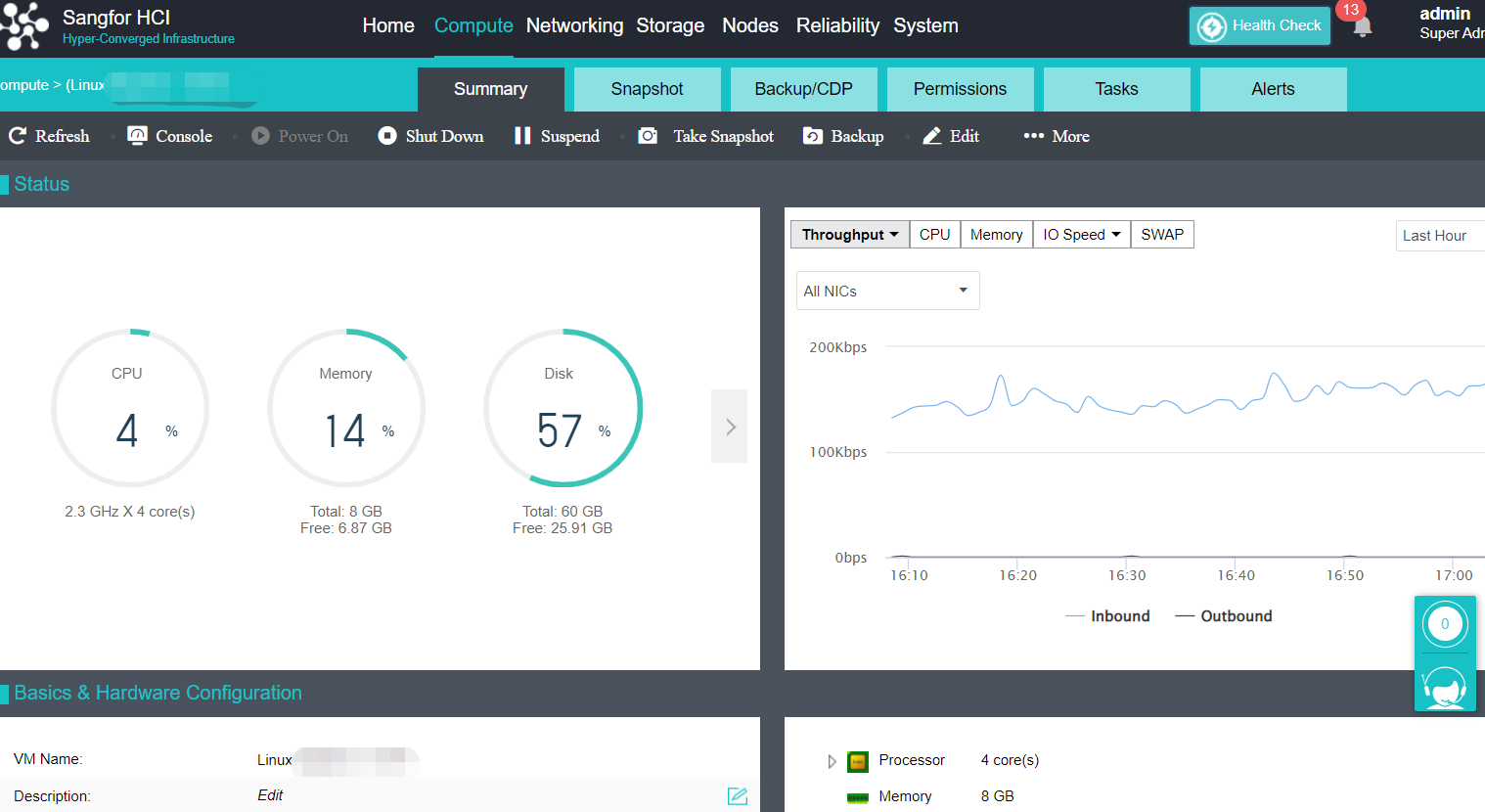

HCI migration tool can copy the existing physical node or windows / Linux operating system on VMware or Citrix platform to the HCI platform through the network.

-

Heterogeneous Virtualization Management(aHM)

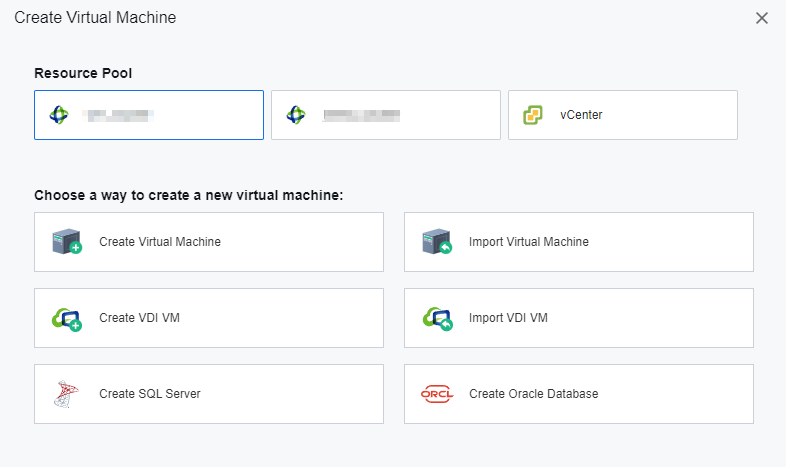

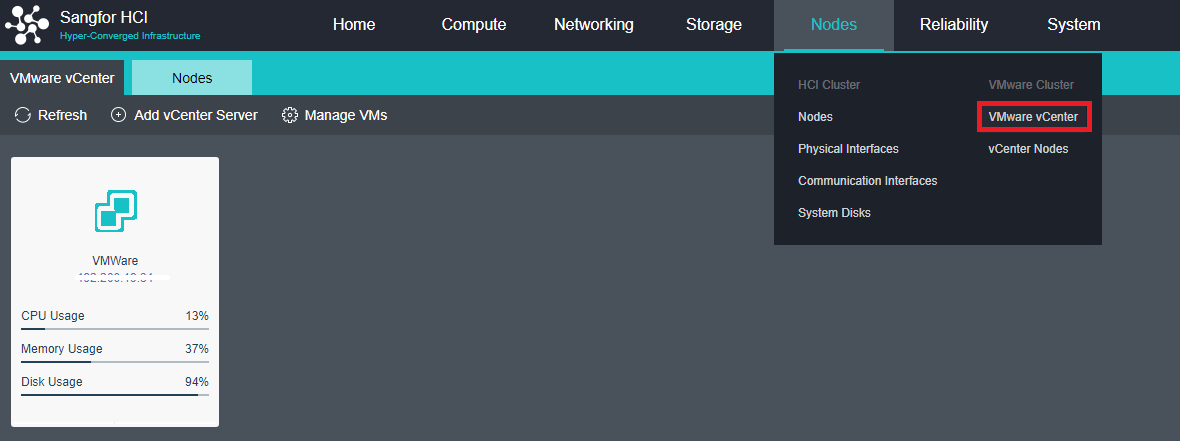

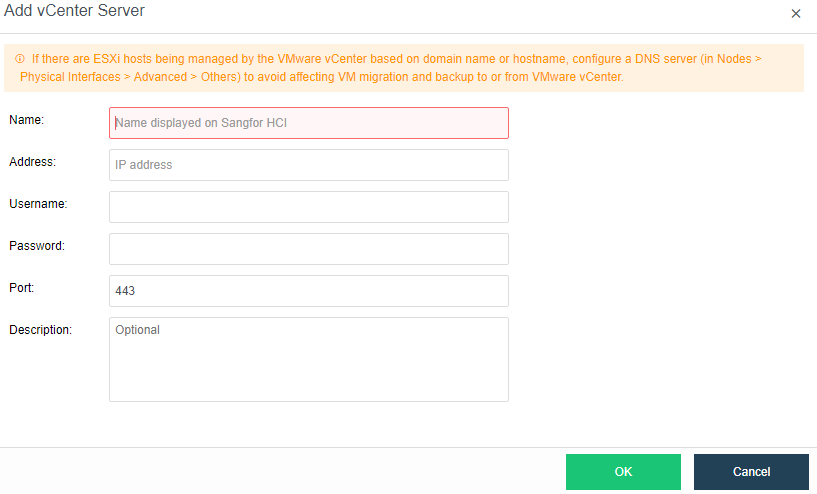

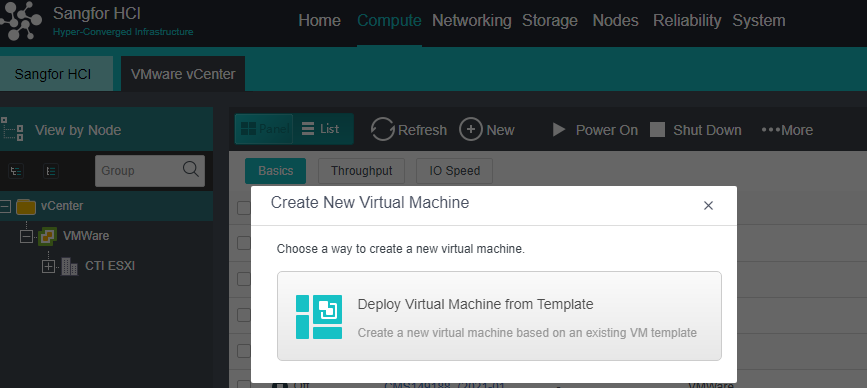

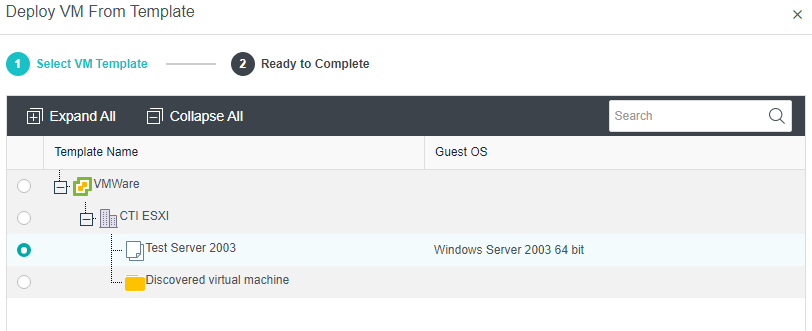

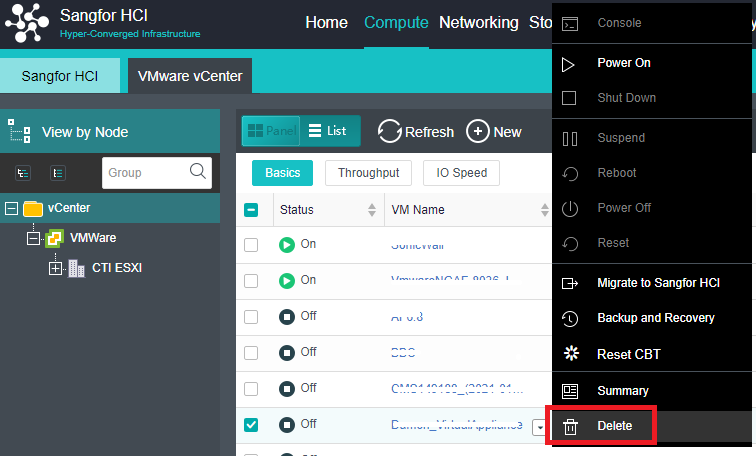

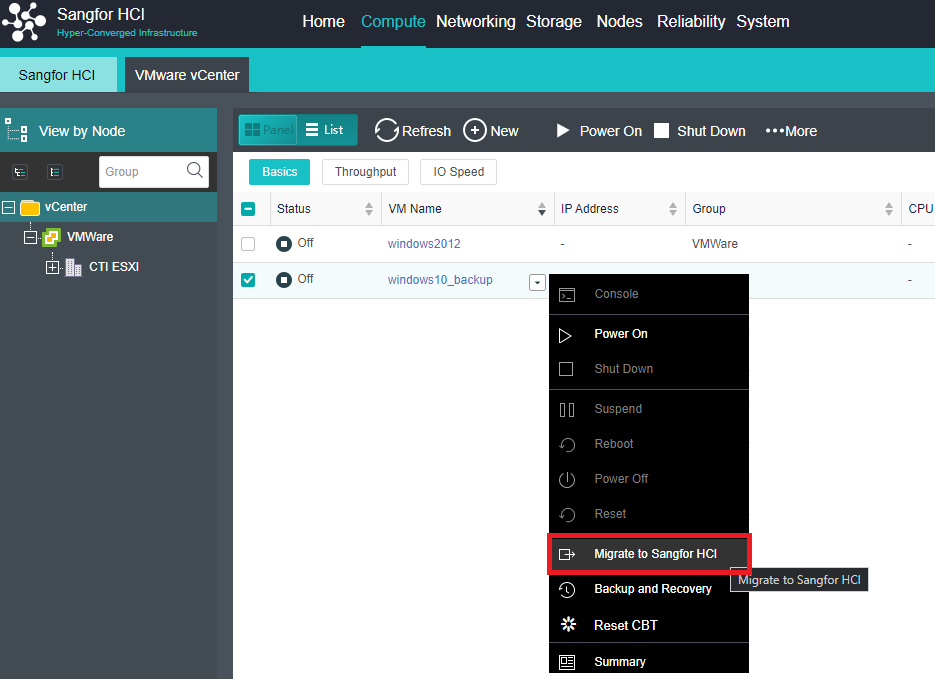

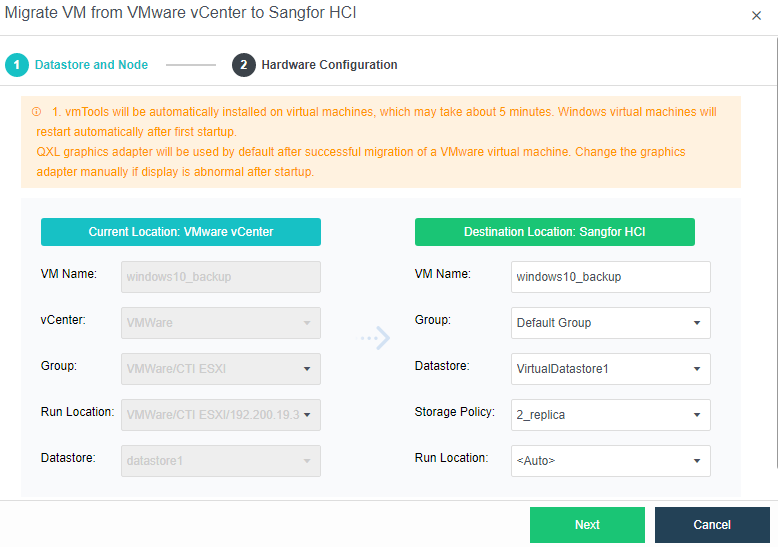

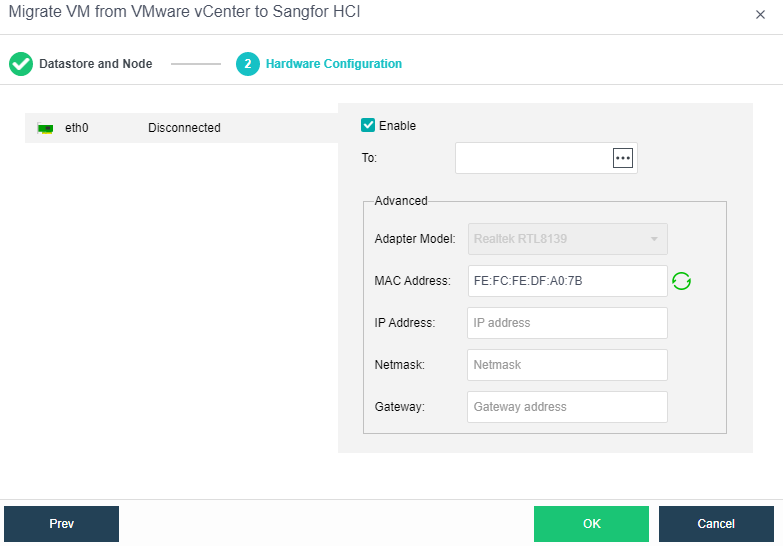

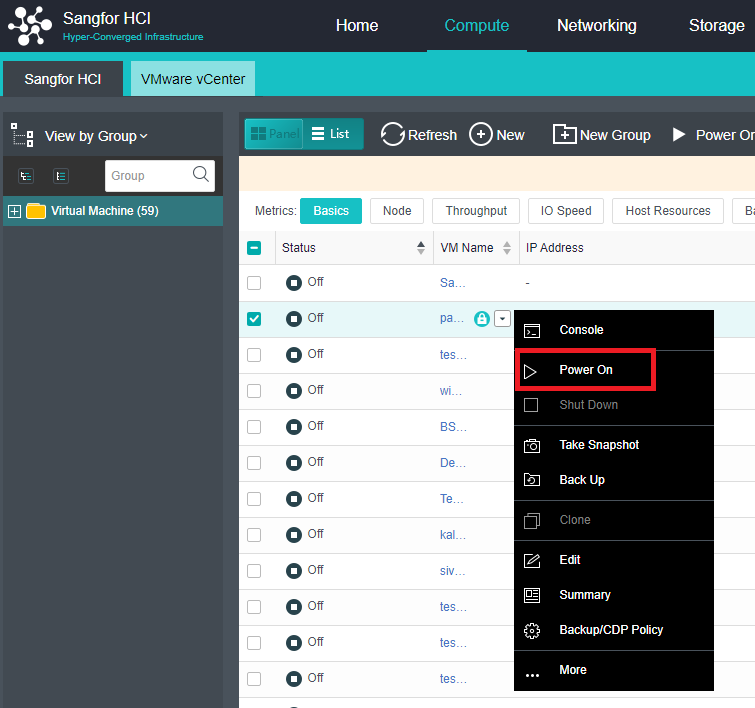

HCI can add VMware vCenter and manage it to realize centralized management of dual platforms. Virtual machines between VMware vCenter and HCI can support two-way migration.

-

Database Wizard Deployment

Oracle RAC on the HCI platform supports wizard deployment and SQL Server AlwaysOn cluster database.

-

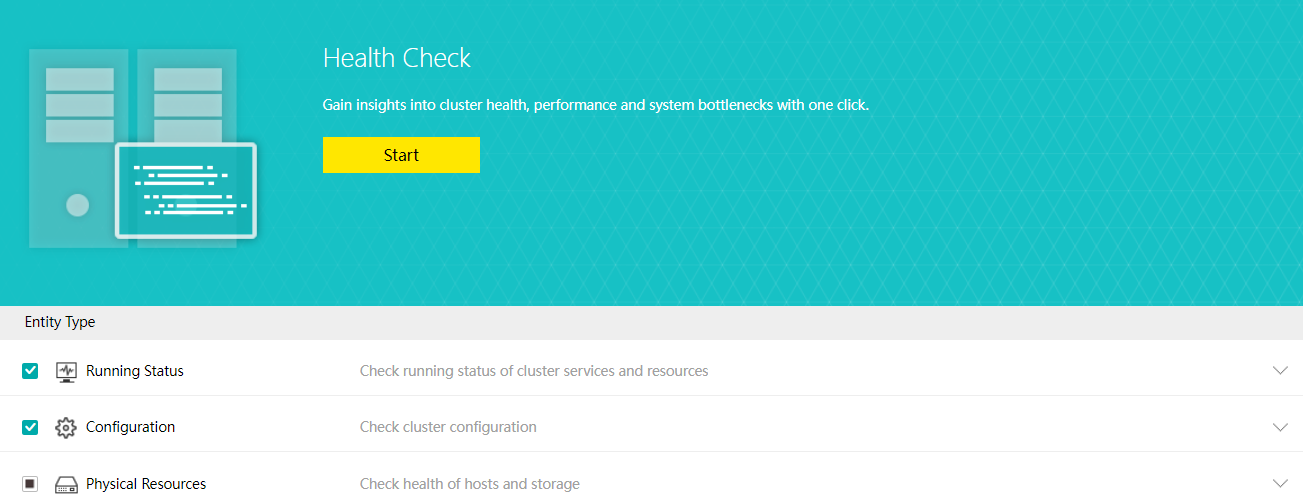

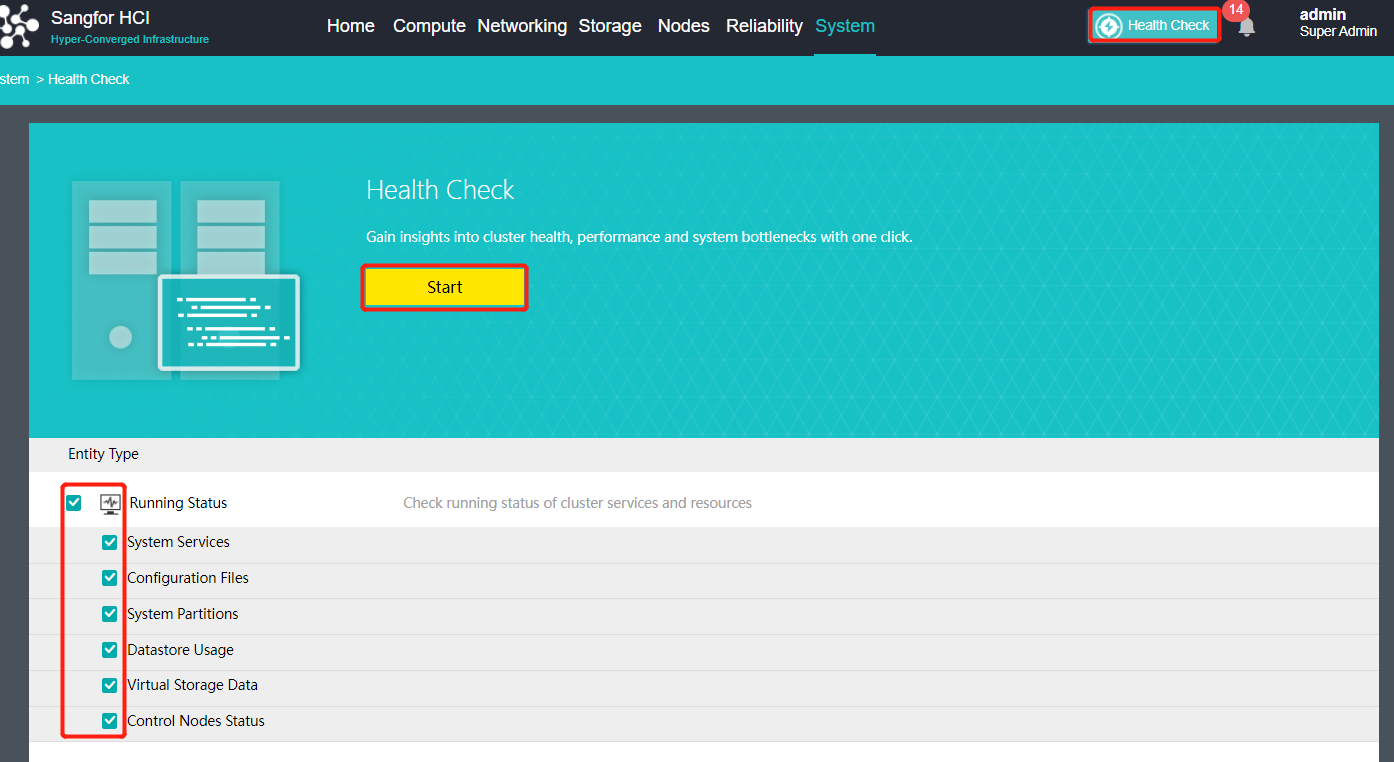

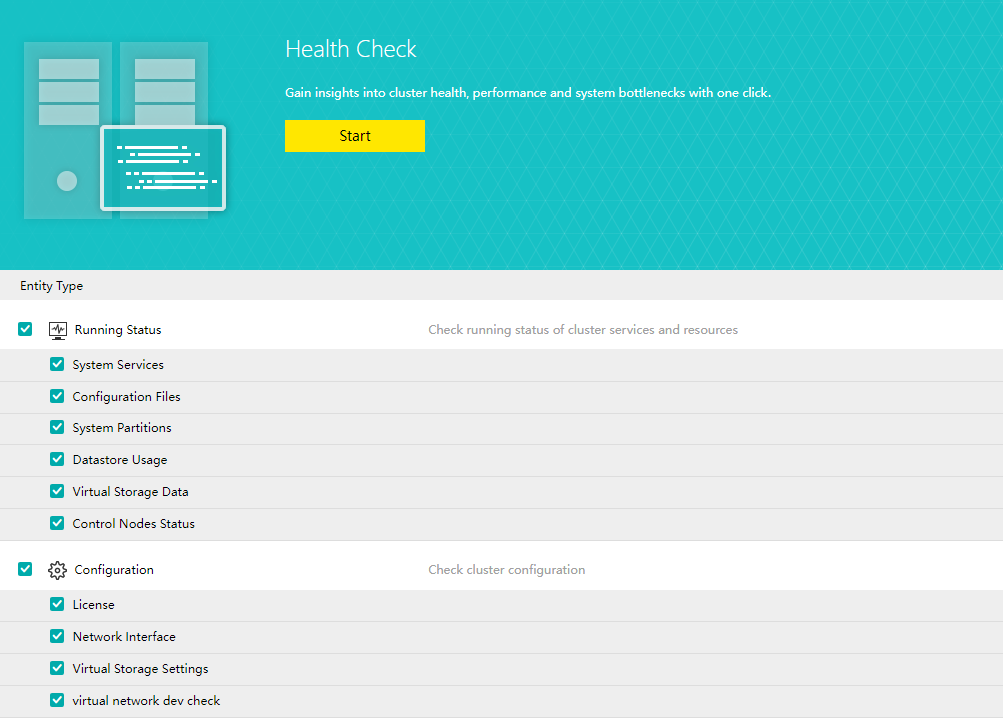

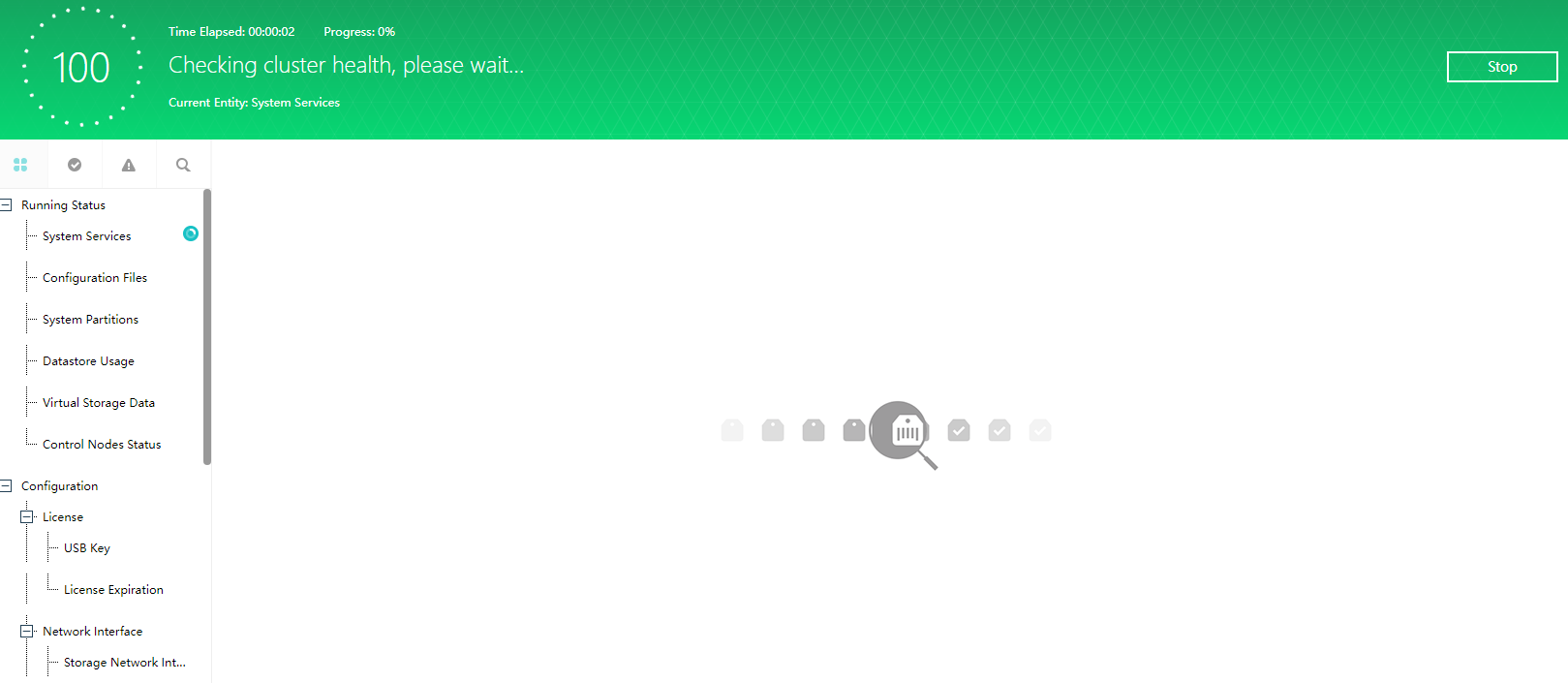

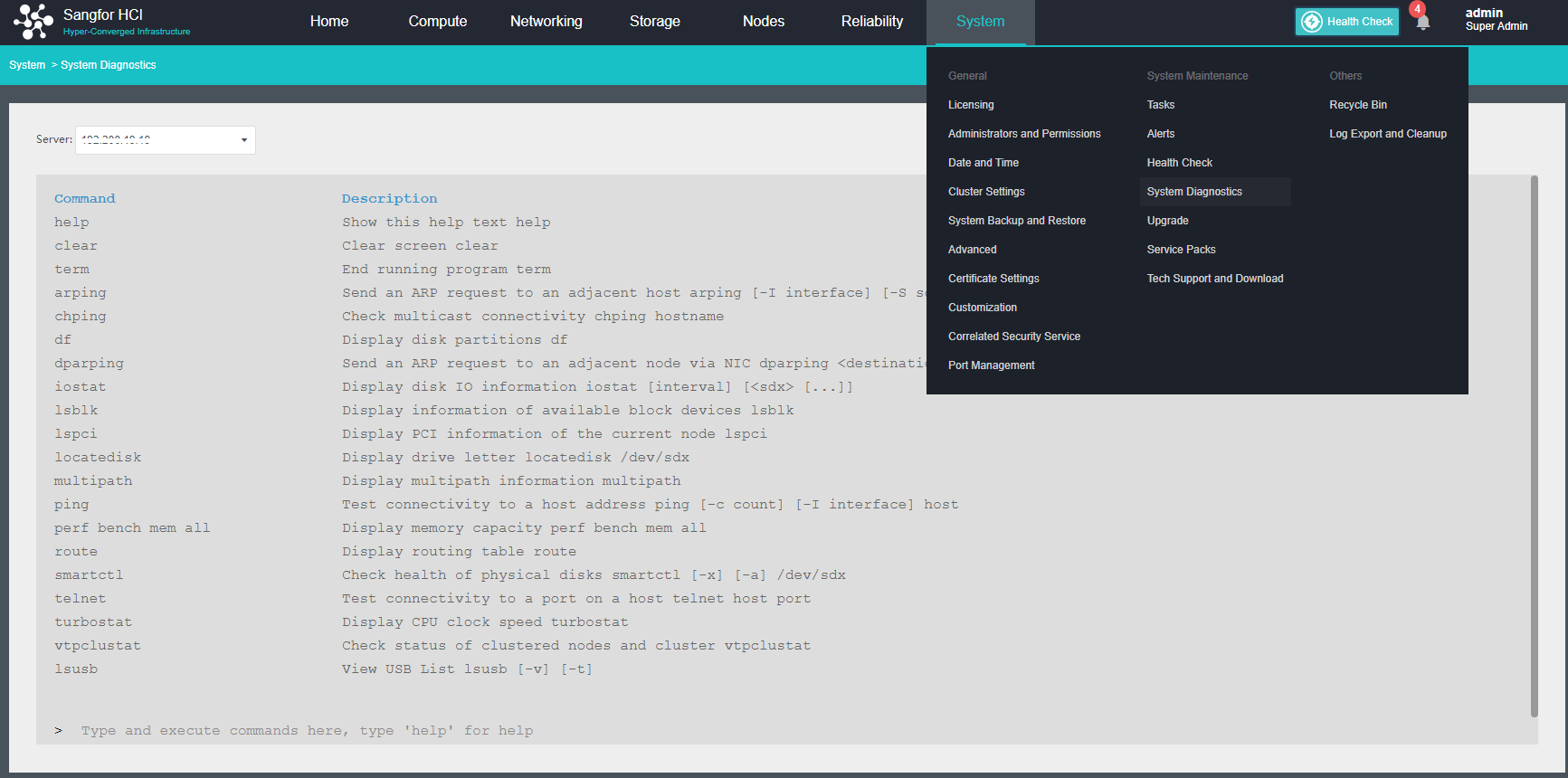

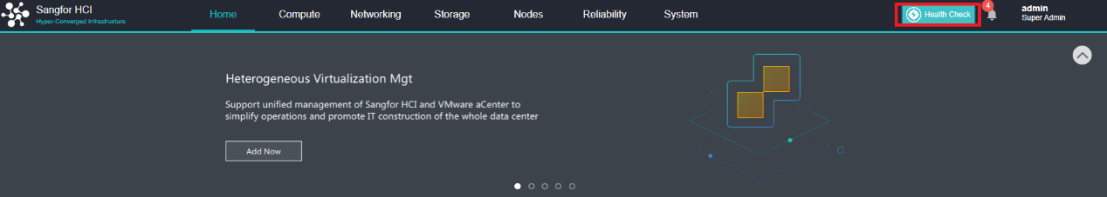

Health Check

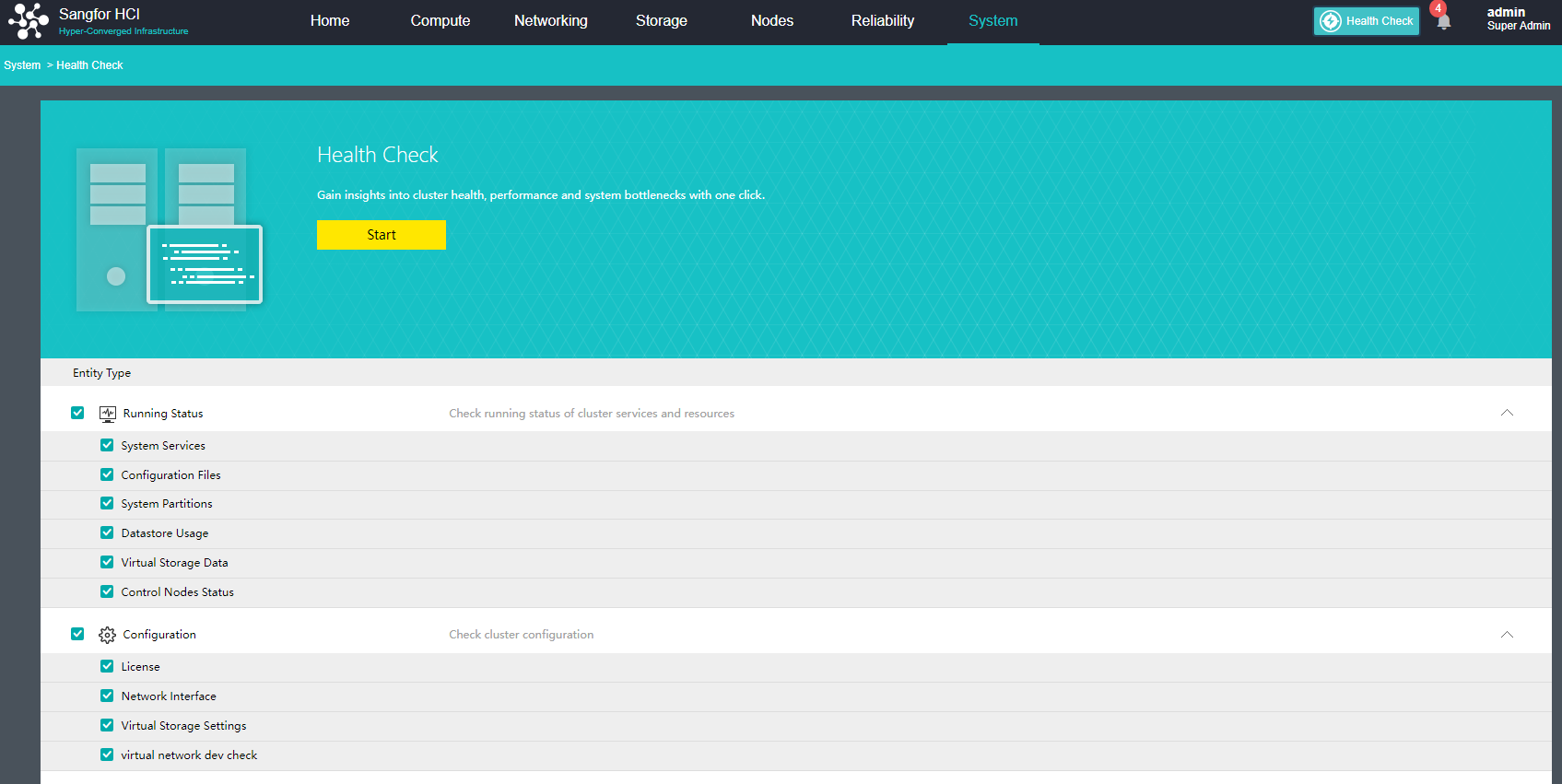

Health Check can identify the system hardware, configuration, and system operation, quickly locate the problem location (hardware, platform, and business), conduct the layered inspection, and provide detailed fault solutions.

-

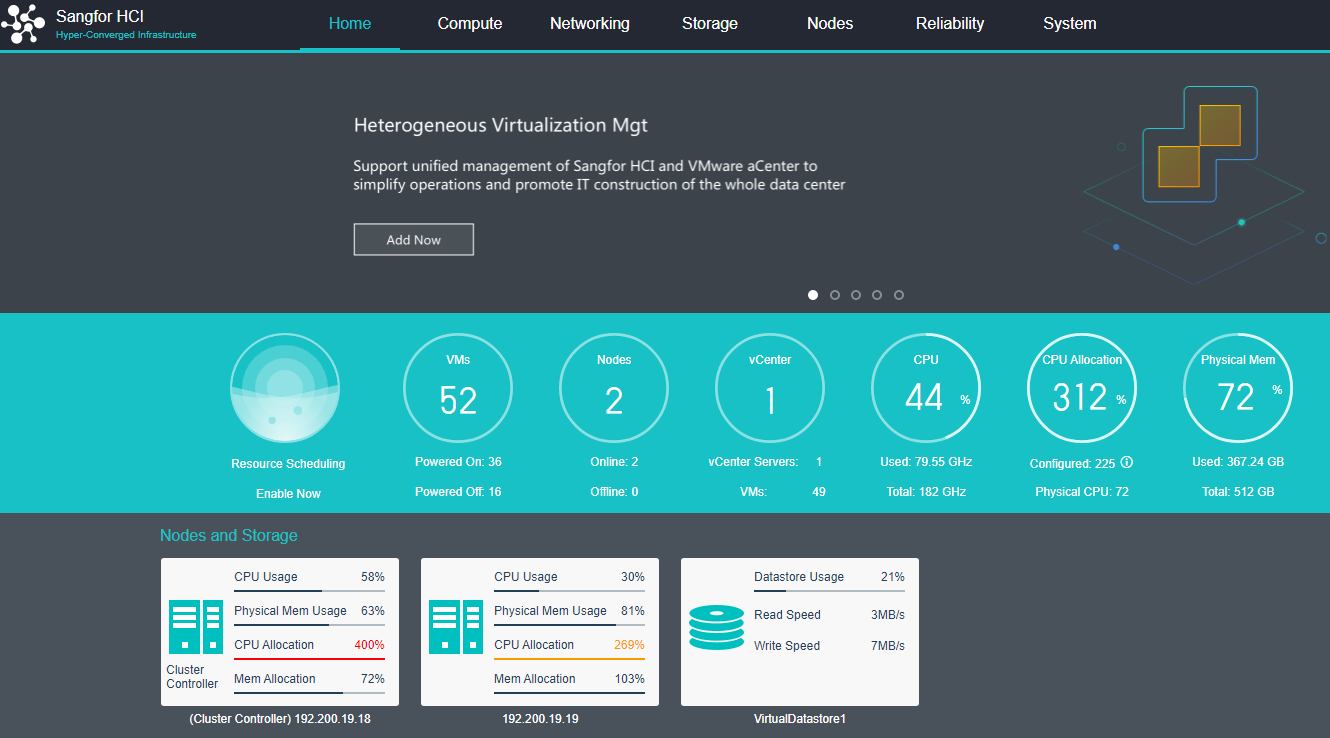

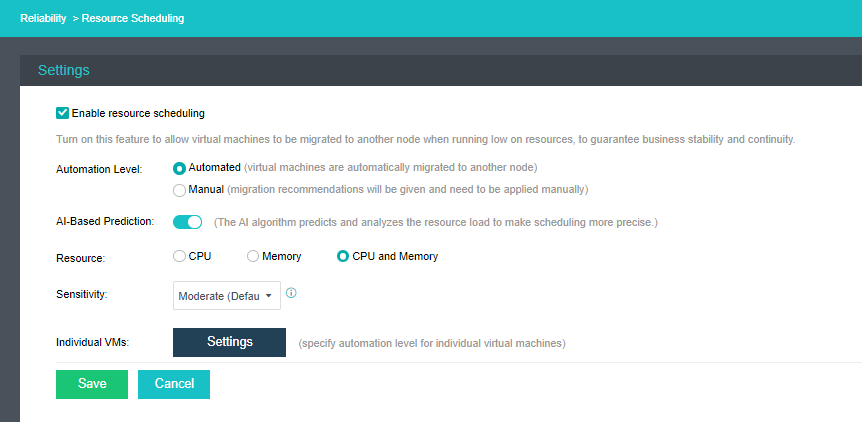

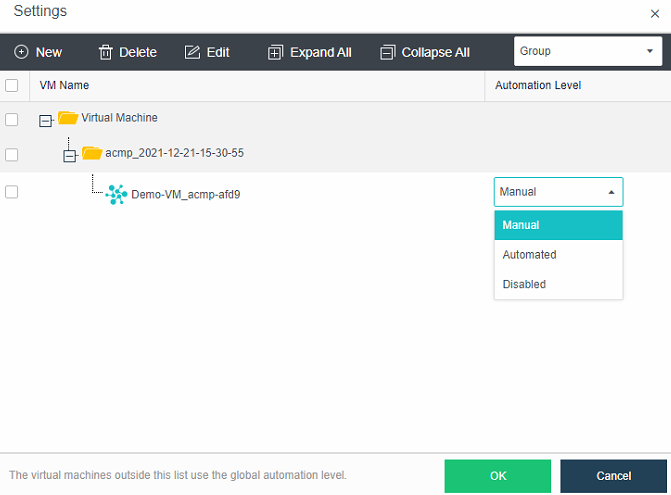

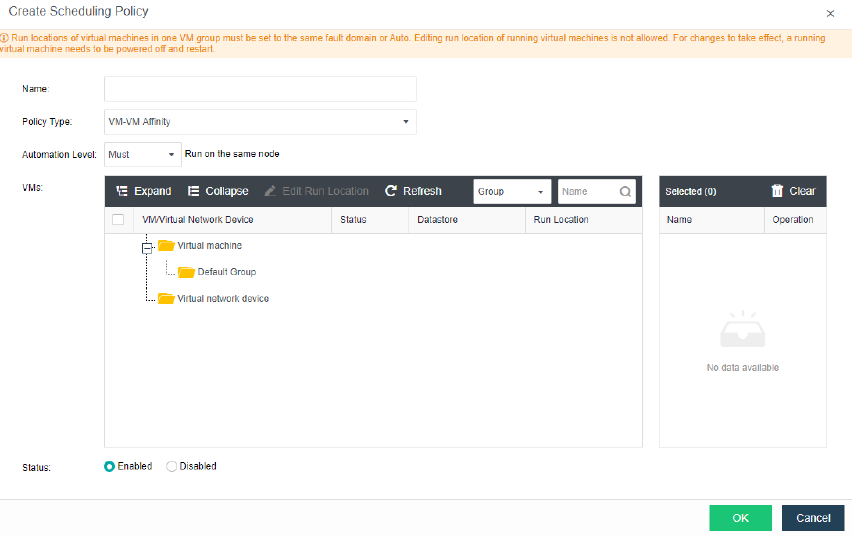

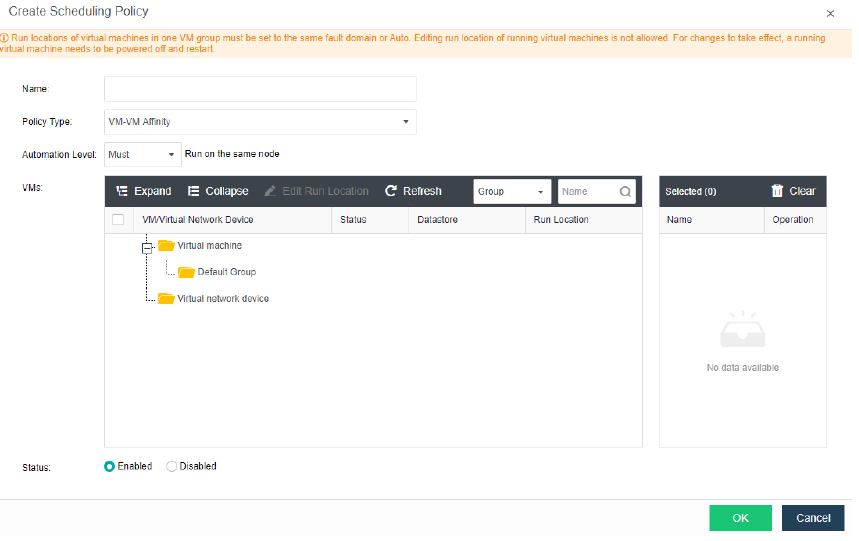

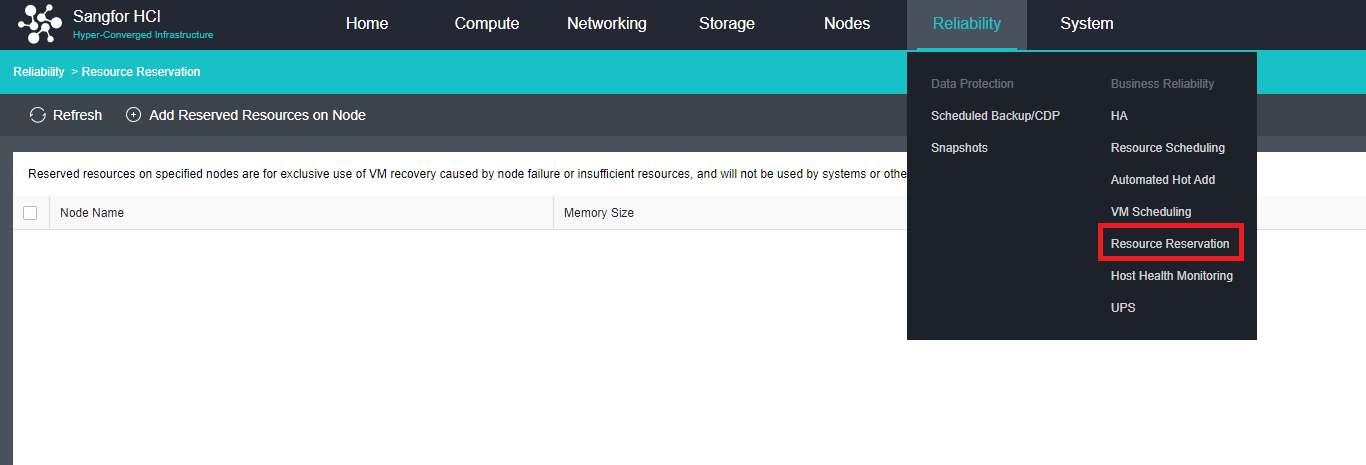

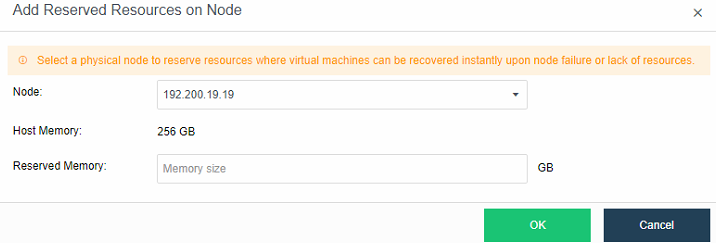

Resource Scheduling

Resource scheduling refers to scheduling cluster resources in specific scenarios, migrating virtual machines from nodes with high CPU or memory usage to nodes with low utilization, and reducing the utilization of nodes with an excessive load below the threshold.

-

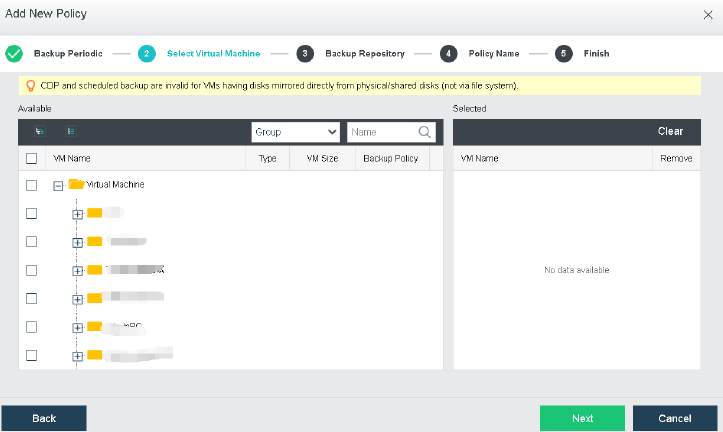

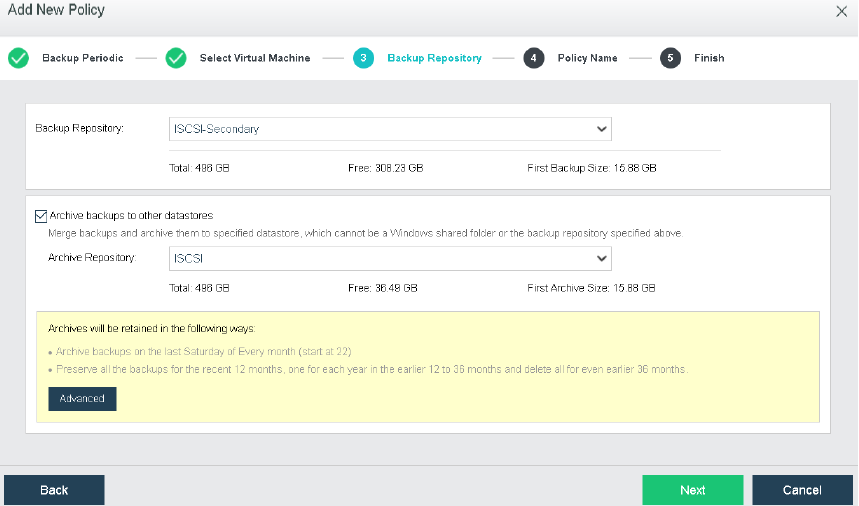

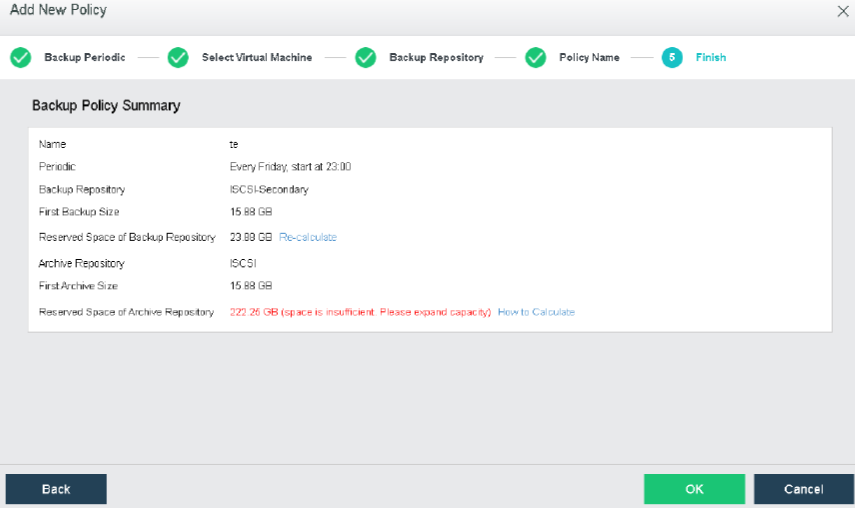

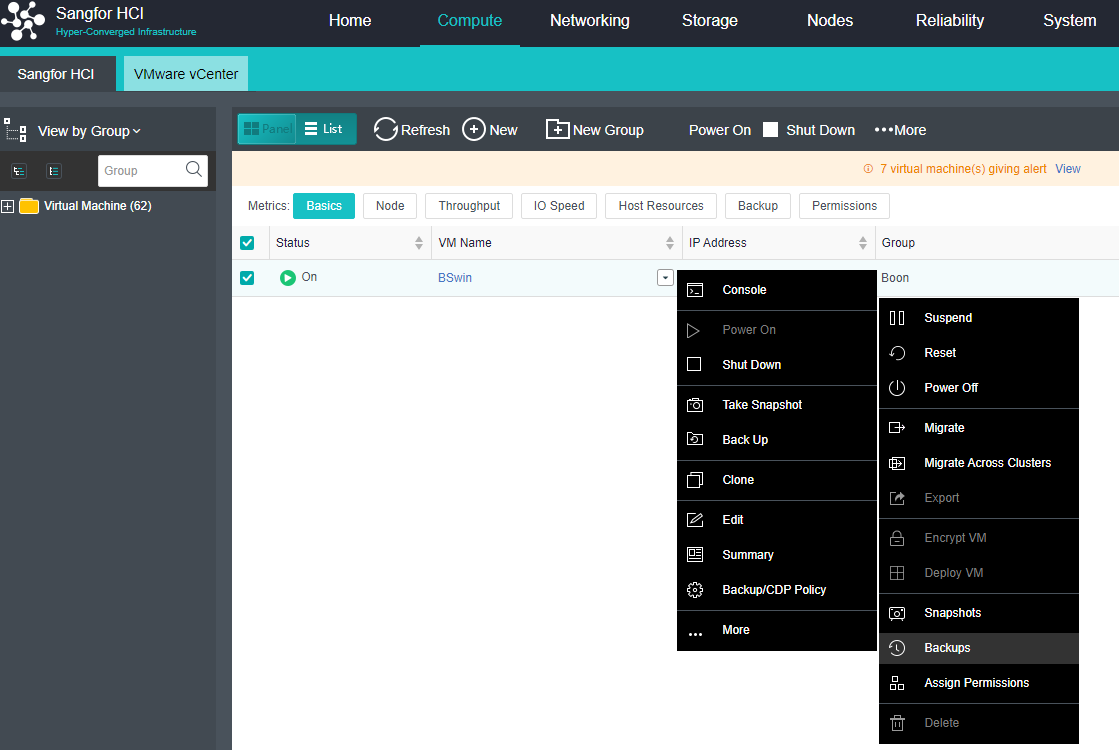

Virtual Machine data Protection

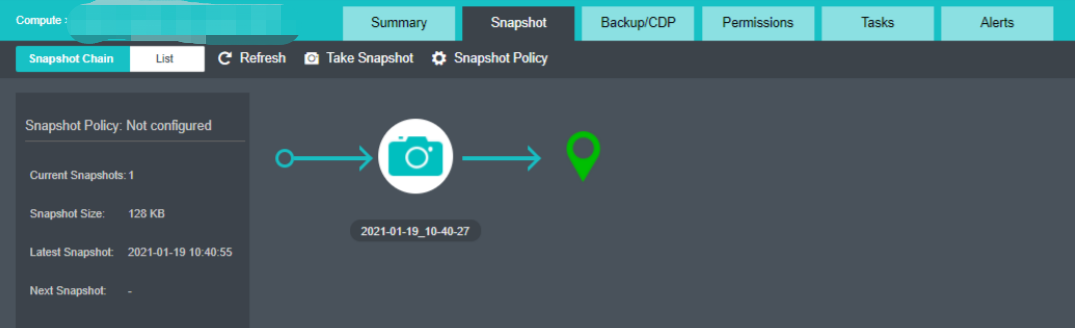

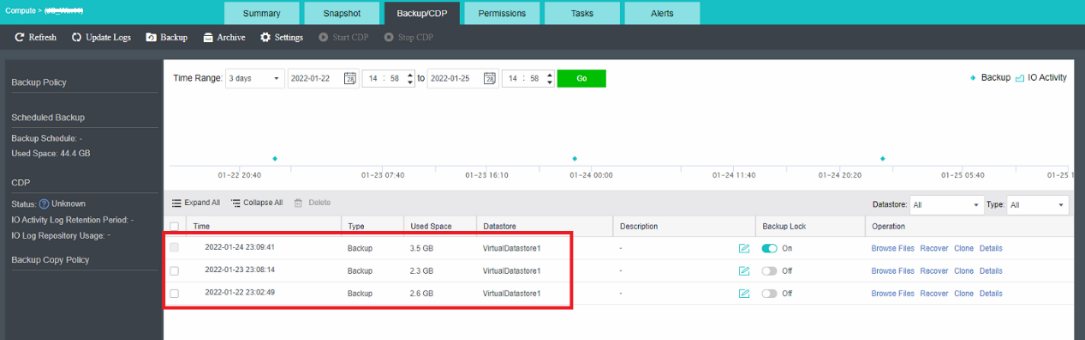

- Virtual machine snapshots are similar to system restore points. A virtual machine can have multiple snapshots.

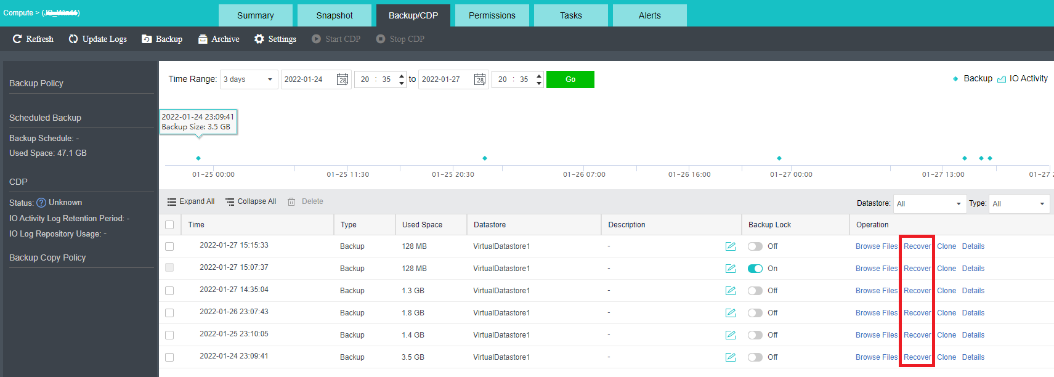

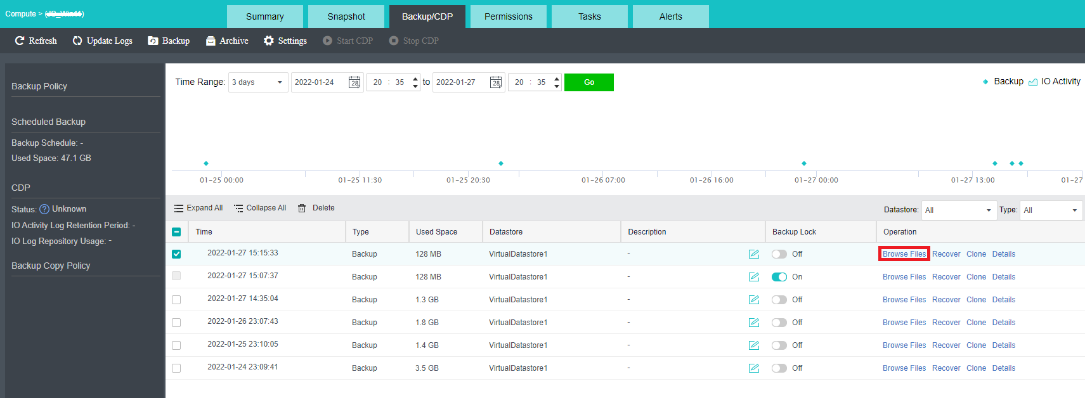

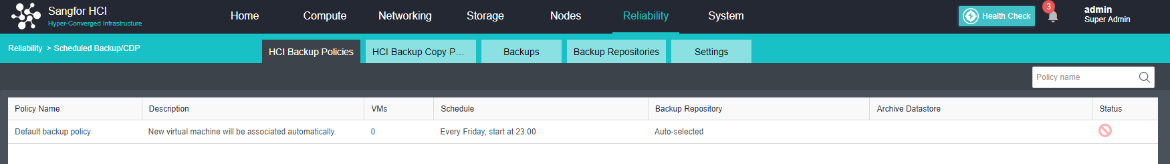

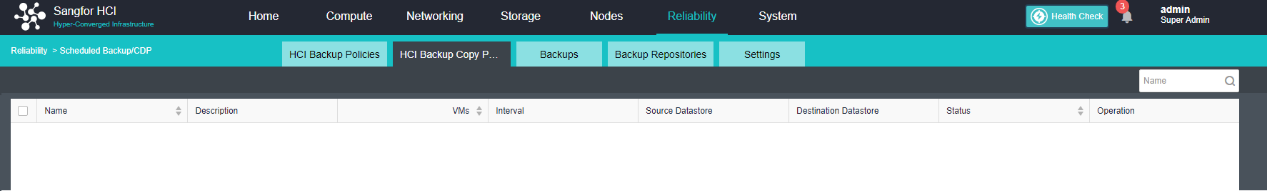

- The virtual machine backup mode supports full backup and incremental backup of virtual machines. The first virtual machine backup of the user is the full backup of the virtual machine, and other backups within the backup retention period are incremental backups.

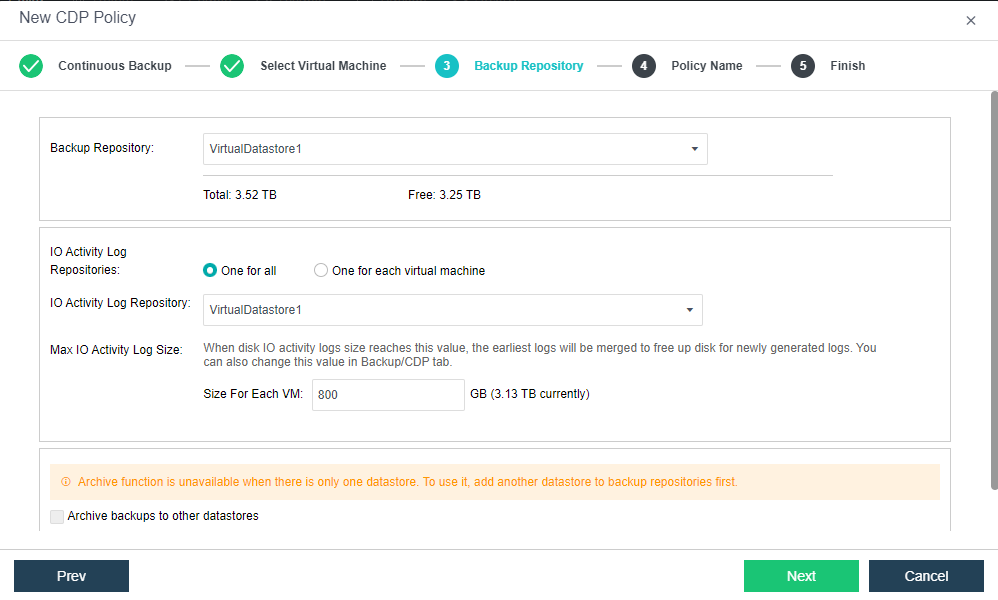

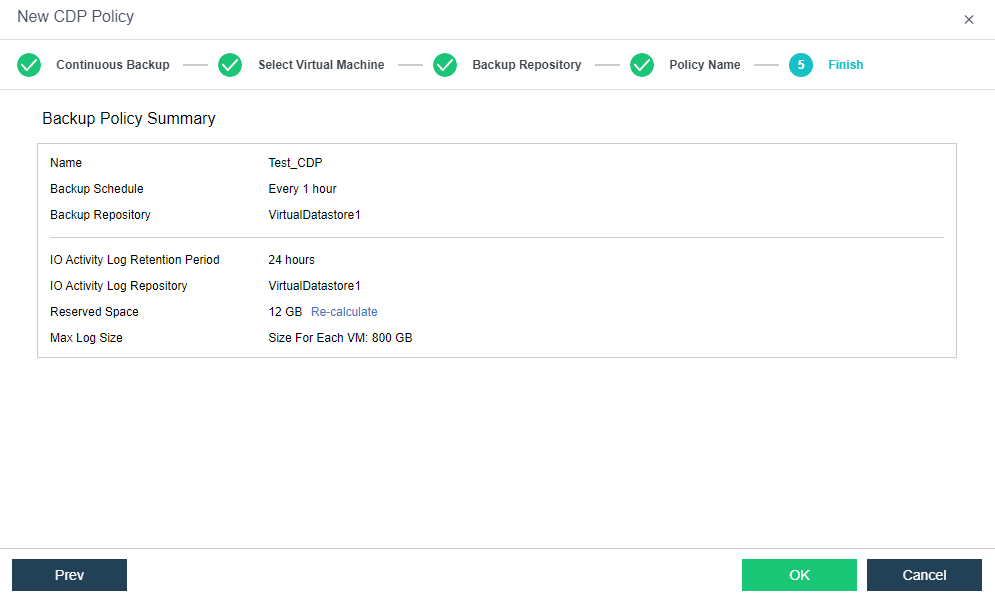

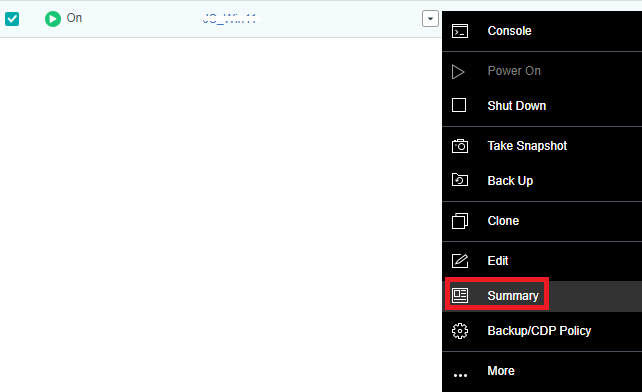

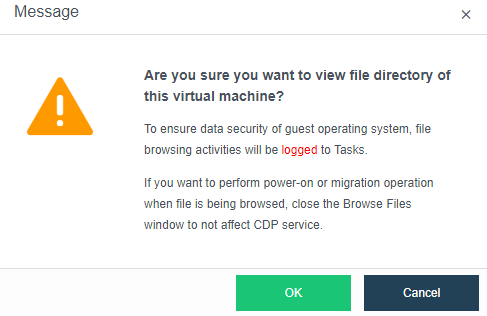

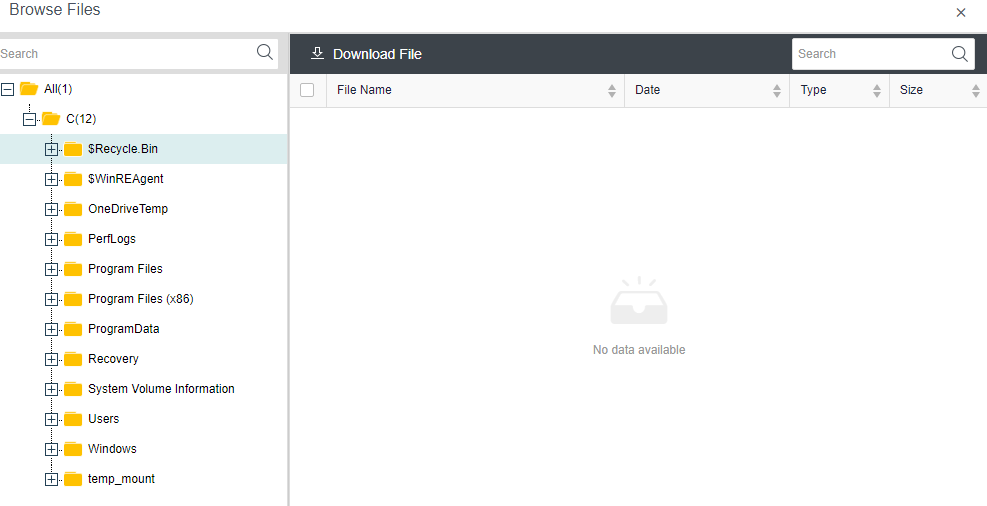

- CDP is continuous data protection. It can record every IO of the business system to the disk in the virtual machine, restore the virtual machine to the state at any time, or directly view and download the file at a particular time. It is of great value for file deletion, viruses, system crashes, data damage, and other faults, so the RPO is close to 0.

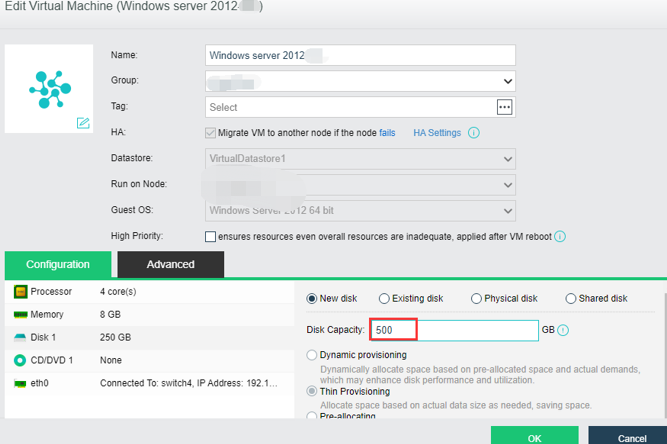

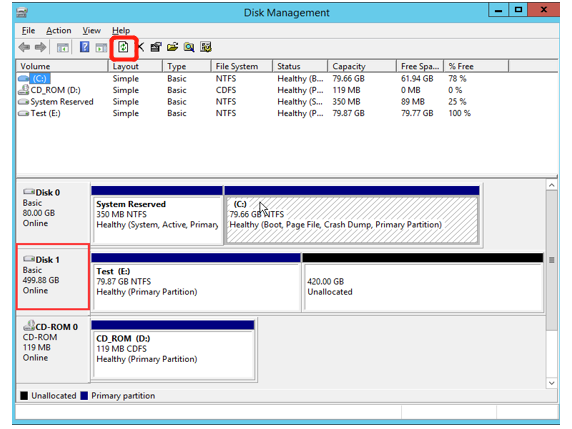

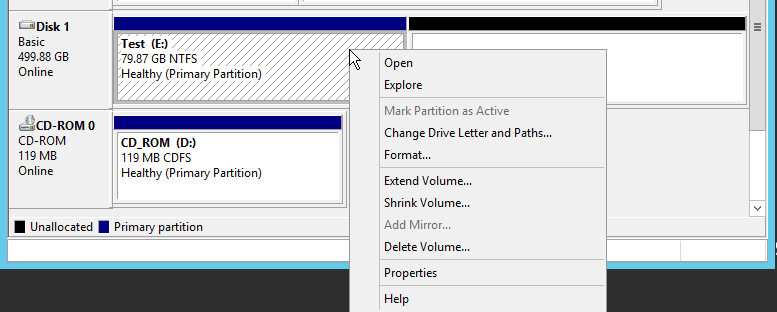

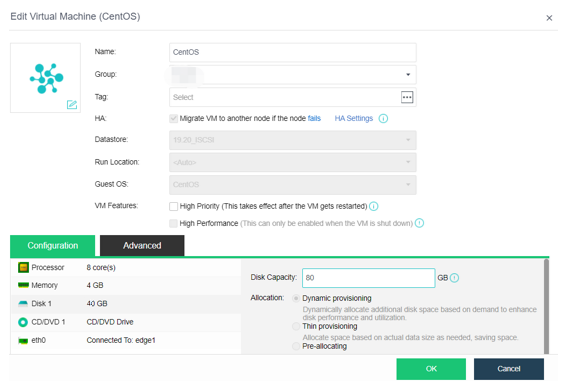

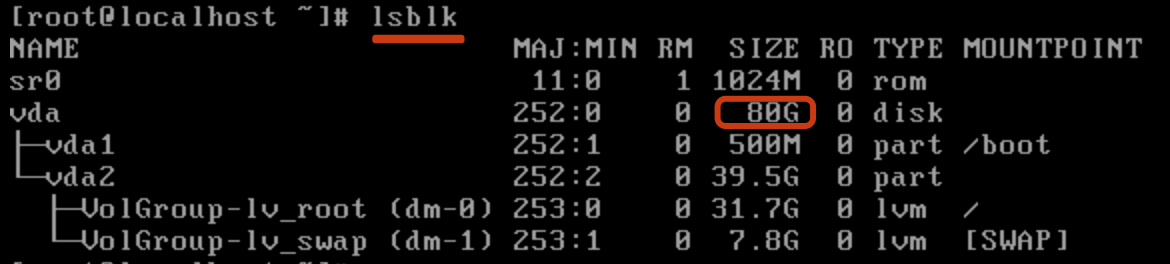

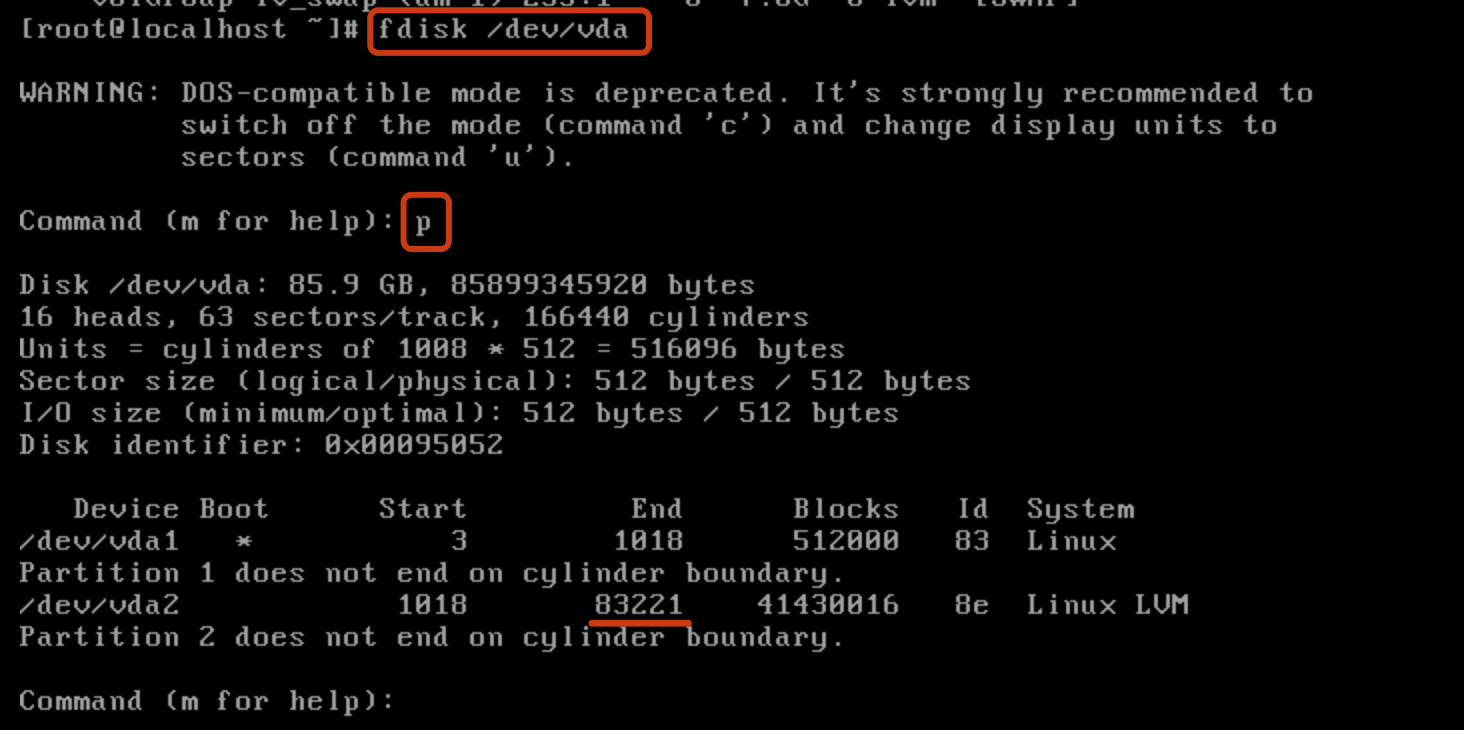

- Power On Expansion

It supports the expansion of existing disks when the virtual machine is powered on to avoid downtime caused by the expansion of virtual machine disks.

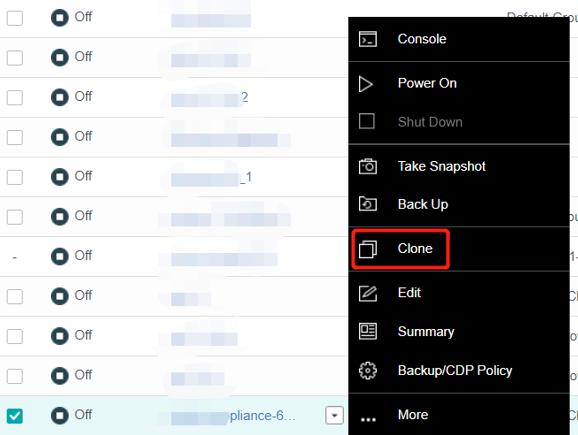

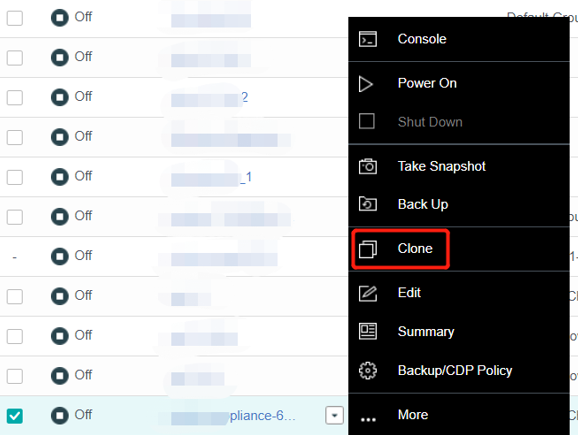

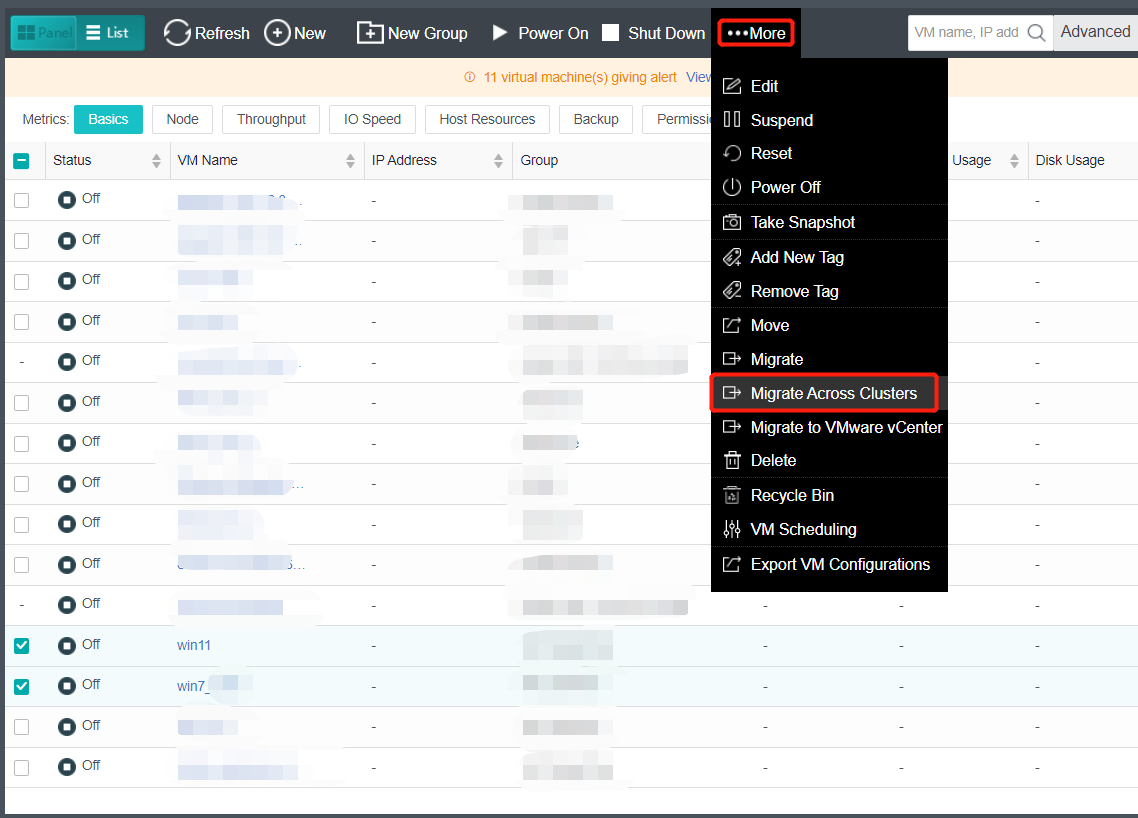

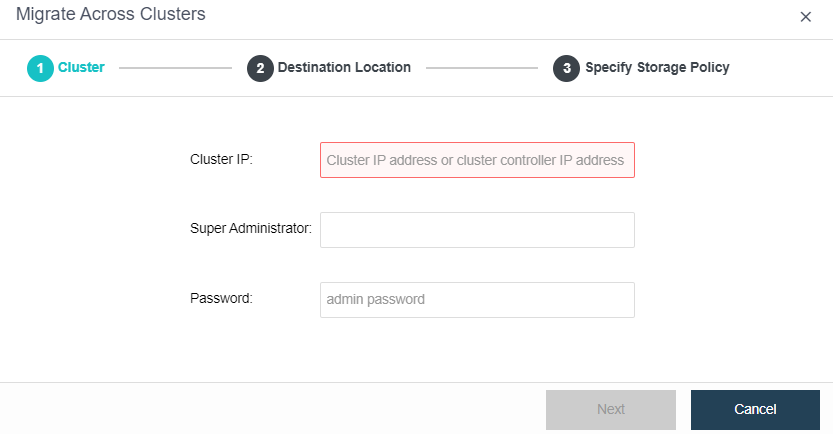

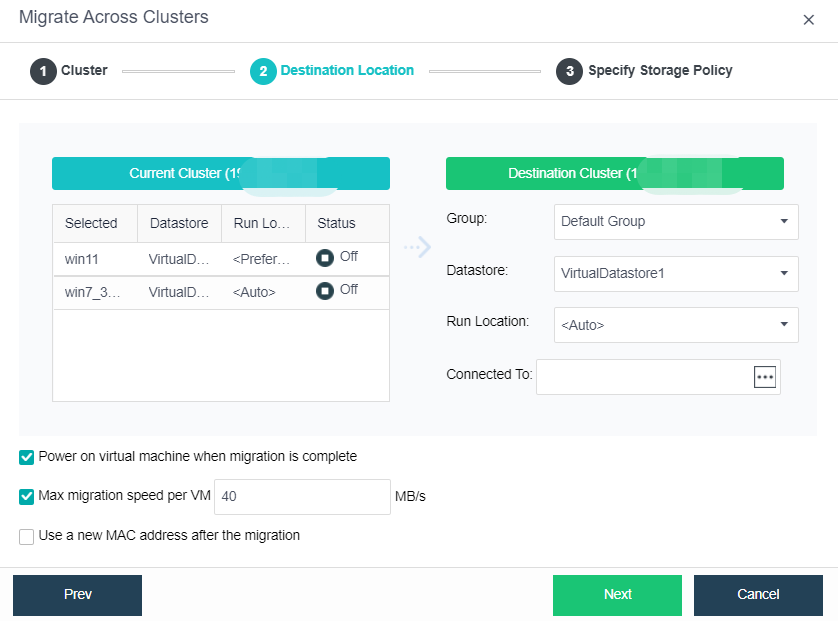

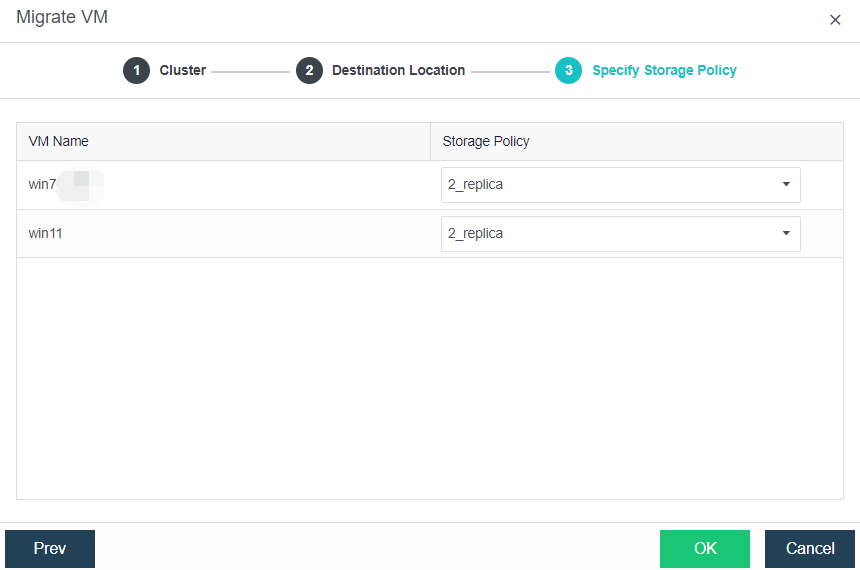

- Bulk Clone Migration

- Support batch cloning of virtual machines.

- Support batch migration within the cluster.

- Support batch migration across clusters.

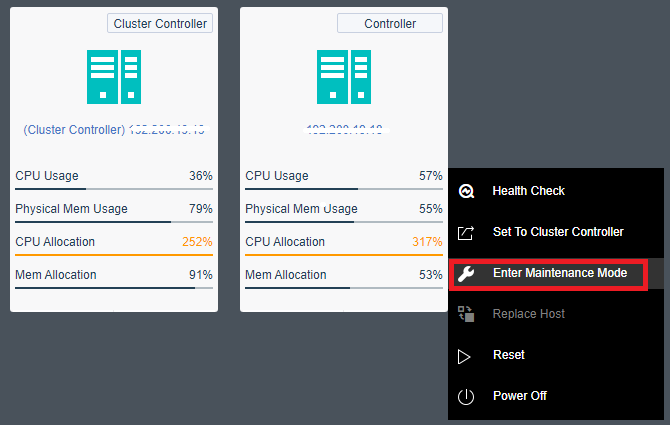

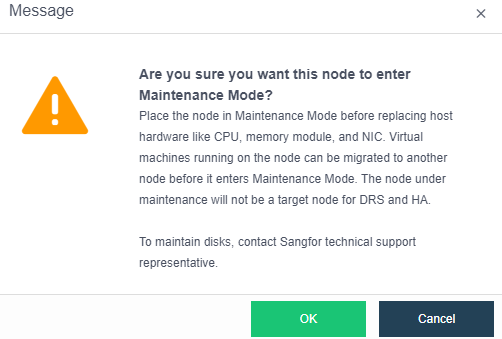

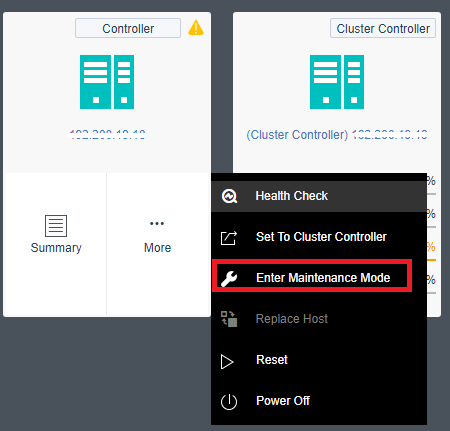

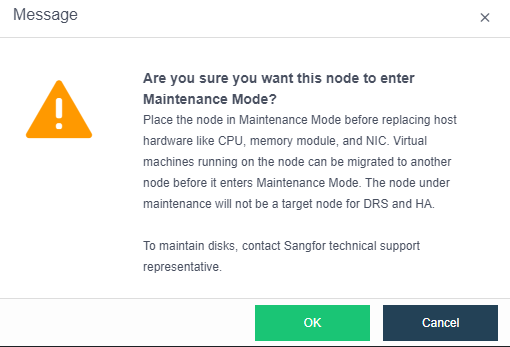

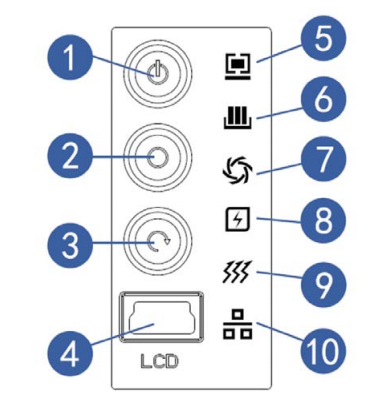

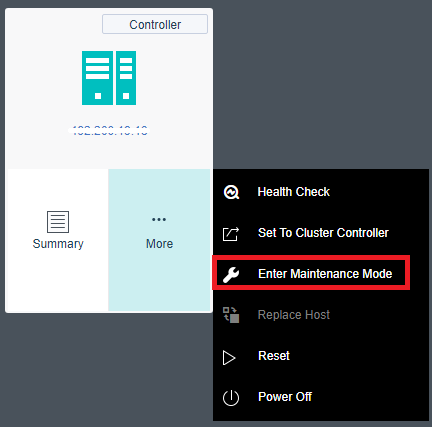

- Single Node Maintenance

The maintenance mode can be set for a single node. For a node that enters the maintenance mode, tasks will not be scheduled to the node for execution to reduce the impact on business during physical node maintenance.

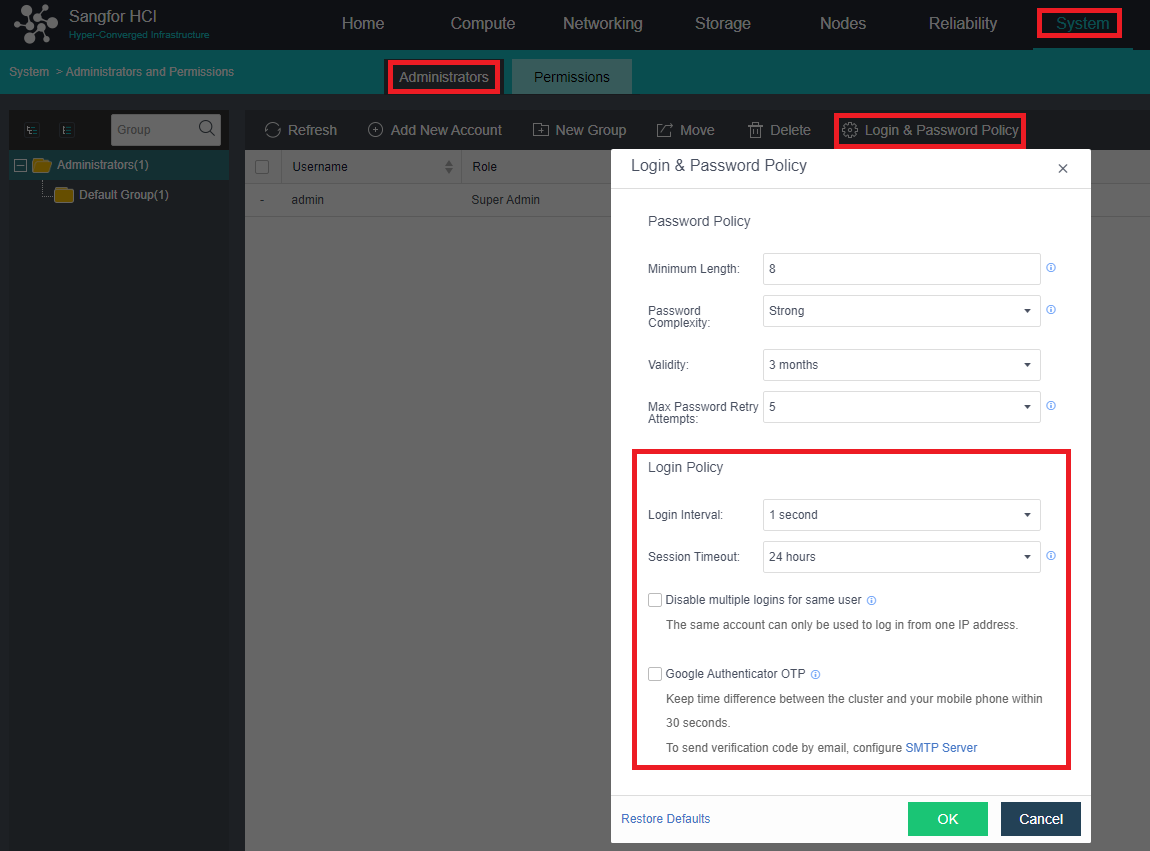

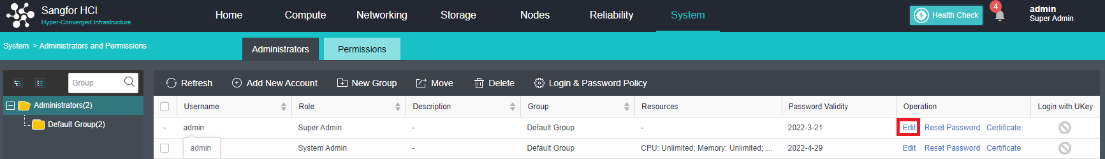

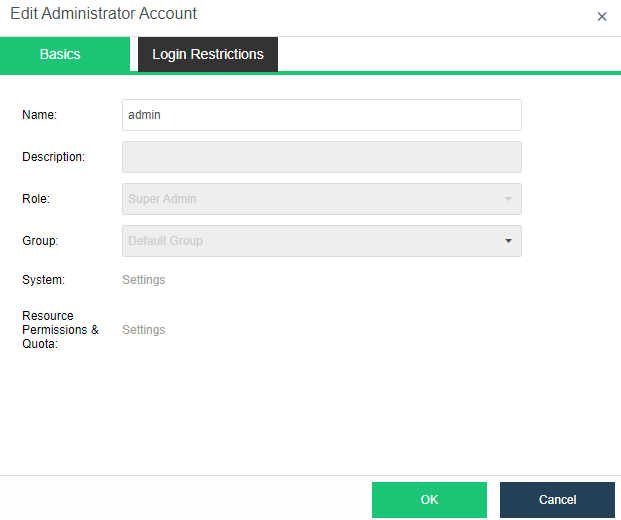

- Security Policy For Classified Users

- Syslog log reporting is supported.

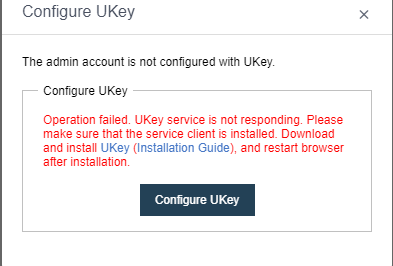

- Ukey dual-factor login is supported.

- Support IP + Mac and terminal binding policies.

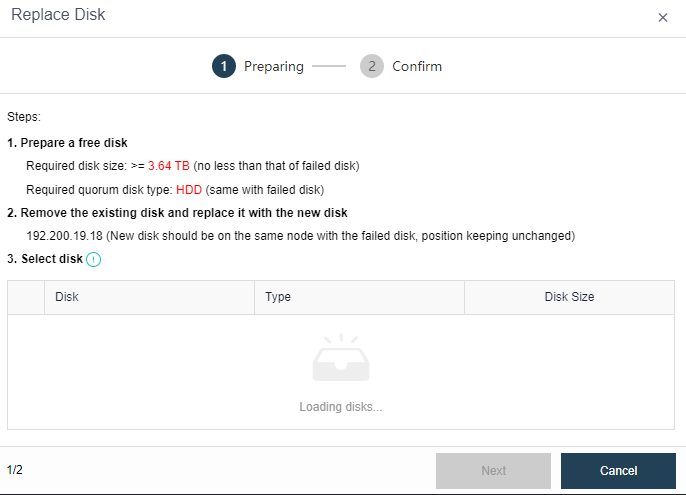

- System Disk Replacement

-

Support health inspection and lifetime prediction of existing system disks.

-

Support scheduled backup of system configuration and user configuration of the system disk.

-

Support the safe replacement of the node’s system disk.

aSV key Features

- Hypervisor Implementation of aSV

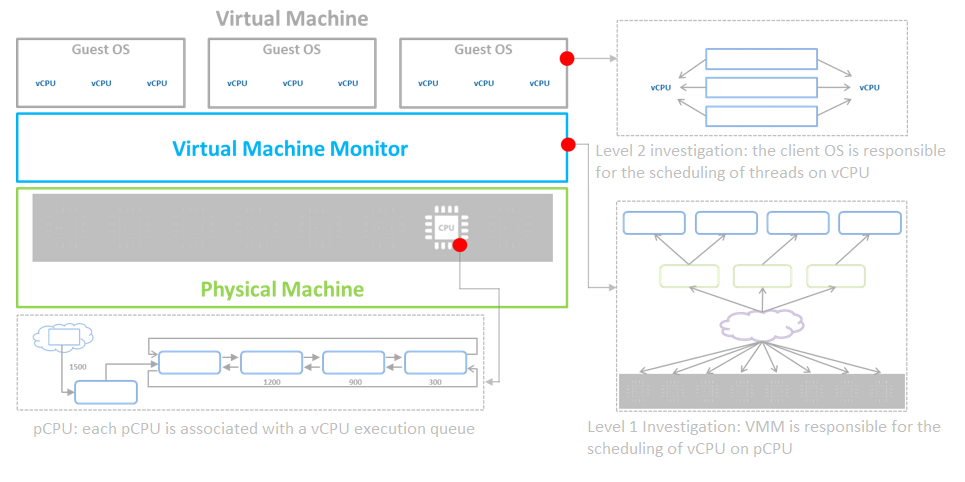

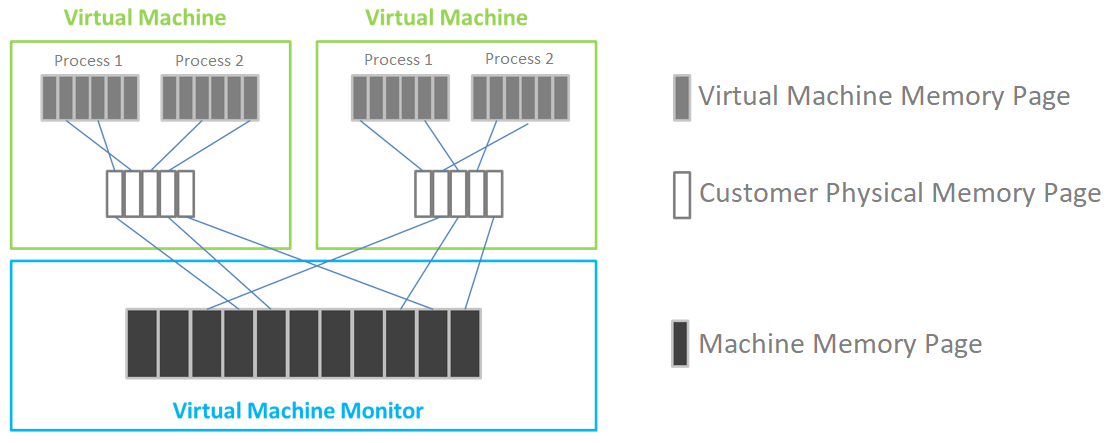

The aSV platform virtualizes CPU, memory, and IO devices through VMM (Virtual Machine Monitor).

Based on hardware-assisted virtualization technology, aSV uses VMM to realize the virtualization of x86 architecture and divides a single physical CPU into multiple vCPU. The virtual machine only sees the vCPU presented by VMM and does not directly perceive the physical CPU. The user operating system is responsible for the level 2 schedule, the scheduling of threads or processes on vCPU. The virtual machine monitor is responsible for the level 1 schedule and the scheduling of vCPU on the physical processing unit.

aSV completes memory virtualization based on page table virtualization technology. VMM is responsible for page memory management, maintaining the mapping relationship from virtual address to machine address, virtualizing physical memory into the virtual machine, and using virtual memory.

aSV uses VMM to intercept the access request of Guest OS to I/O devices and then simulates the real hardware through software to realize I/O virtualization.

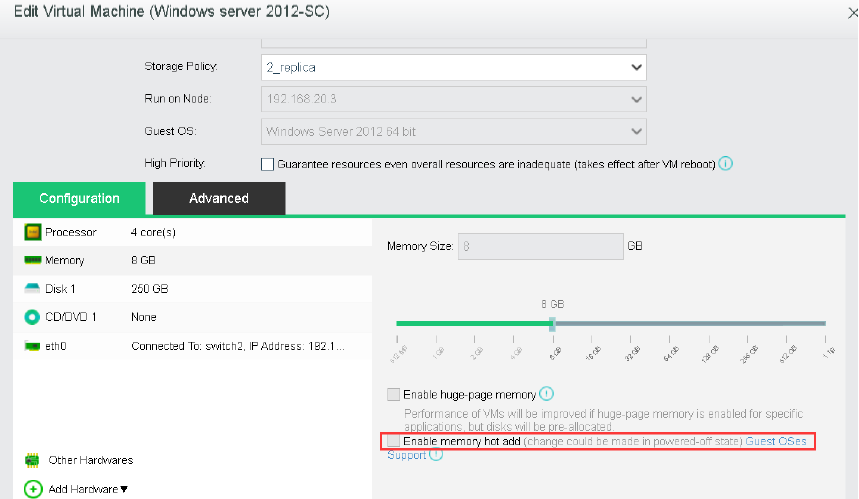

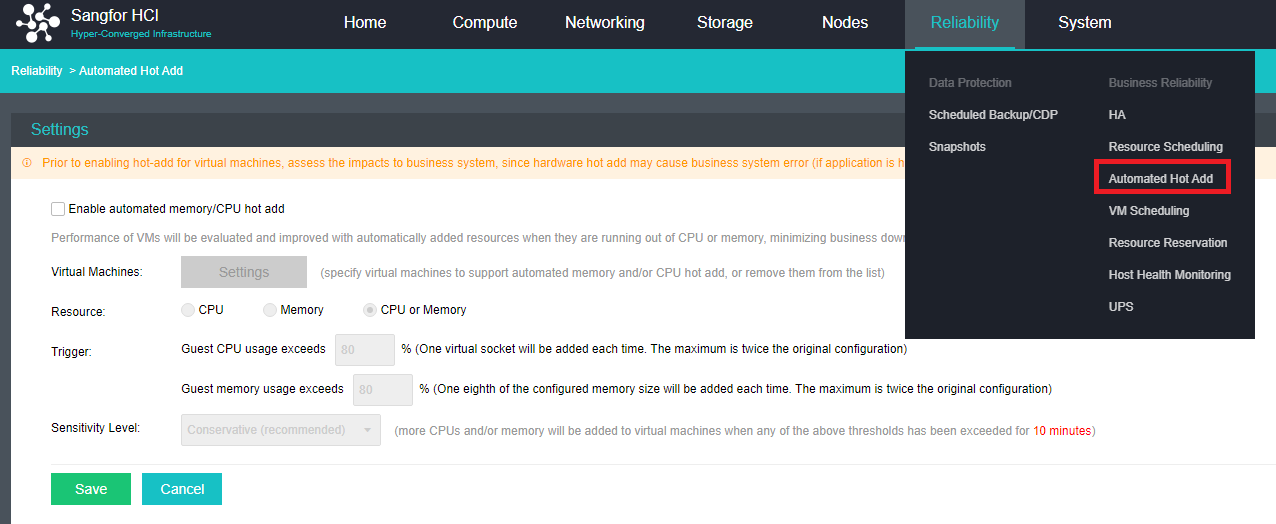

- Virtual Machine Resource Hot Add

aSV supports hot addition to the CPU and memory of the virtual machine and hot plug to the interface and disk of the virtual machine.

- Dynamic Thermal Addition

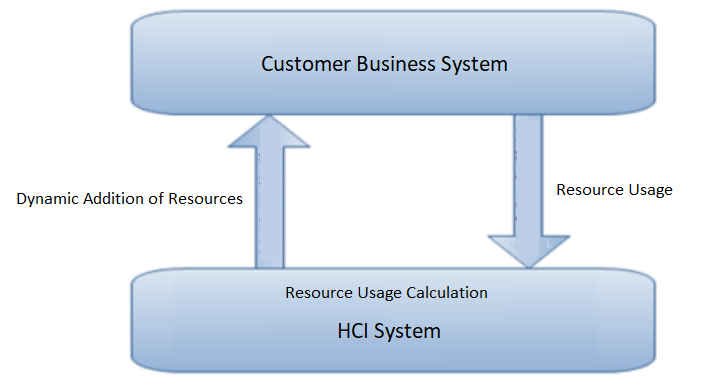

The automation strategy provided by the automated hot addition function can quickly respond to the growth and change of the business state. Dynamically expand the virtual machine’s CPU and memory resources, ensuring business continuity and solving the agile operation and maintenance problems caused by user business growth.

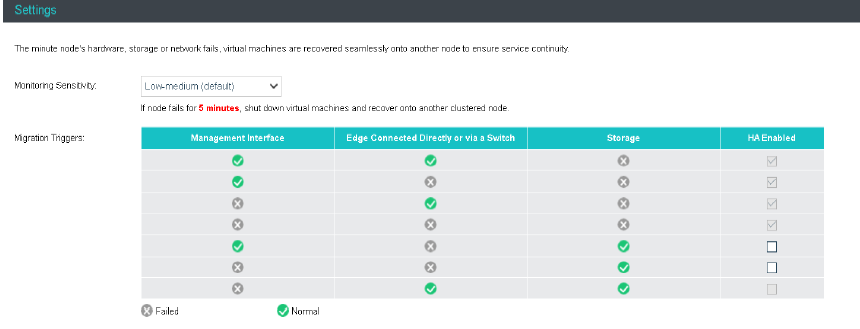

- Virtual Machine High Availability (HA)

Virtual machine high availability (HA) is divided into node failure HA and virtual machine failure HA.

- Node failure HA means that when the node where the virtual machine is located has an accident (node power outage, interface drop, etc.), a node with sufficient resources will be selected to restart the virtual machine, which significantly reduces the service interruption time.

- Virtual machine failure HA refers to shutting down the virtual machine and restarting it on the original physical node when the virtual machine has a blue screen, black screen, and other failures.

- Virtual Machine Rapid Recovery

The rapid recovery function can immediately create and start the virtual machine when it needs to be restored. The process can be completed in 3 minutes, and the performance will climb to the normal within 15 minutes, which can quickly help users restore business operation and ensure business continuity. RTO <= 15 minutes.

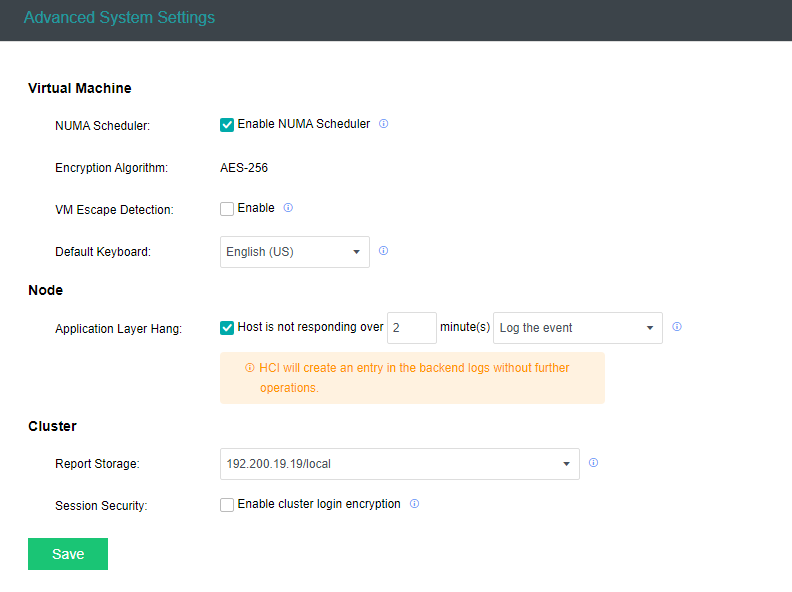

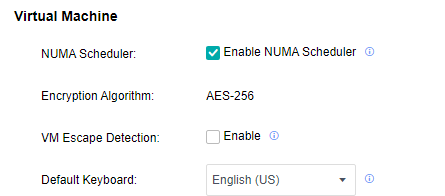

- Intelligent NUMA Scheduling

By modeling the core business database and using an AI algorithm, the NUMA binding rule base of the core database in the general scenario is generated to improve the business performance.

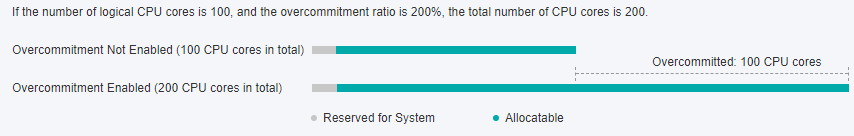

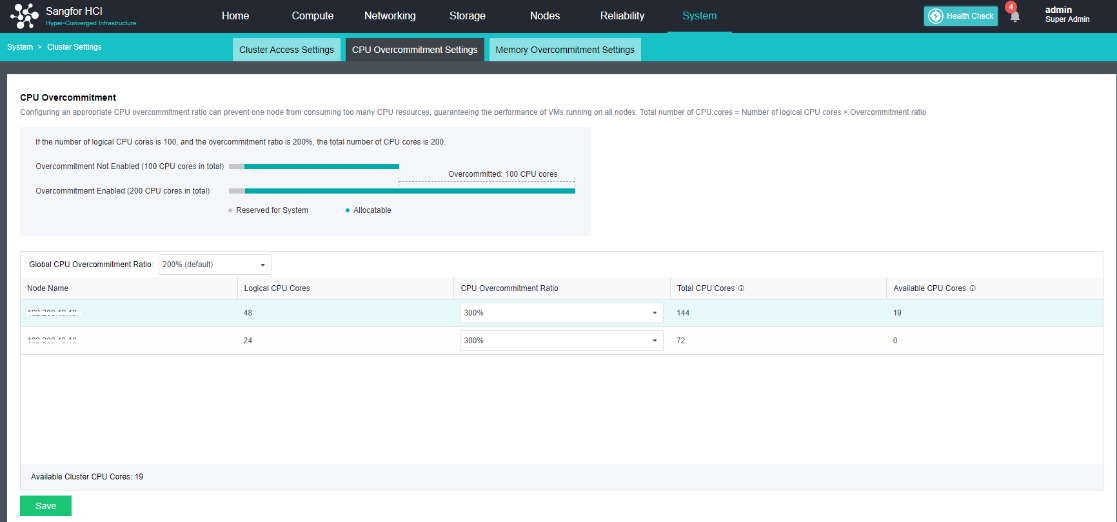

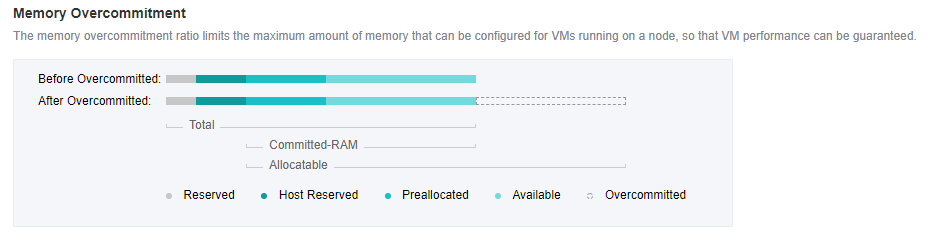

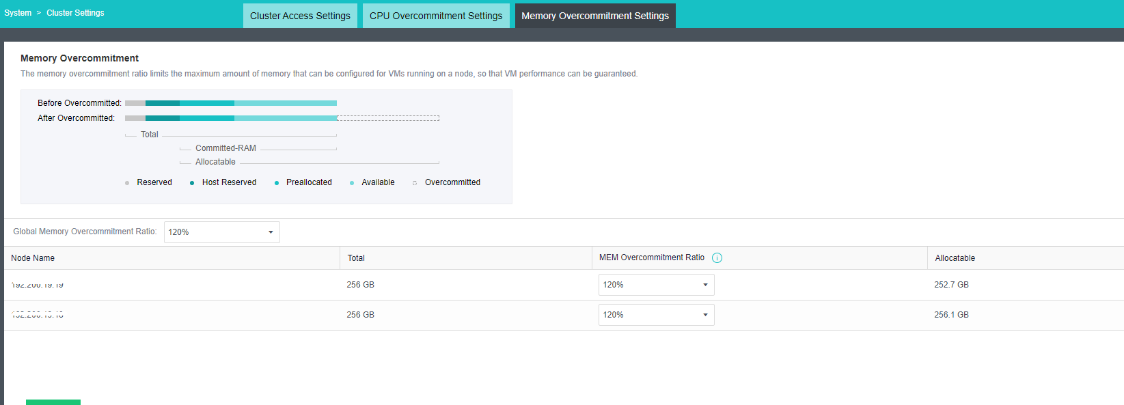

- Memory Isolation, Memory Over Allocation, and CPU Over Allocation Limits

By isolating the memory area of each module, the system will not be suspended or shut down due to memory preemption to improve the platform’s reliability. At the same time, it supports the over allocation of virtual machine CPU and memory, and the actual usage can exceed the physical limit. Give priority to ensuring the memory usage of important virtual machines and improving the CPU and memory usage.

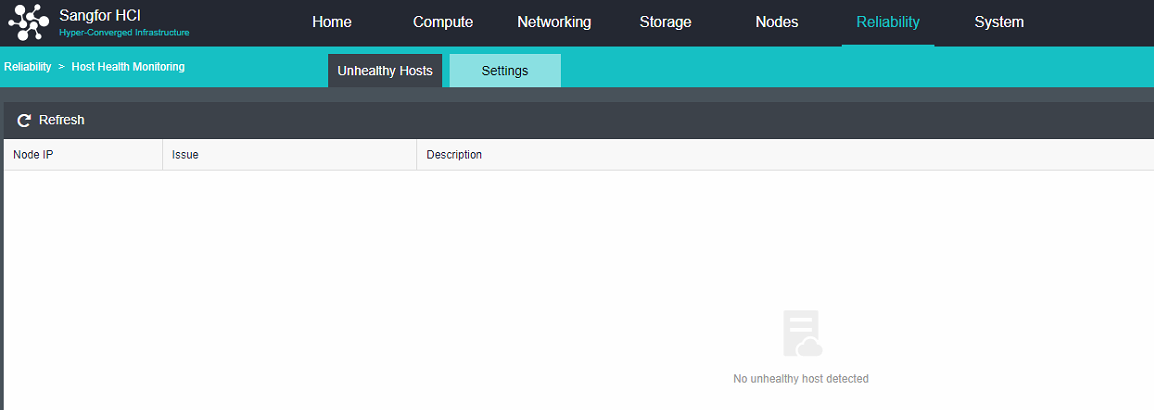

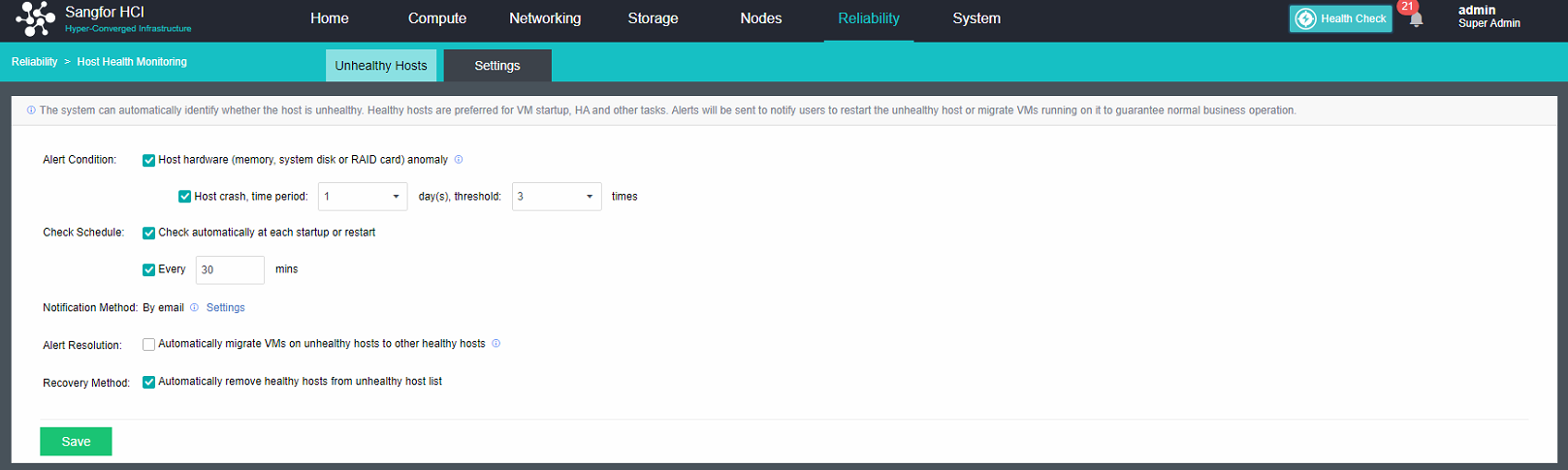

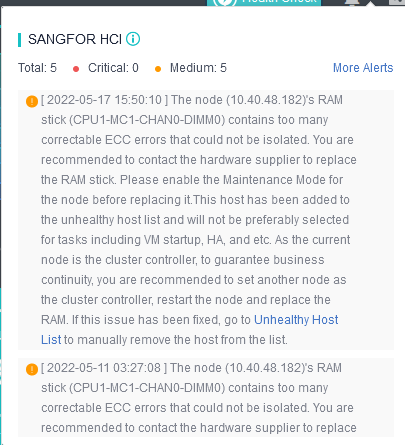

- Sub health node monitoring

Monitor whether the system disk and memory of the node in the cluster are in the sub-health state, give treatment opinions to the sub-health node and reduce the priority of the sub-health node in the process of virtual machine startup (or HA) node selection. Currently, it supports processing five sub-health states: memory CE and UE errors on the node, read-only system disk, insufficient SSD lifetime, and bad channel on HDD.

aSAN key Features

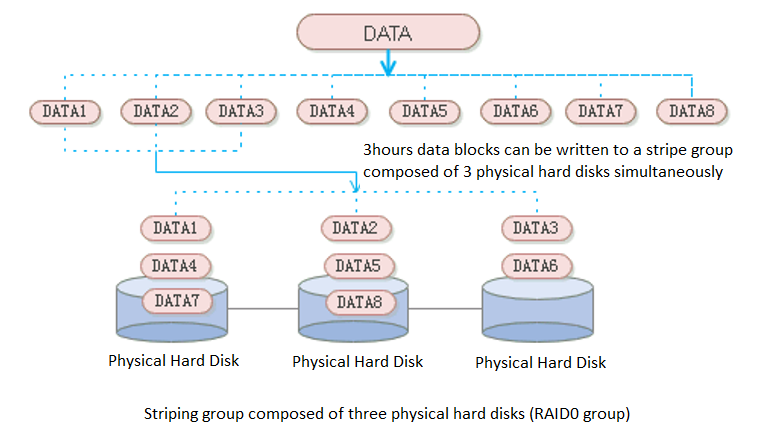

- Data Striping

aSAN uses striping technology to maximize I/O concurrency. Striping technology is to cut a piece of continuous data into many small data blocks and then stores them concurrently on different physical hard disks to achieve the maximum I/O concurrency when writing or reading data to obtain excellent performance.

As shown in the figure below, striped data can be written to three disks concurrently, while non-striped data can only be written to one disk at a time. Therefore, the write performance of striped data is three times that of non-striped data.

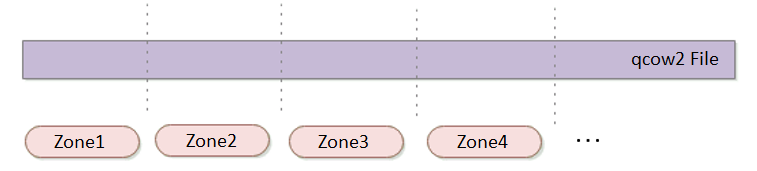

- Zone

aSAN divides a single qcow2 file into several smaller pieces according to a fixed unit size through data fragmentation technology. Make data more evenly distributed in virtual datastores and data management more flexible.

- Multi Replica Mechanism: Supports 2 Replica and 3 Replica

It also supports 2 replica and 3 replica policies. For virtual machines carrying important business systems, users can choose to configure three replica policies to further improve the reliability of data. Support the conversion between 2 replica virtual machines and three replica virtual machines to achieve the best balance between high reliability and high performance.

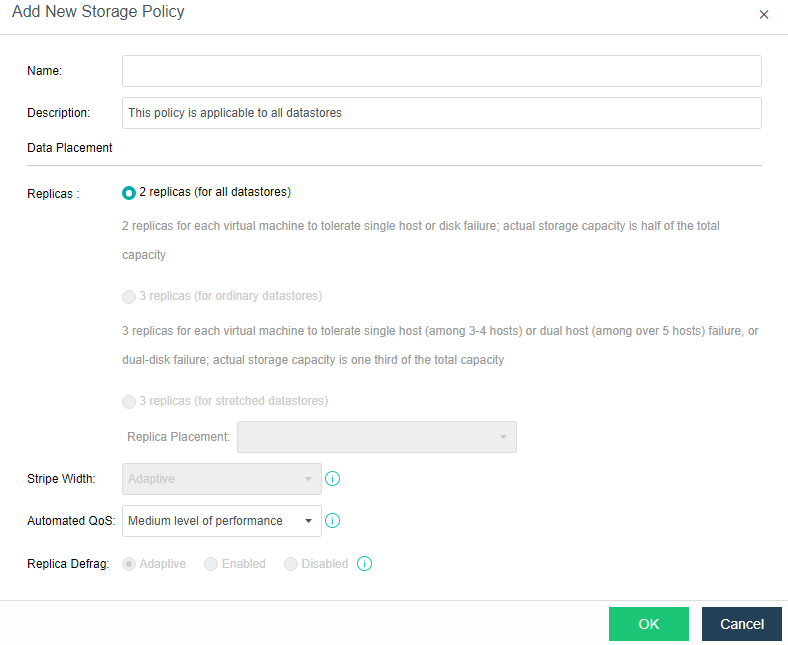

Common storage strategies are built in the platform. Users can select corresponding storage policies when creating virtual machines according to the characteristics of business systems. At the same time, users can configure more detailed storage policies with the virtual machines as granularity according to the characteristics of the business system, which makes the configuration more flexible. Contains the following four attributes:

-

Replicas: Reliability index, two or three replicas can be selected according to the importance of the business.

-

Automated QoS: Performance indicators, including high performance, default performance, and low performance.

-

Strips WIdth: Performance index, the system will automatically set the number of strips or customize them according to the current storage state of the hard disk.

-

Replica Defrag: Performance indicator to ensure IO localization and improve read performance. An aggregate replica is enabled by default.

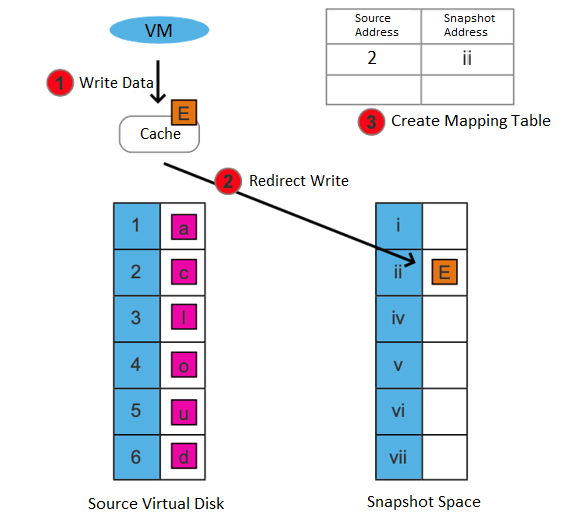

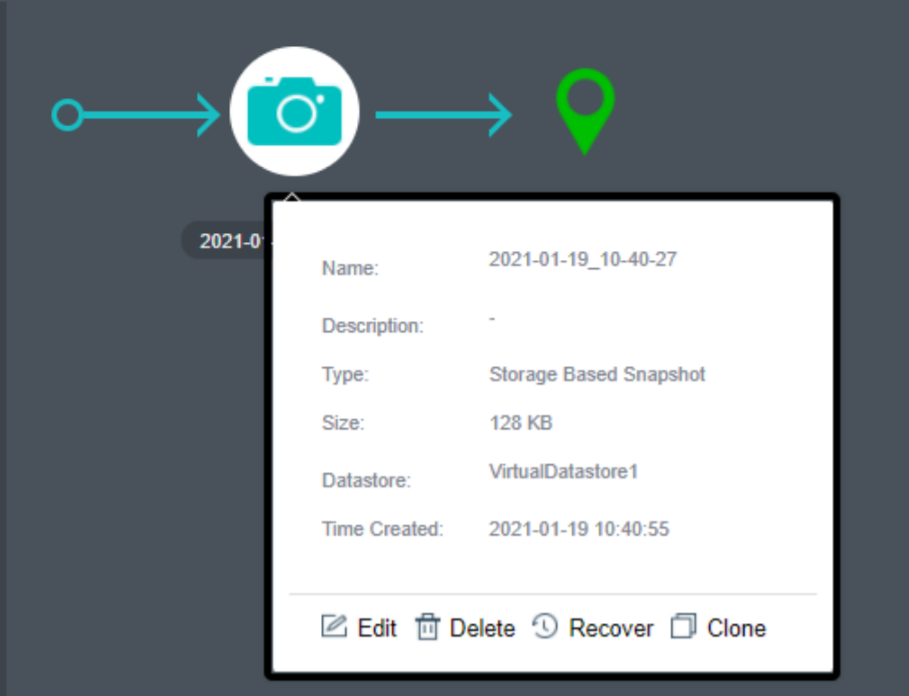

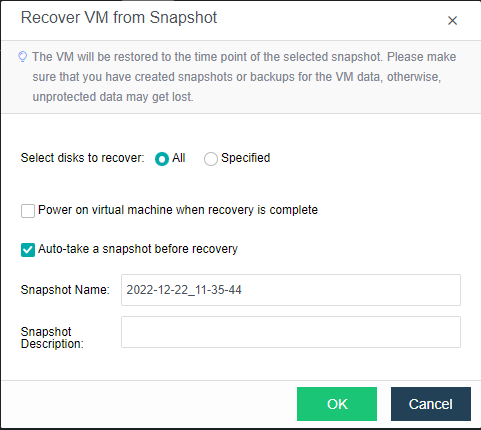

- Storage Snapshot

Supports the storage snapshot function. When creating a virtual machine snapshot, the system will set the source virtual disk as read-only and generate a corresponding number of new disk files (i.e., snapshot space).

After the snapshot is created, all new data and modifications to the source data of the virtual machine will be written to the newly generated snapshot space. The corresponding relationship between the logical address of the source virtual disk and the snapshot space will be written in the mapping table.

After the snapshot is created, there are two situations for the virtual machine to read data:

-

If the data read is the existing data before the snapshot is created and has not been modified after it is created, it is read from the source virtual disk.

-

If the read data is newly added/modified after the snapshot is created, it is read from the snapshot space.

This snapshot method has the following advantages:

- Redirect on write (ROW) is used to realize the snapshot, and the virtual machine’s performance will not be affected after the snapshot.

- After deleting a snapshot, you can free up storage space (initiate the snapshot residual file cleanup task).

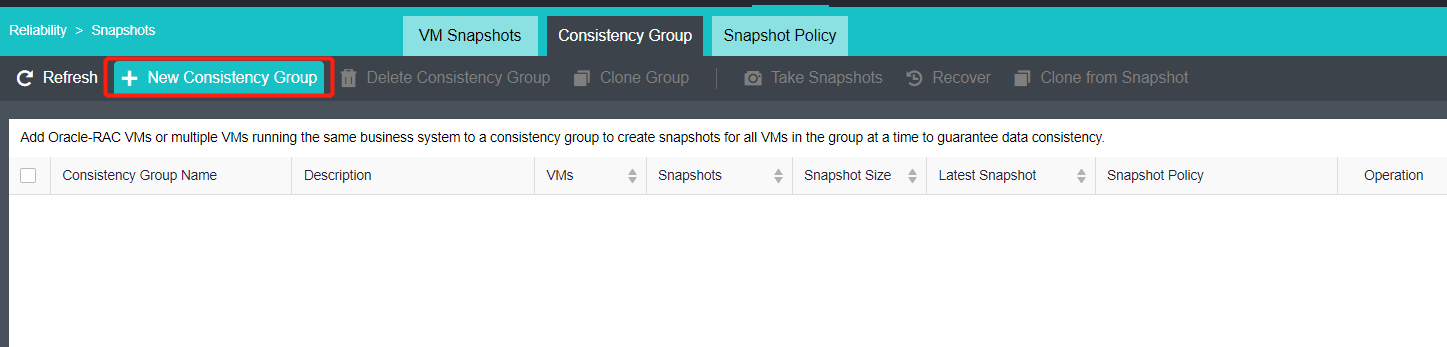

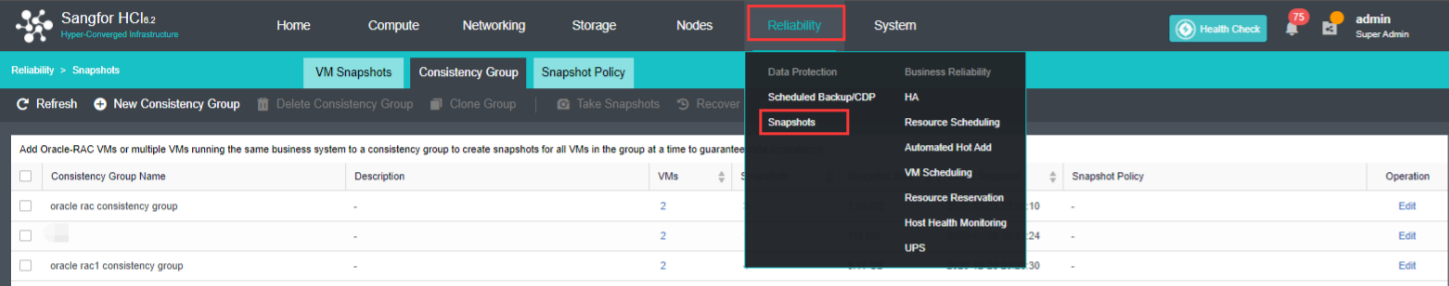

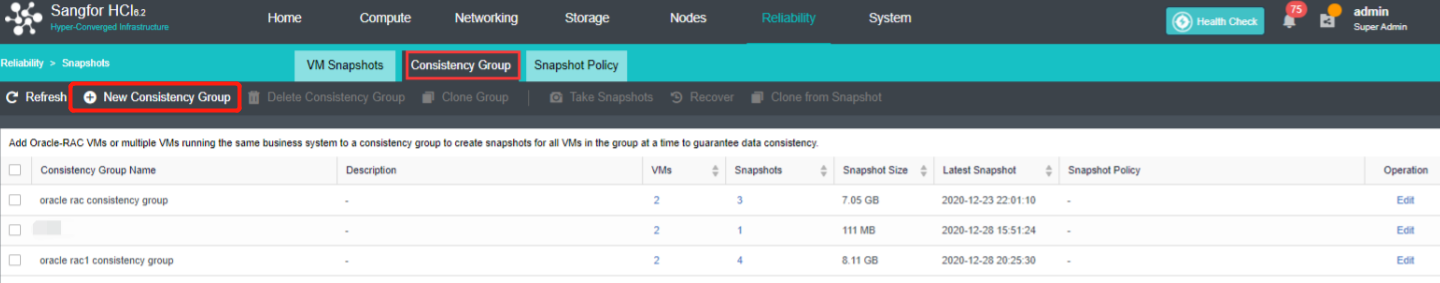

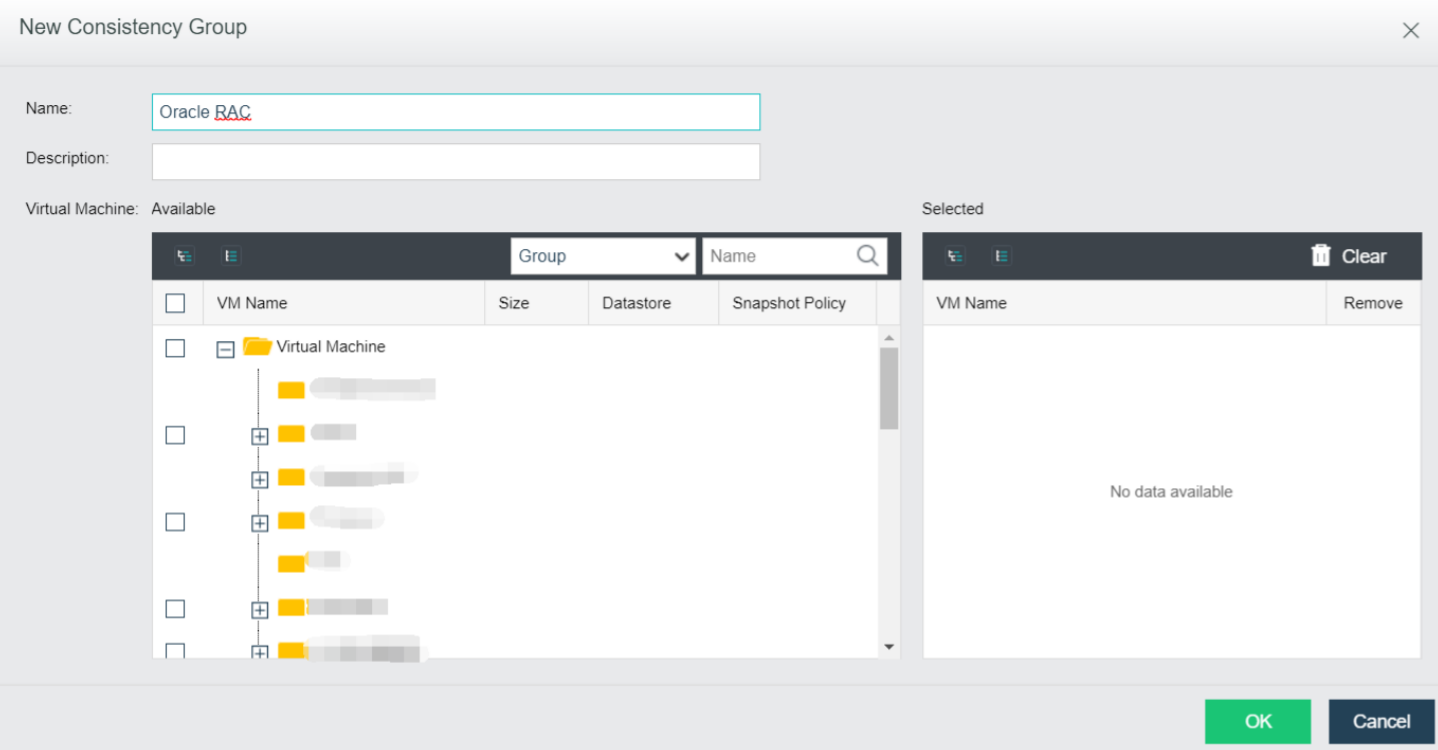

Support consistency group snapshots to ensure the consistency of Oracle RAC and distributed services:

- Support adding virtual machines in Oracle RAC or multiple virtual machines hosting the same business system as consistency groups. Take snapshots for consistency groups to ensure that all virtual machines in the group take snapshots simultaneously.

- When the virtual machine in the consistency group fails, the virtual machine in the group can be rolled back to a consistent state by using the consistency group snapshot.

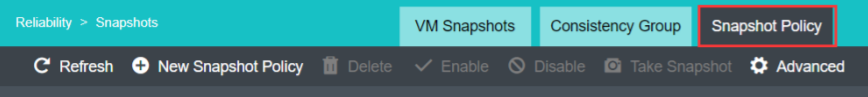

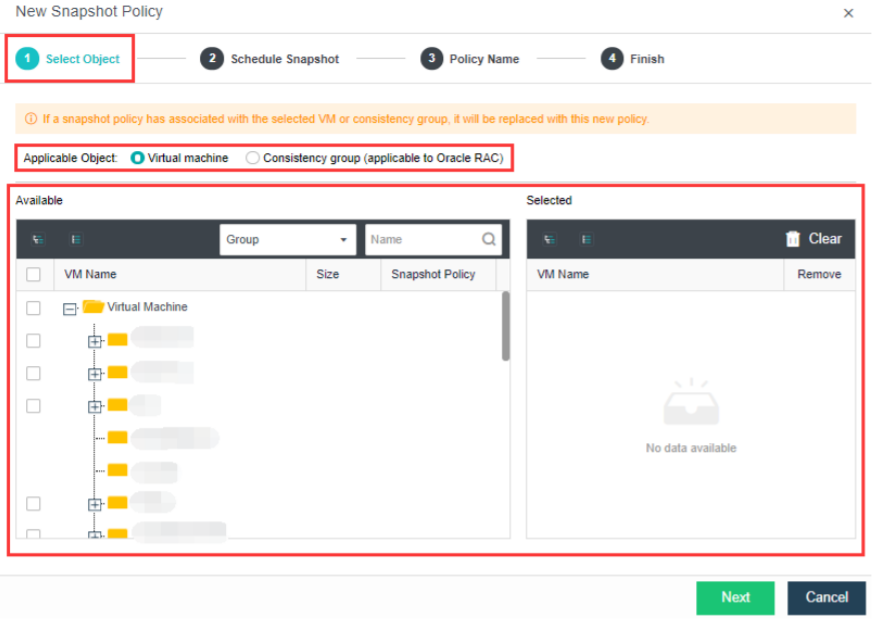

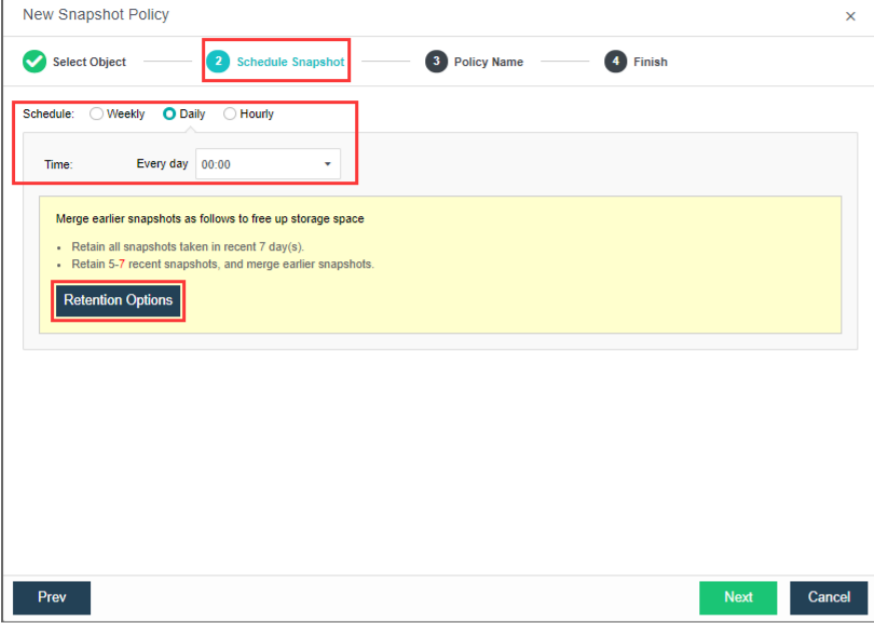

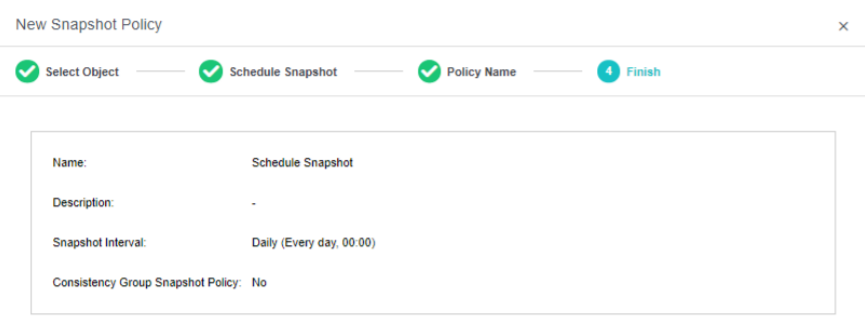

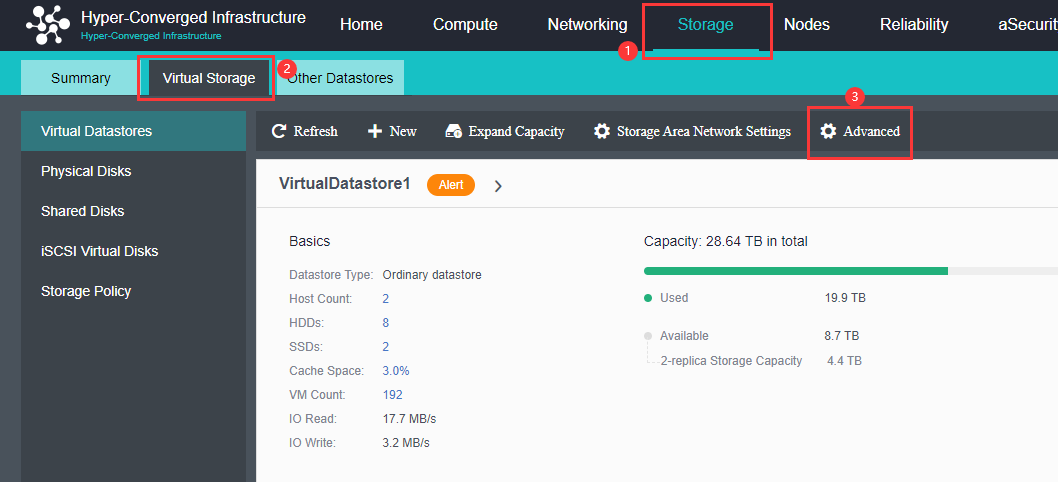

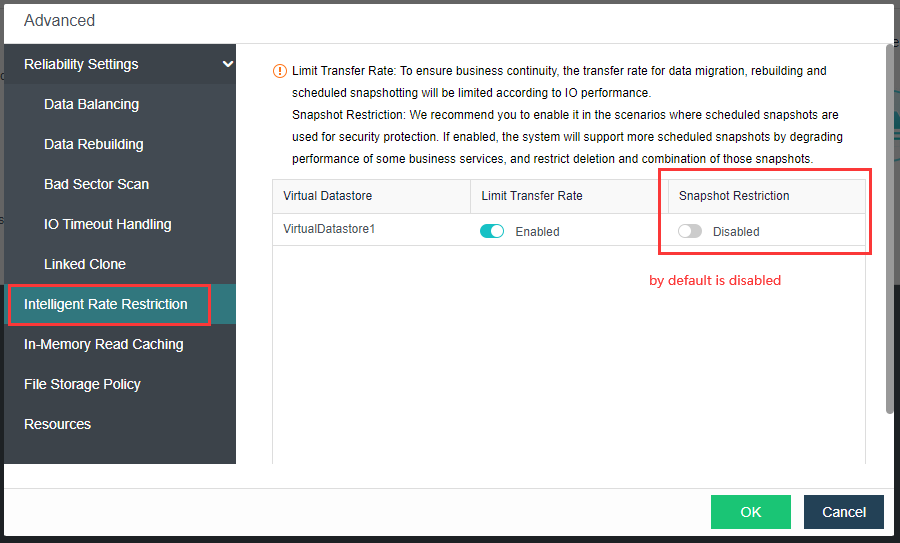

Support scheduled snapshots, simplify operation and maintenance, and make data more reliable:

- Support custom scheduled snapshot policies for individual virtual machines and consistency groups.

- The snapshot frequency can be selected by hour, day, and week. At the same time, to avoid frequent snapshots occupying a lot of storage resources, you can set the snapshot automatic cleaning policy. The system will automatically merge snapshot points and effectively use storage space.

- In the development test scenario, scheduled snapshots can greatly simplify operation and maintenance and improve data reliability.

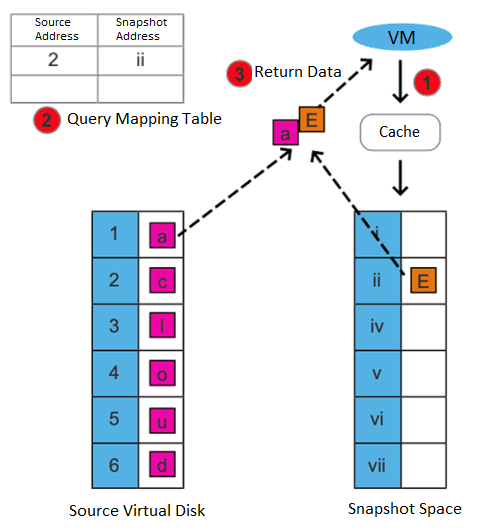

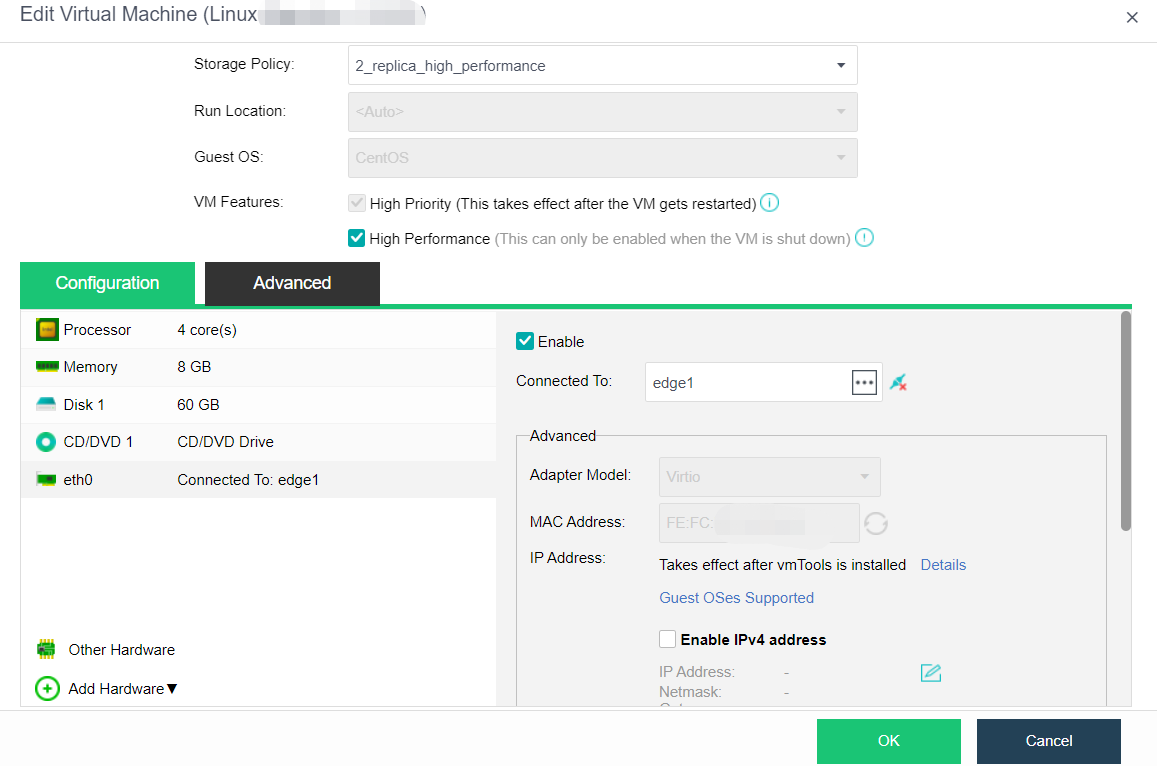

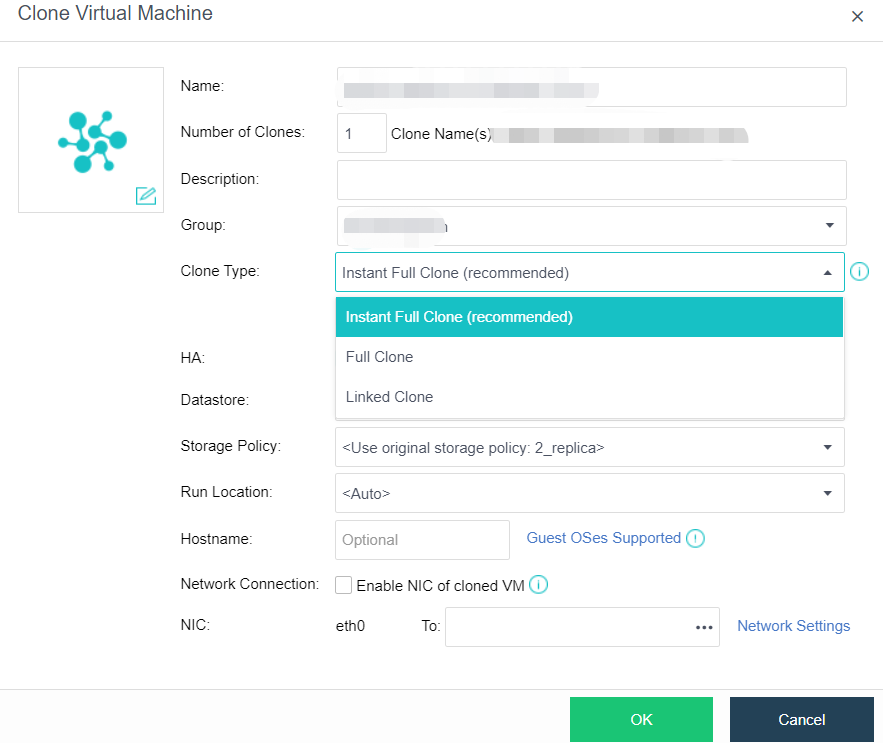

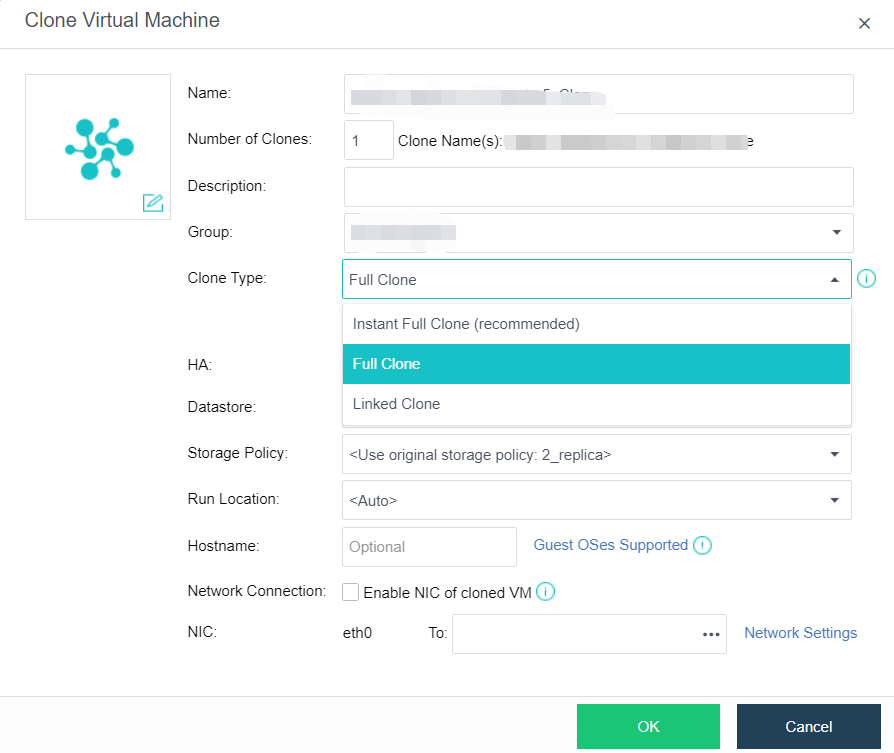

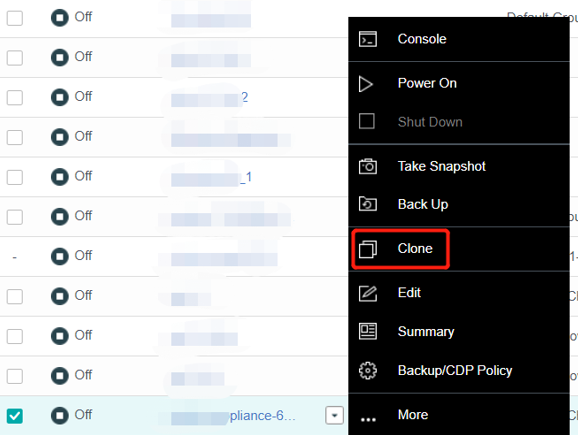

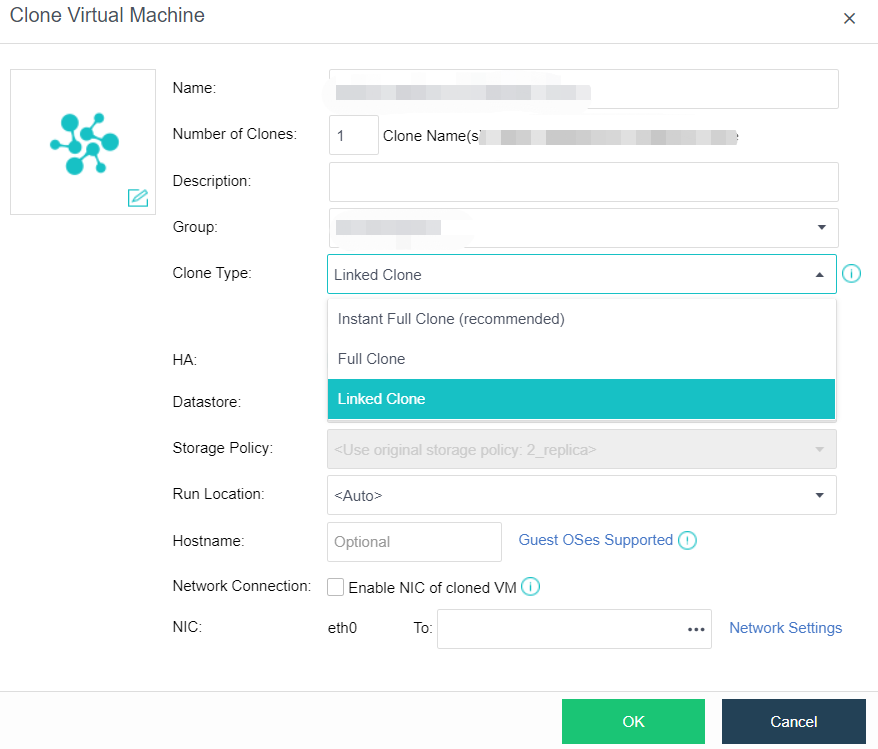

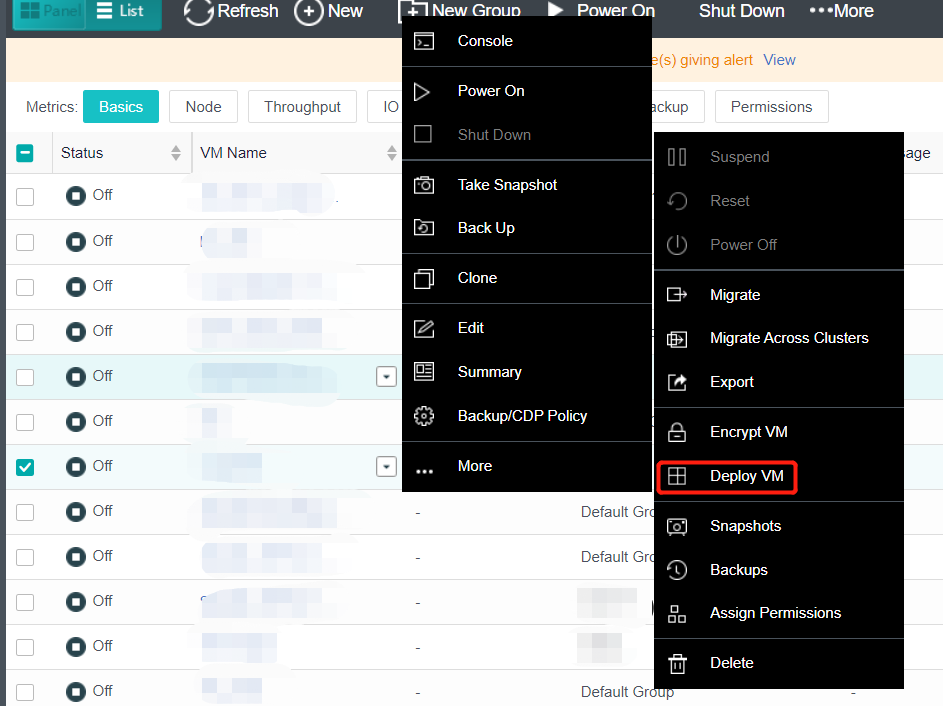

- Virtual Machine Cloning

On the basis of full cloning of virtual machines, it supports both link cloning and fast full cloning of virtual machines.

The virtual machine generated by the linked-clone always depends on the source image to start and run. When the data is added or changed, it will be recorded and redirected to the new image. It has the characteristics of fast virtual machine startup, non-independent data, saving storage space, and performance will still be affected after cloning.

The virtual machine-generated by fast full cloning depends on the source image in the early stage and can be started in seconds. After the virtual machine start, the data will continue to be cloned, and the final data will be complete and independent. It has the characteristics of a fast virtual machine startup, final independent data, and no impact on the performance after cloning.

The virtual machine clone type can be flexibly selected, and the fast full clone type is recommended by default.

- Host Multi Volume

HCI supports the establishment of multiple storage subvolumes by selecting different nodes in a cluster. It meets the user’s requirements for different services’ capacity and performance isolation. Also, it enables the business to switch between different storage subvolumes to realize the different performance requirements of the same business at different stages.

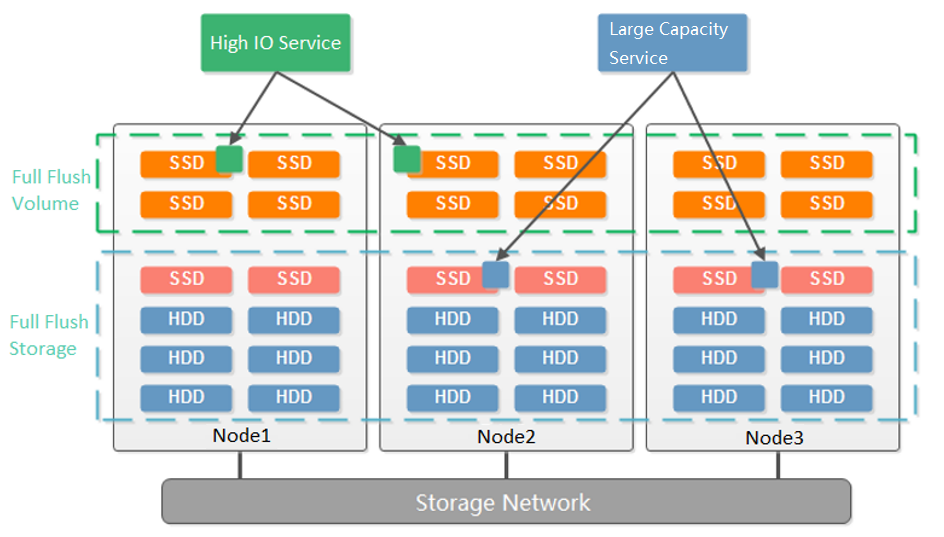

- Disk Multi Volume

Dividing multiple volumes by host can only be supported when at least 6 hosts start. Small clusters with small business nodes cannot be divided into multiple volumes by node. However, multiple volumes can be divided by the hard disk.

As shown in the figure below, starting from three nodes, aSAN supports dividing into two volumes according to the granularity of the hard disk. Compared with dividing virtual datastore according to nodes, it reduces the deployment threshold of multiple volumes. When customers have two types of services: high-performance and high-capacity, they can be divided into two volumes according to the hard disk: one flash volume + one hybrid volume. The flash volume runs high-performance services, and the hybrid volume runs high-capacity services.

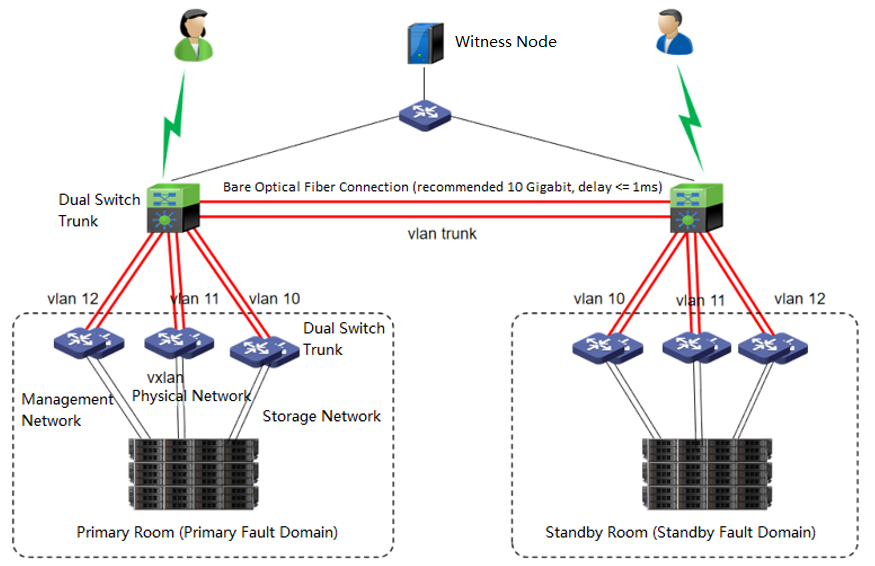

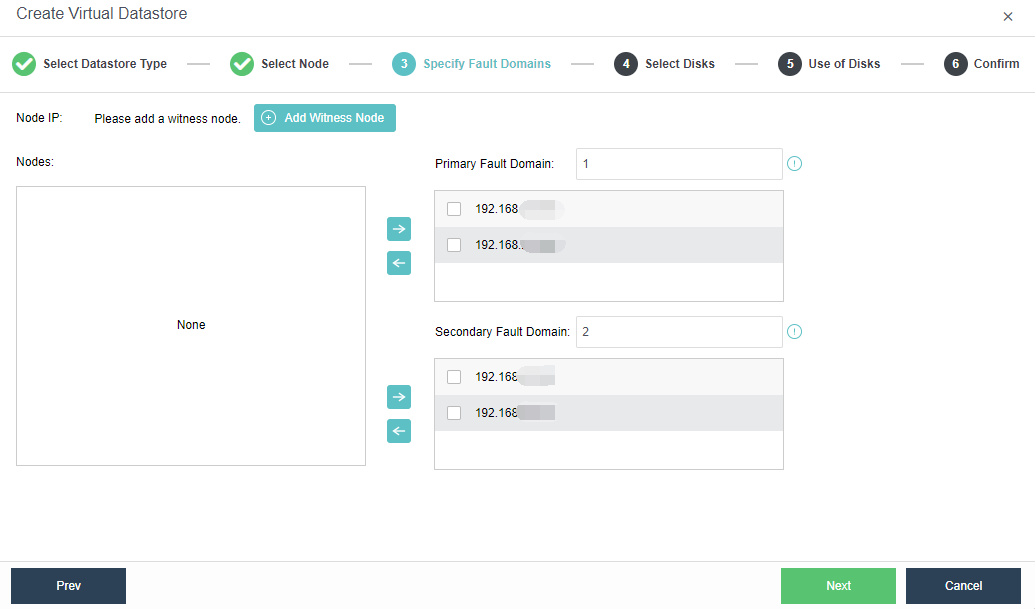

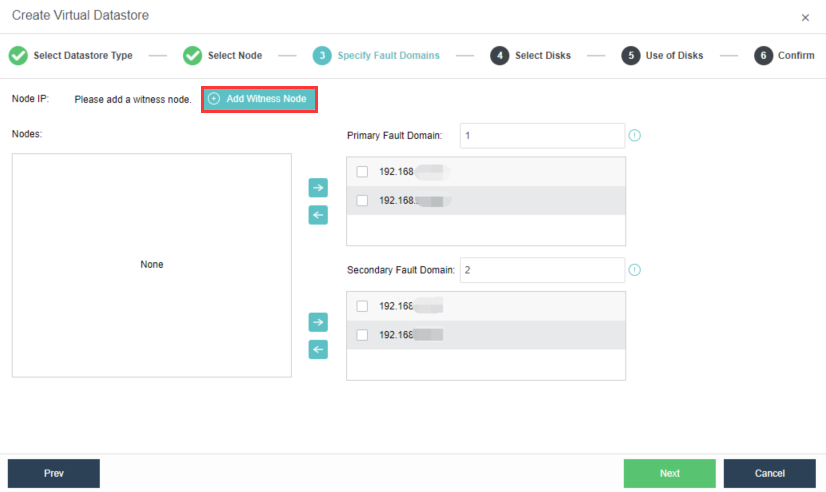

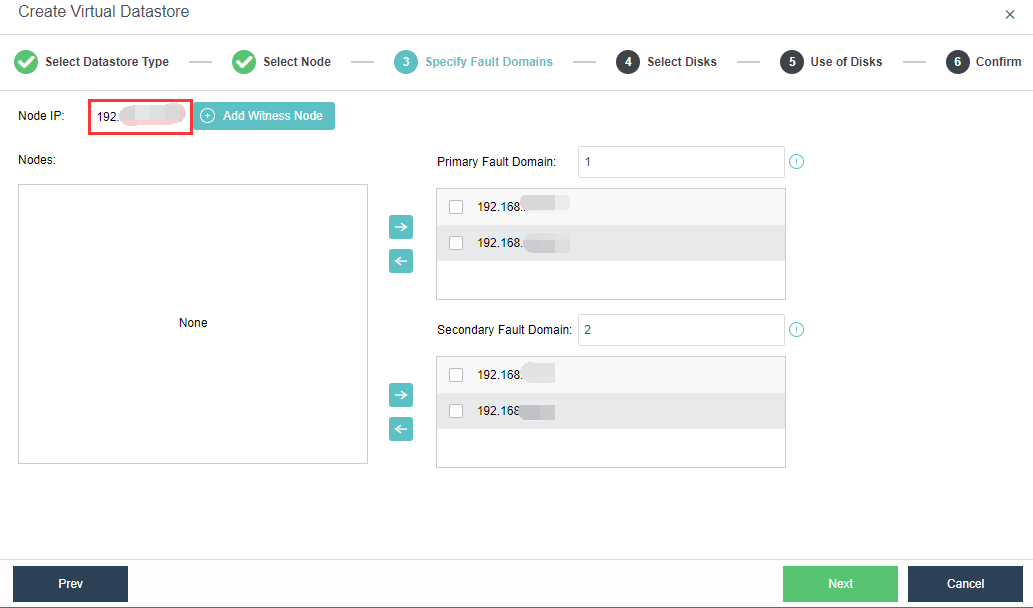

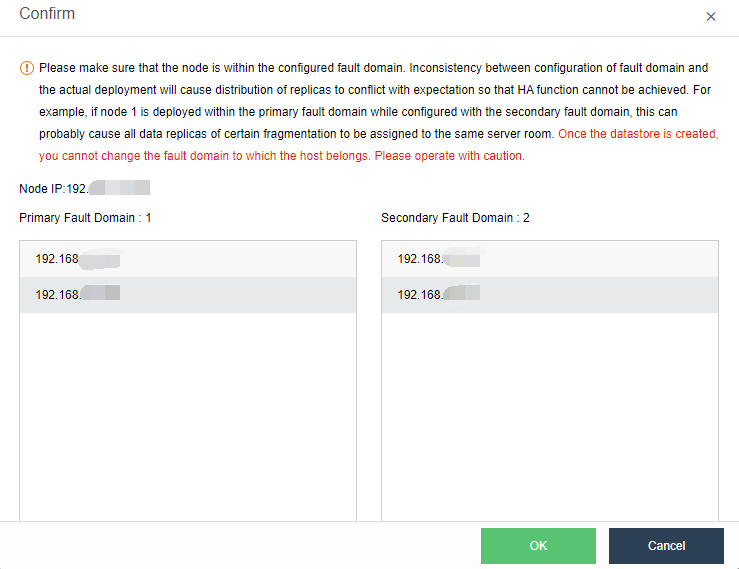

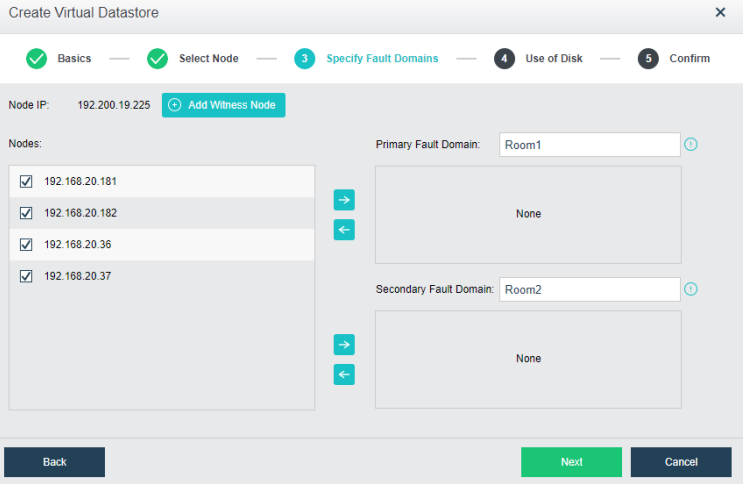

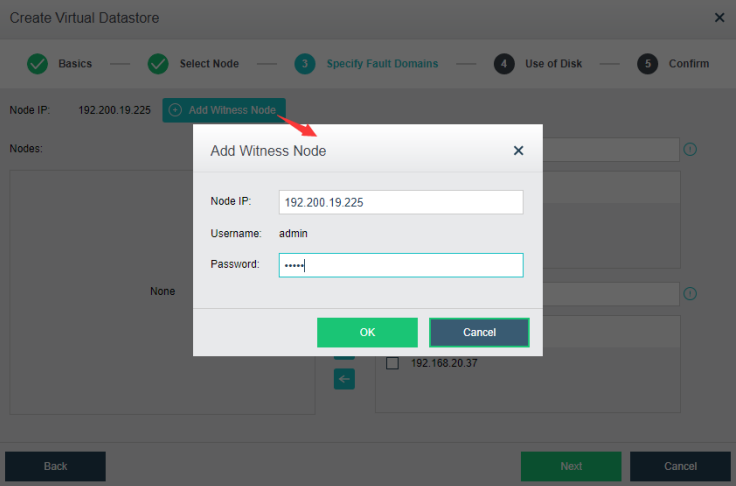

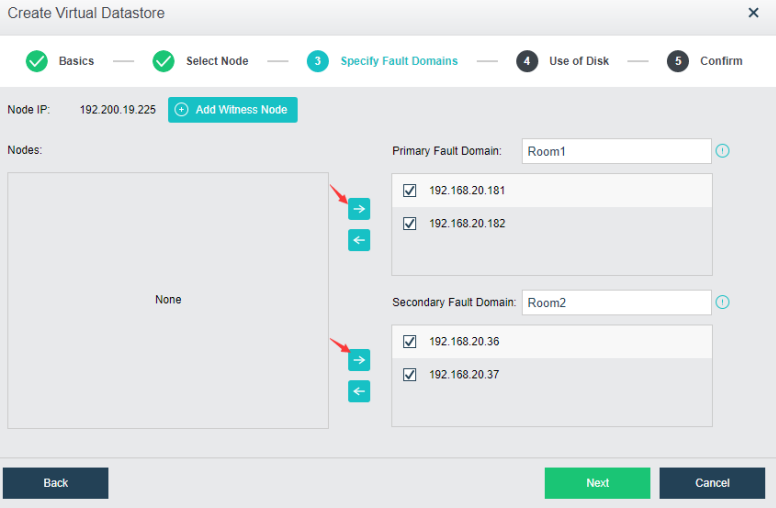

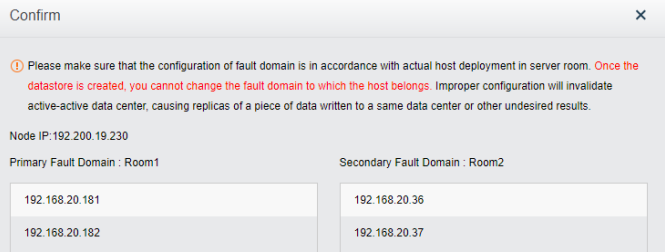

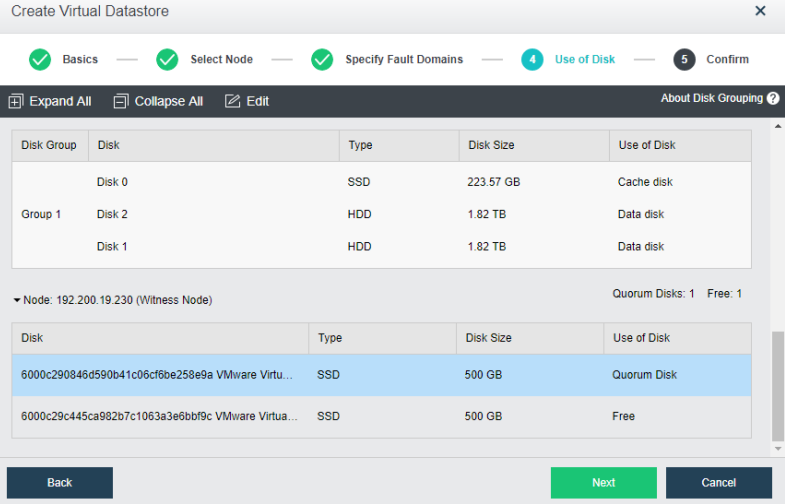

- Extended Volume

The dual-active data center is realized through the scheme of extending the cluster. The witness node must be deployed in this scheme to solve the split-brain problem and ensure data reliability. On average, the HCI node forms a cluster and is deployed to two machine rooms. Each machine room is configured as a fault domain, and each fault domain saves a copy. The data will be written to two copies simultaneously, and if any machine room fails, Data will not be lost.

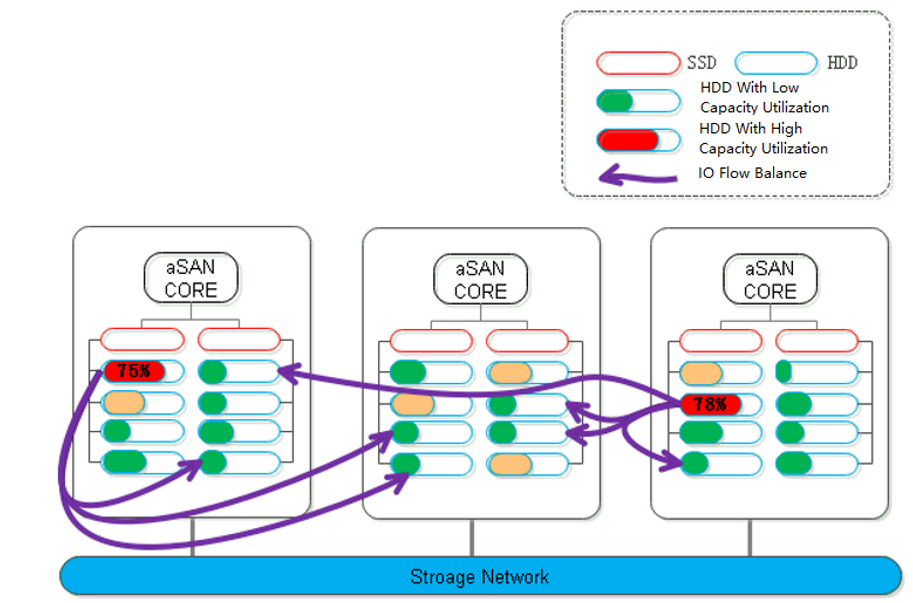

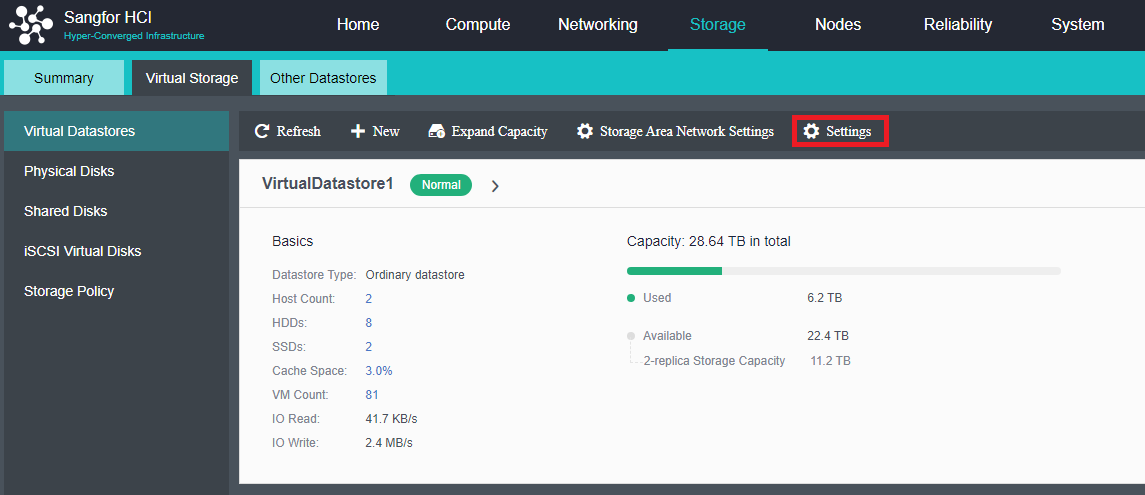

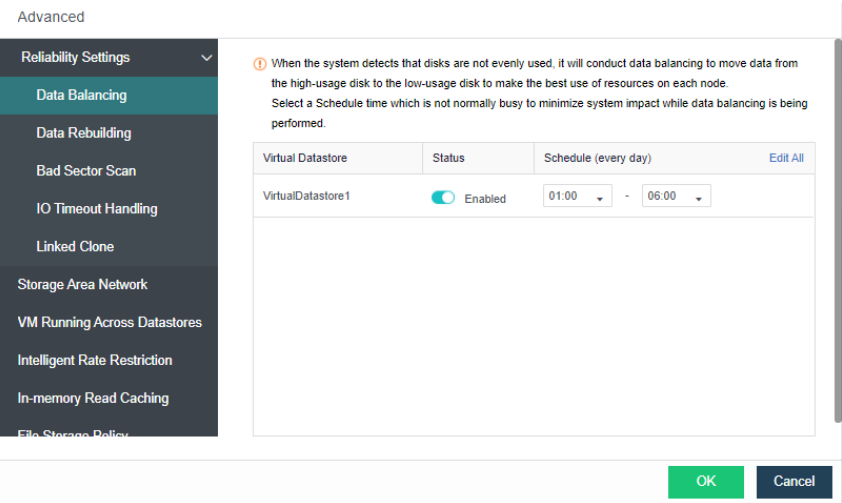

- Data Balance

During distributed storage, the disks between nodes may also be used unevenly. When it is found that the difference between the highest and lowest disk capacity utilization in the volume exceeds a certain threshold, aSAN will calculate the location of each partition on the source side disk and the destination side disk it is about to fall into. The number of destination disks can be multiple; the location can be other disks in the node or disks in other nodes.

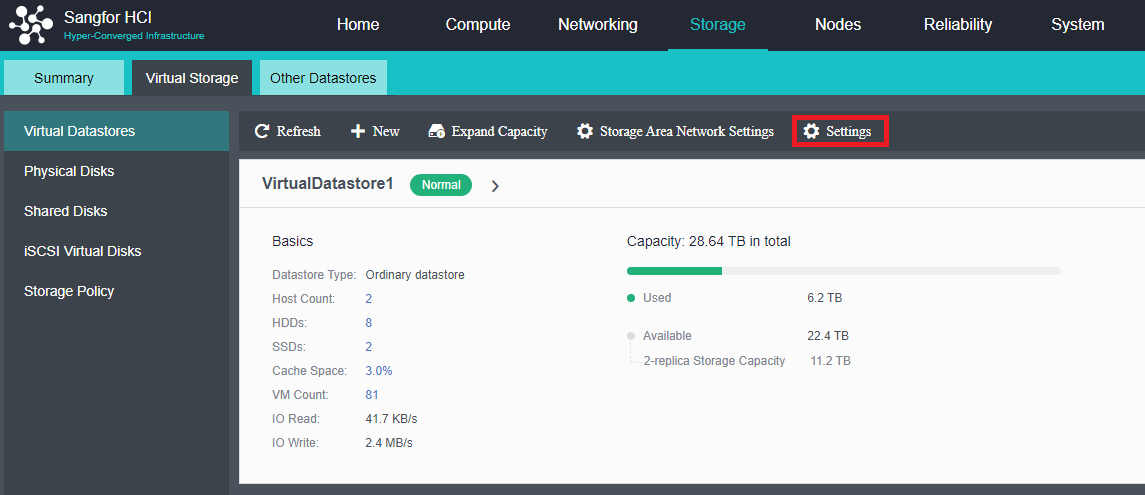

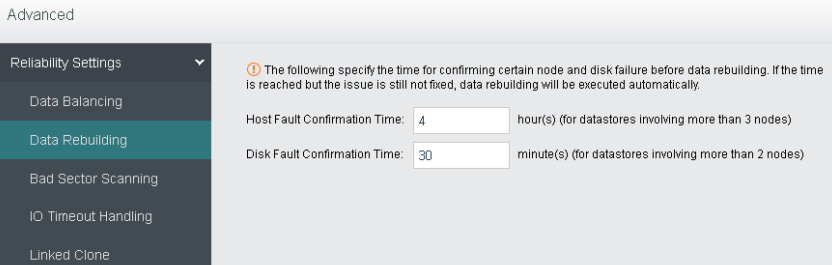

- Data Rebuilding

Through the data rebuilding function, after a component (disk or node) fails, aSAN will take another copy of the data on the failed component as the repair source, rebuild a new copy on the target component in the unit of fragmentation, restore the integrity, and realize system self-healing.

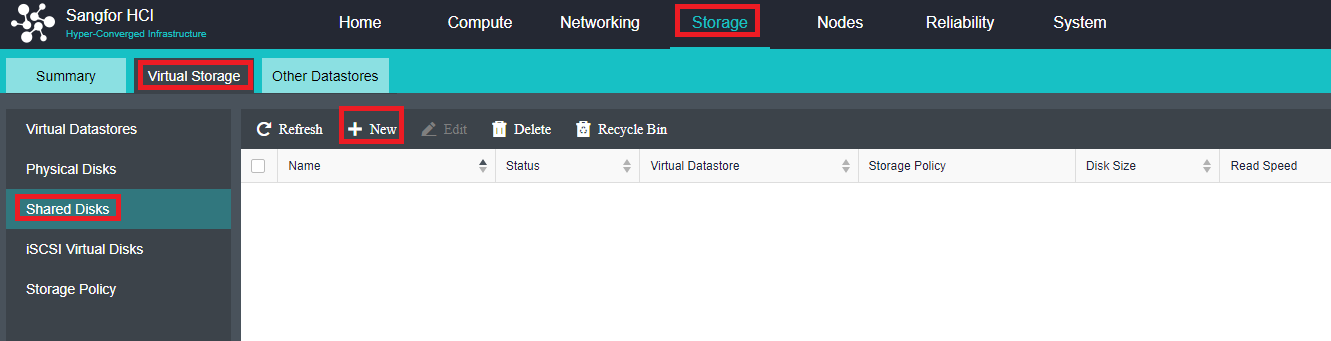

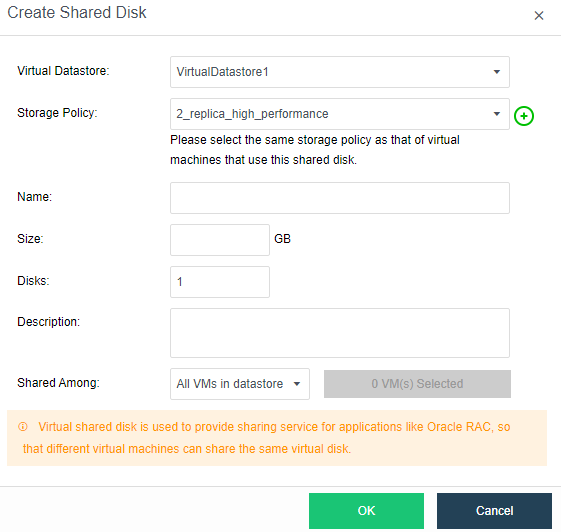

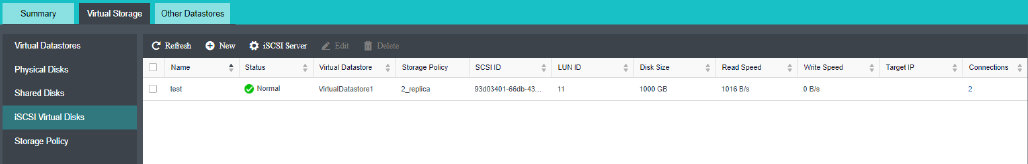

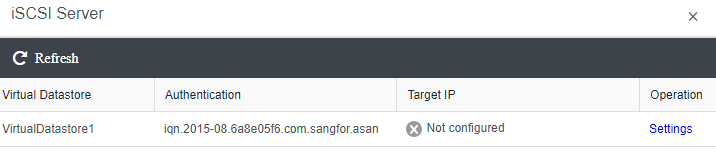

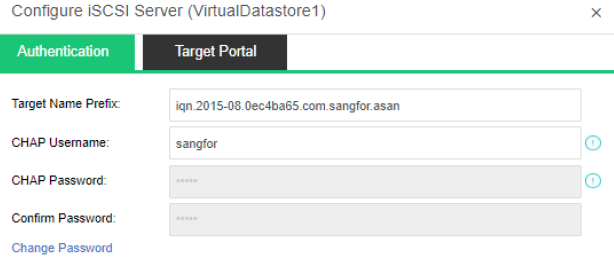

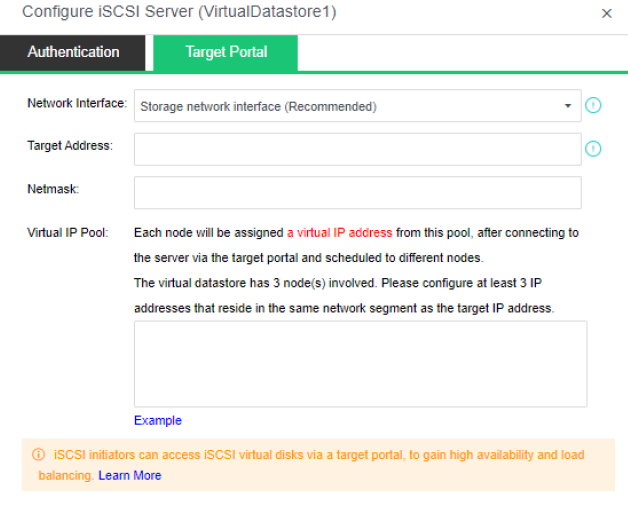

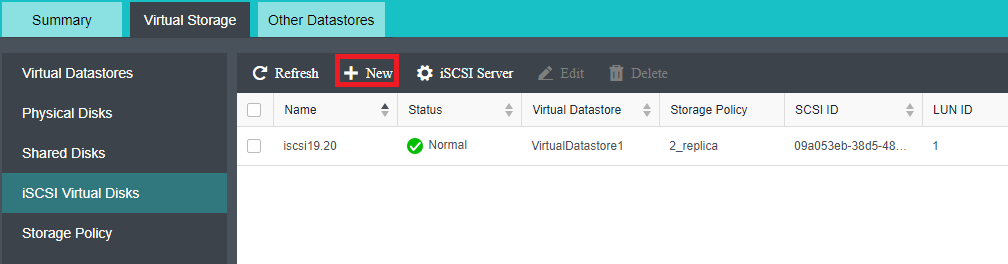

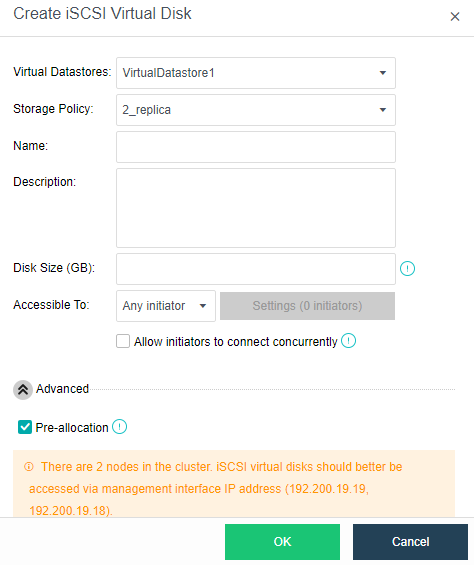

- Virtual iSCSI Technology

aSAN has virtual iSCSI technology. It can create a virtual iSCSI hard disk and supports access from a virtual storage network interface.

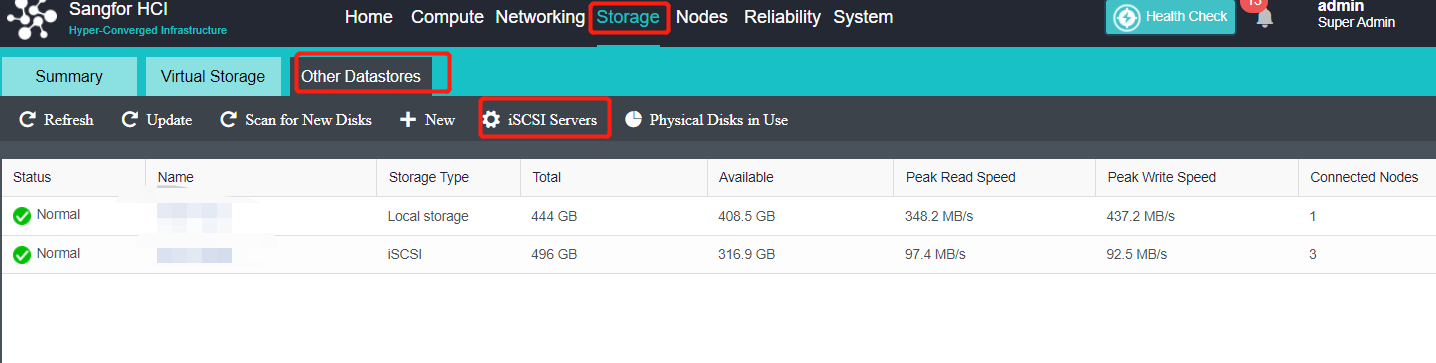

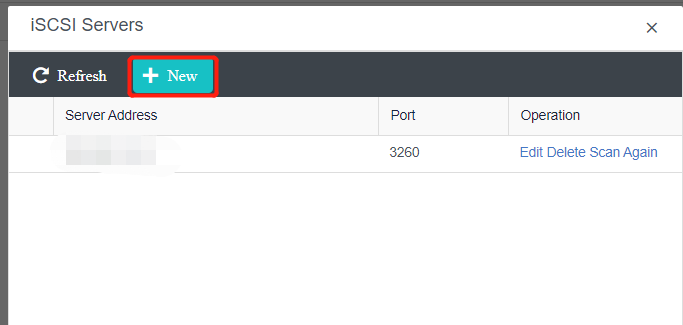

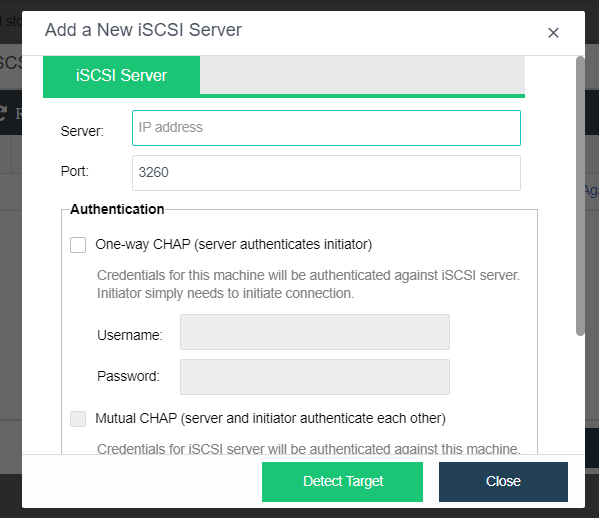

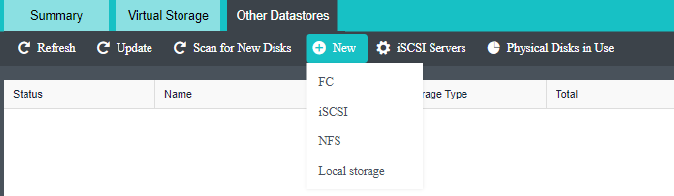

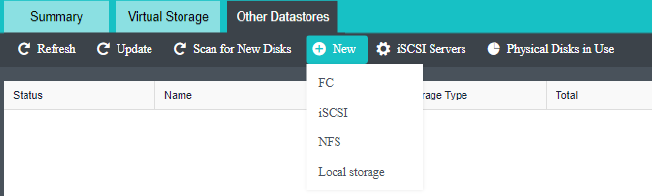

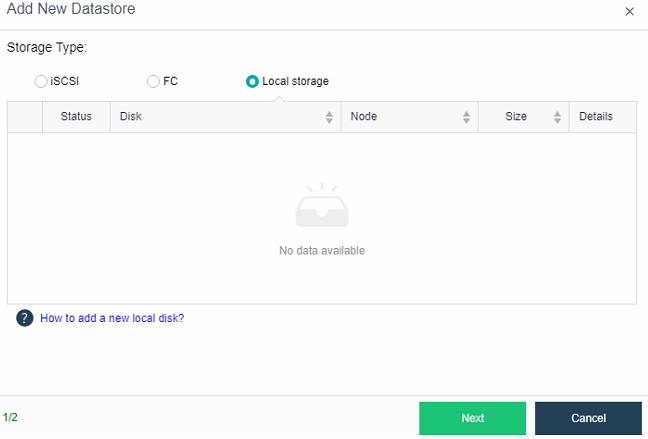

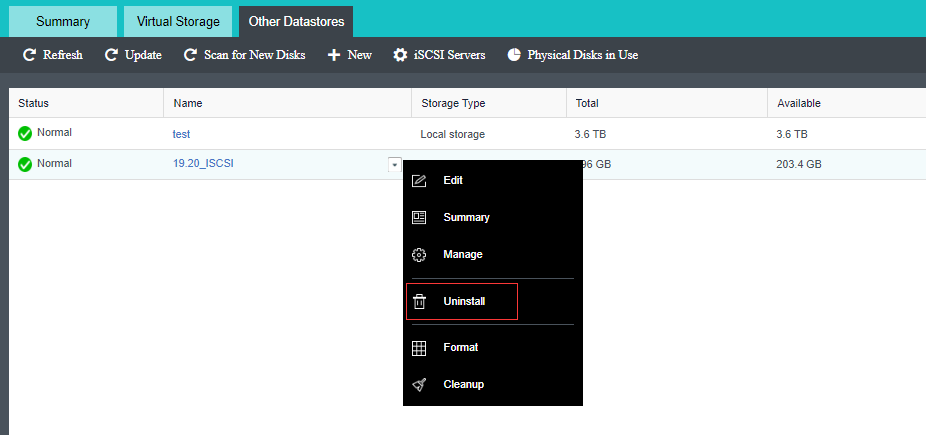

- Heterogeneous storage management

-

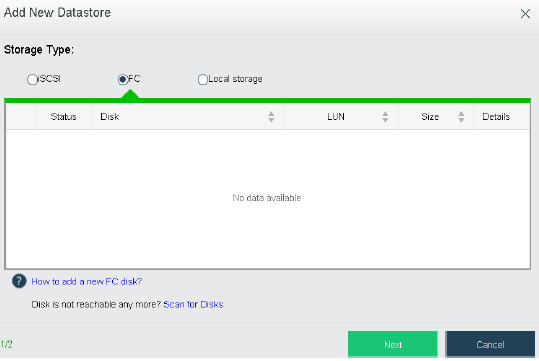

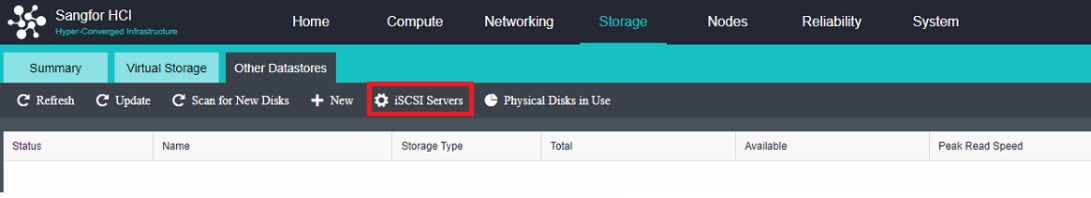

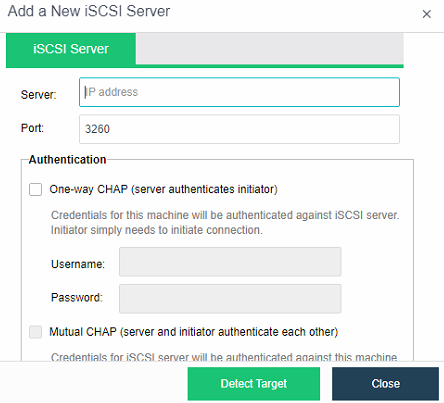

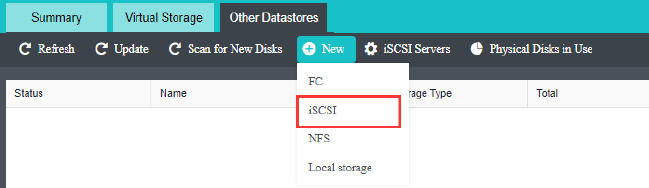

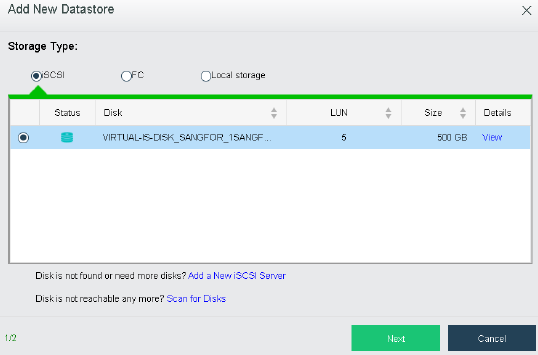

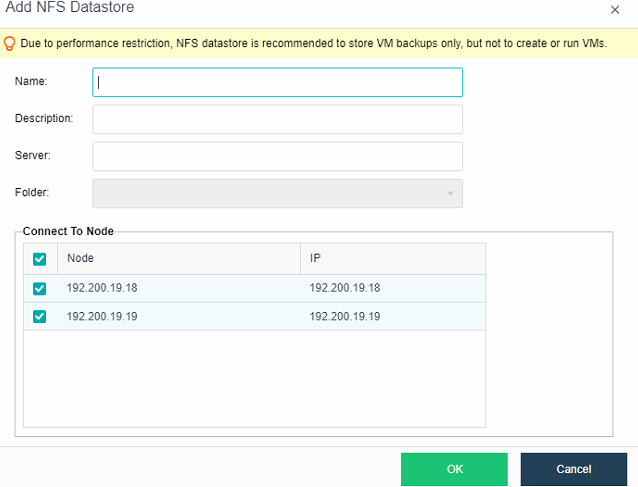

HCI supports adding FC storage and iSCSI storage as external storage, placing the datastore of virtual machines on FC storage or iSCSI storage, and realizing the HA function of virtual machines.

-

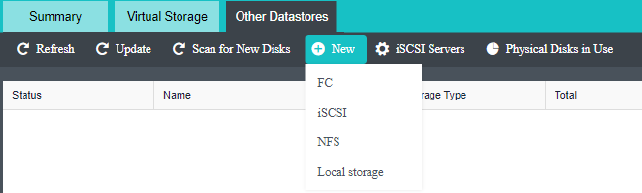

HCI supports adding NFS as the backup location of virtual machines.

-

HCI supports adding local or on-server disks other than the server system disk. After the RAID is formed, the logical disk is used as the virtual machine’s datastore, but the virtual machine’s HA function is not supported.

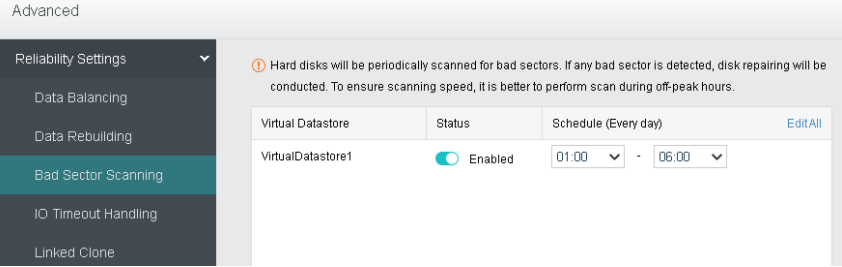

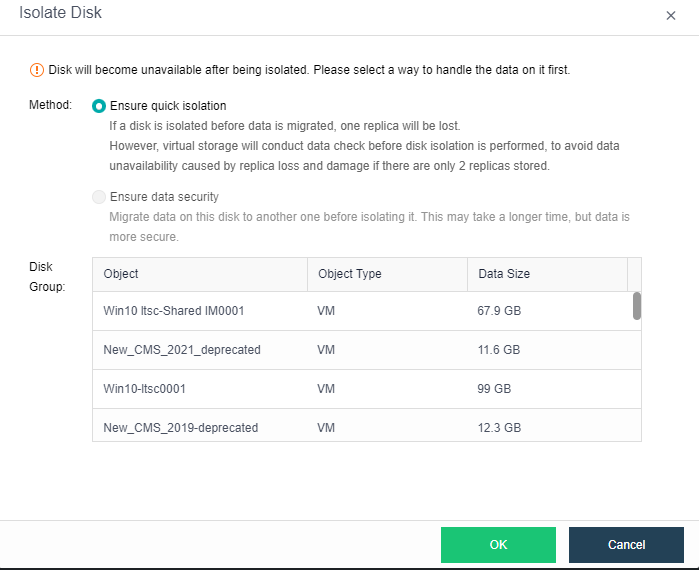

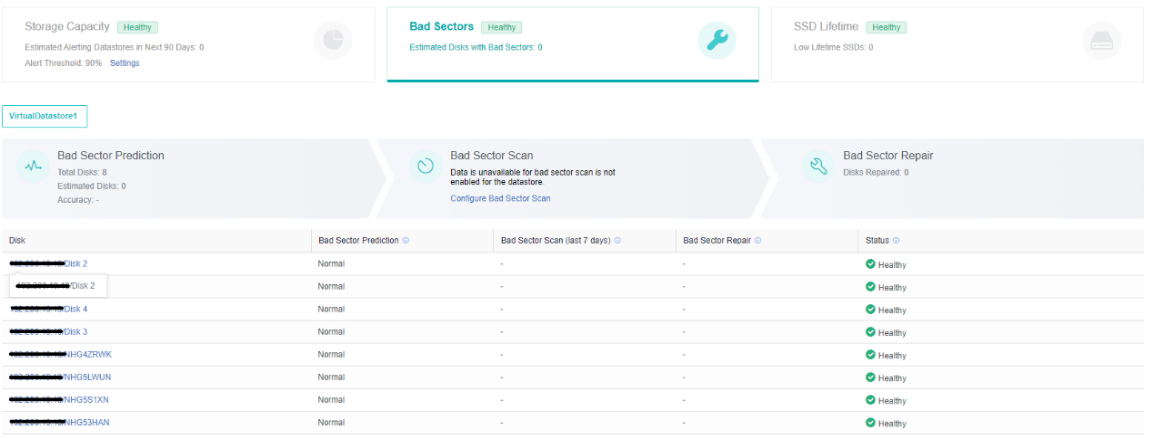

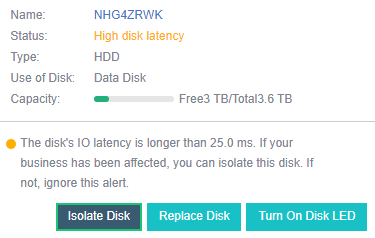

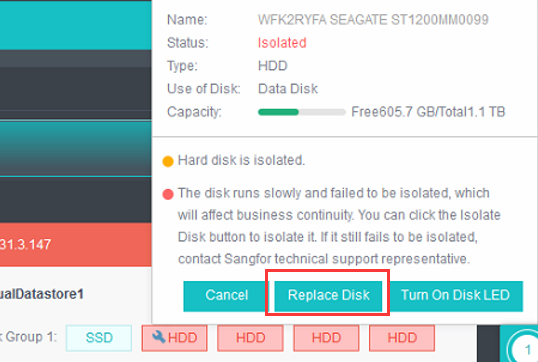

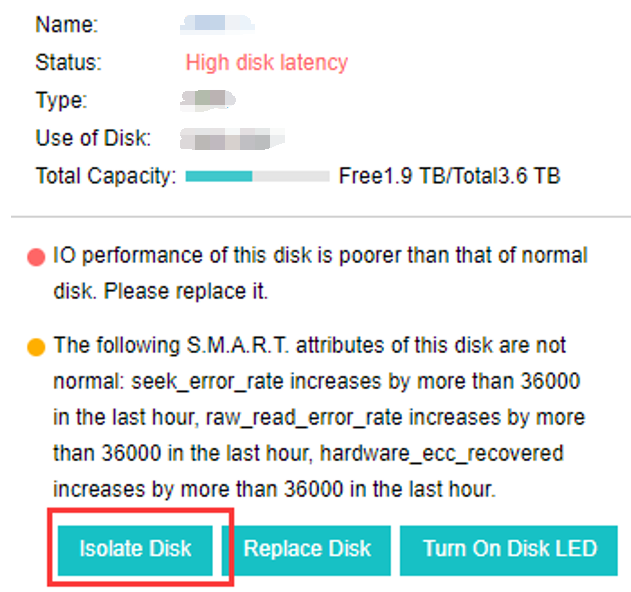

- Disk Sub-health Scanning And Repair

-

aSAN can find hidden bad sectors in time through the active scanning function of bad sectors to avoid the data being in the single-copy state for a long time.

-

When aSAN finds a bad sector, it will immediately trigger the repair of the bad sector data, read the data from another copy, repair it to the reserved sector of the bad sector disk, and recover the redundancy of the data copy in time.

-

aSAN actively migrates all data on hard disks with too many bad sectors (or SSD lifetime is about to run out) to other healthy hard disks in advance and always maintains the redundancy of replicas.

-

When the hard disk gets stuck, slows down, is congested, and under other abnormal conditions, it will affect the performance. At this time, the continuity of performance is guaranteed through sub-health disk isolation and read-write source switching.

- Bad Sector Prediction

We independently developed a high-precision bad sector prediction function by collecting and analyzing the SMART data, performance parameters, and hard disk log information of bad sector hard disks in many customers’ actual scenarios, combined with advanced algorithm training models. The accuracy of aSAN’s bad sector prediction is 95% above through many different business scenario tests.

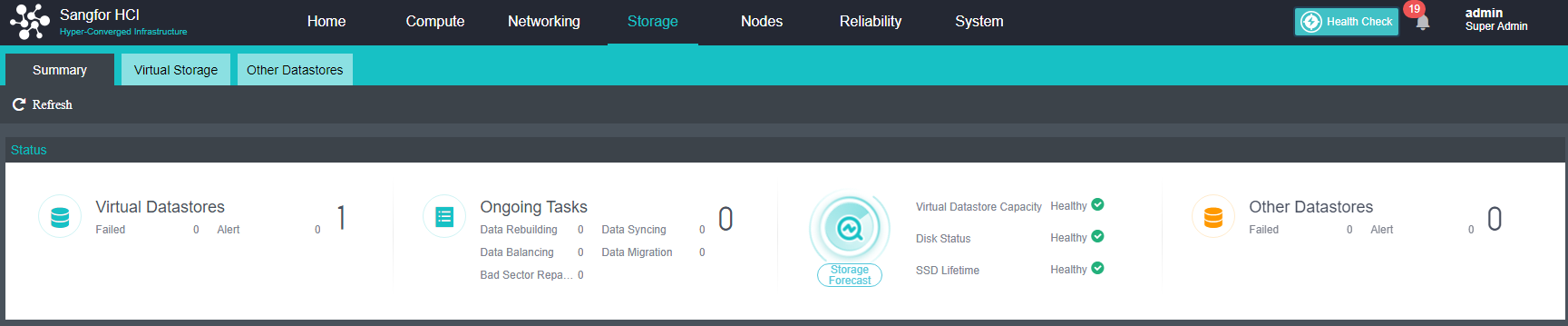

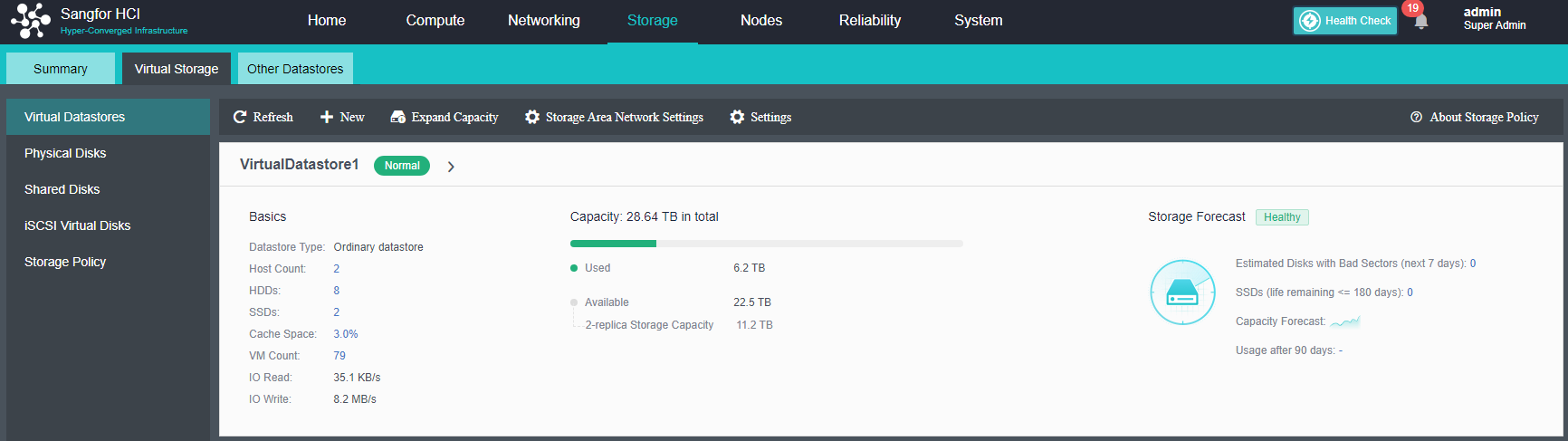

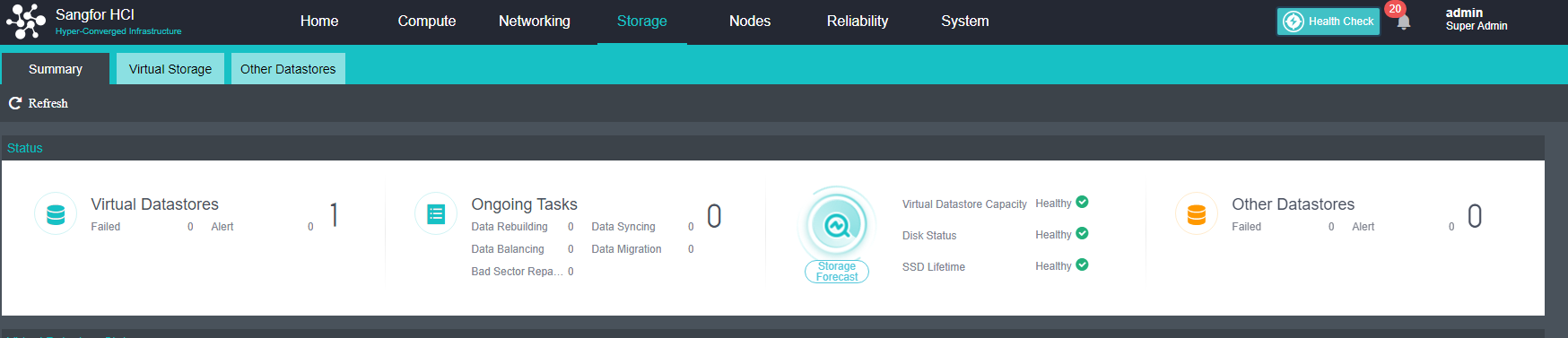

- Capacity Prediction

aSAN can dynamically predict the capacity growth trend in the next 90 days according to the capacity usage of customer clusters. In the capacity prediction interface, the user can switch and view the raw capacity, actual used capacity, and dynamic prediction curve of different virtual datastore. It will prompt the user that the used capacity will reach the capacity alert threshold (90%) in XX days.

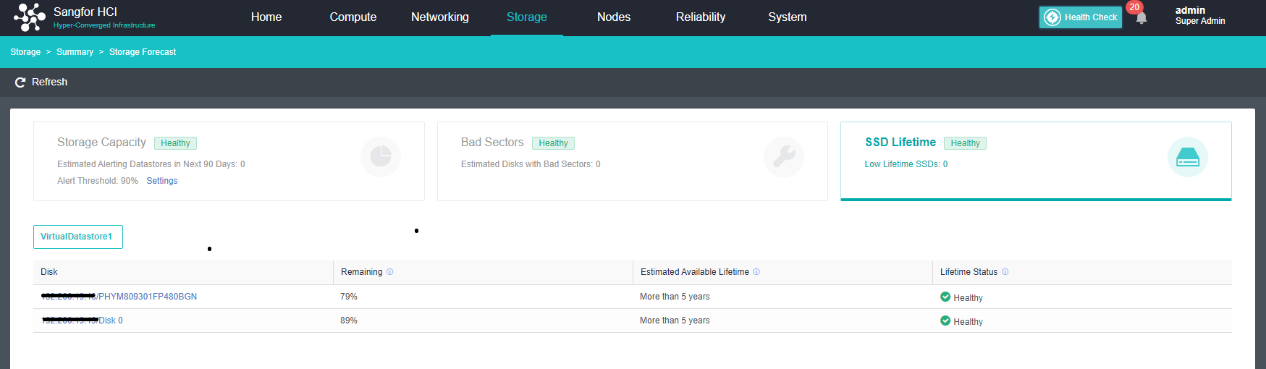

- SSD Lifetime Prediction

aSAN collects and analyzes the IO data of SSD hard disks in the cluster, calculates the remaining lifetime of SSD hard disks, and displays the expected remaining available time of SSD in combination with the upper business pressure. According to the prediction results, it is divided into three-lifetime levels: Healthy, Medium Risk, and High Risk. Notify users to replace the SSD hard disks in the cluster in time.

aNET Key Features

- Network Virtualization

-

The virtual distributed switch can ensure the consistency of network configuration when virtual machines migrate between nodes. It provides rich network configuration management functions, dynamic interface binding, and static binding.

-

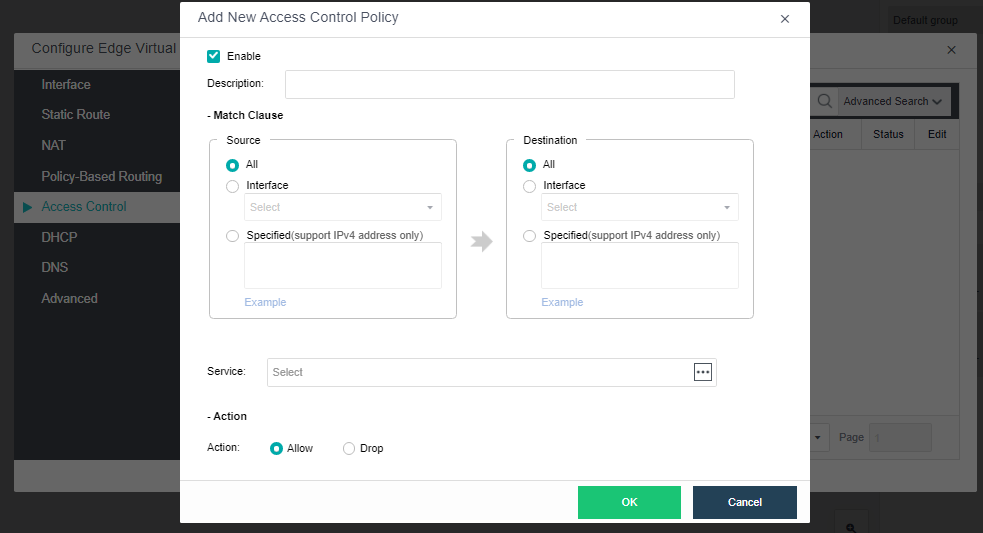

The distributed firewall provides IP access control and virtual machine network QoS to realize unified management of network resources and real-time network monitoring.

-

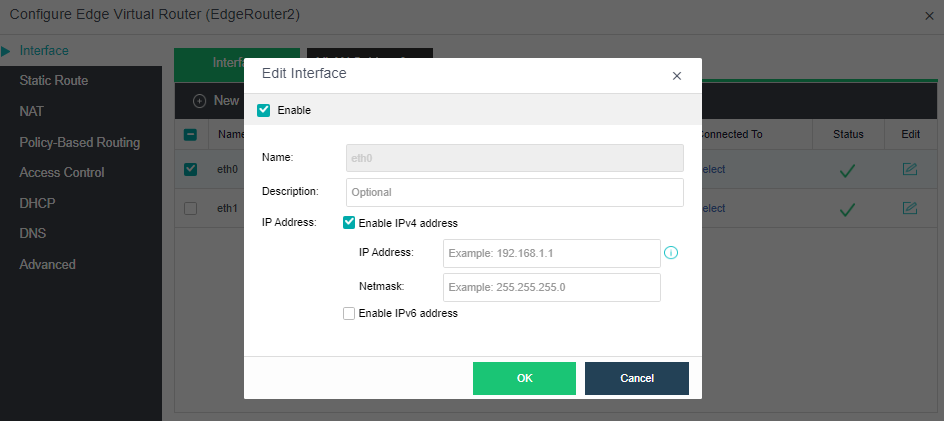

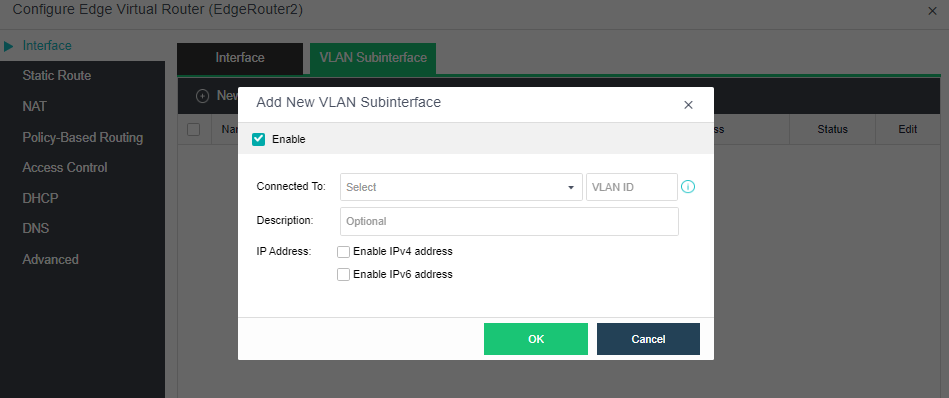

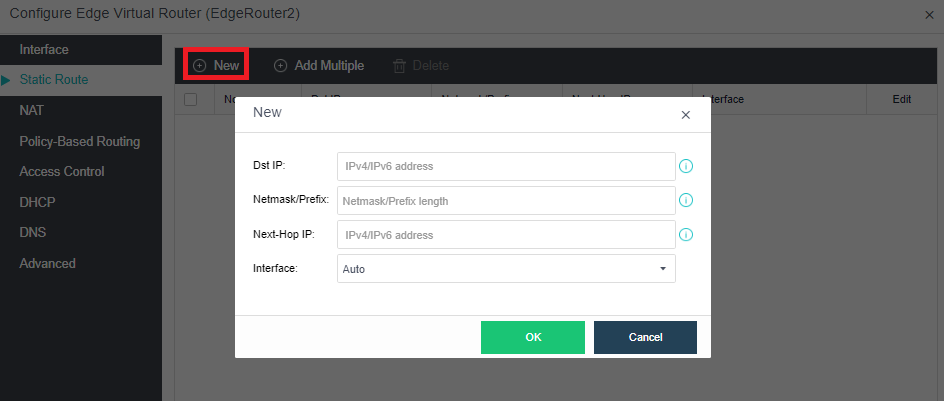

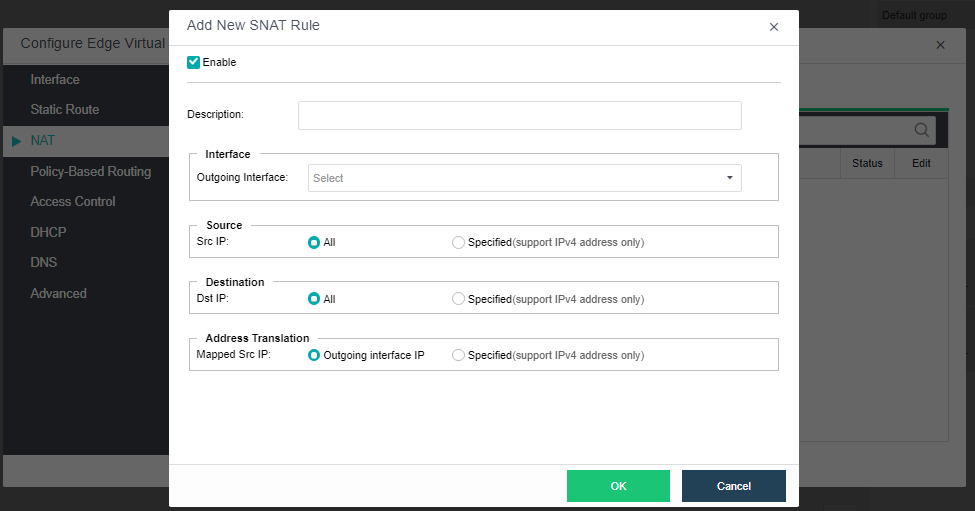

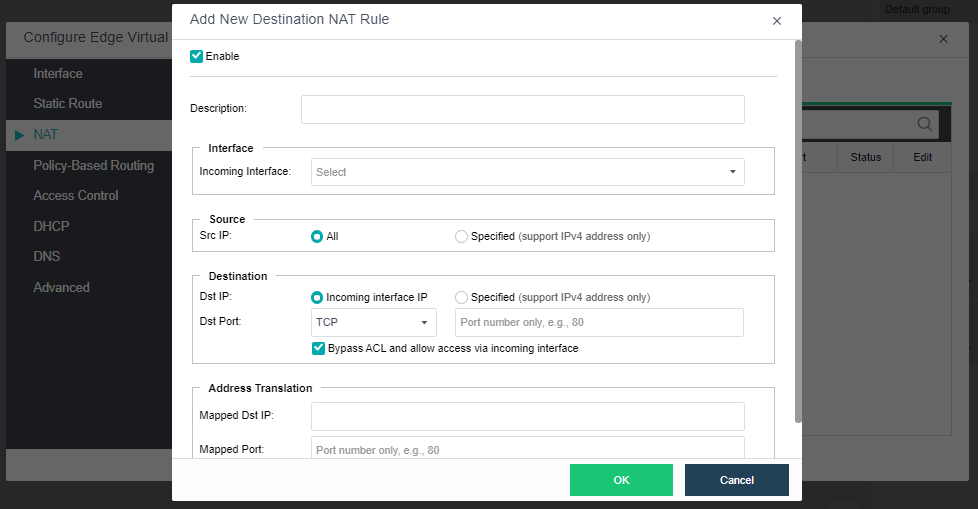

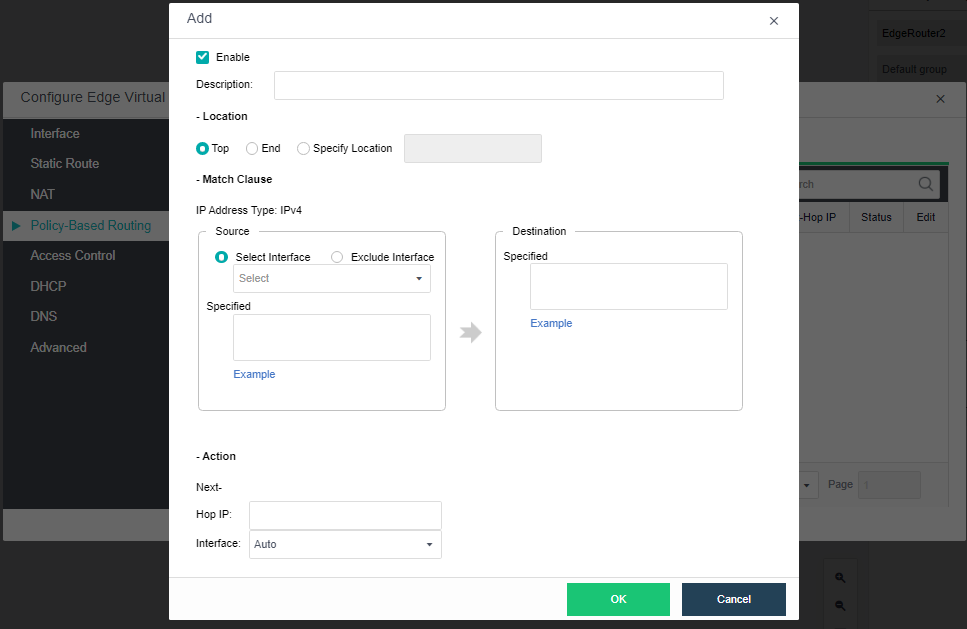

The virtualization router can solve the problem of exit routing after virtualization and provide other functions, including VLAN subinterface, NAT rules, ACL policy, DHCP address pool, DNS proxy, and so on.

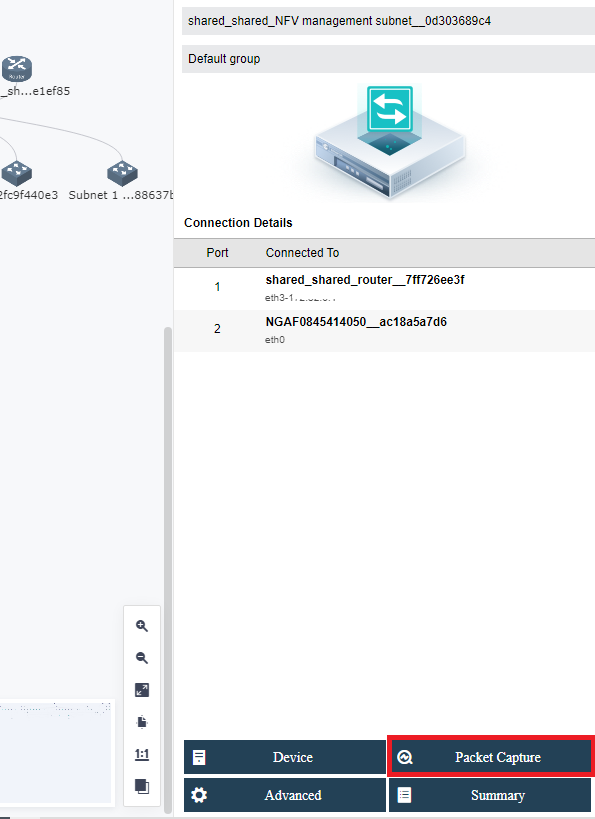

- Virtualization of Network Functions

Network Function Virtualization (NFV) separates network functions from proprietary hardware devices and implements these functions as software to support the infrastructure with complete integration of virtualization components. Sangfor’s HCI platform is complete in implementing NFV, including Sangfor network equipment software with leading technology in the security field, including vAF, vAD, vAC, vWOC, vSSLVPN, etc.

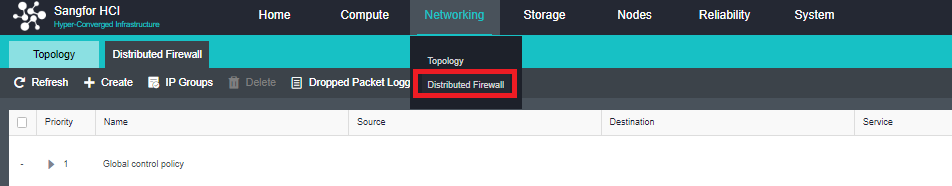

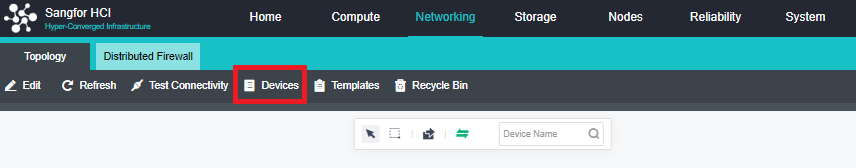

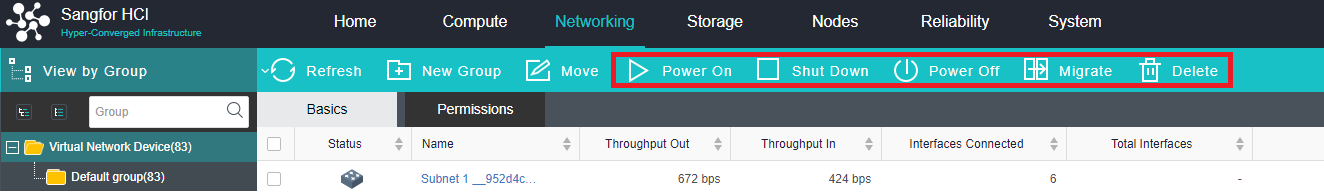

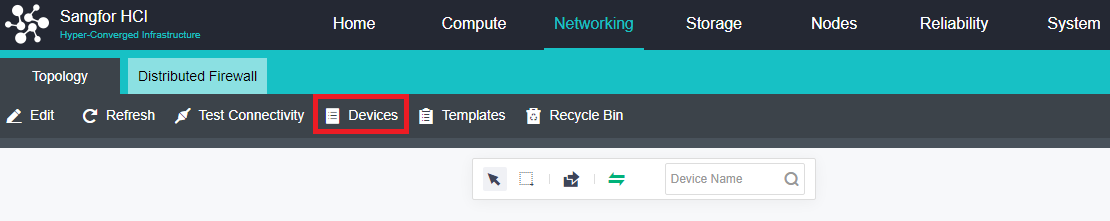

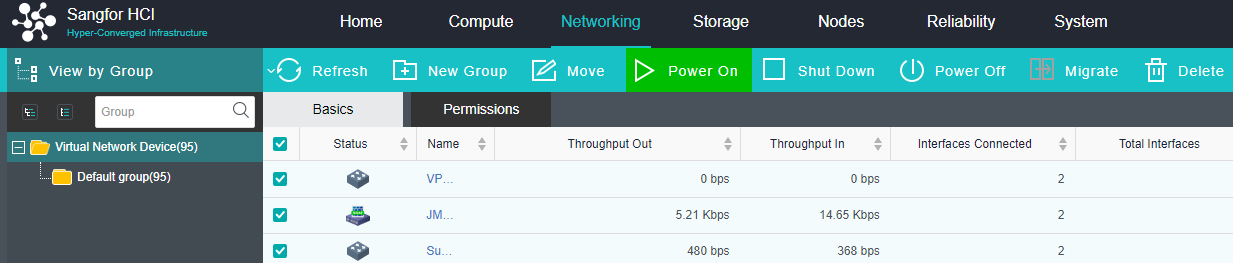

- Distributed Virtual Firewall

A distributed firewall is equivalent to placing a firewall at the exit and entrance of each virtual machine. As long as a policy is configured, it can dynamically adjust in the background no matter how the topology changes, where the virtual machine runs, or change of the IP. Therefore, security protection can be carried out at any time.

Installation And Deployment

Networking Installation Introduction

Precautions

| Node interface | Recommended minimum 6 Ge + 2 10GE | Prohibit the use of networks below Gigabit. | |

|---|---|---|---|

| Networking deployment | Storage private network | It is recommended to make dual switch link aggregation and adopt a 2 * 10GE interface. | 1. Must use the 10 Gigabit interface to build the storage private network. 2. Linkless aggregation is prohibited. |

| Networking deployment | Management network | It is recommended to stack and deploy, use 2 * Ge interfaces for aggregation, and use the IP address for a load. The corresponding interface of the switch needs to be configured with static interface aggregation. The networking switch of the cluster management interface and Overlay Network Interface needs to support multicast function. Otherwise, the cluster cannot be established. | IGMP snooping is prohibited. |

| Networking deployment | Service network | It is recommended to stack and deploy, use 2 * Ge interfaces for aggregation, and use the IP address for a load. Static interface aggregation needs to be configured for the corresponding interface of the switch. | – |

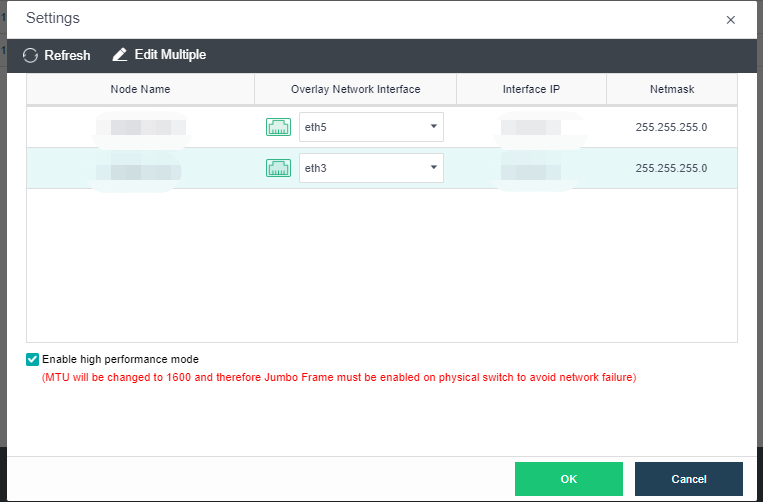

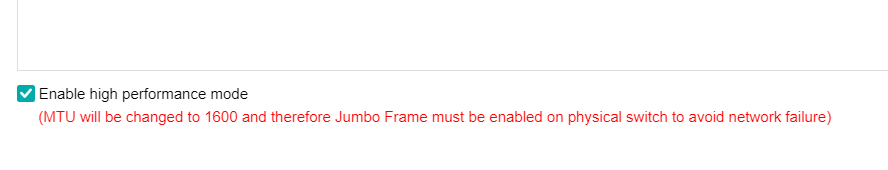

| Networking deployment | VXLAN network | It is recommended that the 2 * Ge network interface be aggregated, loaded in the IP address mode, and turned on the high-performance mode. The connected switch needs to turn on the jumbo frame, which is set to more than 1600 (the Xinrui switch is set to 2000). At the same time, the corresponding interface of the switch needs to be configured with static network interface aggregation. (it is allowed to reuse the VXLAN and service network when the equipment network interface is insufficient). | 1. A high-performance mode must be enabled for the VXLAN network settings of the HCI platform. 2. IGMP snooping is prohibited. |

| Networking deployment | Switch | The STP function must be turned off at the networking port on the switch. Except for the storage switch, other switches adopt stacked deployment. | Single switch deployment without redundancy is prohibited. |

| Networking deployment | IP address planning | It is recommended that the management network segment, VXLAN network segment, and service network segment be divided into different network segments to avoid IP address conflict. | – |

| Networking deployment | Network cable specification | 1. It is recommended to use multimode optical fiber within 50m and single-mode optical fiber over 50m. 2. It is recommended to use category 5 and above twisted pair. |

1. It is forbidden to connect a single-mode optical fiber to the multi-mode module. 2. It is forbidden to connect a multimode optical fiber to the single-mode module. 3. Class 1 to 4 twisted pair is prohibited. |

| Networking deployment | Dual active networking | 1. The bandwidth between the witness node and the active and secondary fault domain is recommended to be more than 100Mbps, and the delay is < = 1ms (fluctuation within 5ms is allowed). 2. The primary fault domain and the secondary fault domain of the stretched cluster volume must be connected with 10 Gigabit bare optical fiber, with a delay of < = 1ms. |

– |

- The networking switch of the Overlay Network Interface shall confirm whether it supports the jumbo frame. If yes, it is recommended to turn on the high-performance mode of the Overlay Network Interface and change the MTU of the VXLAN port to 1600. If it is not supported, it is forbidden to turn on the high-performance mode of the Overlay Network Interface. Otherwise, the data of the virtual machine will be blocked.

- The connectivity detection of Health Check is only Ping detection. Even if multicast fails, the detection result is normal as long as Ping is enabled.

- The four communication interface types (management communication port, VXLAN Overlay Network Interface, VS Storage interface, and physical interface) are not allowed to be multiplexed. However, in the case of an insufficient network interface, and you have to reuse the network interface, please reuse the physical interface and VXLAN interface. It is suggested that the network interface should be planned as two management interfaces, two storage interfaces, and two aggregation interfaces for VXLAN and physical export reuse.

- Do not hot-plug the NIC, whether a third-party server or an HCI aServer. Otherwise, the network interface may be out of order.

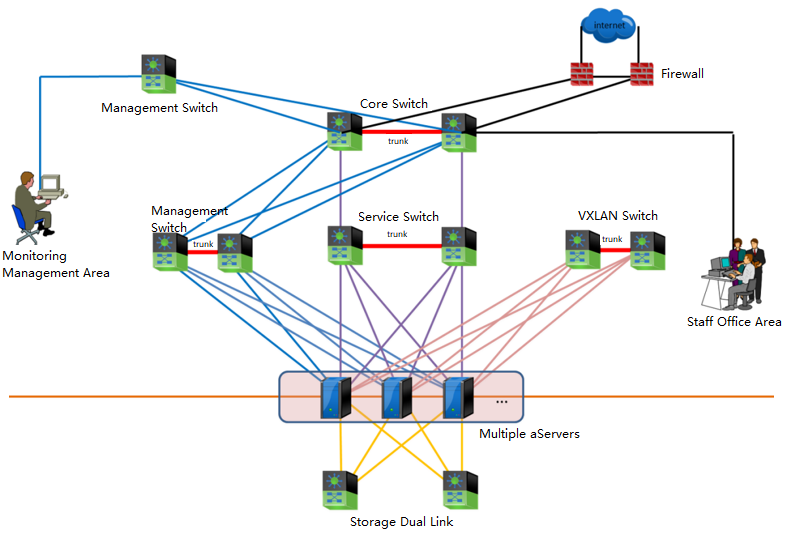

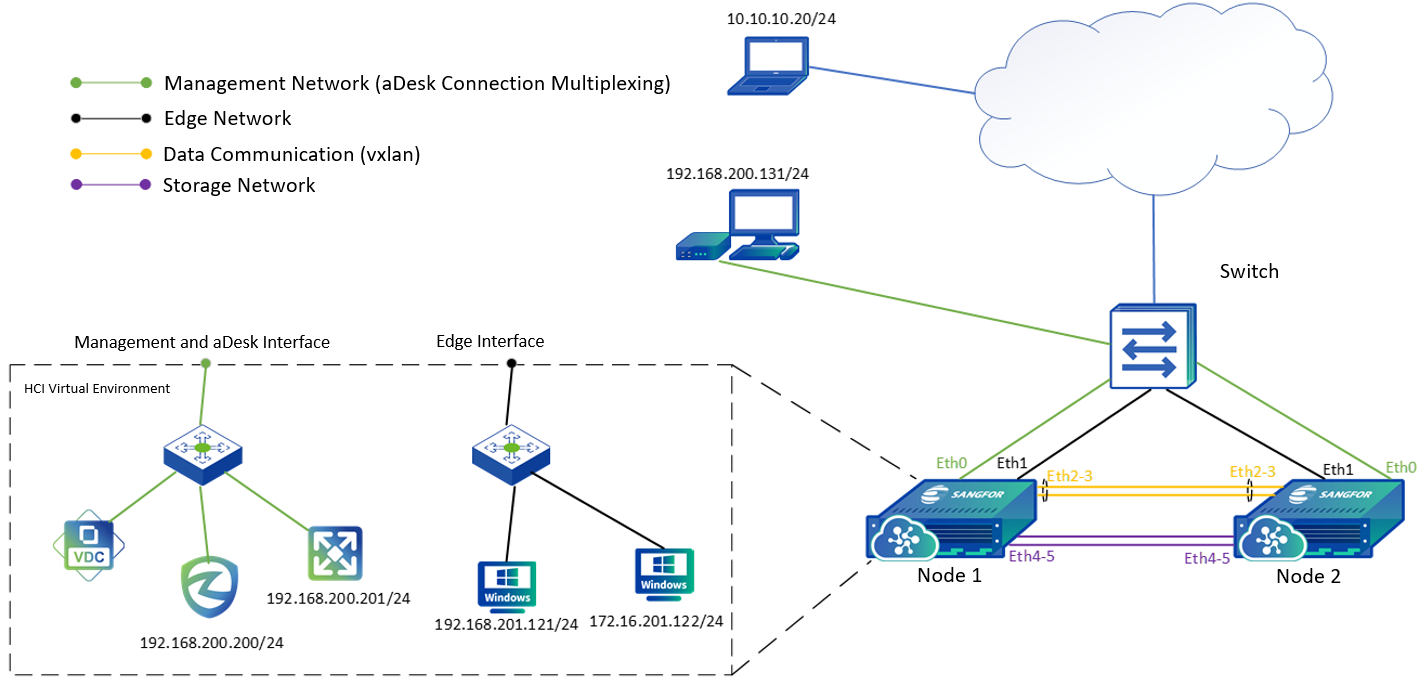

Introduction to HCI Ordinary Cluster Networking

HCI standard networking topology is as follows.

Explanation of terms:

-

The management network to reuse the management network for platform management, server IPMI, BMC, IDRAC, and other remote management network interfaces.

-

VXLAN network carries the east-west traffic of virtual machines and layer-2 communication.

-

A storage network is for aSAN storage, responsible for storage data communication between HCI nodes; When using IPSAN, it also serves as an interface for communication with external storage, layer-2 communication.

-

The service network carries the North-South communication of virtual machines.

Networking Description:

- Core layer

Using the data center core switch with high-capacity network message forwarding capability is recommended. The switch model is required to support stacking or clustering technology, and the downlink port rate is 10GE.

- Access layer

In the large-scale deployment scenario, it is recommended that the management, service, and VXLAN network planes adopt two stacking technology-supported switches(Gigabyte) data centers. The GE rate downlink connects to the server, and the uplink port rate is 10GE. Multiple 10GE links access the core switch using link aggregation to build a redundant, low convergence ratio and non-blocking service switching network. The storage network adopts two independent 10 Gigabit switches to do link aggregation with two switches to ensure Virtual Storage performance and improve the robustness of the whole network architecture. During deployment, the two hosts are aggregated and directly connected, and dual switch link aggregation should be selected.

In the small-scale deployment scenario, two stacked data center switches(Gigabyte) are recommended in the management, service, and VXLAN network planes. Each network plane is divided into different VLANs for logical reasons of isolation. The storage network also adopts two independent 10 Gigabit switches and dual switch links to ensure Virtual Storage performance and improve the robustness of the whole network architecture.

When the server is configured with 6 GE and 2 10GE network ports, the management, business and VXLAN networks adopt 2 GE network ports respectively, and the network ports are aggregated and bound; The storage network adopts 2 10GE network port and dual switch link to increase network redundancy and transmission bandwidth

For the management, service, and VXLAN network planes, the server network card aggregation supports three load balancing modes: IP, MAC address, and polling. It is recommended to use the IP mode for load balancing. The access layer switch interface needs to be configured with the corresponding interface aggregation mode. If the incoming switch is a Cisco switch, configure mode on to connect with the underlying aggregation NIC. For storage independent dual switches, configure access VLAN 1 on the switch port that maintains the storage connection.

In addition, since the HCI cannot perceive the cabinets, it is recommended to use the deployment mode of dual machines and dual cabinets as much as possible during HCI deployment. Stacked dual switches are placed in two cabinets, and servers in a single cluster are recommended to be placed in more than two cabinets.

Introduction to HCI Stretched Cluster

Networking

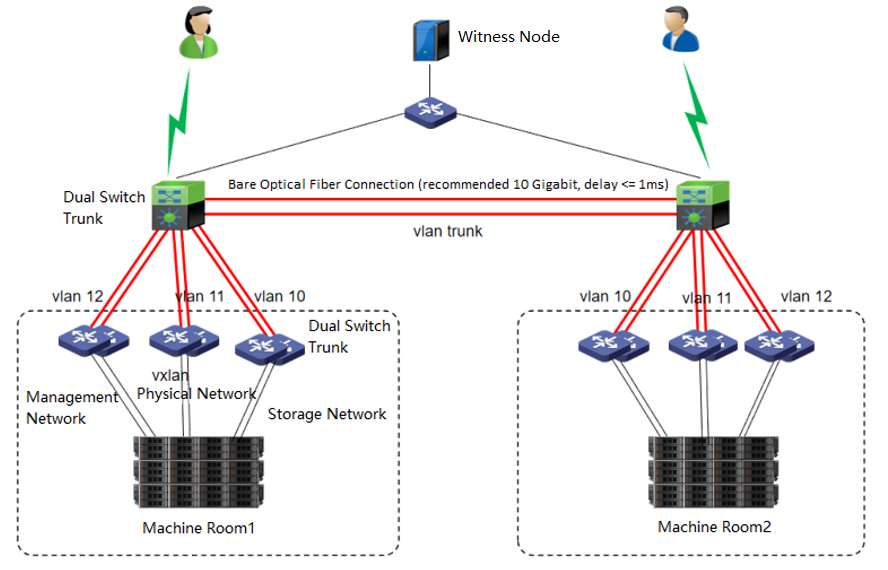

The network topology of the HCI stretched cluster is as follows.

Networking Description:

- Machine Room 1 and machine Room 2

Machine room 1 and machine room 2 form an HCI cluster. Under the condition of ensuring the interconnection of the management network, VXLAN network, and storage network of the two machine rooms, configure corresponding redundancy strategies for the management network, VXLAN network, and storage network in the machine room. Stack the three networks with two switches, and configure corresponding network aggregation on the HCI cluster. The recommendations are as follows:

Management network: Each node is aggregated with 2 * GE network interfaces and stacked with two separate management network switches.

VXLAN network: Each node is aggregated with 2 * GE network interfaces and stacked with two separate VXLAN network switches.

Storage network: Each node is aggregated with 2 * 10GE network interfaces and stacked with 2 separate storage network switches.

The two machine rooms can set their own Edge. It is also recommended that the edge network adopt 2 * GE network interfaces for aggregation to provide services to the external network. If the conditions allow, providing the individual edge to the two machine rooms is suggested. If the conditions do not allow it, set the edge in the main service’s machine room.

-

Machine room network

Machine room 1 and machine room 2 are directly connected through the second layer of the network. It is recommended to use a 10 Gigabit bare optical fiber network for aggregation, and the network latency in the machine room should be less than or equal to 1 ms. The switches of the two machine rooms are stacked, and three different VLANs are divided on the stacked switches to carry the relevant data of the management network: the VXLAN network and the storage network of the two machine rooms. -

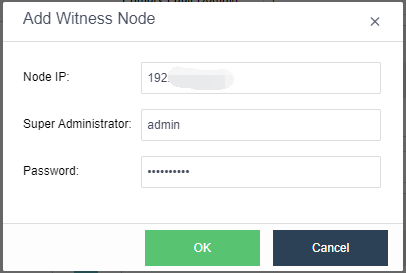

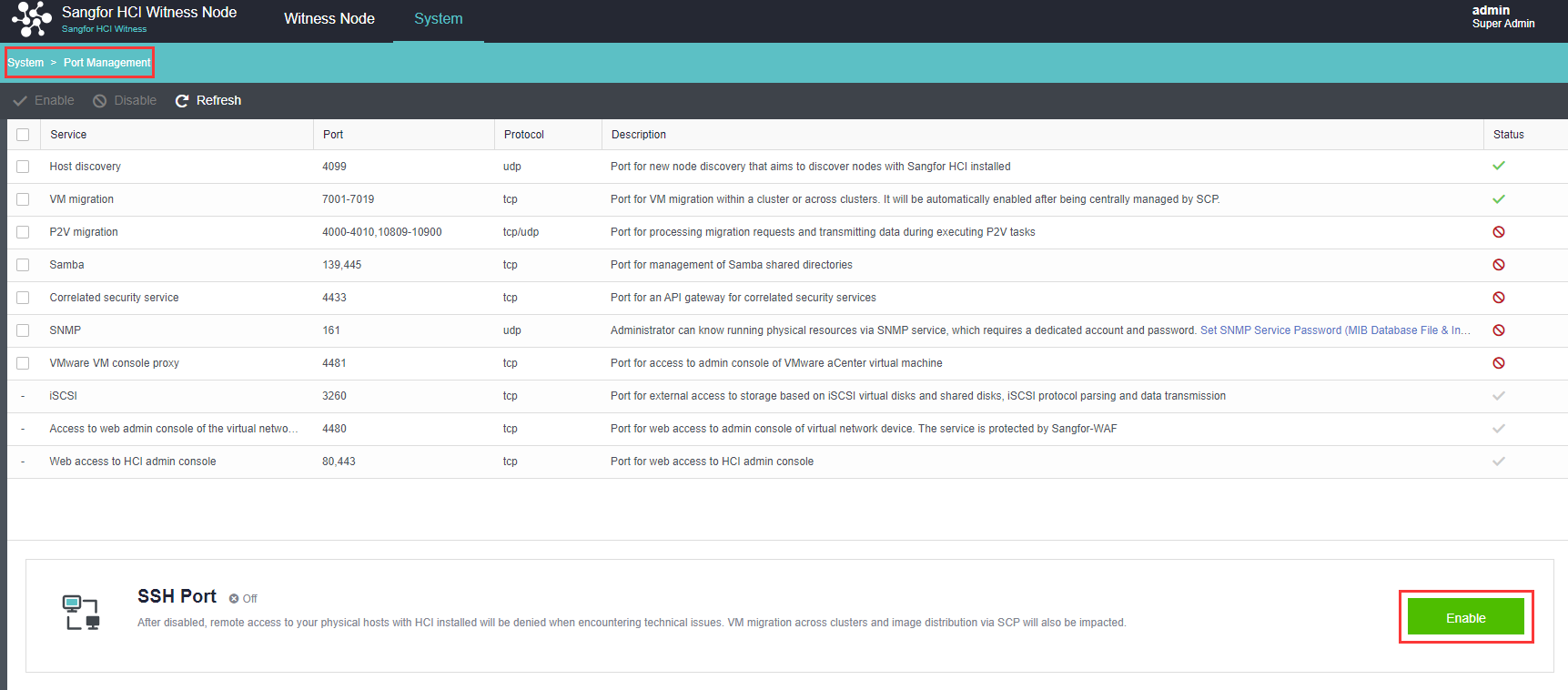

Witness Node

The witness node is important in the stretched cluster. It is different from the HCI system. To configure the witness node, you need to install the operating system of the witness node separately or purchase a separate aServer of the witness node. The regular communication between the witness node and the cluster shows whether the data copies in different computer rooms are abnormal and can be found in time. For the abnormal computer room, under the charge of the witness node, the business can continue to provide services in the computer room with normal data copies. The witness node can be deployed in the node room between machine room 1 and machine room 2 to carry services, or in a third-party site, provided that the witness node needs to be able to communicate with the HCI cluster IP. The link delay between the witness node and the cluster shall not be less than 5ms, and the recommended bandwidth is 100m.

Preparation Before Installation

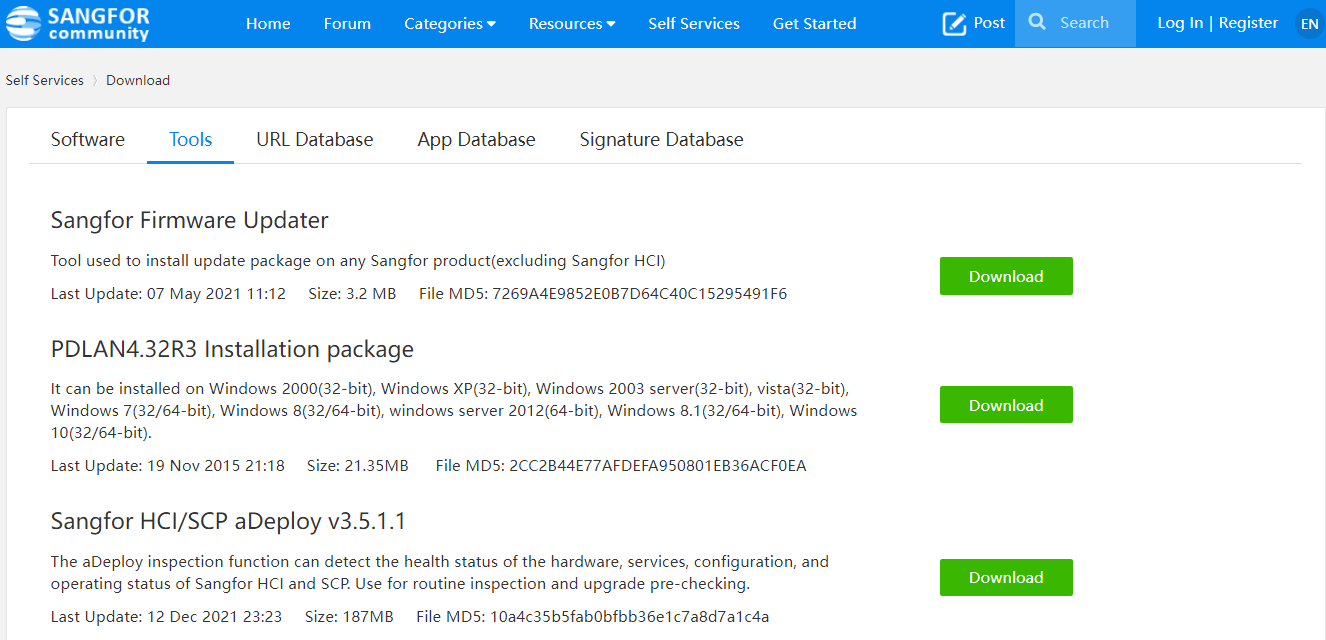

Document and Tool Preparation

| Tool / Material Name | Download Path |

|---|---|

| Sangfor HCI Version User Manual | Sangfor Knowledge Base > Knowledge Base > Cloud Products > HCI > User Manual |

| Sangfor HCI version installation image | Sangfor Community > Self Services > Download > HCI |

| Sangfor witness node installation package | Sangfor Community > Self Services > Download > HCI |

| Sangfor aDeploy | Sangfor Community > Self Services > Download > Tools |

| Sangfor virtual network device template | Sangfor Community > Self Services > Download > HCI > NFV Contact Sangfor Support or Community Livechat to obtain if it is not available. |

| Operating System Image | Self-access |

| Chrome、UltraISO、MD5 | Self-access |

HCI Cluster Configuration Requirements

| Name | Project | recommended value | Precautions |

|---|---|---|---|

| Server | RAID Card | 1. Passthrough (JBOD, non-raid) mode must be supported. 2. The cache disk and data disk must be configured in passthrough mode. 3. RAID1 is supported. (used by the third-party service system disk). 4. Use aDeploy tool to check the compatibility of raid card and raid card firmware. Hardware that does not meet the requirements will not be allowed to add to virtual storage. |

Raid0 mode is prohibited for cache disk and data disk. |

| CPU | It is recommended that the CPU dominant frequency be greater than 2.0GHz. (server-level CPU is recommended). | 1. It is forbidden to use CPUs with less than 8 threads. 2. Support HCI deployment to servers equipped with Haiguang 7159 CPU. 3. If using Haiguang CPU: a. Mixed deployment is not supported, and only Haiguang CPU can be used in the same cluster. b. NUMA scheduling function is not supported. c. Heterogeneous migration is not supported. d. Windows virtual machine is prohibited from turning on the high-performance clock. |

|

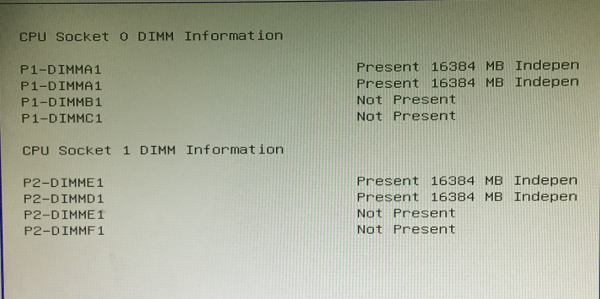

| RAM | RAM shall not be less than 64G. | For Nodes with less than 32G RAM, aSAN, aNET, and aSV are prohibited from fully enabled. | |

| System disk | 1. Enterprise hard disk is recommended for system disk. 2. The capacity shall not be less than 128G. 3. The third-party service uses two disks to make RAID1. |

1. The server system disk is prohibited from deploying a single disk RAID0. 2. It is forbidden to use SD card as HCI system disk. The reason why it cannot be used is that it has problems in reliability, performance, and stability. |

|

| Data disk | Distributed storage uses SSDs as cache disks. SATA (or SAS) is used as the data disk. SSD: the higher the HDD capacity ratio, the better the overall performance. Use aDeploy tool to check the compatibility of SSD Firmware. If it does not meet the requirements, it will not be allowed to be added to virtual storage. |

1. Non enterprise HDD disks are prohibited. 2. It is forbidden to use HDD Hard Disks with 7200 rpm or less. 3. It is forbidden to use a hard disk less than 600G as a data disk. (4T and above is recommended) 4. 4Kn disks are prohibited for versions lower than 6.2.0 or 2 hosts virtual storage clusters. 4Kn disks are supported since version 6.2.0). 5. It is forbidden that the capacity ratio of SSD cache disk or HDD data disk is less than 1:20 (the recommended capacity ratio in best practice is 20%). 6. If a read-intensive SSD is used, the SSD cache disk or HDD capacity ratio cannot be less than 7%. 7. The SSD cache disk or HDD data disk ratio cannot be less than 1:6. 8. The sum of data disk capacity among nodes and the maximum capacity cannot exceed 80% of the sum of all remaining nodes. 9. Differences in the capacity of data disks in a single host are allowed, but the capacity difference between the largest and smallest data disks should not exceed 2 times. 10. Whether in the test or implementation stage, the number of cache disks cannot be greater than the number of data disks. 11. requirement The SSD interface rate is 6gbps. If the rate is 3gbps, it is not allowed to join the virtual datastore. 12. HDDs with a rate lower than 50MB/s are prohibited from joining the virtual datastore. 13. SSD data disks (flash) with IOPs less than 10000 are not allowed to join the virtual datastore. |

|

| Cache disk | VXLAN Administration Service switch |

1. Non enterprise SSDs are prohibited. 2. The cache disk cannot use SSDs smaller than 240GB. 3. SSDs with DWPD less than 1 are prohibited (the spec parameter table of SSD can be found). 4. Do not use SSDs that do not support power-down data protection. 5. SSDs with IOPs less than 30000 are prohibited from joining the virtual datastore. |

|

| Switch | VXLAN Administration Service switch |

Support Stack/M-LAG and the VXLAN switch support jumbo frames (adjust MTU value). Disable the selection of switches that do not support jumbo frames. (some vendors call it MTU interface). |

|

| Storage switch | A 10 Gigabit switch must be used. | Gigabit switches are prohibited for private storage networks. |

Witness Node Configuration Requirements

Hardware Configuration Requirements

When deploying a stretched cluster, you need to deploy a witness node. The witness node can be deployed on a physical server or a VMware virtualization environment. You can refer to the following table for corresponding hardware planning according to the cluster size.

Note:

The arbitration disk does not support the use of mechanical disks. It must be an enterprise SSD and in the compatibility list. Otherwise, it cannot pass the checking.

| Cluster size | Minimum hardware requirements | Illustration |

|---|---|---|

| Small deployment (4 to 6 HCI nodes, 2 to 3 for each machine room) |

CPU: 6 cores Memory: 32GB System disk: capacity > = 128G Quorum disk: enterprise SSD with capacity > 100GB. Virtualization deployment requires no less than 1000 IOPs. |

Support VMware virtualization deployment and physical machine deployment. |

| Midsize deployment (8 to 16 HCI nodes, 4 to 8 for each machine room) |

CPU: 8 cores Memory: 32GB System disk: capacity > = 128G Quorum disk: two 128GB or 248GB enterprise SSDs are used and configured as RAID1 |

Physical machine deployment is recommended. |

| Large deployment (18 to 24 HCI nodes, 9 to 12 for each machine room) |

CPU: 16 cores Memory: 32GB System disk: capacity > = 128G Quorum disk: two 128GB or 248GB enterprise SSDs are used and configured as RAID1 |

Be sure to deploy using physical machines. |

Switch Configuration Requirements

Basic Configuration Requirements

-

Management, service, storage, tunnel, etc., adopt different VLAN isolation based on Security compliance considerations.

-

Turn off STP protocol.

-

Turn on the VXLAN interface jumbo frame.

-

Turn off the IGMP snooping on the switch connected to the management network and the VXLAN network.

-

The aggregation interface end-to-end switch in the active and standby mode does not need to be configured with aggregation.

Specific Configuration Examples

| Network type | Interface type | Example (Configuration in Interface View) | Remarks |

|---|---|---|---|

| Management network | access | Interface Ethernet1/0/48 switchport access VLAN 2 |

– |

| Storage network | access | Interface Ethernet1/0/48 switchport access VLAN 3 |

– |

| Tunnel network | access | Interface Ethernet1/0/48 jumboframe enable switchport access VLAN 4 |

The tunnel interface needs to turn on the jumbo frame |

| External network | trunk | Interface Ethernet1/0/48 switchport mode trunk switchport trunk allowed VLAN 10-20 |

– |

Introduction to Installation Process

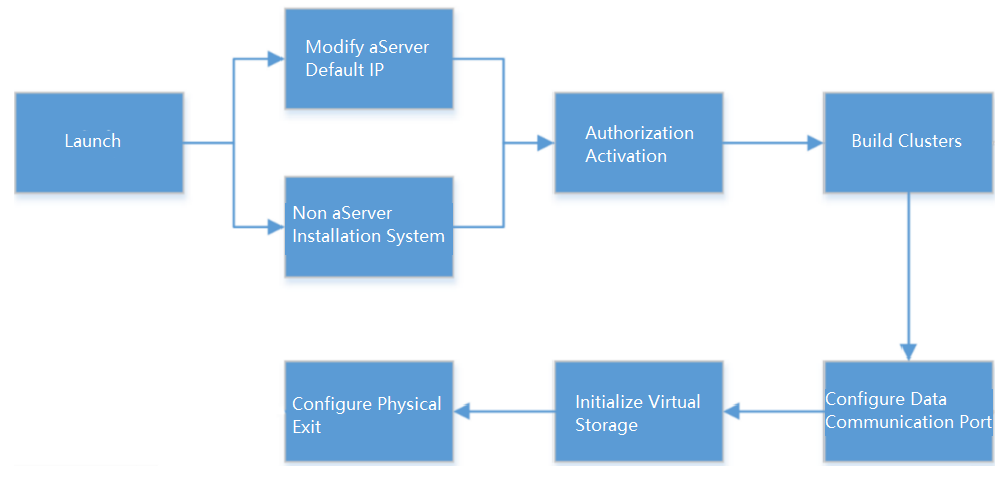

The HCI cluster installation process is outlined in the figure below.

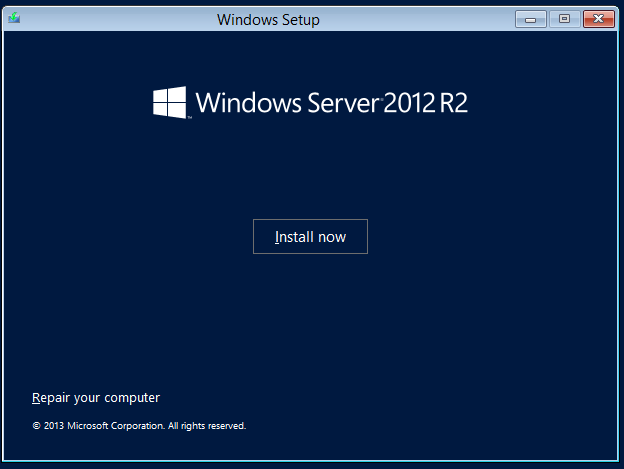

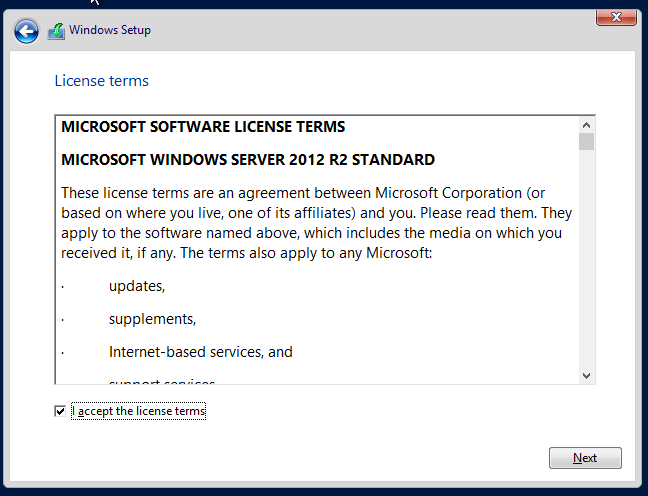

HCI Virtualization System Installation

aServer

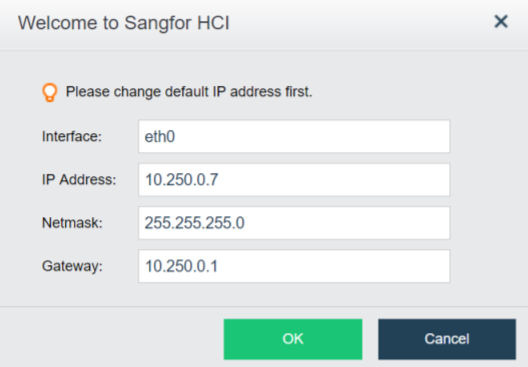

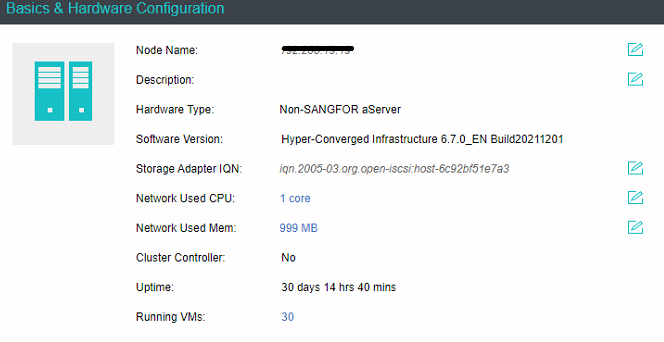

The aServer has pre-installed the HCI operating system and set the eth0 interface as the management interface. The default IP address is 10.250.0.7/24. Use a PC to configure the IP address of the same network segment, connect directly to the eth0 port, and open it with a browser https://10.250.0.7/. Then, you can log in to the console of the aServer.

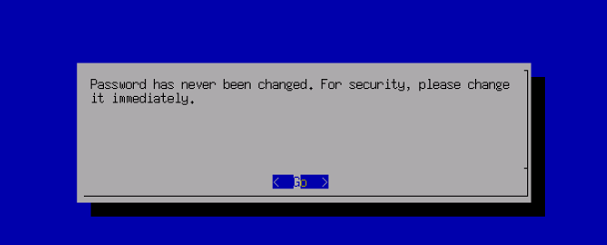

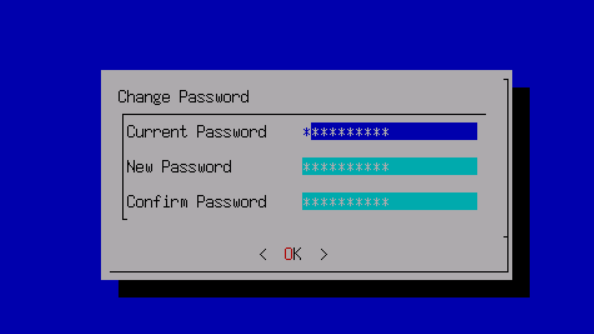

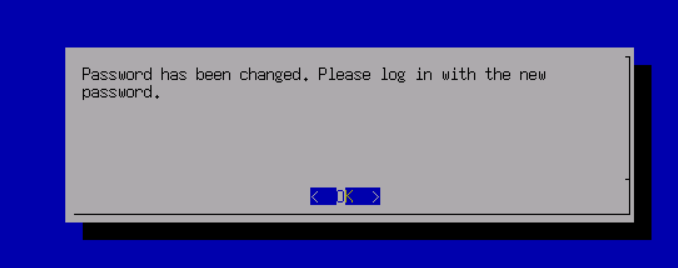

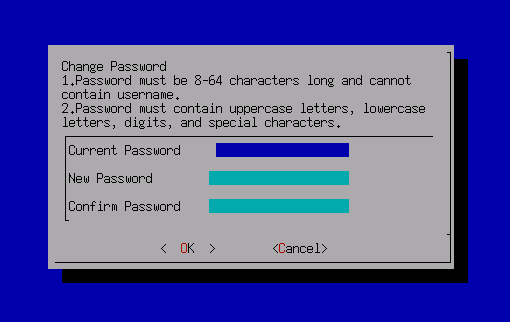

The default administrator account and password are admin. You will be prompted to change the default password when you log in for the first time. You will be forced to change the default password after a month.

After the first successful login, you will be prompted to modify the default IP address. If there are multiple aServer nodes, you need to log in separately to modify the default IP address before forming a cluster, and the IP address must be in the same network segment.

The aServer is signed and can only use the signed disk (shipped from the original factory). If the aServer is inserted into a third-party disk, it cannot be recognized in virtual storage and can only be used for local storage.

When virtual storage is used, node deletion is not supported. Taking the node out for separate use is forbidden after the cluster is disassembled.

The aServer cannot reinstall the system. After reinstalling the system, you need to re-sign the node and disk.

Do not perform a low-level format on the aServer disk. Re-sign is required after formatting.

Please refer to the BIOS Configuration Requirement section below for the configuration of the IPMI interface.

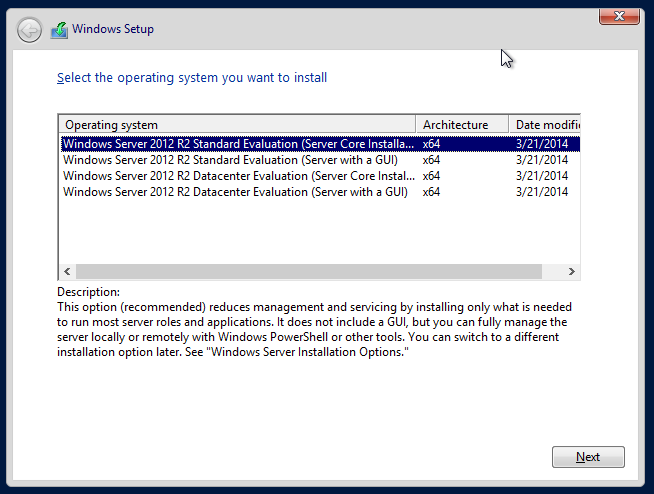

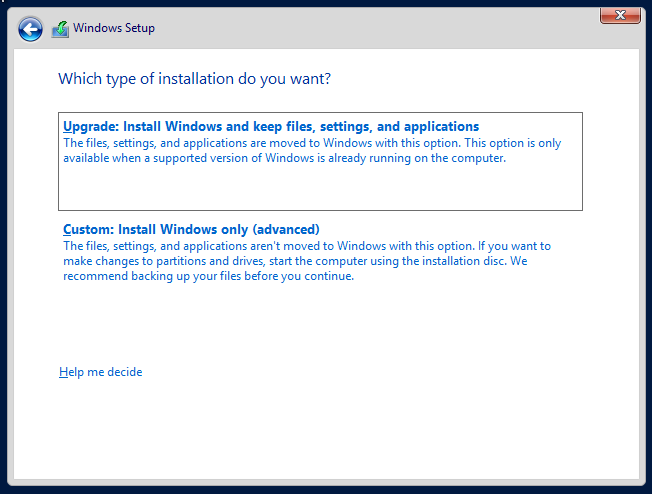

Third-Party Server

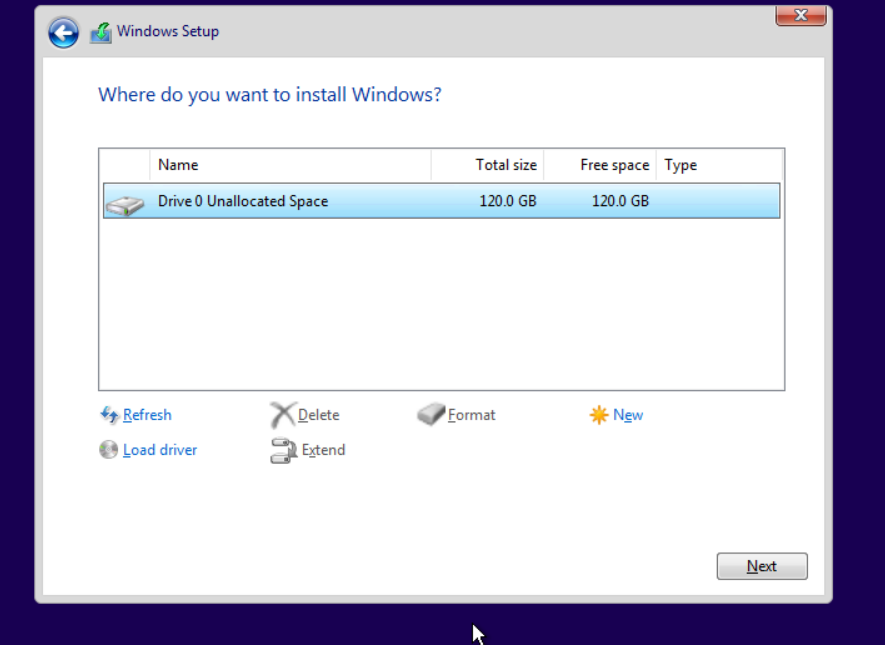

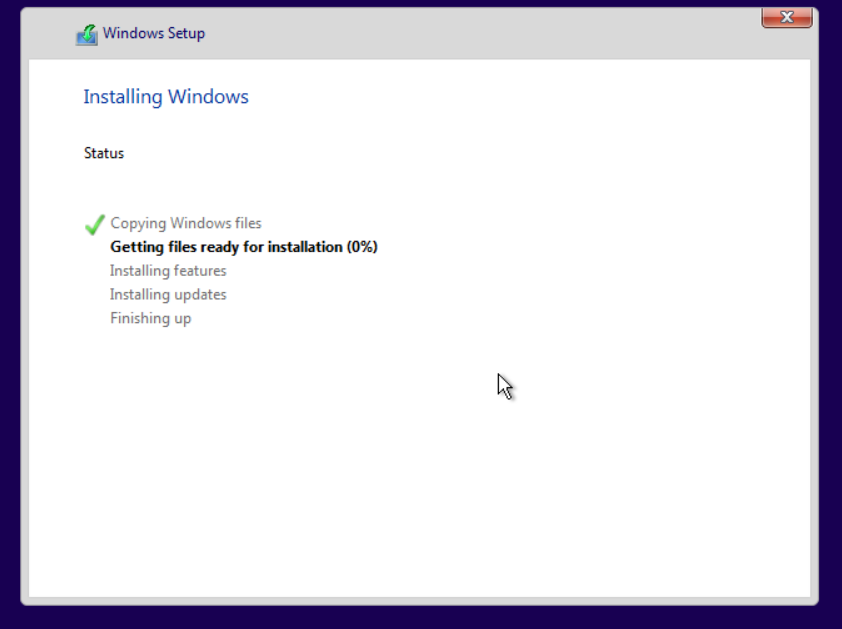

The third-party server installation system can use IPMI for ISO and USB flash disk installation. The main difference between the two methods is in the image mounting. The installation part is the same.

Server Configuration

Hardware configuration requirements

When installing HCI using a third-party server, each host hardware must meet the following minimum configuration requirements. Selecting the server hardware according to the recommended configuration is recommended to ensure the best effect.

Note:

Suppose you need to use the virtual storage function and the system disk. Each node must also be configured with at least one 240GB enterprise SSD (must support TRIM/Discard instructions) as the cache disk and several 1TB enterprise HDDs (RPM greater than or equal to 7200rpm) as the data disk.

The ratio of SSDs to HDDs shall not be less than 1:6, and the minimum ratio shall not be less than 1:25. If it is a read-intensive SSD, the ratio of SSD cache disk/HDD capacity shall not be less than 7%.

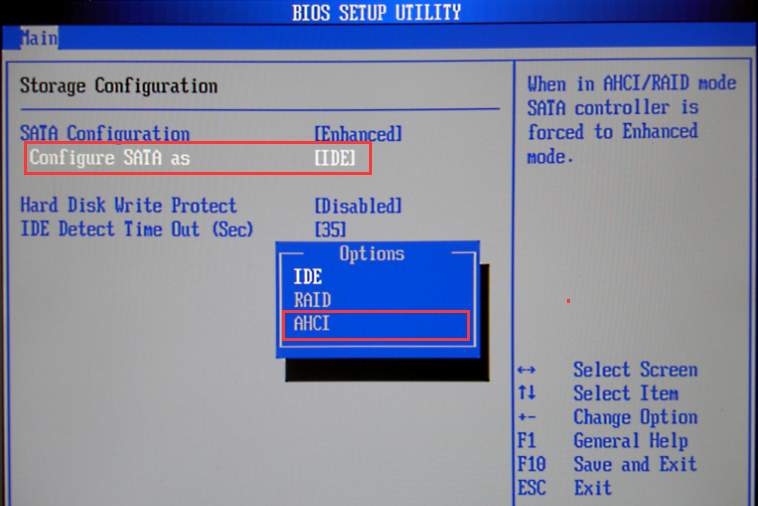

RAID Card Configuration Requirements

If there is a raid card, it is recommended to select a RAID card that supports JBOD / non-RAID passthrough and supports transparent transmission of trim instructions. If the RAID card does not support JBOD / Non-RAID, disable the raid card directly and enable normal disk mode (AHCI / IDE).

BIOS Configuration Requirement

When using a third-party server, you need to modify some BIOS options to ensure the efficient operation of the HCI platform. BIOS configuration requirements:

-

BIOS time synchronization

-

Enable VT-x or VMX

-

Turn Off Energy Saving Mode

-

Turn on power on self start

-

Configure IPMI address (optional)

Specific Configuration Examples

The method of entering BIOS is defined by the BIOS vendor. Usually, when the server is powered on and started, the screen will prompt to press del, ESC, F10, F11, and other keys to enter the BIOS Setup interface.

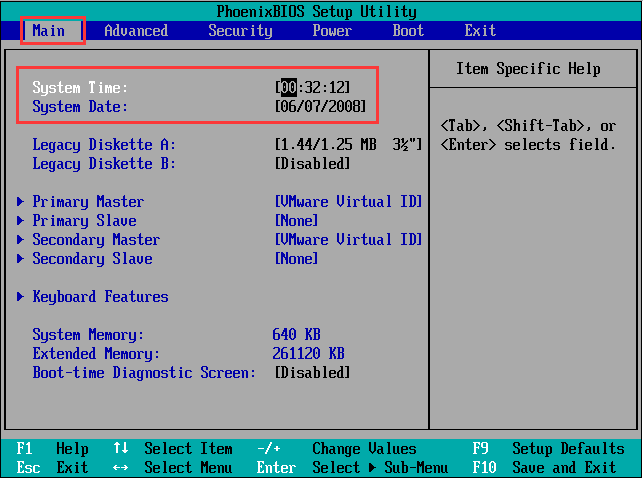

- BIOS Time Synchronization

The BIOS time of the server will be read as the system time when installing the hyper fusion platform. If the system time is inaccurate, the operation of some services may be affected. Therefore, you first need to modify the BIOS time to the current time.

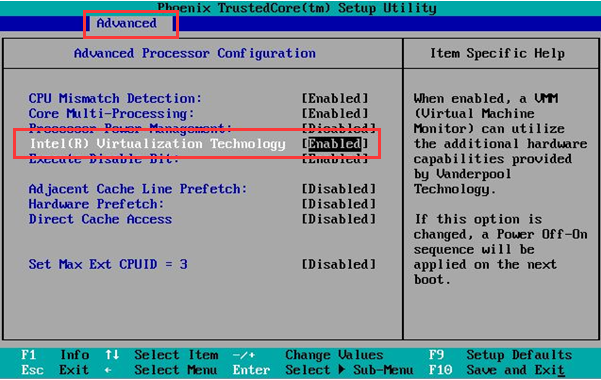

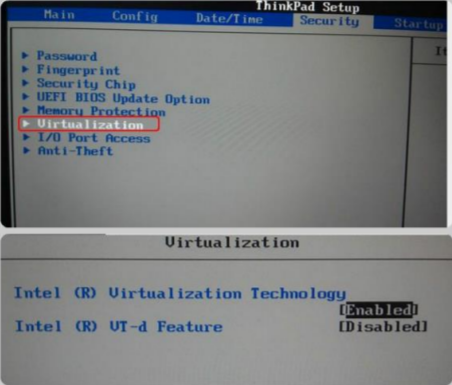

- Enable VT-x

When the HCI runs the virtual machine, the host is required to support the hardware-assisted virtualization technology of Intel VT-x. Therefore, in addition to the CPU supporting VT-x technology, you also need to enable this function in BIOS. If the CPU does not support VT-x, or VT-x is not enabled in the BIOS, the hyper fusion platform cannot be successfully installed.

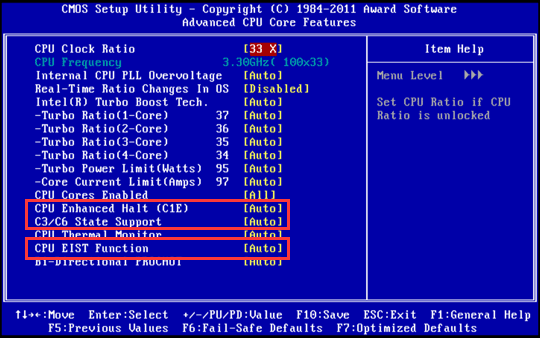

- Turn Off Energy Saving Mode

Turn off the CPU energy-saving mode (disable EIST, CPU Status, and other options). The names may differ according to the hardware (such as PowerPperformance).

| CPU Status | Status interpretation | Status type | Use suggestions |

|---|---|---|---|

| C0 | Operating State | The normal working state of CPU. | —- |

| C1 | Halt | It is recommended to disable CPU sleep states at different levels and depths. | Disable |

| C1E | Enhanced Halt | It is recommended to disable CPU sleep states at different levels and depths. | Disable |

| C2 | Stop Grant/Clock | It is recommended to disable CPU sleep states at different levels and depths. | Disable |

| C2E | Extended Stop Grant | It is recommended to disable CPU sleep states at different levels and depths. | Disable |

| C3 | Sleep | It is recommended to disable CPU sleep states at different levels and depths. | Disable |

| C4 | Deeper Sleep | It is recommended to disable CPU sleep states at different levels and depths. | Disable |

| C4E/C5 | Enhanced Deeper Sleep | It is recommended to disable CPU sleep states at different levels and depths. | Disable |

| C6 | Deep Power Down | It is recommended to disable CPU sleep states at different levels and depths. | Disable |

| EIST | Enhanced Intel SpeedStep Technology |

Automatically adjust the voltage and frequency of the processor. | Disable |

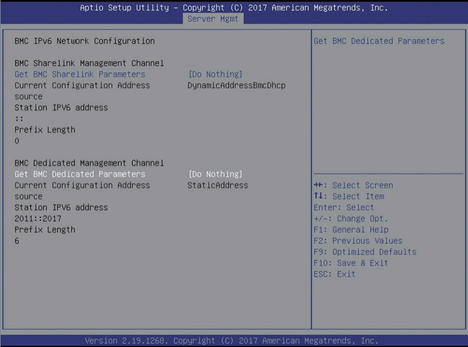

- IPMI Address Configuration

Log in to the BIOS interface, select Server MGMT >BMC Network Configuration >BMC IPv4 network configuration / BMC IPv6 network configuration, and press enter to view the configuration of current BMC IPv4 and BMC IPv6 network parameters.

BMC Sharelink is the first interface that multiplexes IPMI addresses on the NIC. BMC dedicated is the address configured for a separate IPMI interface. When configuring the out-of-band management network, modify BMC dedicated and select manual to write the static address manually.

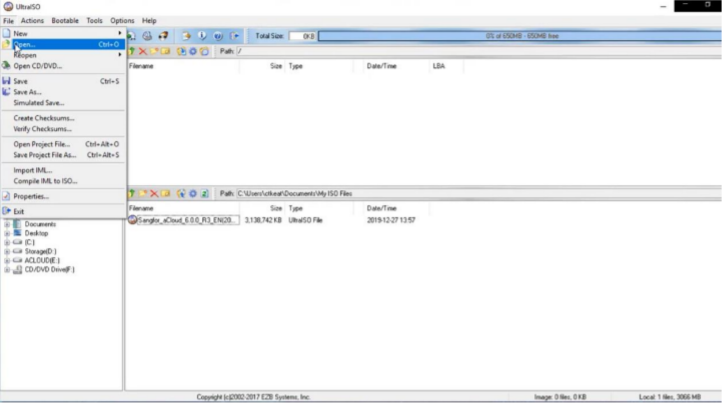

USB Flash Disk Installation

Precautions

-

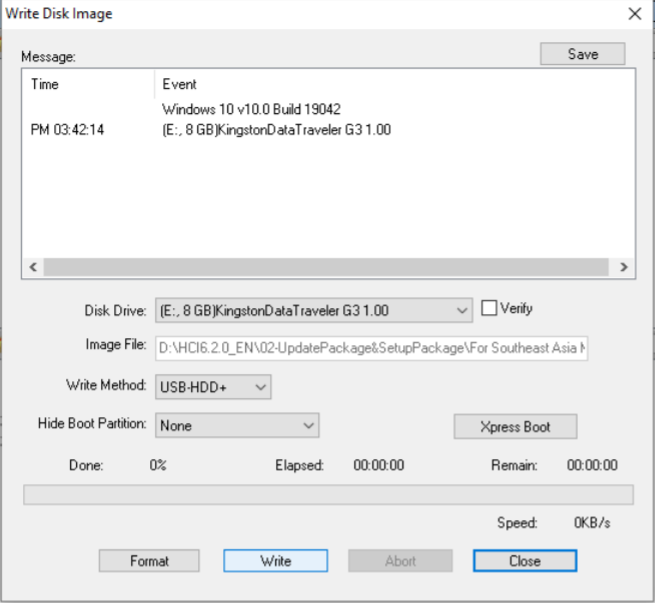

UltraISO should be the latest version.

-

The writing format of the USB drive should be USB-HDD or USB-HDD+. Click Verify to check whether the image file is written correctly.

-

USB drive capacity should be greater than the size of the ISO file.

-

The server configuration and switch configuration shall meet the configuration requirements of the server and switch in Chapter 2.2 Preparation Before Installation.

Steps

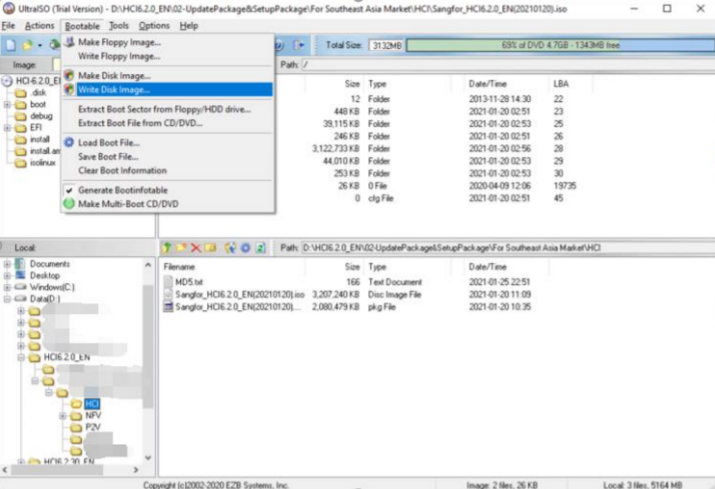

- First, insert the USB flash disk into the personal PC, then open the software UltraISO, select File > Open, and load the ISO file of Sangfor HCI software from the local disk.

-

Select Bootable > Write Disk Image and choose the USB drive into which you want to write the image file. Then, click the Write button and keep others’ settings unchanged.

You can remove the USB drive after the image file is written to the USB drive.

- The target of writing is USB flash disk or optical disc. Be sure to check Verify. The write method (usually USB-HDD+) is determined according to the USB startup type supported by the server.

- Insert the USB flash disk into the third-party server and boot from the USB flash disk in BIOS.

- Enable Virtualization Technology in BIOS, as shown below.

Note:

BIOS settings vary from computer to computer.

Witness Node System Installation (Optional)

Configuration Requirements

Refer to Chapter 2.2.3 Witness Node Configuration Requirements.

Sangfor aServer

Sangfor aServer has been pre-installed Sangfor HCI operating system and configured with a management interface(eth0, default IP address:10.250.0.7/24). To access the Web admin console of the Sangfor HCI platform on a PC. First, configure the PC with an IP address on the same network segment with that management interface and connect it to the eth0 interface on the aServer. Then open your browser and enter https://10.250.0.7/ into the address bar to log in to the Sangfor HCI platform console.

The default username and password are admin. The administrator will be prompted to change the default password upon first login. If the default password has not been changed for one month, the system will force the administrator to change it.

Upon the first login, the administrator will be prompted to modify the default IP address of the management interface, as shown below. If multiple aServers are deployed in the network, the default IP address of the management interface on each aServer needs to be modified, and addresses of management interfaces must be on the same network segment.

Third-Party Server

Description

The witness node can be either a Sangfor aServer or a third-party server. This chapter describes how to install the witness node on the third-party server.

Precautions

- It can be installed by ISO or USB flash disk. For preliminary preparations such as USB flash disk, please refer to Chapter 2.4.2.2 USB Flash Disk Installation.

- If there is a need for cluster expansion in the future, you can also adjust the configuration of the witness node accordingly.

Prerequisites

The hardware configuration of the witness node shall meet the configuration requirements in Chapter 2.2.3 Witness Node Configuration Requirements.

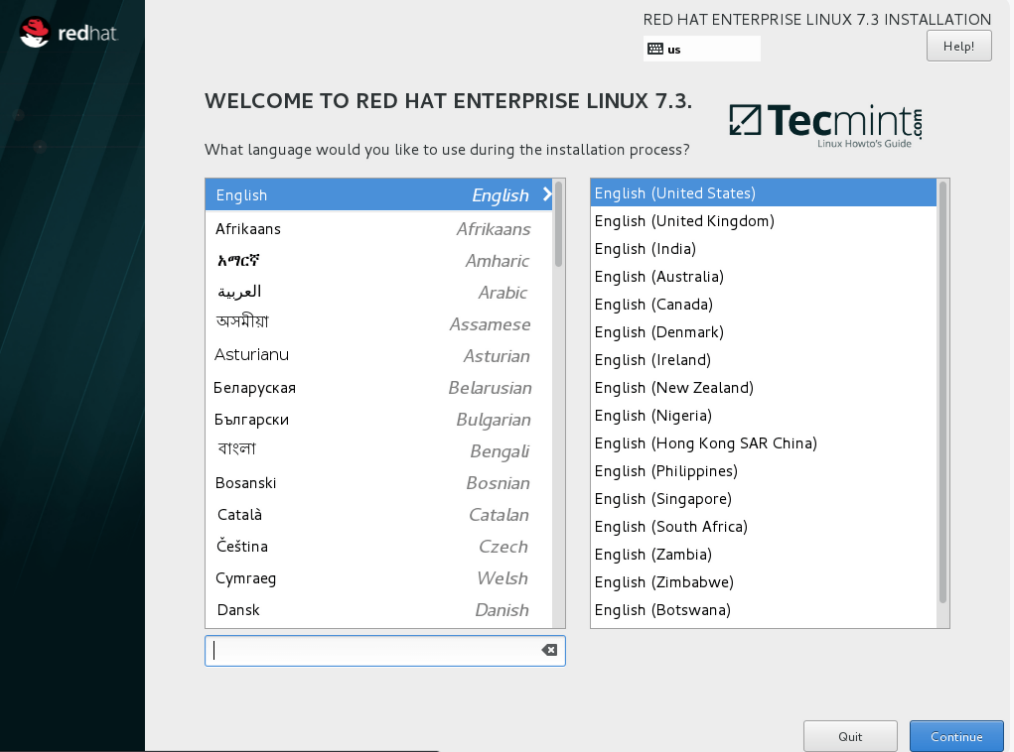

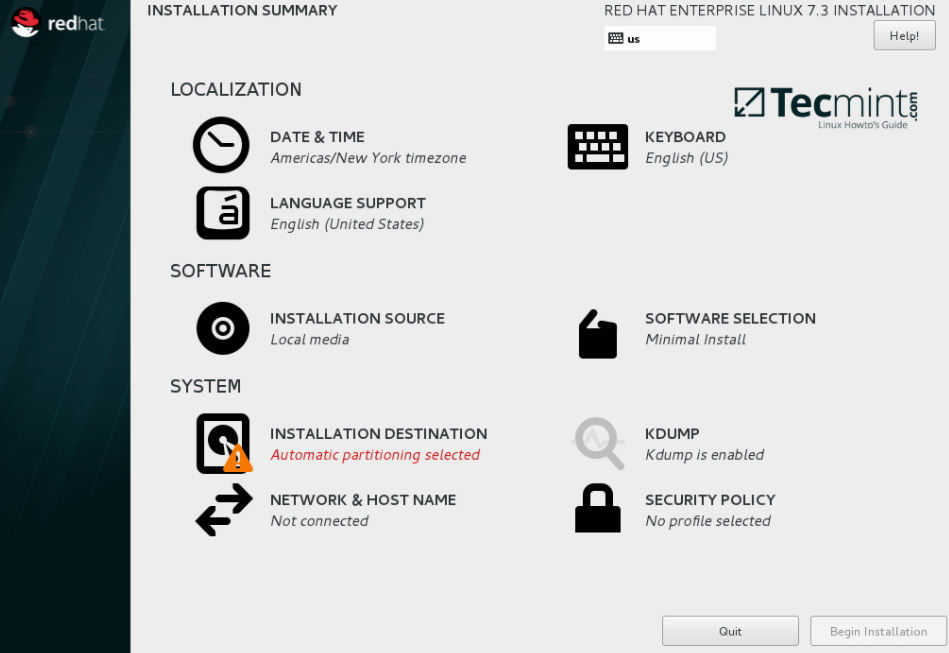

Steps

-

If ISO installation is adopted, load the arbitration installation package and select start from IPMI. If the USB flash disk is used for installation, use the USB flash disk made by the arbitration installation package to start.

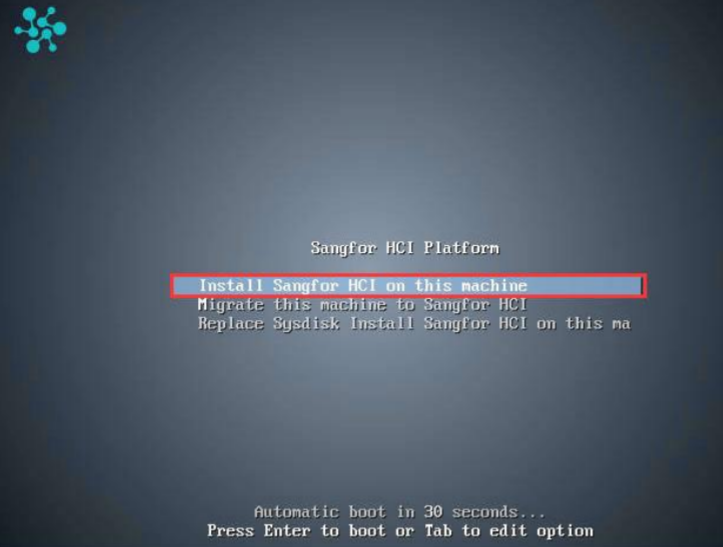

Select Install Sangfor HCI on this machine, and then press ENTER to enter the installation page.

-

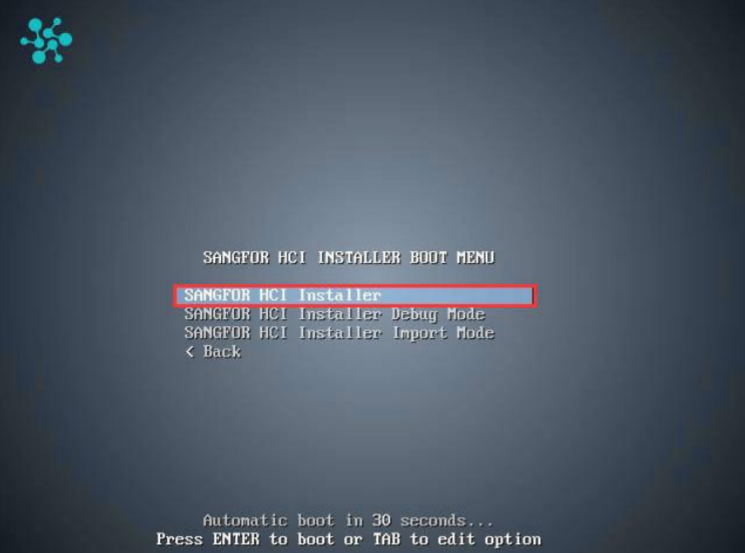

Select Sangfor HCI Installer and press ENTER to begin the installation.

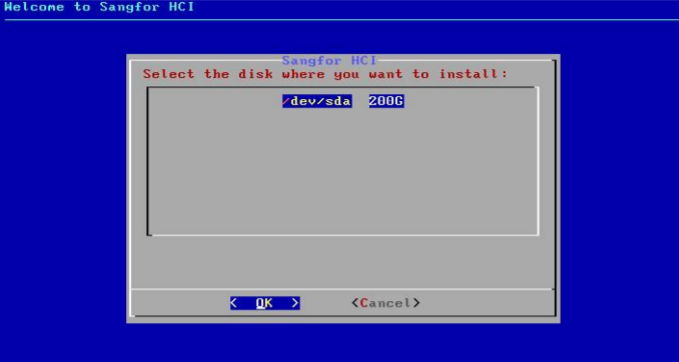

- Select a disk where you want to install Sangfor HCI software and select OK. If there is only one disk, you can select OK directly.

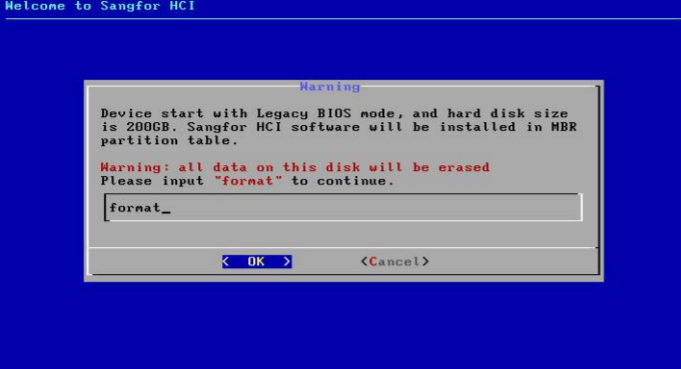

- After selecting the disk, you will be prompted to format the disk. Input format to confirm formatting disk, and select OK to continue the installation. After you select OK, the Disk Speed Tester page will appear. To test disk speed, select Yes. To skip this step, select No.

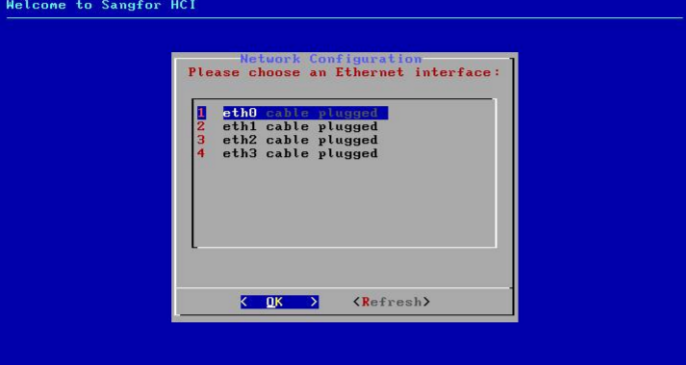

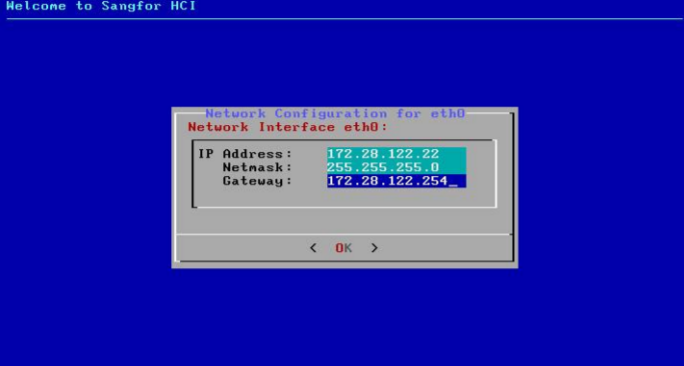

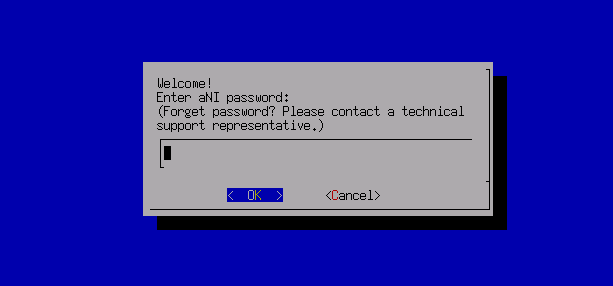

- After installing the Sangfor HCI software, you will be prompted to select an Ethernet interface and configure an IP address for that interface.

- Select an Ethernet interface, set the IP address, netmask, and gateway address, and then click OK.

-

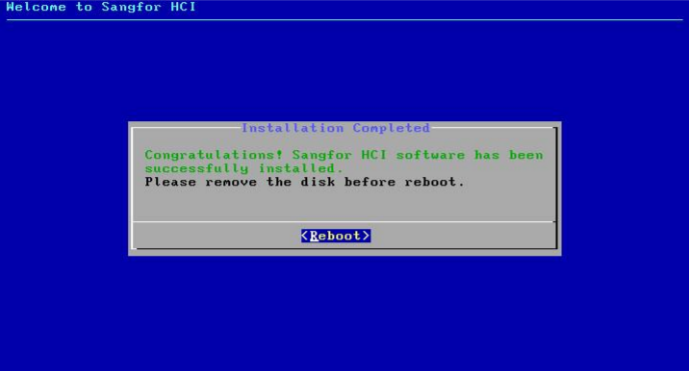

After the selected interface is configured, you can choose whether to continue to configure another interface. Select Yes to return to the network configuration page or No to finish the installation.

-

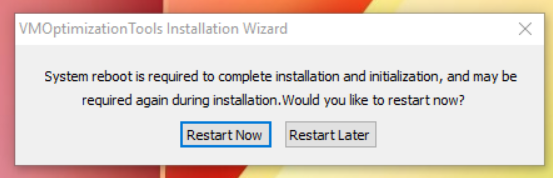

After the installation completes, remove the USB drive and then select Reboot to restart the server.

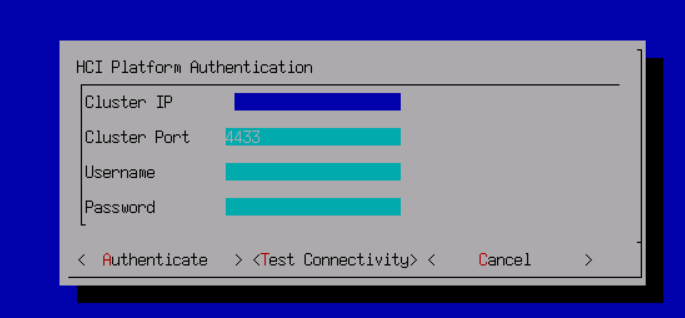

- The browser logs in to the web admin console page of HCI through the URL address: https://

<configured IP>.

Service Packs

Service Packs Settings

Description

Online Service Packs (SP) service can regularly obtain the latest patch information from the online patch platform to ensure the stability and security of the server.

HCI with internet access can directly access the Sangfor online SP center platform to update the latest patch. HCI in the intranet without internet access. If the VM has internet access, it can use the Sangfor proxy VM to access the online SP center. HCI and VM without internet access can use the third-party proxy server to access the online SP center. You can also deploy the on-premises sangfor SP server in the intranet for SP updates.

Precautions

- After configuration, the patch service must test connectivity to ensure that the HCI platform can connect to the online SP center.

- It is not recommended that the HCI management network directly access the online patch service platform as HCI will be exposed to the public network.

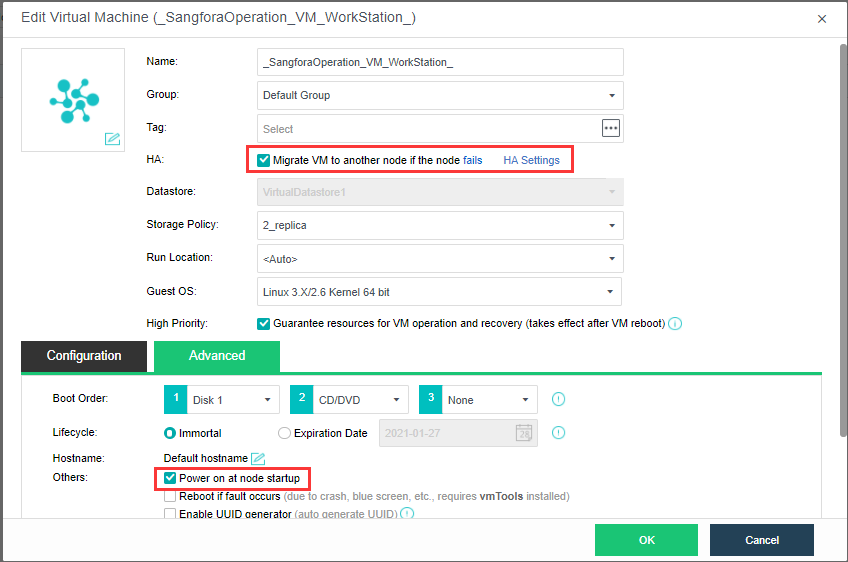

- When using the Sangfor proxy VM to access the online SP center, do not modify the virtual machine name (the default name is: _sangforaoperation_vmworkstation) after importing the VM. Otherwise, the agent service will become invalid.

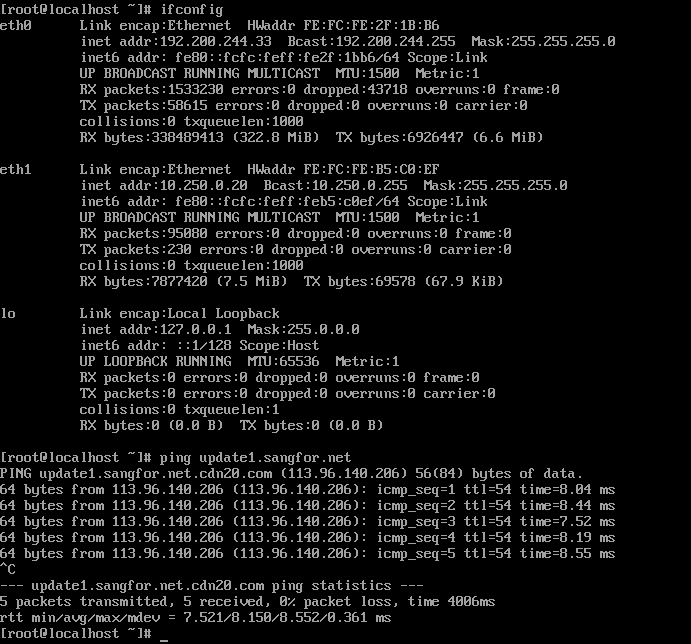

Prerequisites

The customer network needs to allow connection for the online SP center addresses to ensure that the HCI platform can access the online SP center.

Steps

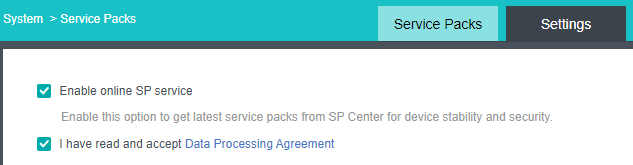

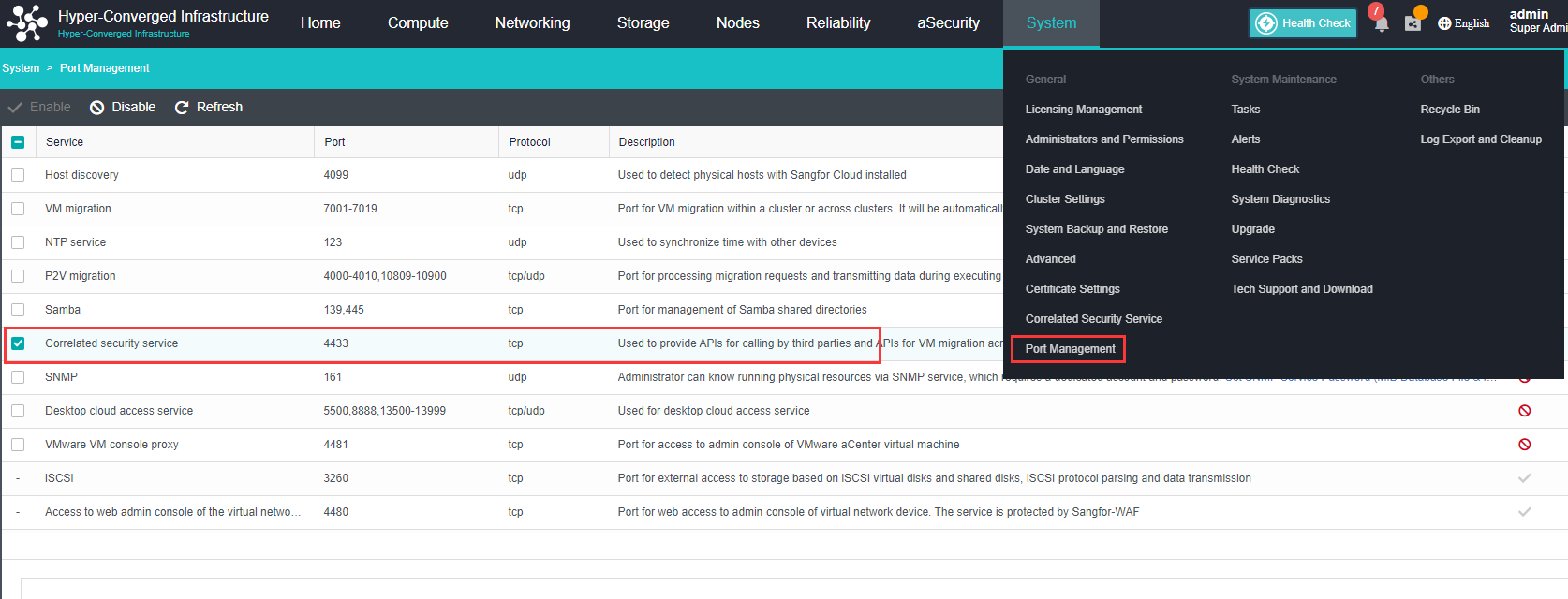

- Navigate to System > Service Packs and click the Settings tab.

- Click on the SP Center Addresses to ensure that the SP center addresses have been allowed connection to the customer network. The table below lists the addresses along with the purpose and requirements.

| SP Center address | Purpose | Requirements |

|---|---|---|

| https://cloudbgcop.sangfor.com | Cloud SP Server | It must be allowed |

| http://update1.sangfor.net | Online SP Center | At least one should be allowed, and it is recommended to allow multiple. |

| http://update2.sangfor.net | Online SP Center | At least one should be allowed, and it is recommended to allow multiple. |

| http://update3.sangfor.net | Online SP Center | At least one should be allowed, and it is recommended to allow multiple. |

| http://121.46.26.221 | Online SP Center | At least one should be allowed, and it is recommended to allow multiple. |

-

Check the Enable online SP Service checkbox.

-

Check the I have read and accept Data Processing Agreement checkbox.

- According to the HCI deployment scenarios and network conditions, select an appropriate online platform communication mode for the setting. (after selecting the corresponding scene for configuration, skip other scenes directly to step 6 for configuration).

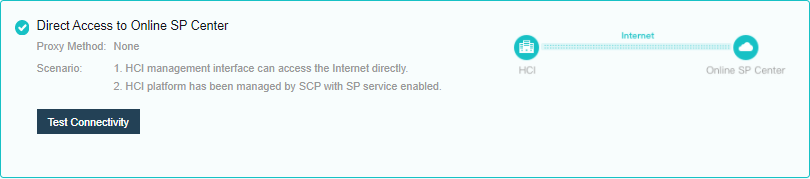

Scenario 1: Access the online patch platform directly.

- Select Direct Access to Online SP Center for communication mode settings, test the connectivity, and save the settings.

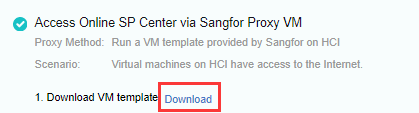

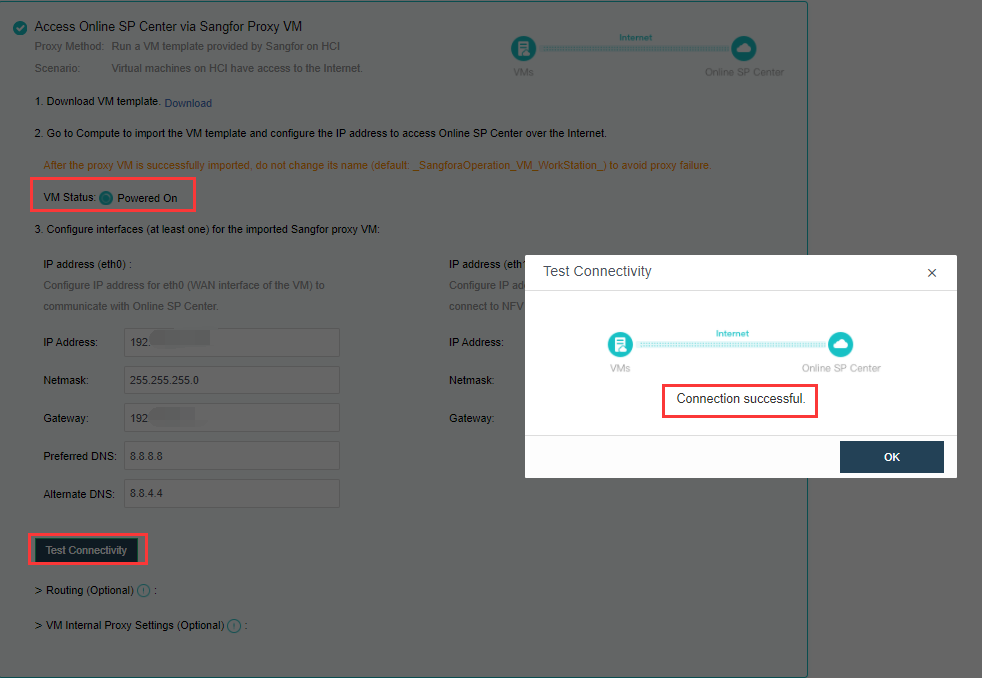

Scenario 2: HCI platform cannot be networked, but networked virtual machines can be deployed on HCI.

-

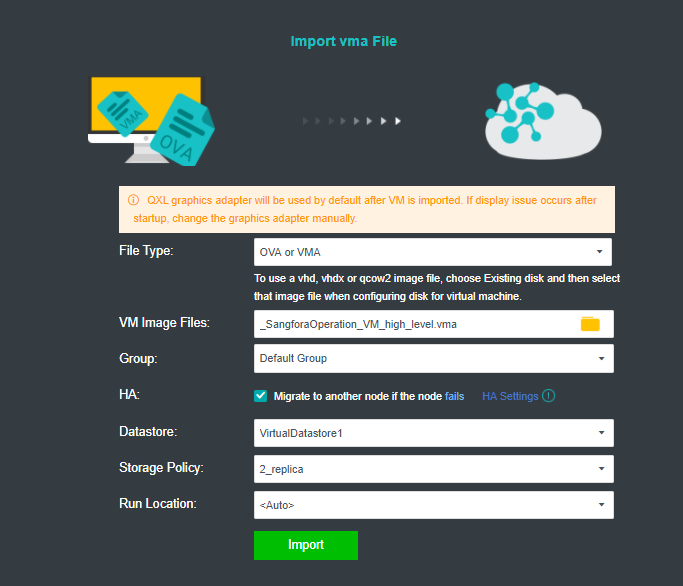

Download the virtual machine template.

-

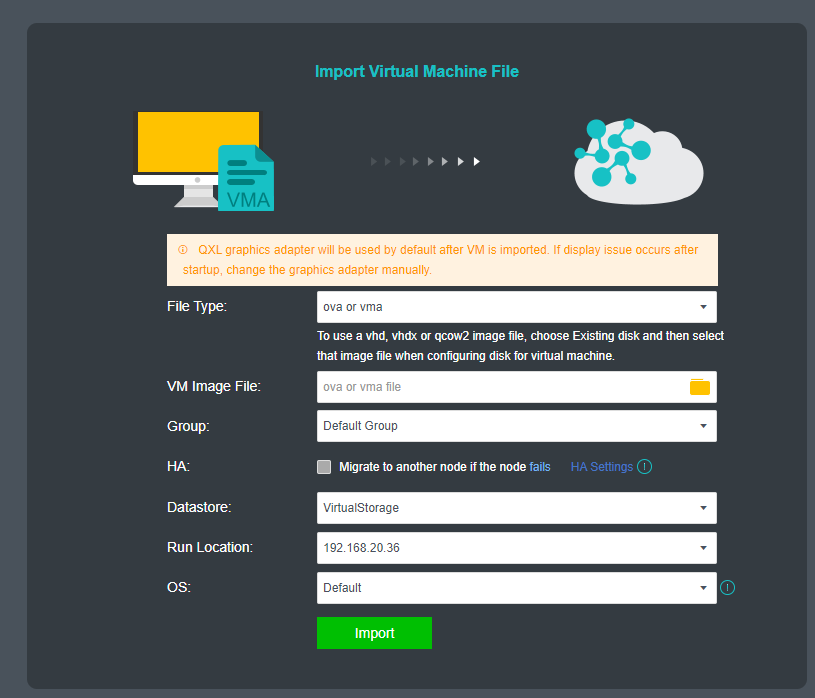

Import the downloaded VM template into HCI. When importing the template, it is recommended to configure it as follows:

a. HA: Enable the HA.b. Datastore: Shared datastore for all nodes, for example, virtual storage.

c. Run location:

<Auto>

- Edit the virtual machine, click Advanced, and confirm that HA is enabled and the Power on at node startup checkbox is checked.

-

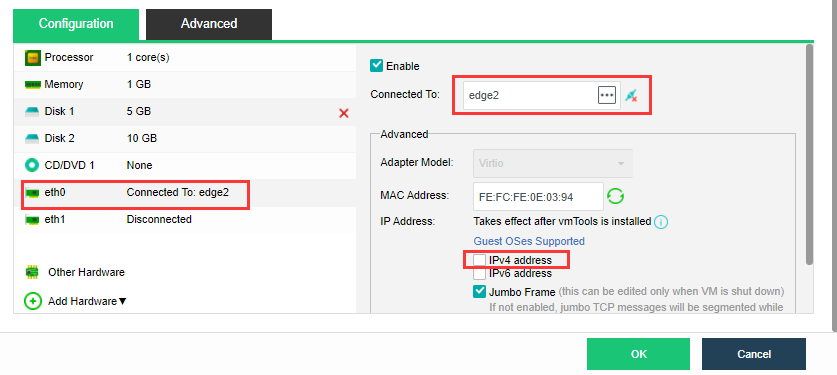

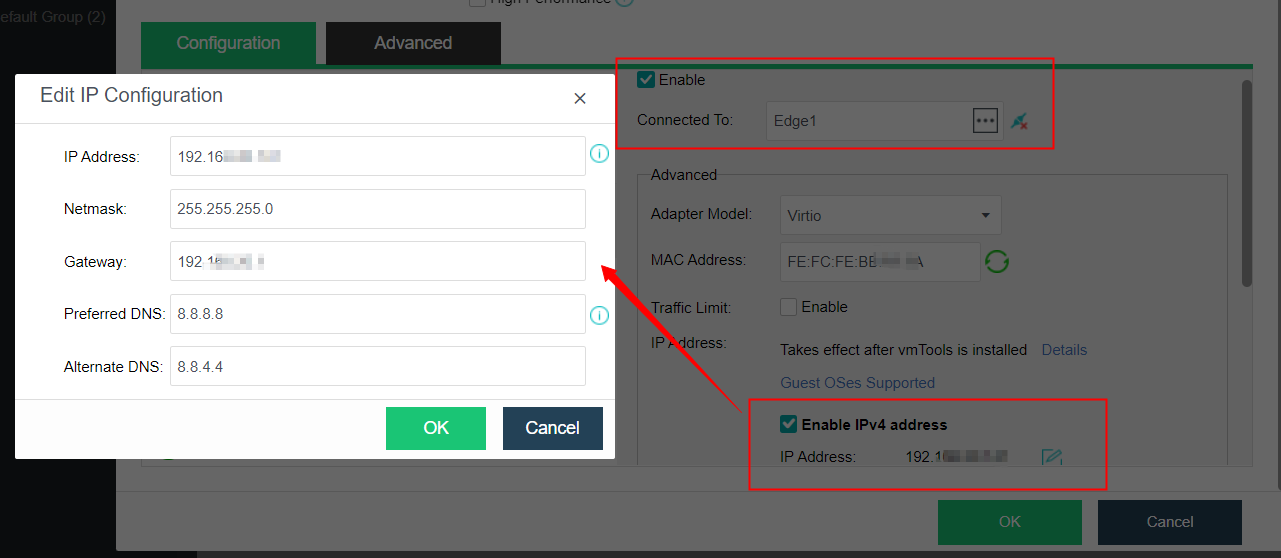

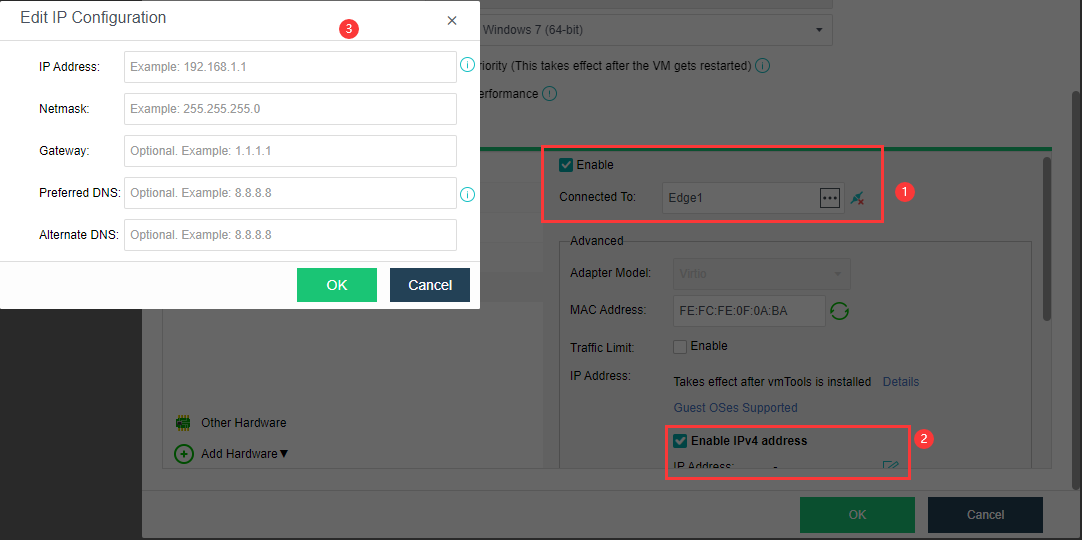

Configure the virtual machine eth0 to connect to the edge, and ensure that the edge can access the external network. Modify the IP settings on the page, configure the planned address to the virtual machine, and start the virtual machine.

-

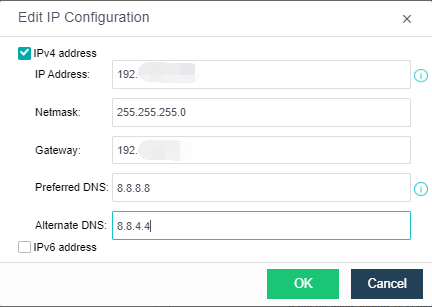

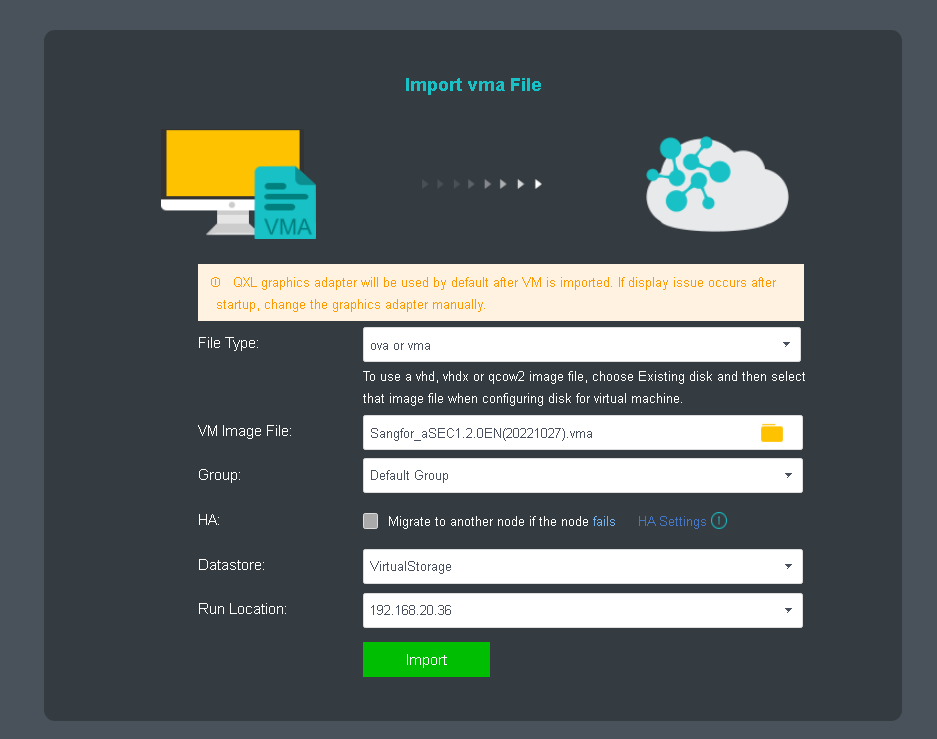

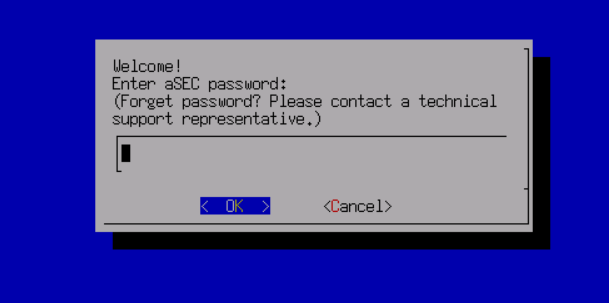

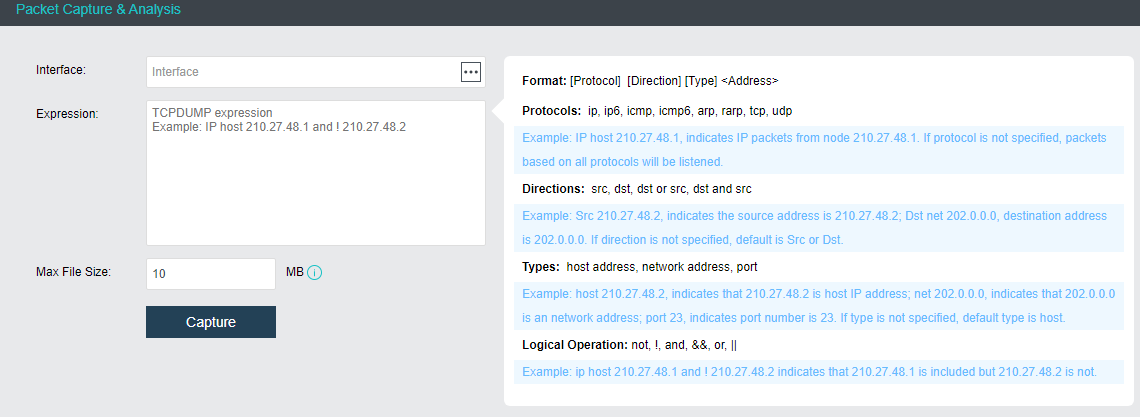

As shown in the figure below, configure eth0’s IP, netmask, gateway (optional), preferred DNS (optional), alternative DNS (optional), test connectivity, and ensure the IP Access to the online SP center. (Eth1 is the internal interface of the proxy virtual machine. If there is an NFV device, you need to configure the eth1 interface to connect to the NFV device. The configuration method refers to the configuration of the eth0 port to connect to the network where the NFV device is located).

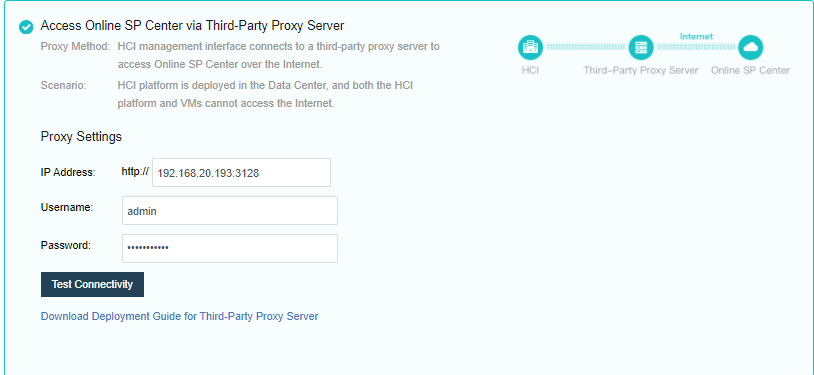

Scenario 3: the HCI platform has no internet connection, but it can access the internet through a third-party agent.

-

The network deployment is shown in the figure above. This scenario selects a third-party proxy server to access the online patch platform.

-

Click Download Deployment Guide for Third-Party Proxy Server. The downloaded content is a compressed package named Proxy_Squid_Deployment_Guidance.rar, containing document descriptions, recommended agent installation packages, and configuration files.

-

Refer to the downloaded configuration guide, install and configure a third-party agent program on the proxy server, and confirm that the server can access the Sangfor online SP server. Deploy two interfaces as shown in the figure below, one can access the Internet to connect to the Sangfor SP server, and the other can access the HCI in the intranet.

-

Fill in the address (IP + port, example: 10.250.0.20:3128) of the agent set in step 2 on the HCI platform. And the authentication user name and password of the agent are set during the deployment of the proxy server.

-

The IP address is the proxy server intranet port IP.

-

The port is the publishing port of the agent service. The default is 3128.

-

Click Test Connectivity, and confirm that the network is connected.

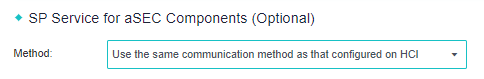

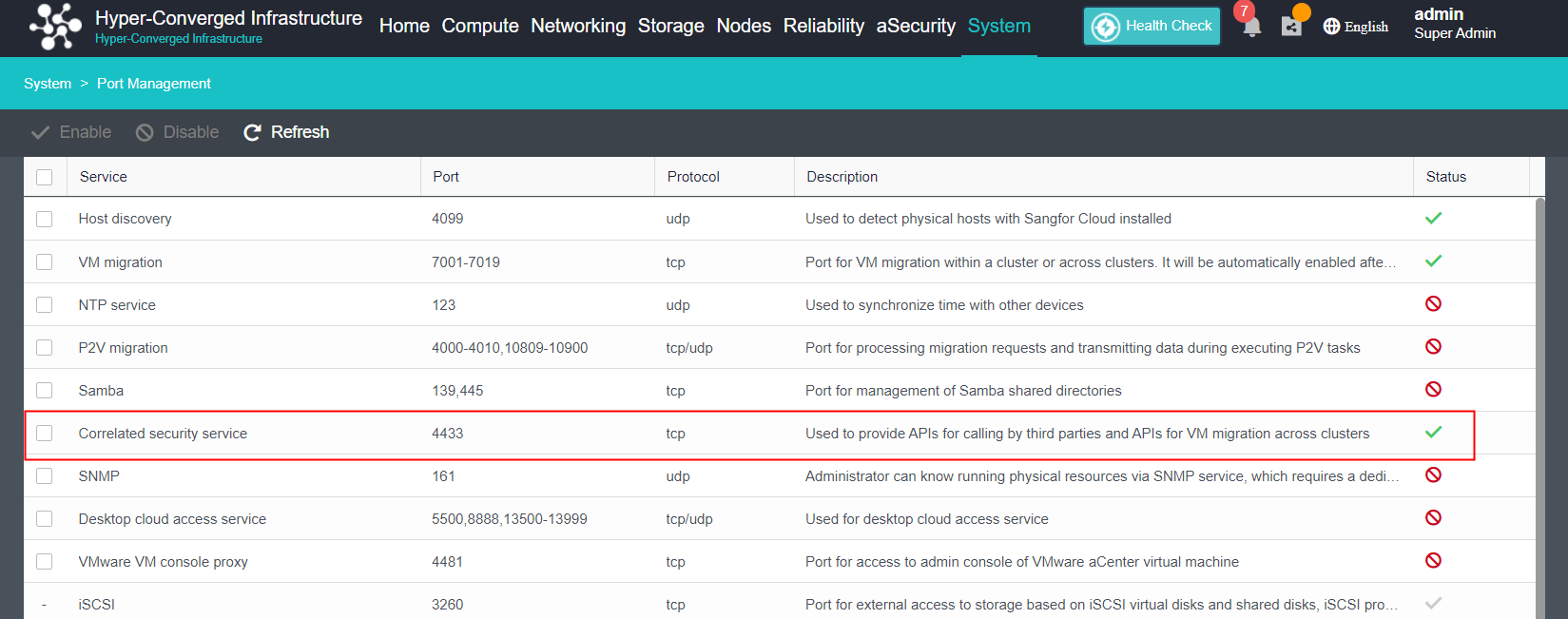

- Set the security component (aSEC) patch service (optional), and select Use the same communication method as that configured on HCI.

- Save the settings by clicking the Save button.

HCI Cluster Initialization

HCI Cluster Initialization Conditions

Whether creating a normal cluster or a stretched cluster, in the cluster initialization process of HCI, the authorization activation, cluster formation, and Overlay Network Interface configuration are the same. You need to set up the network according to the installation networking requirements in Chapter 2.1 Networking Installation Introduction, and then initialize the cluster, including authorization activation, cluster formation, and Overlay Network Interface configuration. Then configure virtual storage according to the actual situation, and create ordinary volumes or Stretched datastores.

HCI Cluster Initialization Has The Following Conditions

- Sufficient licenses need to be purchased according to the number of CPUs of all nodes in the cluster.

- A single node does not support virtual storage. An ordinary cluster requires at least two or more nodes in the cluster. If you need the striping function of virtual storage or the multi-volume function of virtual storage, you need three or more nodes in the cluster.

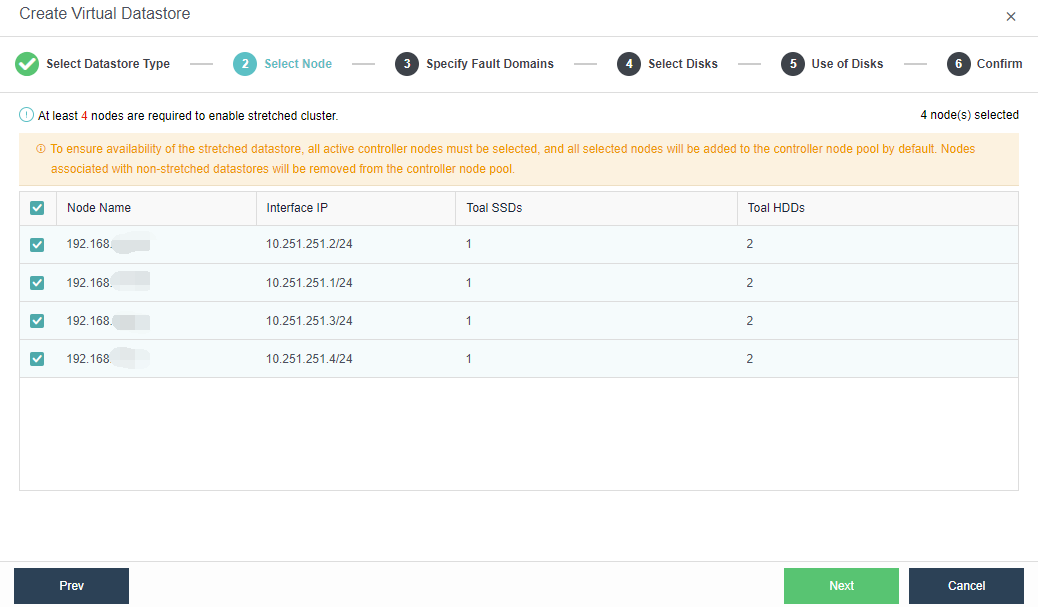

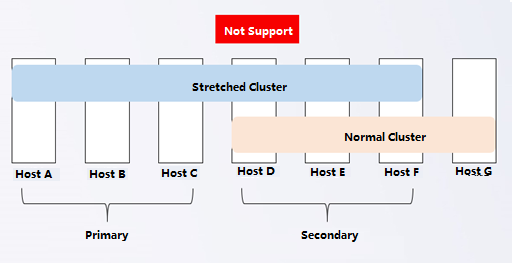

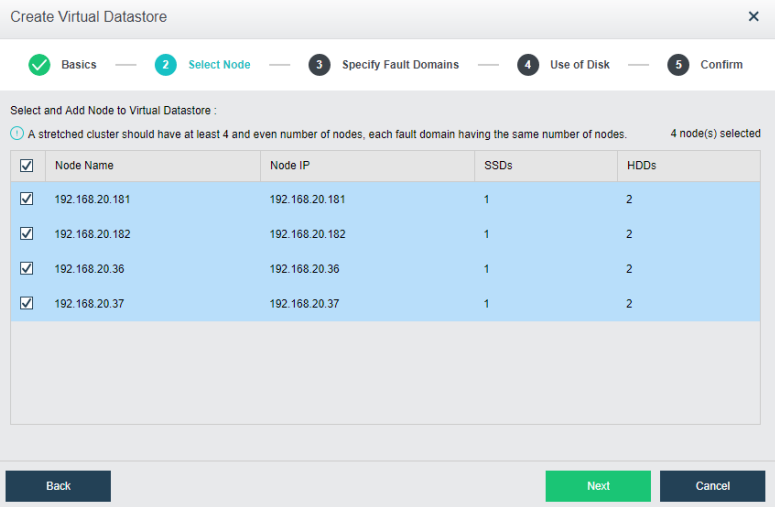

- The stretched cluster starts with at least four nodes, plus one witness node. In the actual deployment, ensure that the node location configured on the page is consistent with the actual physical location. Otherwise, it cannot play the role of machine room-level protection. The two fault domain computer rooms must be connected on the second floor. The link of the witness node does not need to be connected to the second floor but only needs to be reachable by the network.

- If you do not use virtual storage but external storage as the storage of the cluster, the external storage you need to use supports sharing (multiple nodes access at the same time) and VAAI features (mainly ats attributes). Otherwise, shared storage cannot be added.

- The production environment and test environment are recommended for building different clusters independently.

- It is recommended that there should be no more than 24 nodes in a single cluster.

- HCI deployment on a single host is prohibited.

- Licensing Check: If the validity period of the licensing is less than one year, remind the user to renew in time.

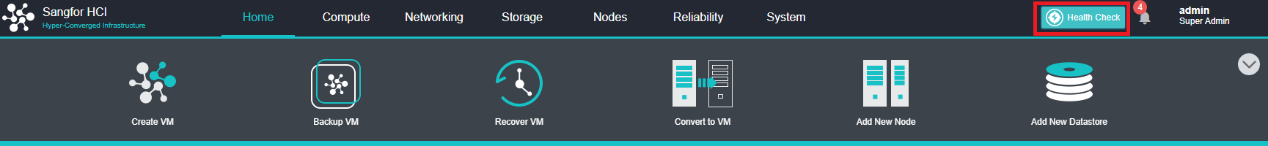

- Health Check: Must run health check after implementation to eliminate all fault risk items.

- aDeploy tool detection: Must run aDeploy tool after implementation to eliminate all fault risk items.

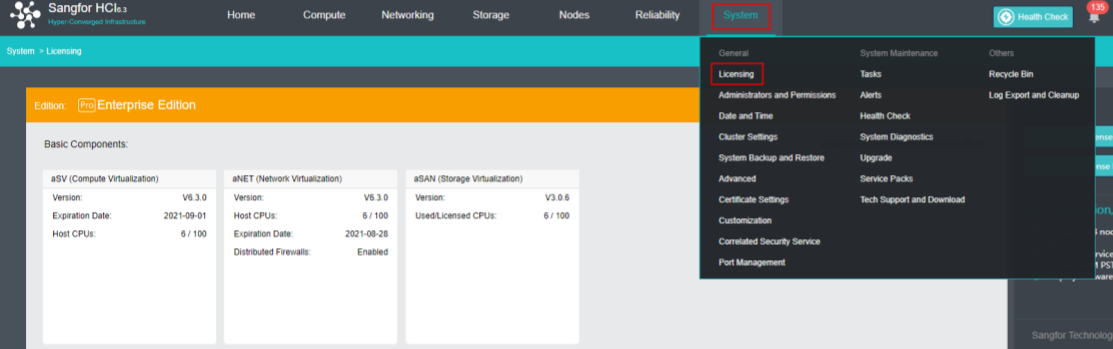

Licensing Activation

Steps

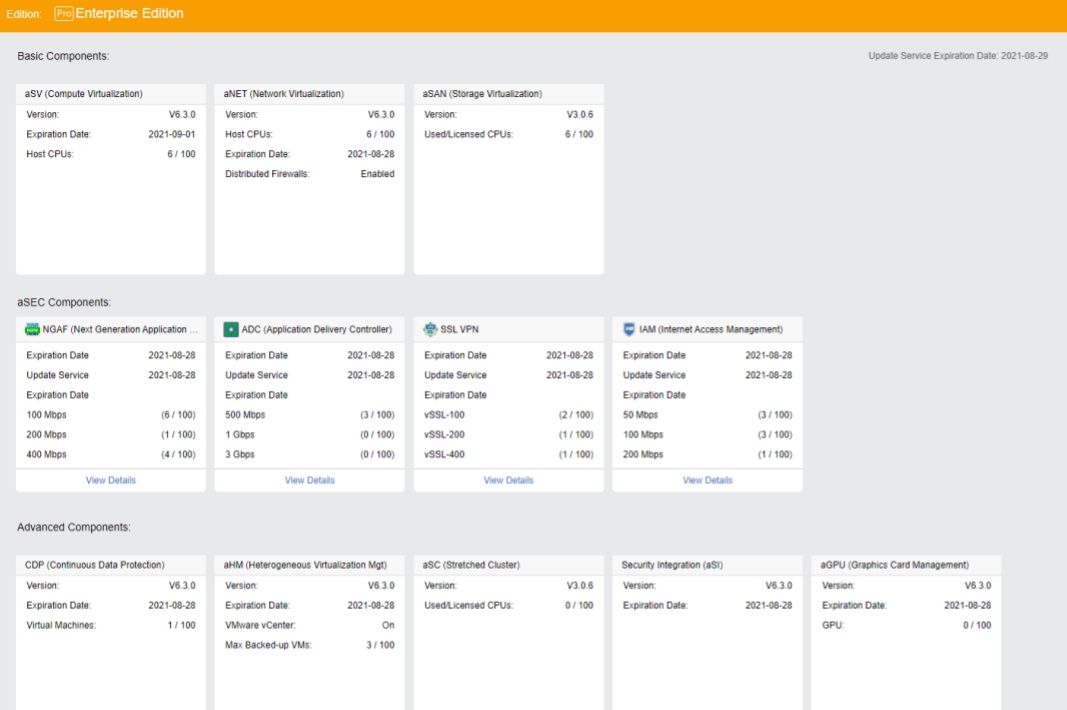

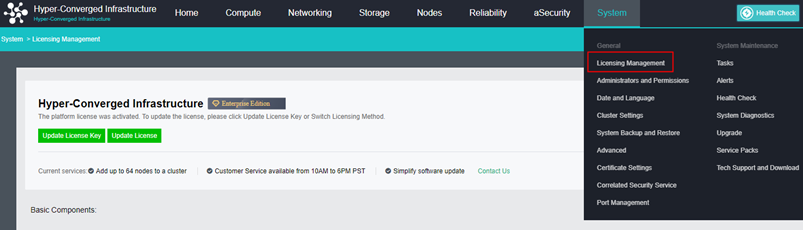

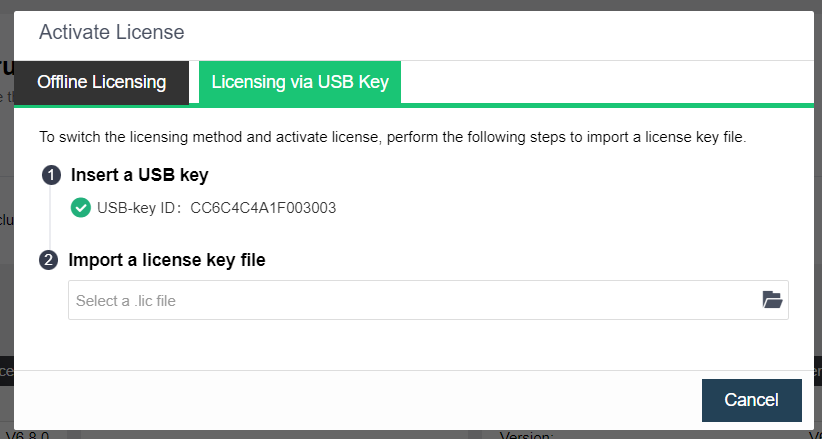

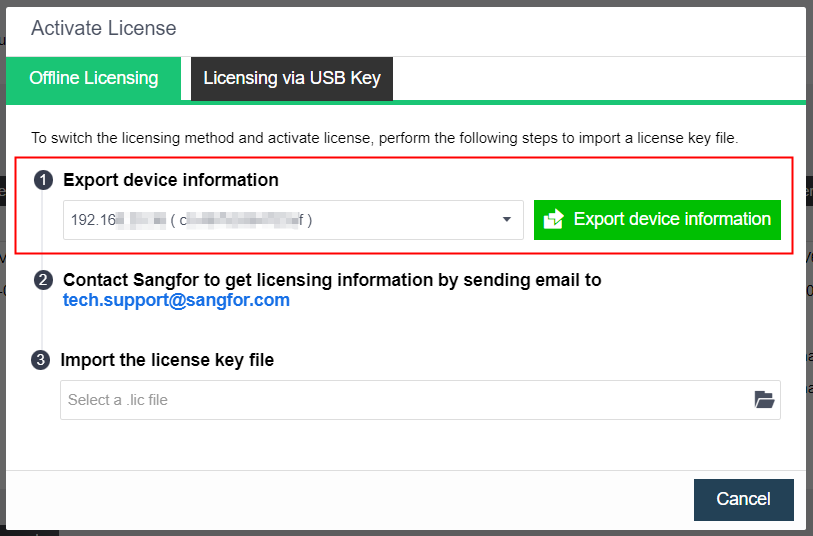

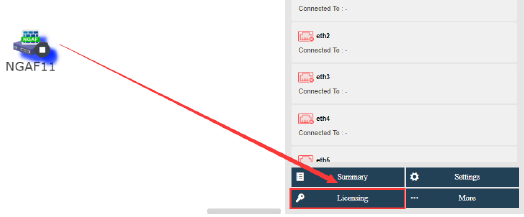

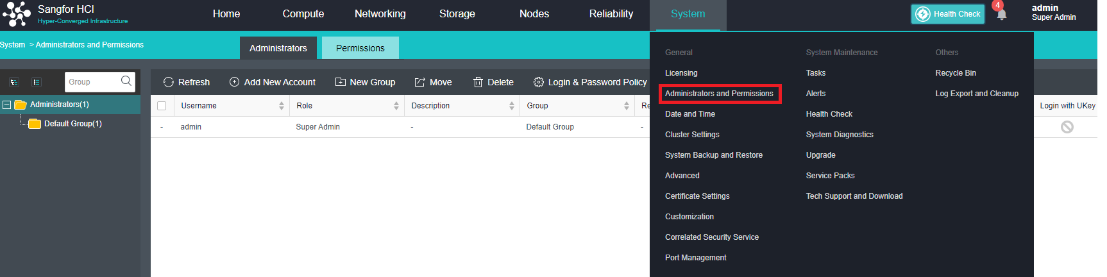

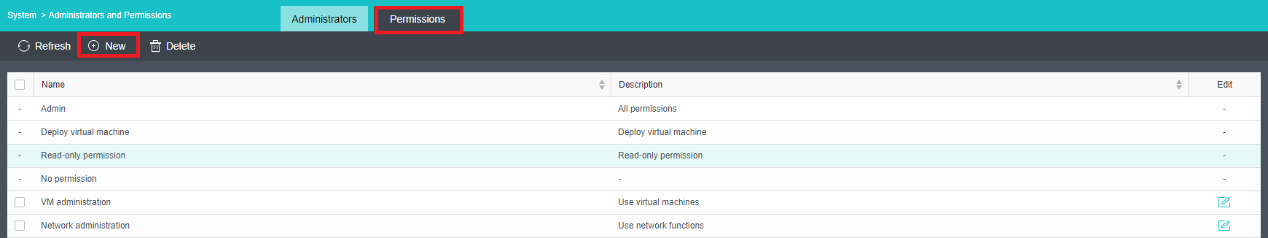

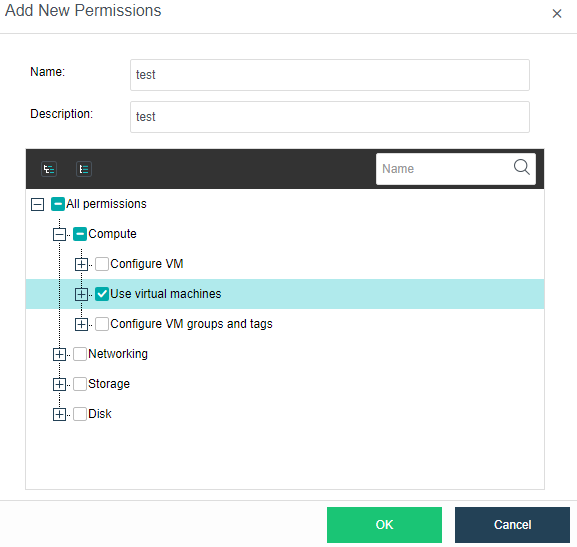

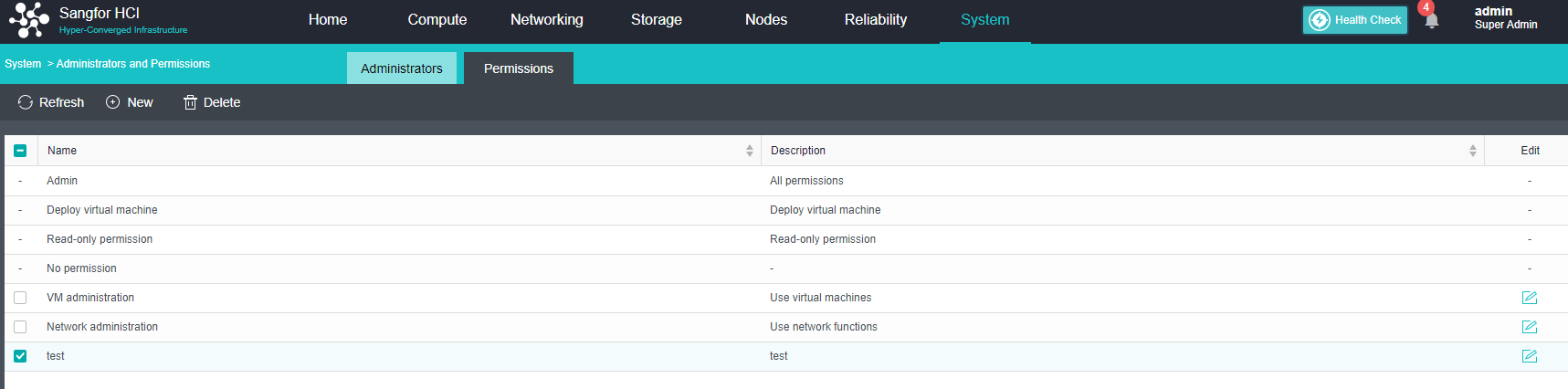

- Insert a USB key containing license key information into the cluster controller, and then go to System > General > Licensing, as shown below:

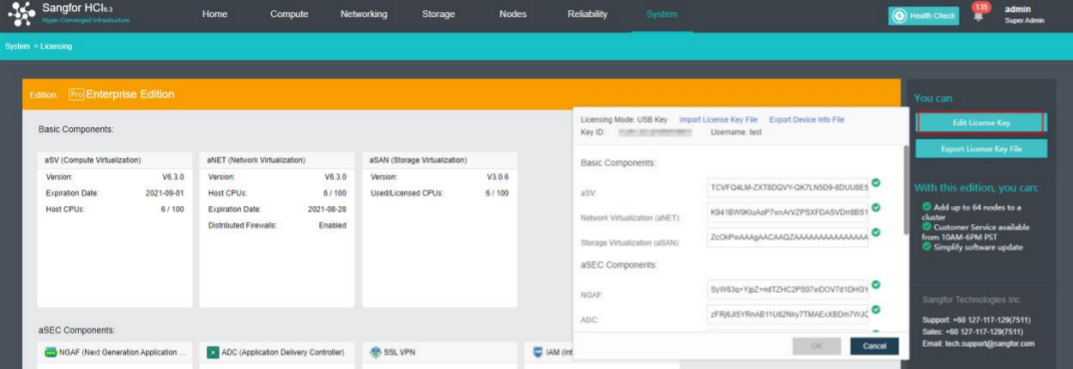

- To input the license key, navigate to Edit License Key > Import License Key File.

- After clicking OK, you can check the license information on the left panel to determine whether the license key is activated successfully, as shown below:

Cluster Formation

Configure Cluster IP

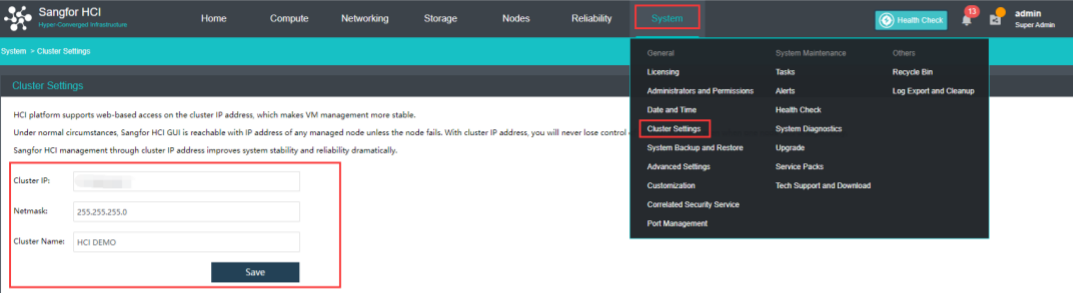

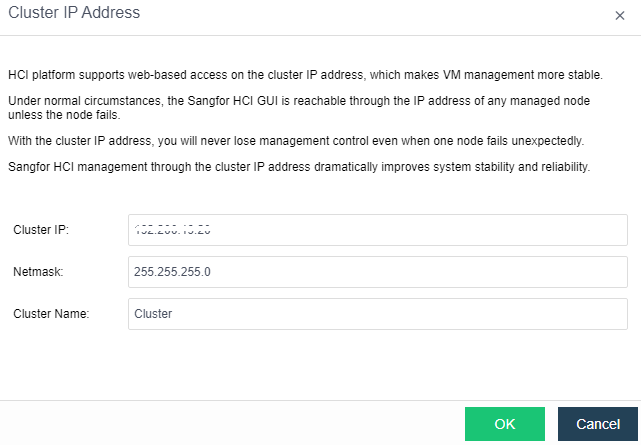

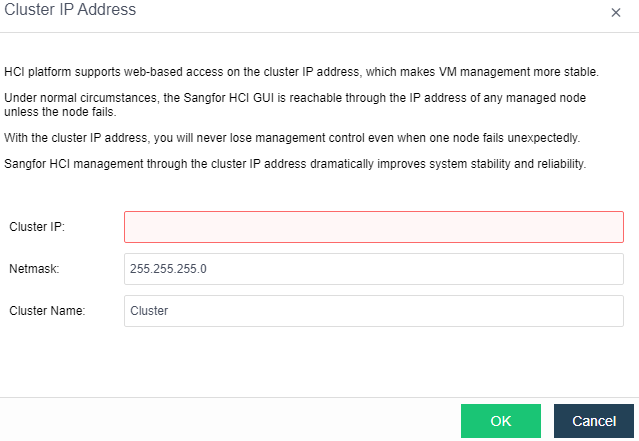

Cluster IP manages virtual machine resources through cluster IP after the node is offline and configures cluster IP through System > General > Cluster Settings.

Note:

Note that cluster IP address and node NIC address cannot be the same, or it will result in IP address conflict.

Configure Cluster Time

Steps

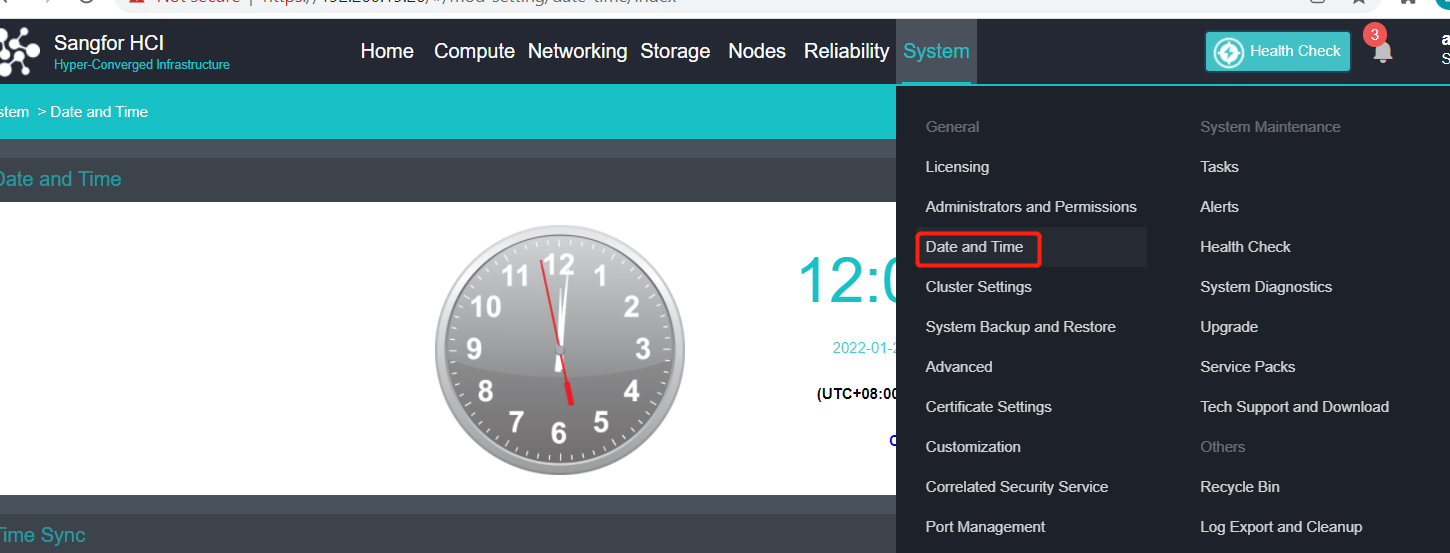

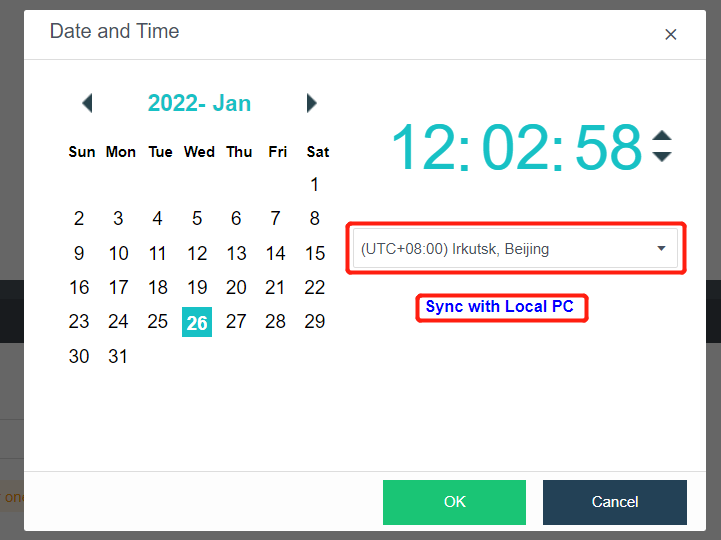

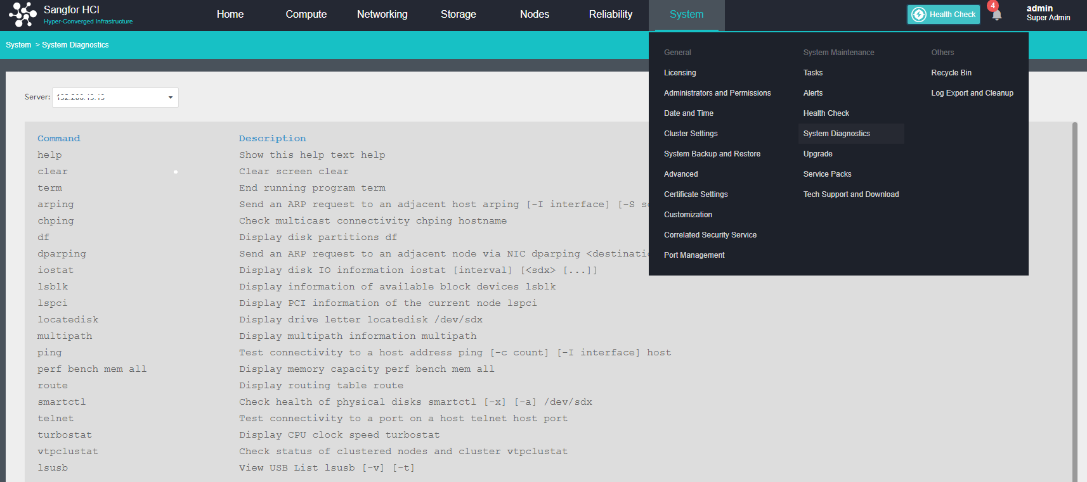

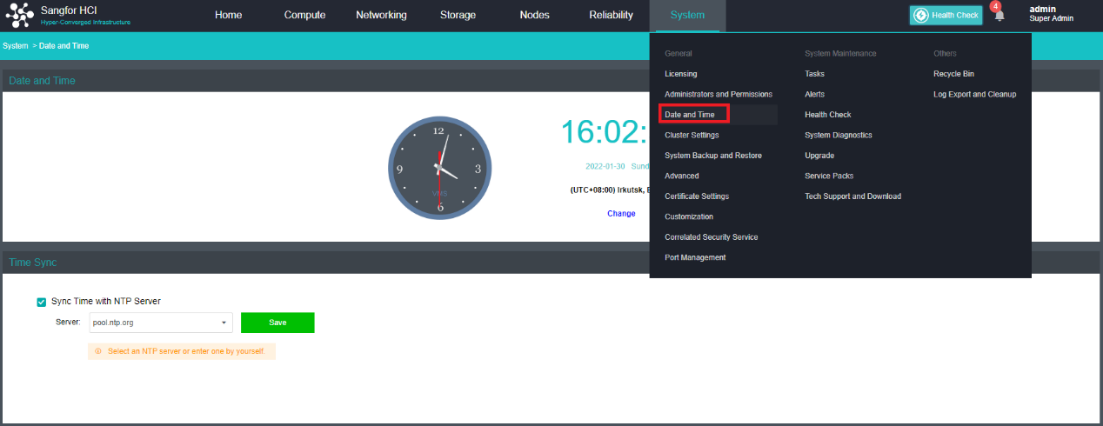

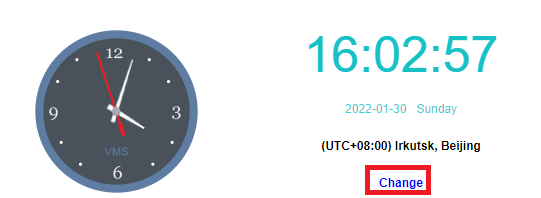

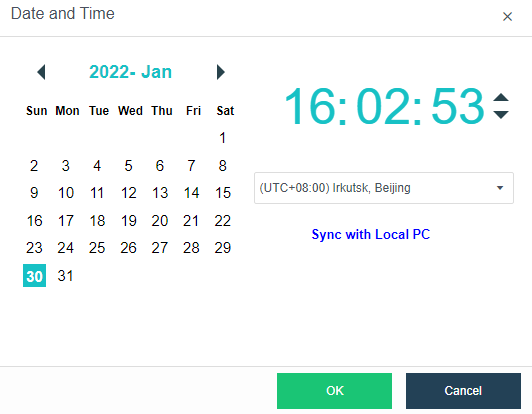

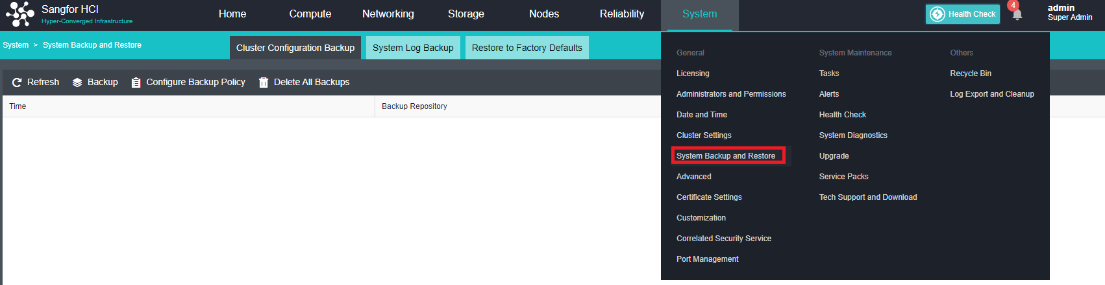

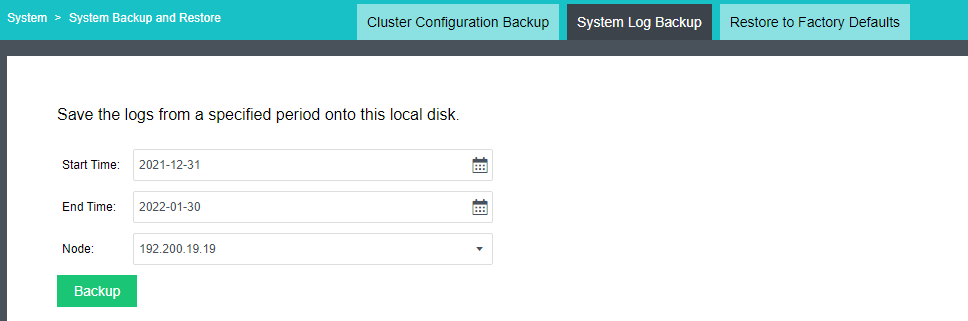

- Click System > Date and Time in the console to enter the page to compare whether the HCI cluster time is accurate. If the time is inaccurate, click Change to enter the platform time setting page.

- To modify the cluster time, you can fine-tune the time by clicking the up or down arrow or configure the platform time by obtaining the local computer’s time. You can also modify the time zone of the platform.

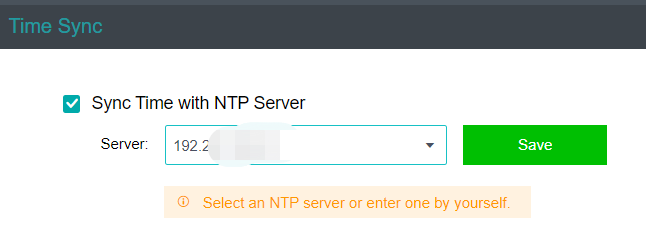

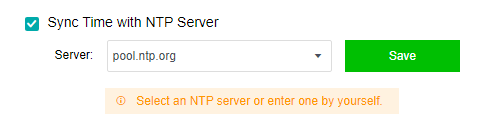

- If the platform time is synchronized with the internal NTP server, you only need to check the Sync Time with NTP Server checkbox, modify the server’s IP address, and click Save.

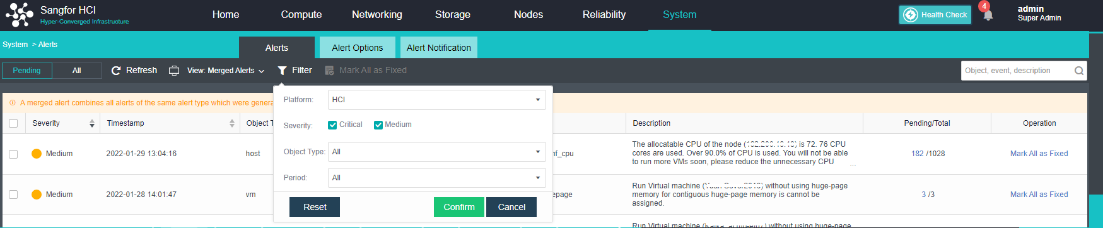

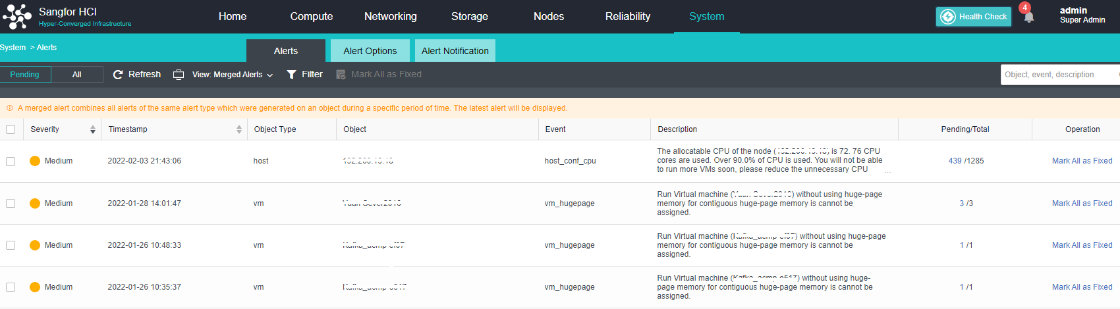

Configure Alert Notification (Optional)

Steps

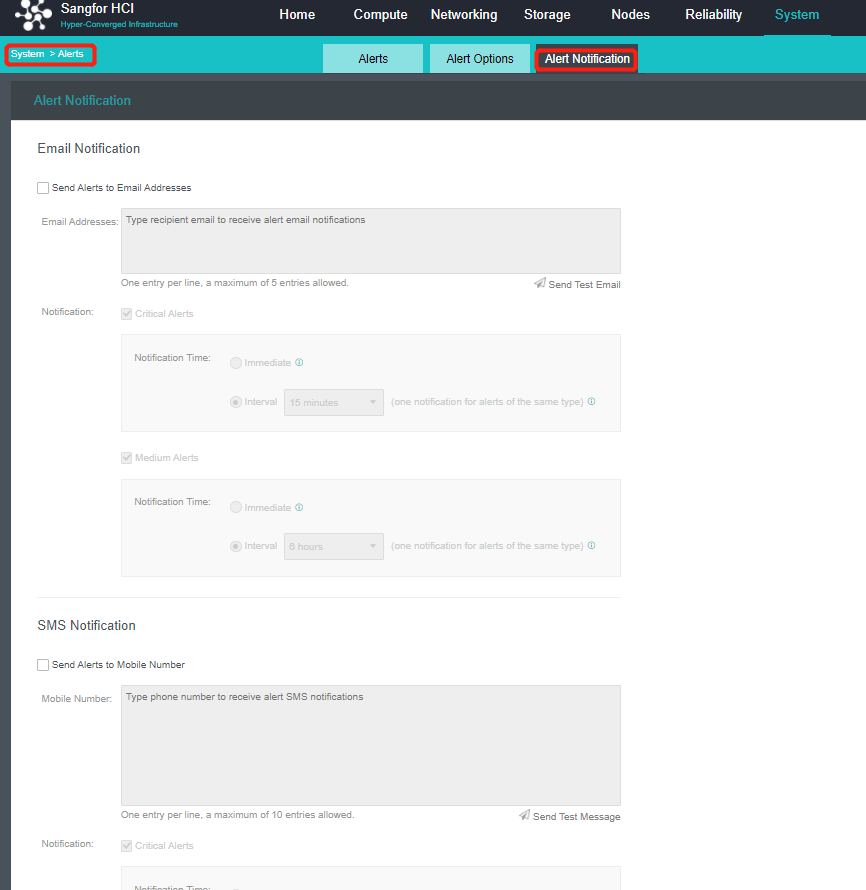

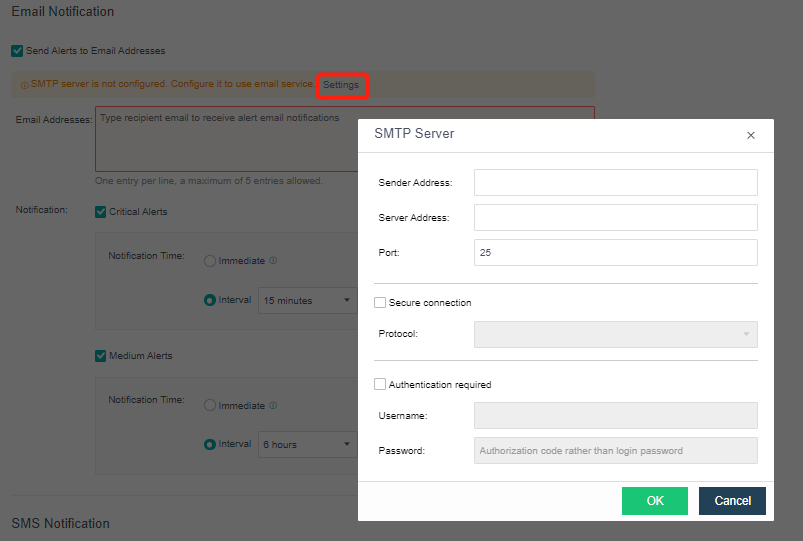

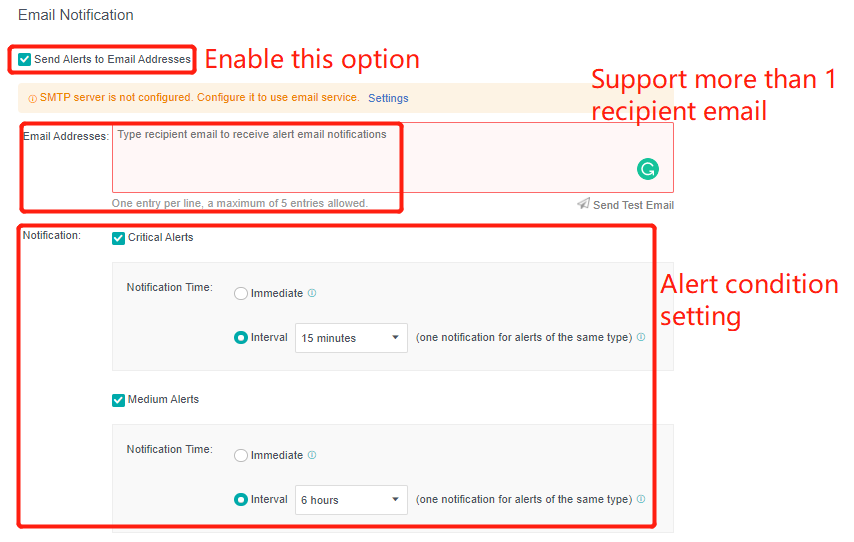

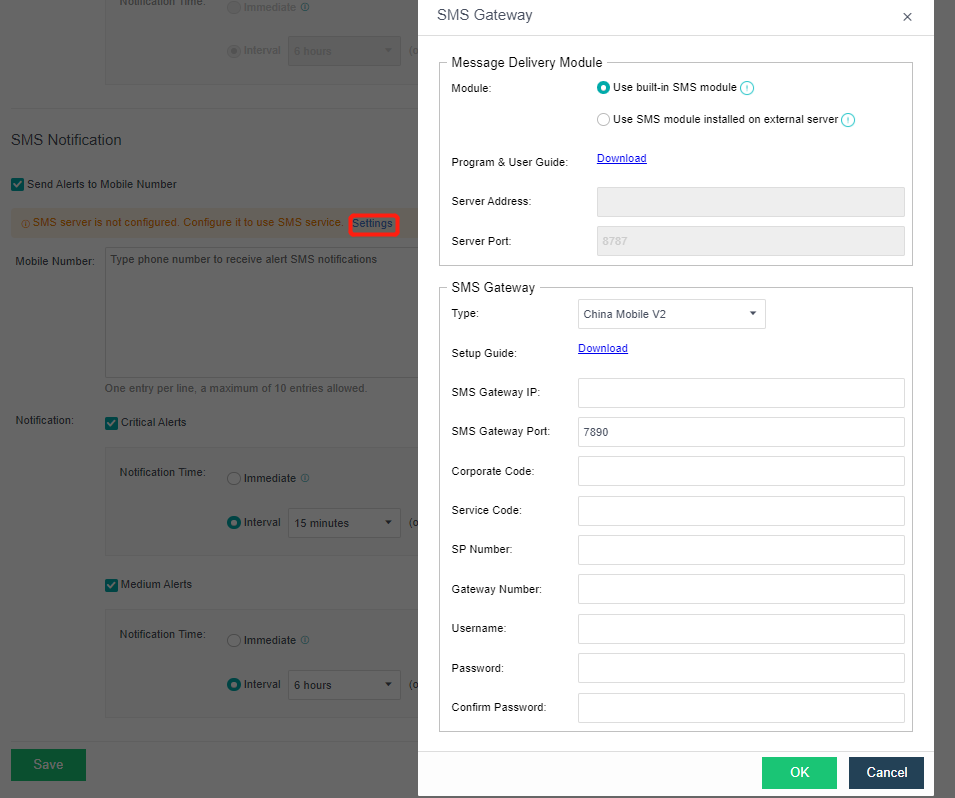

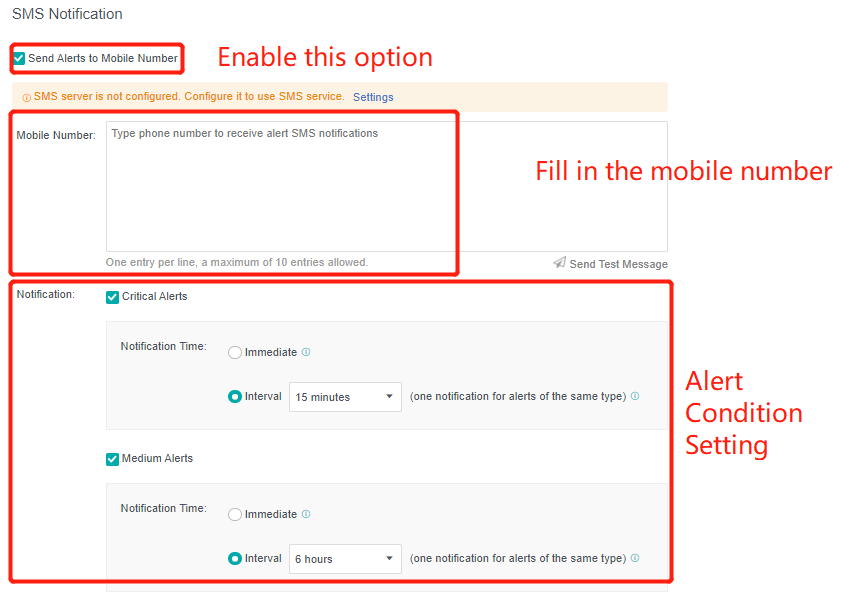

- Enter the configuration interface: open the console and enter System > Alert > Alert Notification. Check the Send Alerts to Email Addresses or Send Alerts to Mobile Number checkbox as required. Before setting, you need to set up a mail server or SMS server.

- Send to the specified mailbox: Check the Send Alerts to Email Addresses checkbox. If the mail server is not configured, click Setting. If the configuration already exists and needs to be modified, click Edit. Fill in the configuration information of the Mail server and click OK to save the settings. Fill in the email address that can be delivered by the mail server as required, set the condition for triggering the alarm notification as needed, and click Save to complete the configuration.

- Send to specified mobile phone number: check the Send Alerts to Mobile Number checkbox. If the SMS server is not configured, click Setting. If the configuration already exists and needs to be modified, click Edit. Fill in the configuration information of the SMS server and click OK to save the settings. When there is a problem with the SMS server configuration, you can download the guidance document by clicking the Download button. Fill in the mobile phone number of receiving SMS as required, set the conditions for triggering alert notification as required, and click Save to complete the configuration.

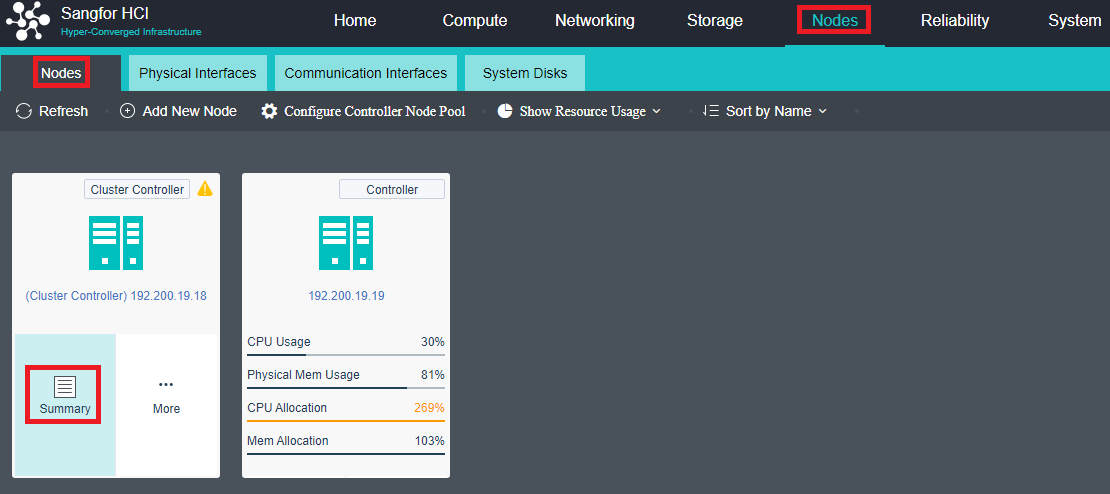

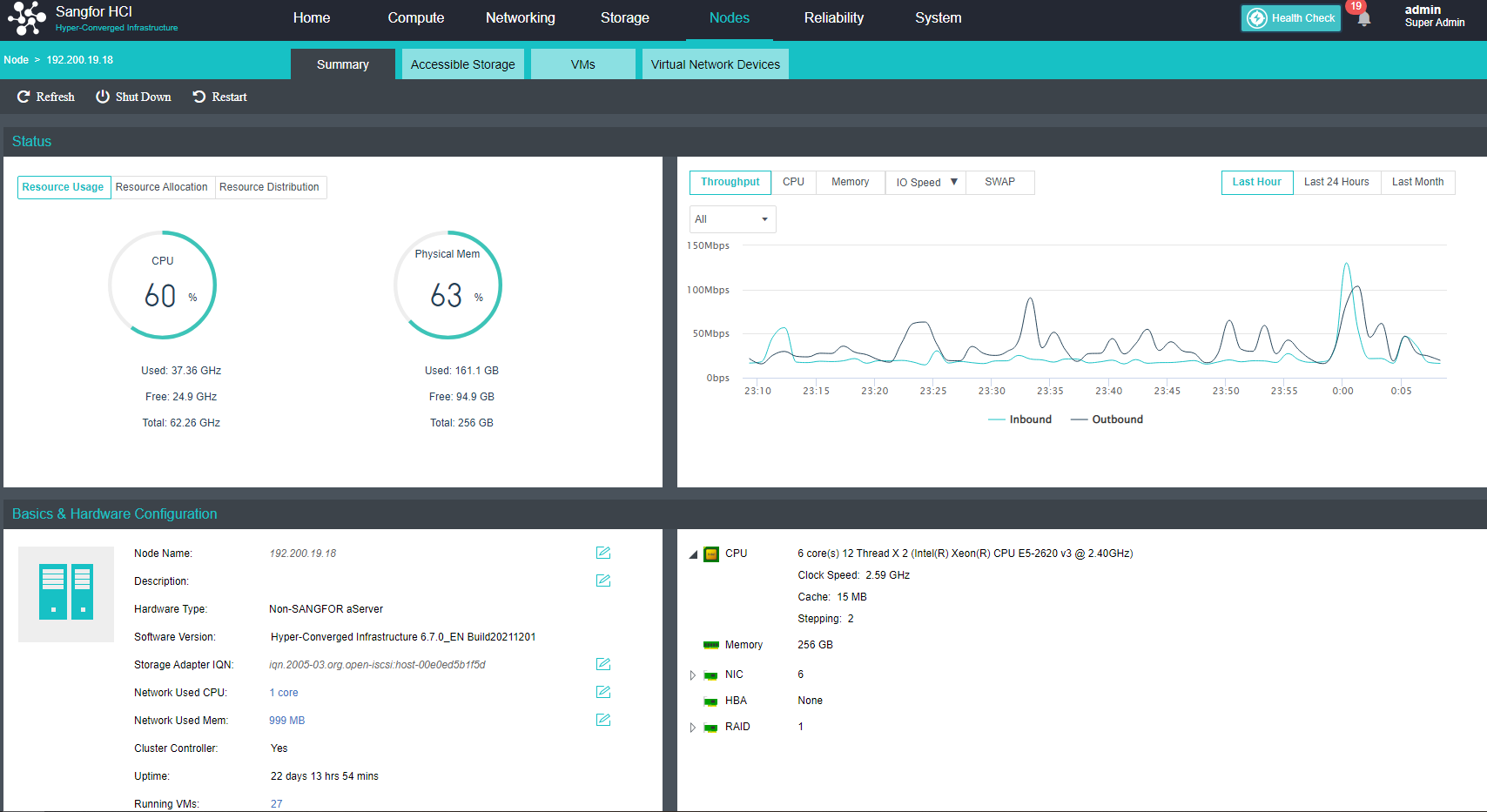

Add Node

Precautions

- For a cluster size of more than 24 nodes, it is recommended to use the hardware with good performance as the cluster controller of the control node.

- When two nodes are expanded to more than three nodes, the virtual machine must be shut down because an arbitration mechanism needs to be established.

- The first added node within the three nodes must be a control node and in the control node pool. Other hosts can choose whether to join the control node pool.

Steps

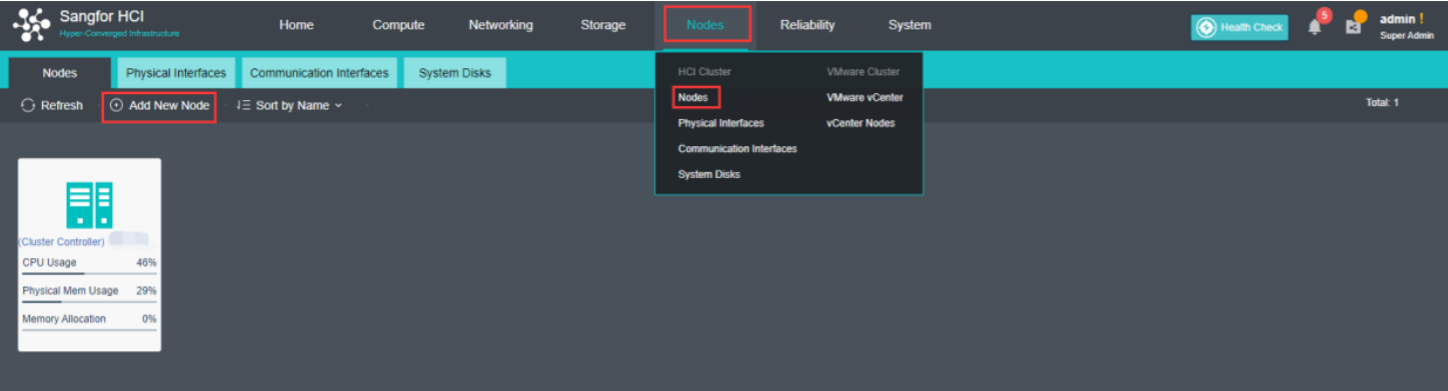

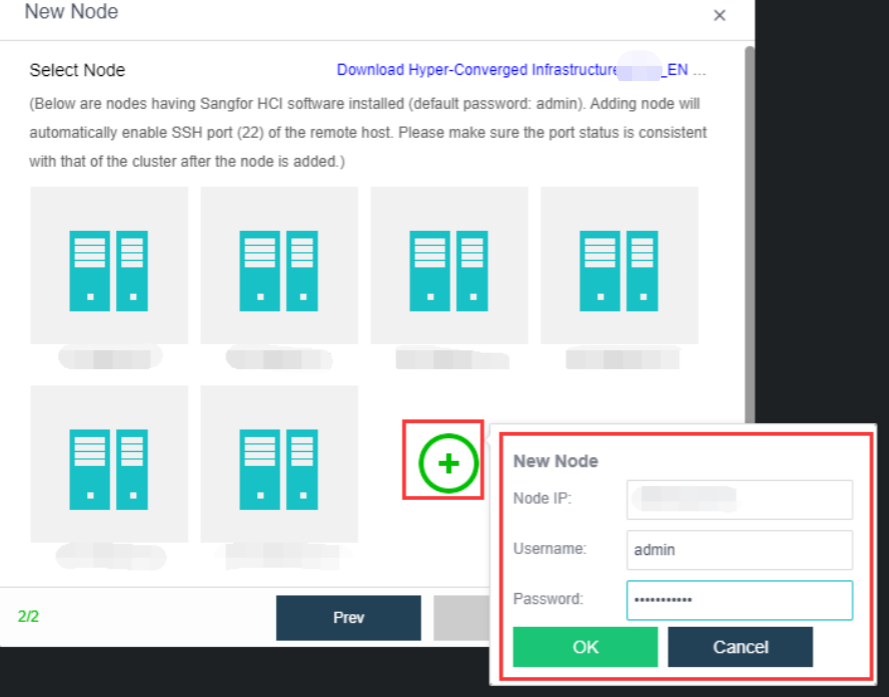

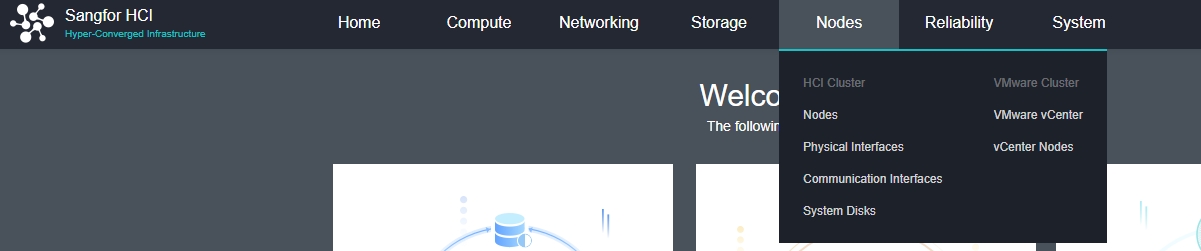

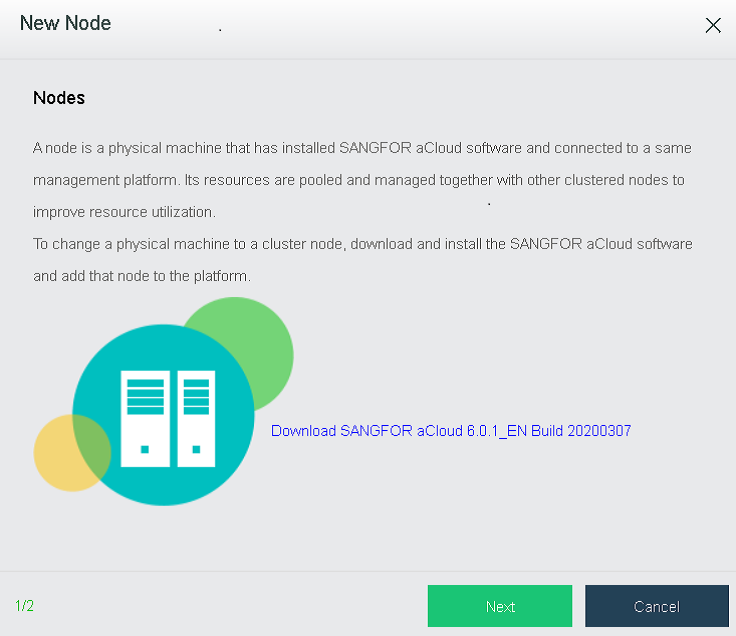

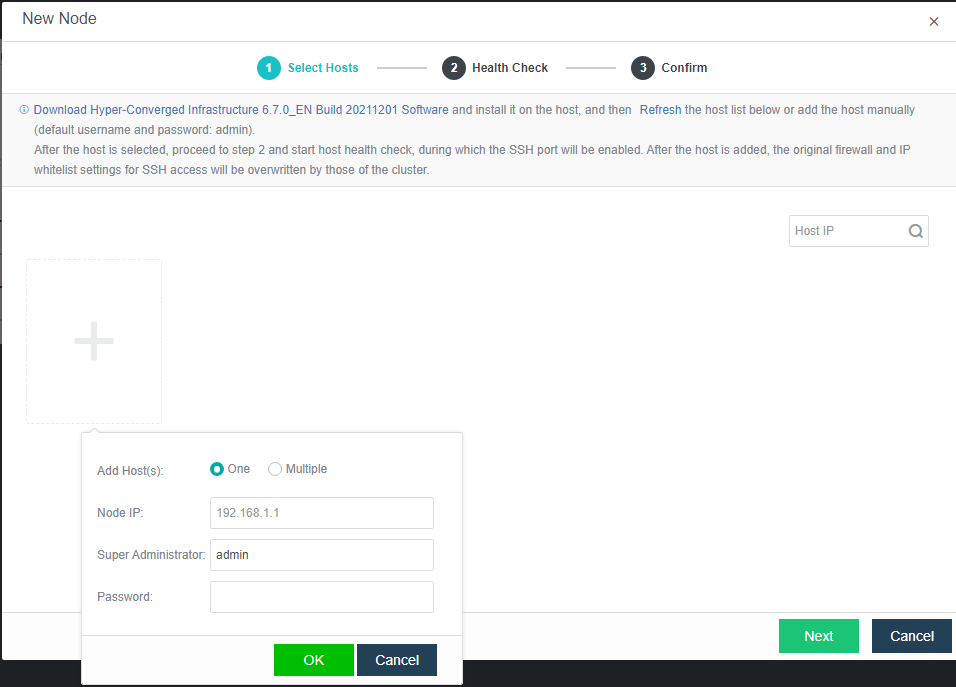

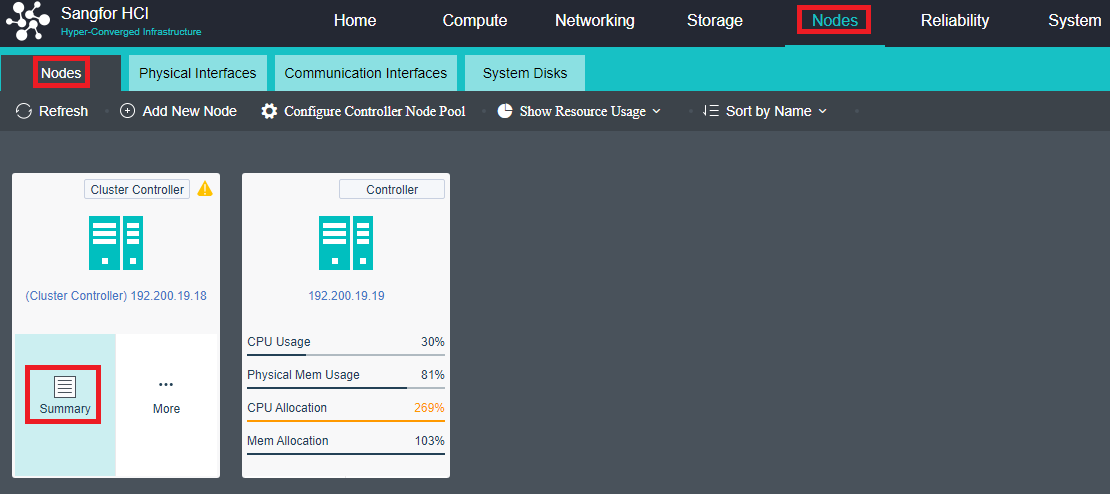

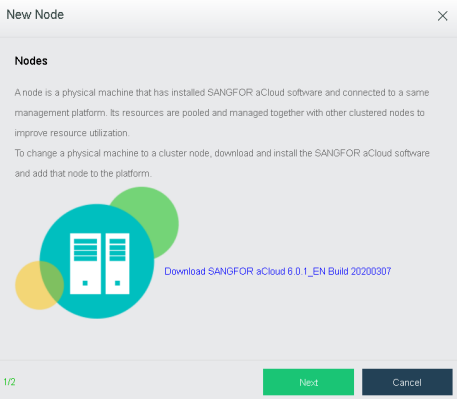

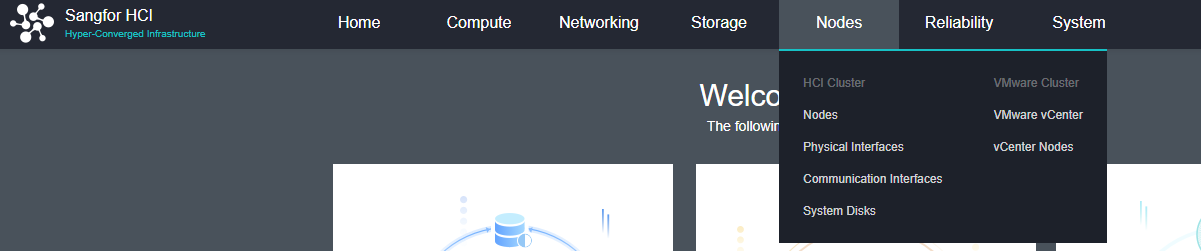

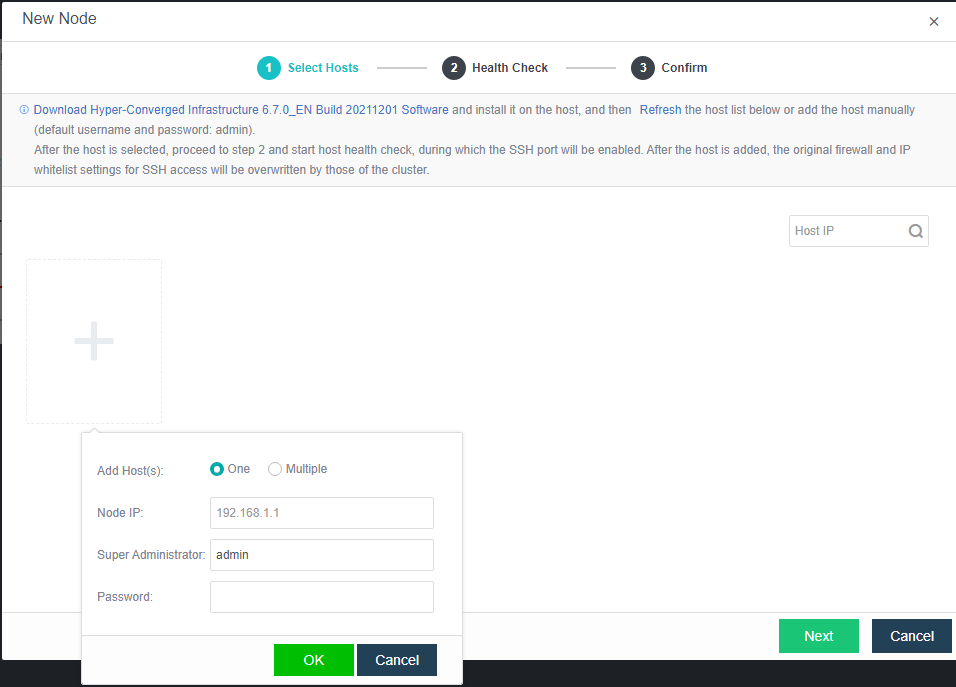

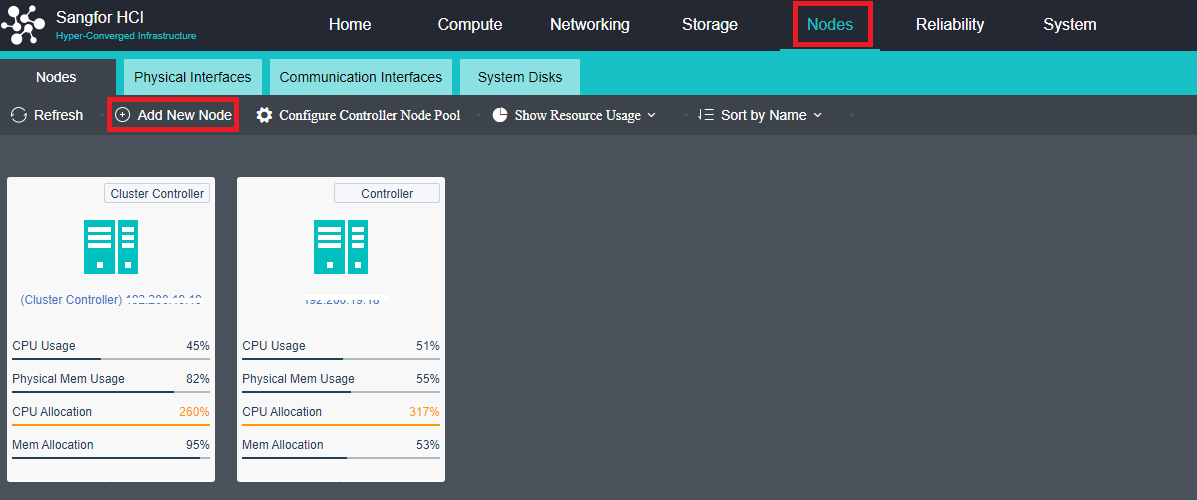

- Navigate to Nodes > HCI Cluster > Nodes. You can add a node to a cluster by clicking Add New Node.

- On the following page, select a node you want to add to the cluster and input the corresponding username and password. Once a node is chosen, the node icon turns green, indicating the node can be added to the cluster. There will be a prompt to reconfigure the firewall configuration of the added node and execute the node addition task.

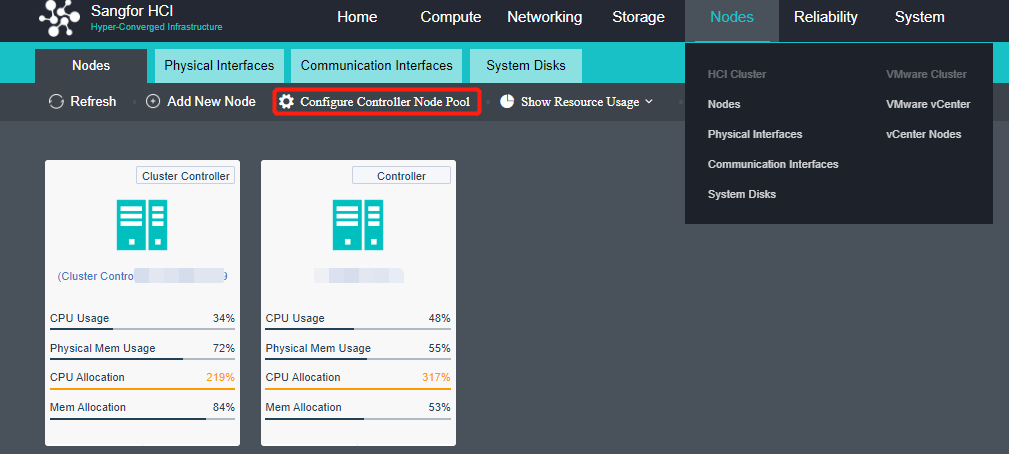

Configure Controller Node Pool

Description

In the HCI cluster, the node has the roles of control node and non-control node. The control node can provide cluster management and computing, network, storage, and other functions, such as virtual machine scheduling, task scheduling, etc. The non-control node can provide computing, network, storage, and other functions. The controller node pool is set to achieve high availability of cluster management nodes. When the control node fails, other nodes can be selected as the control node in the controller node pool to realize high availability switching and ensure the normal use of cluster management functions.

Precautions

-

For a cluster size of more than 24 nodes, it is recommended to use the hardware with good performance as the cluster controller.

-

The cluster supports up to three control nodes.

-

The cluster controller can only switch in the control node pool. If all the control nodes fail, there is no cluster controller.

Steps

- Navigate to Nodes and click Configure Controller Node Pool to add a node into the controller node pool.

- Add the control node into the control node pool and set three active nodes.

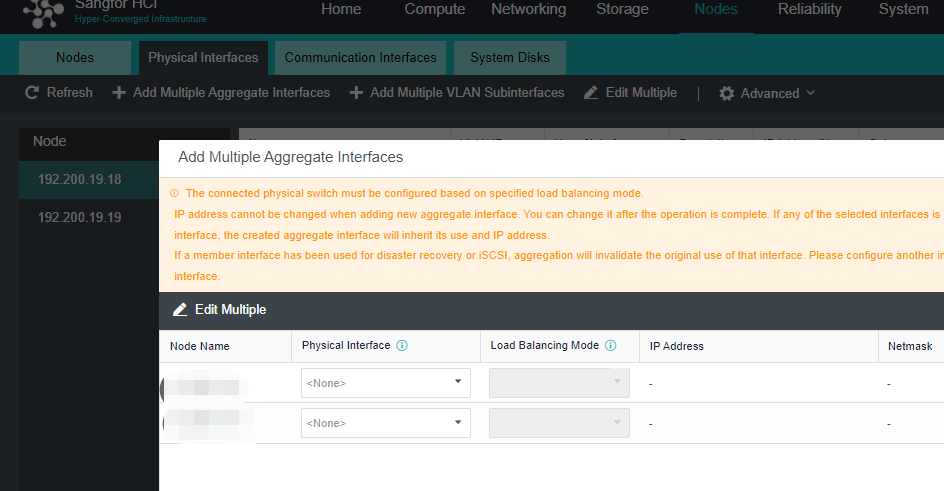

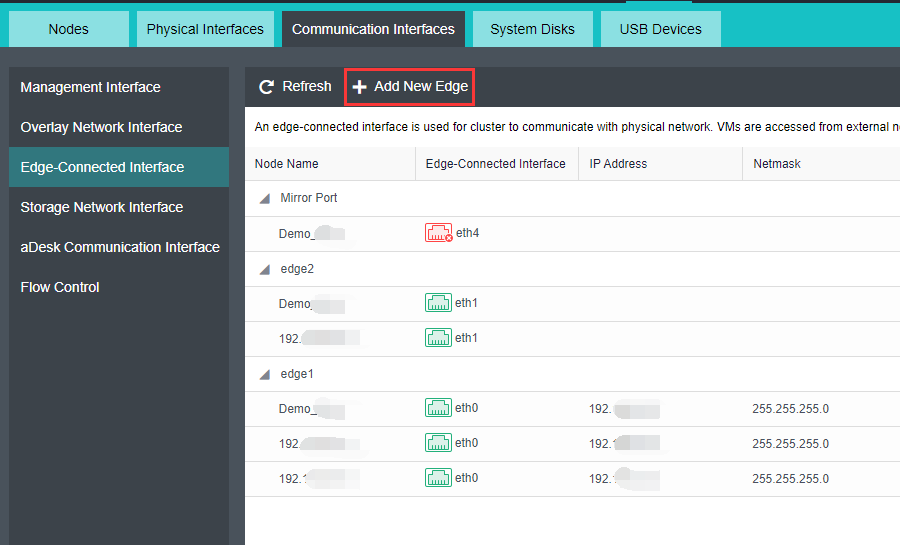

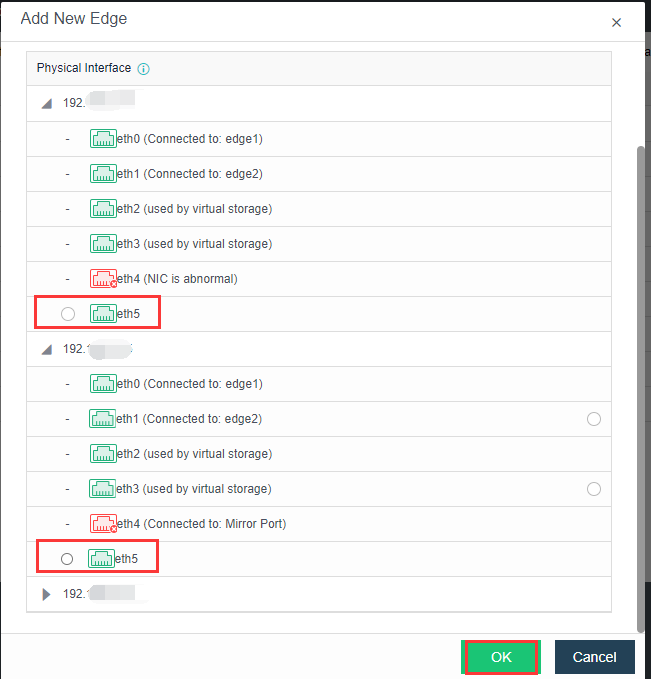

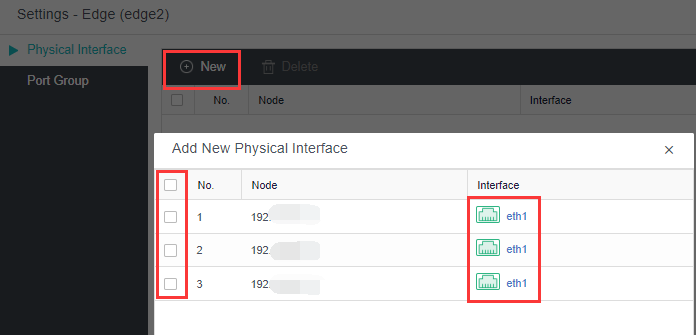

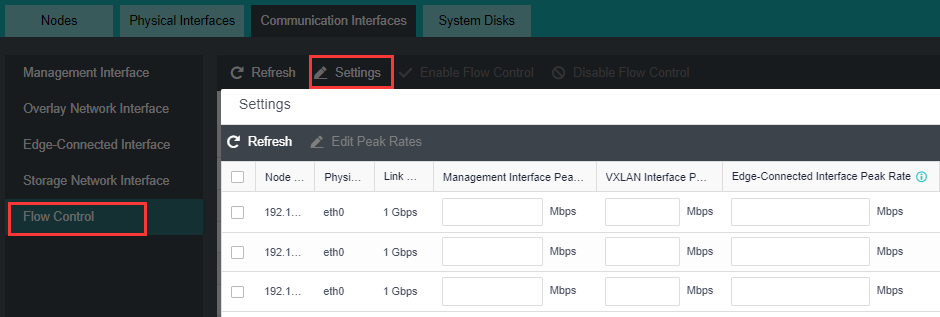

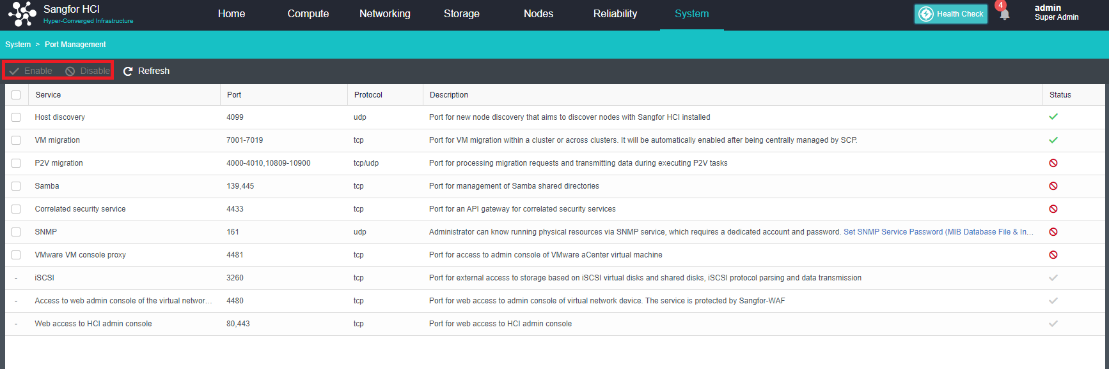

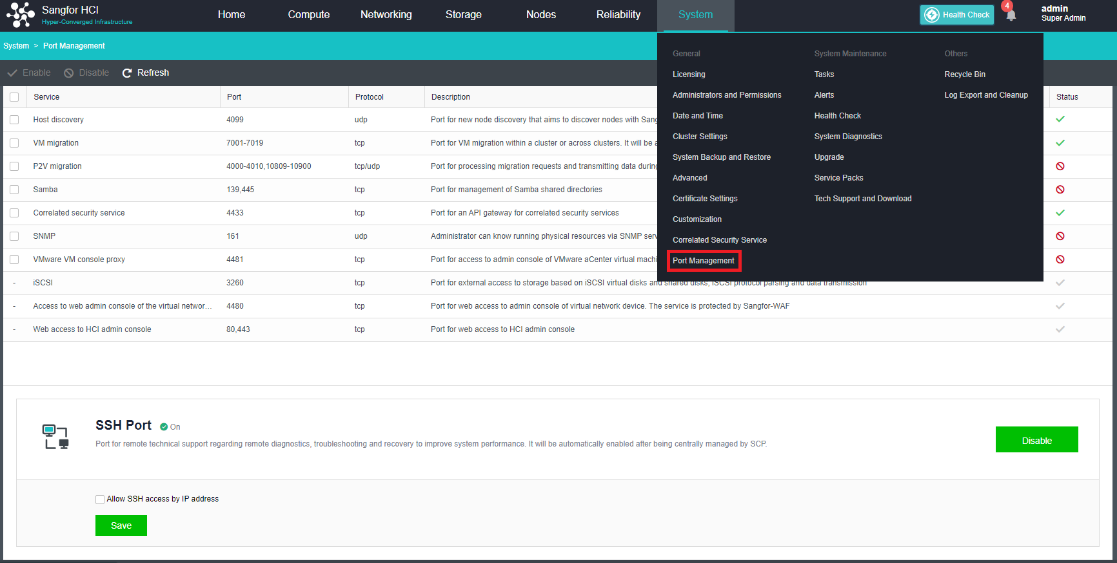

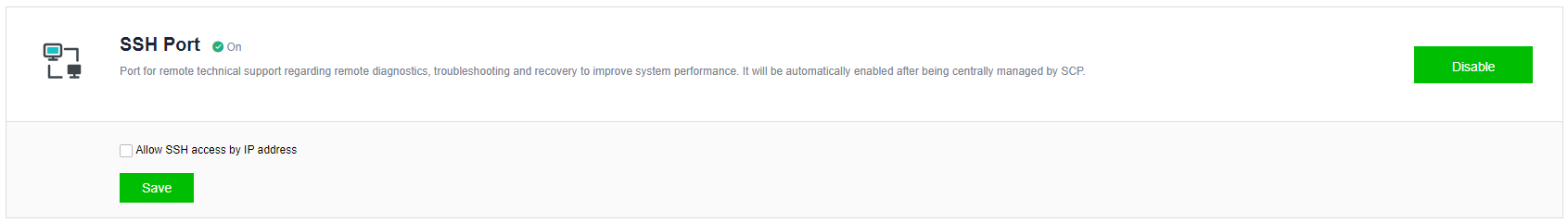

Manage Communication Port Configuration

Scenario

It is recommended to use the aggregation interface as the management communication port to increase the bandwidth and improve the network stability of the platform.

Precautions

- The peer switch needs to configure the corresponding interface aggregation mode when using the aggregation interface. Otherwise, the network will be blocked, and the HCI management interface cannot be logged in.

- The aggregation of the network interface is not supported.

- Direct aggregation of two interfaces with different roles is not supported. Aggregation can be performed after canceling one interface role. For example, eth0 (management interface) and eth1 (VXLAN interface) cannot be aggregated directly. The VXLAN interface can be adjusted to eth2. At this time, eth0 (management interface) and eth1 (no role) can be aggregated.

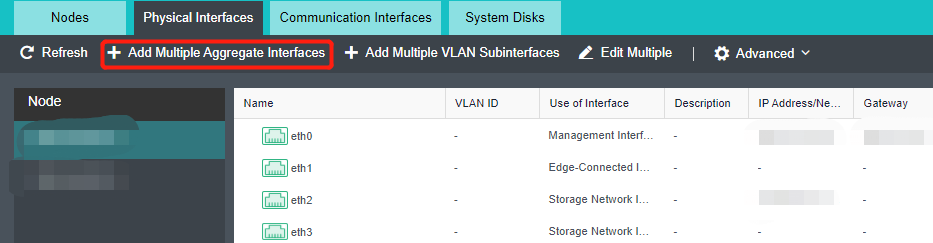

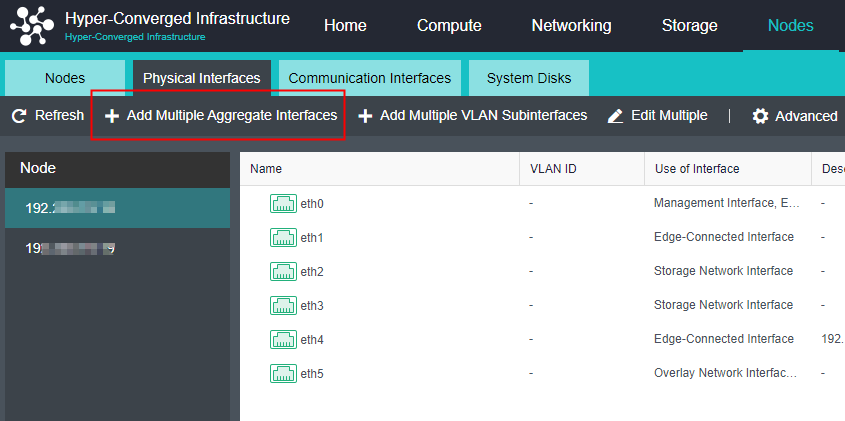

Steps

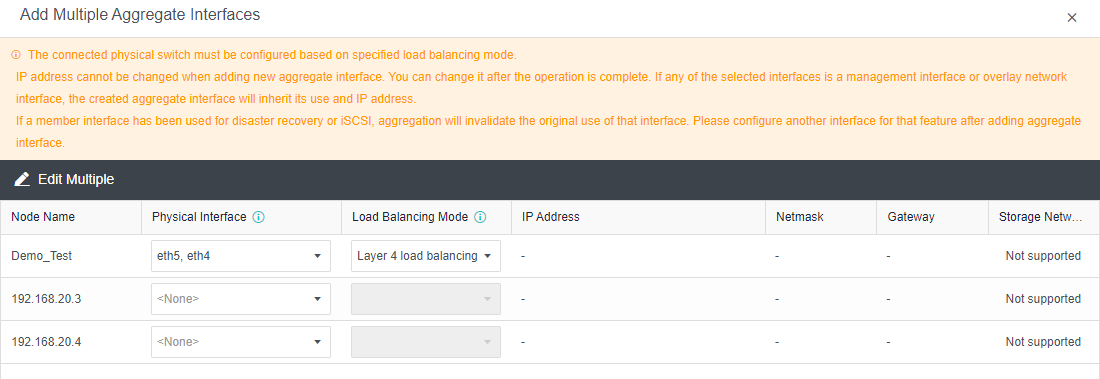

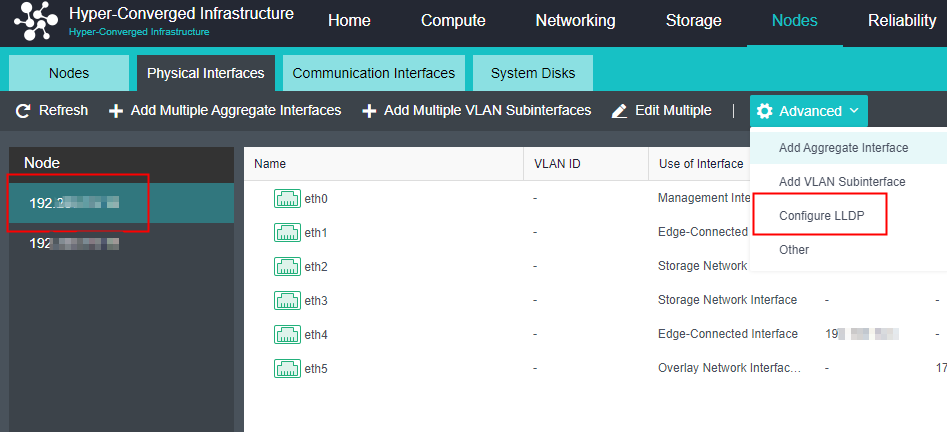

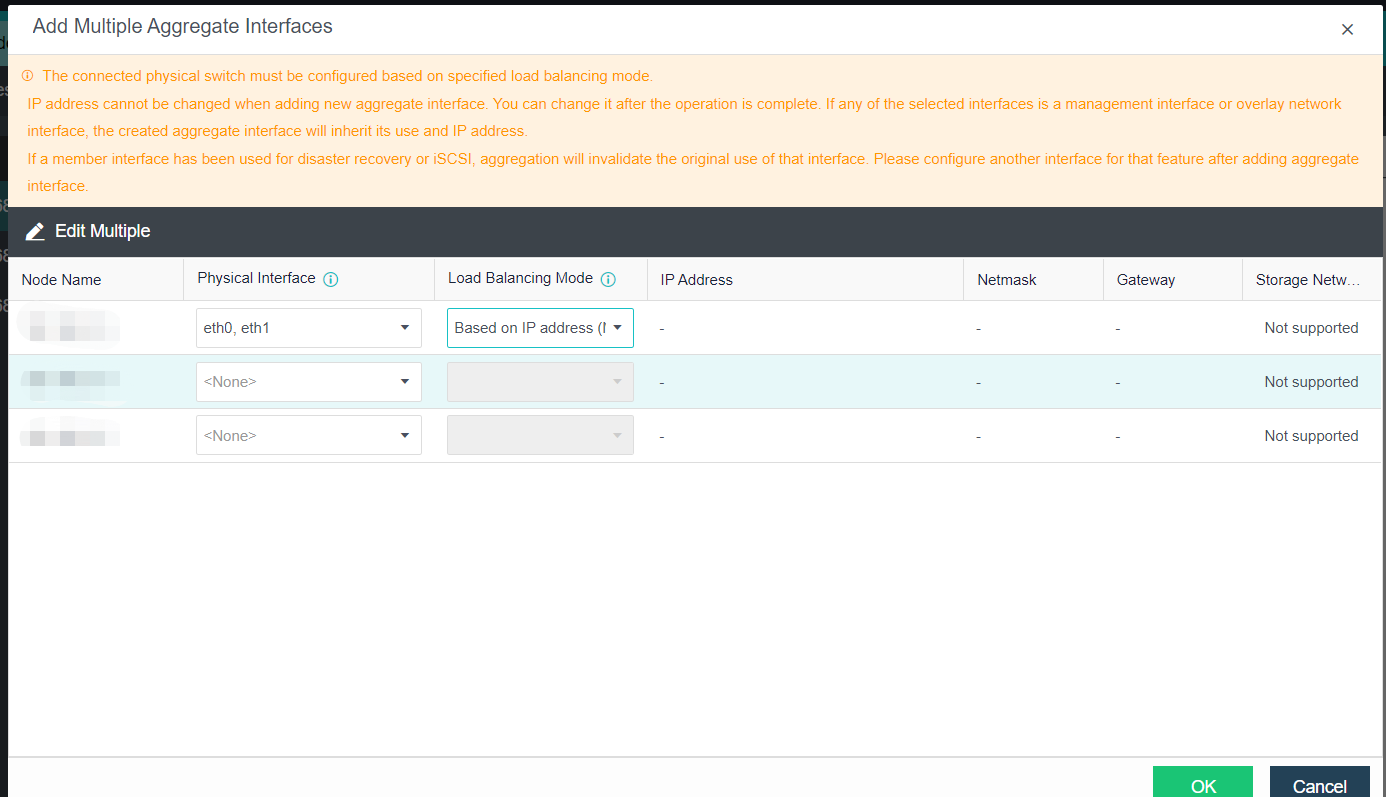

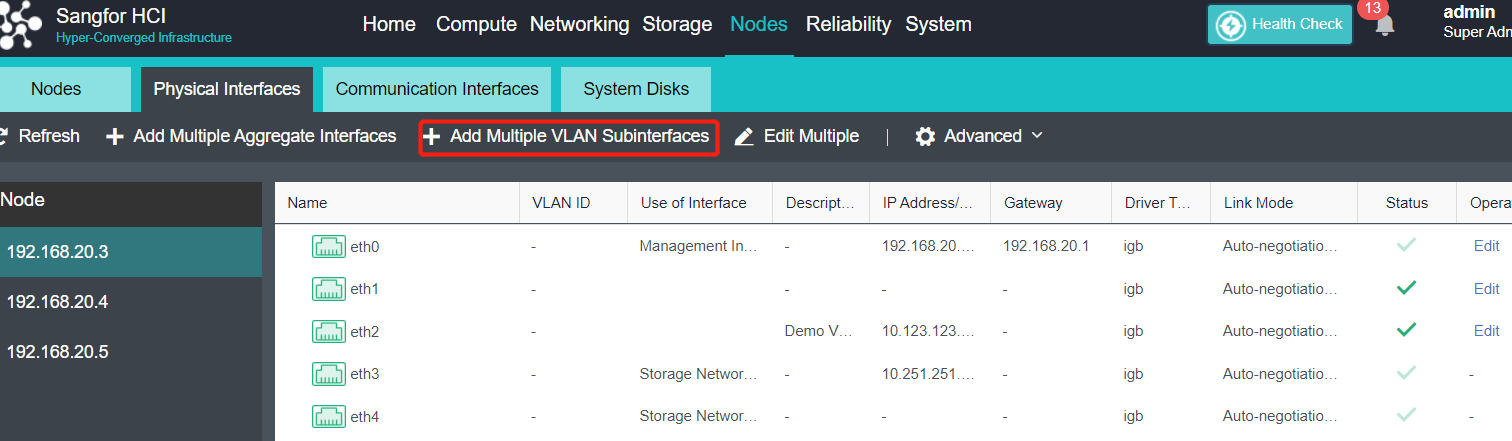

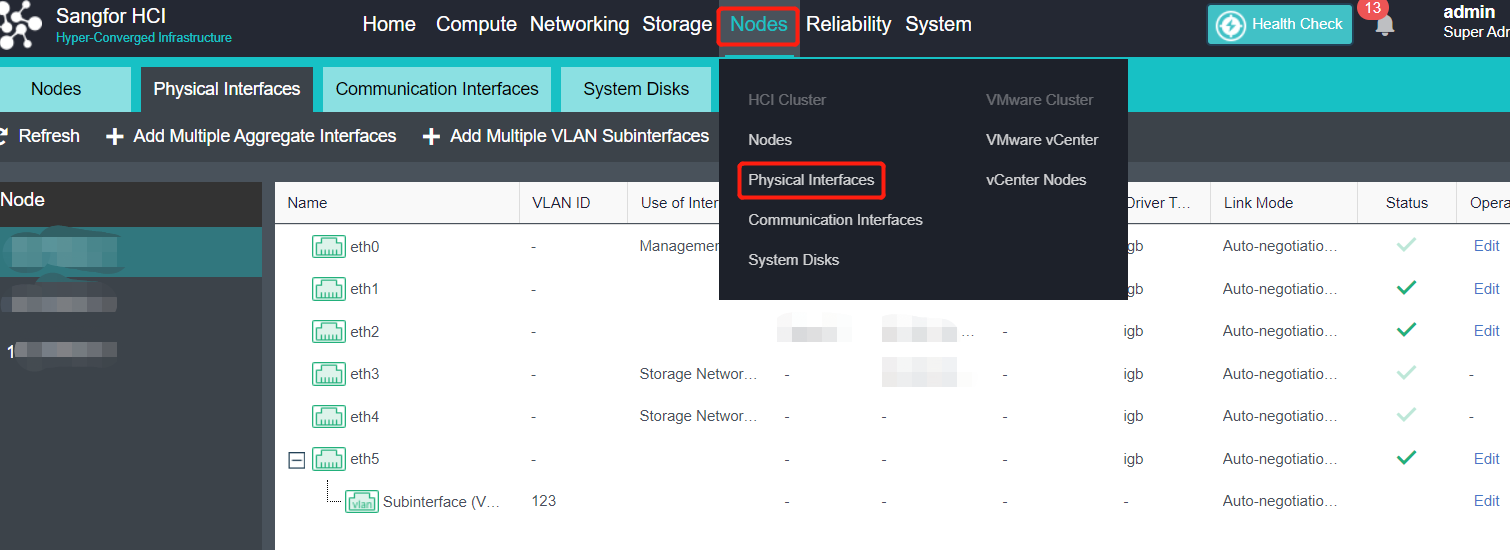

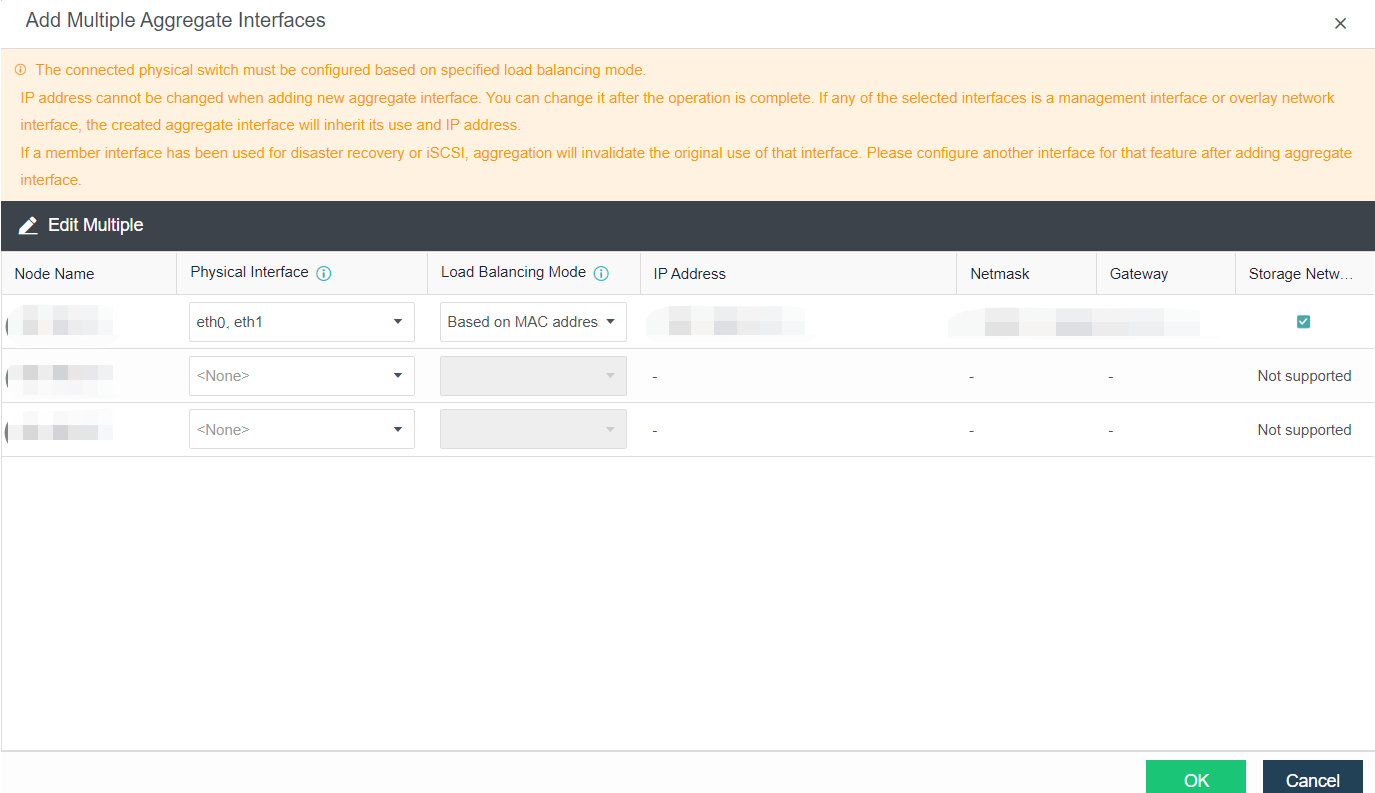

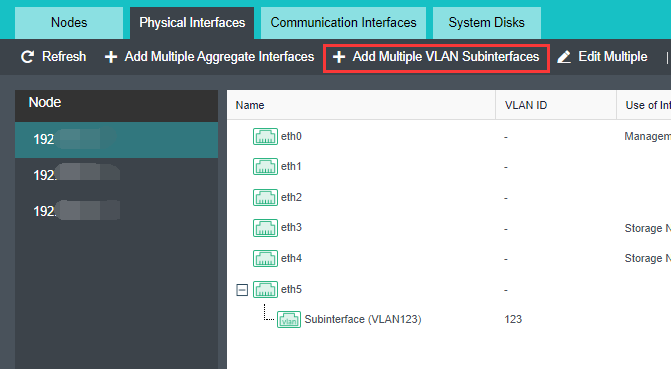

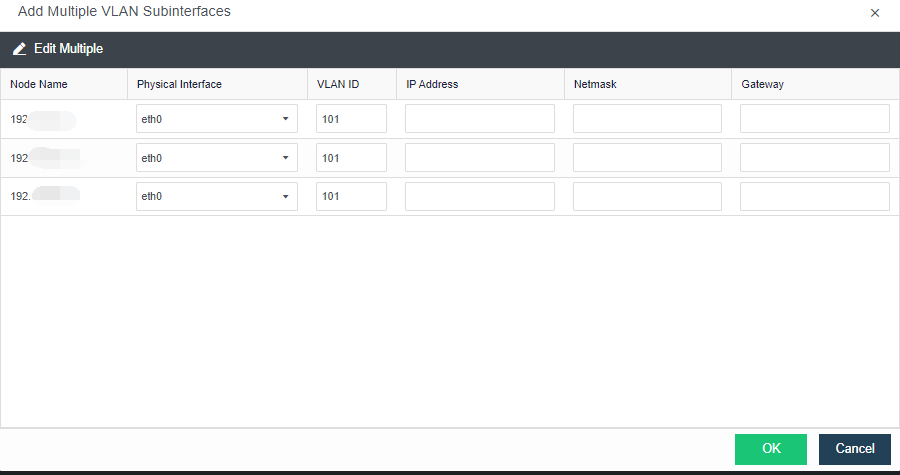

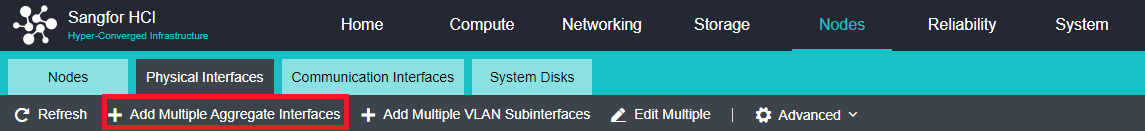

- Click Nodes > Physical Interface on the HCI console, click Add Multiple Aggregate Interfaces, and select two interfaces. The aggregation mode recommends loading according to MAC or IP address.

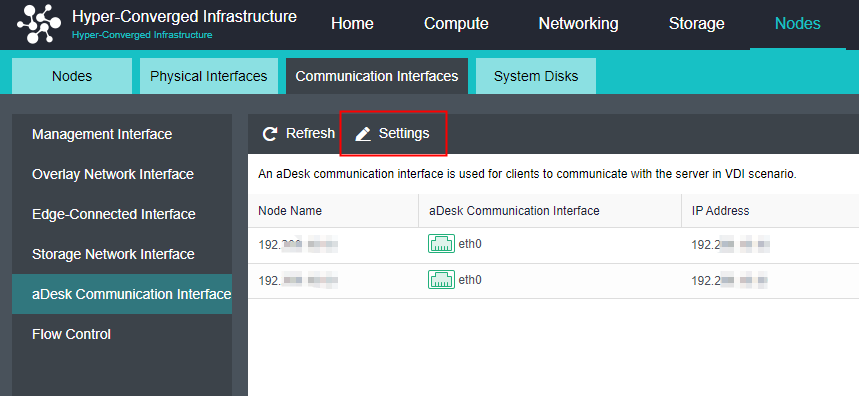

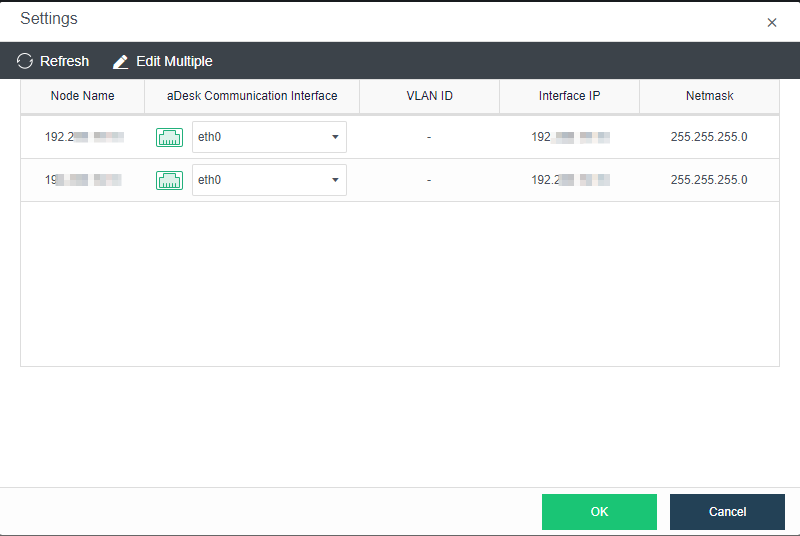

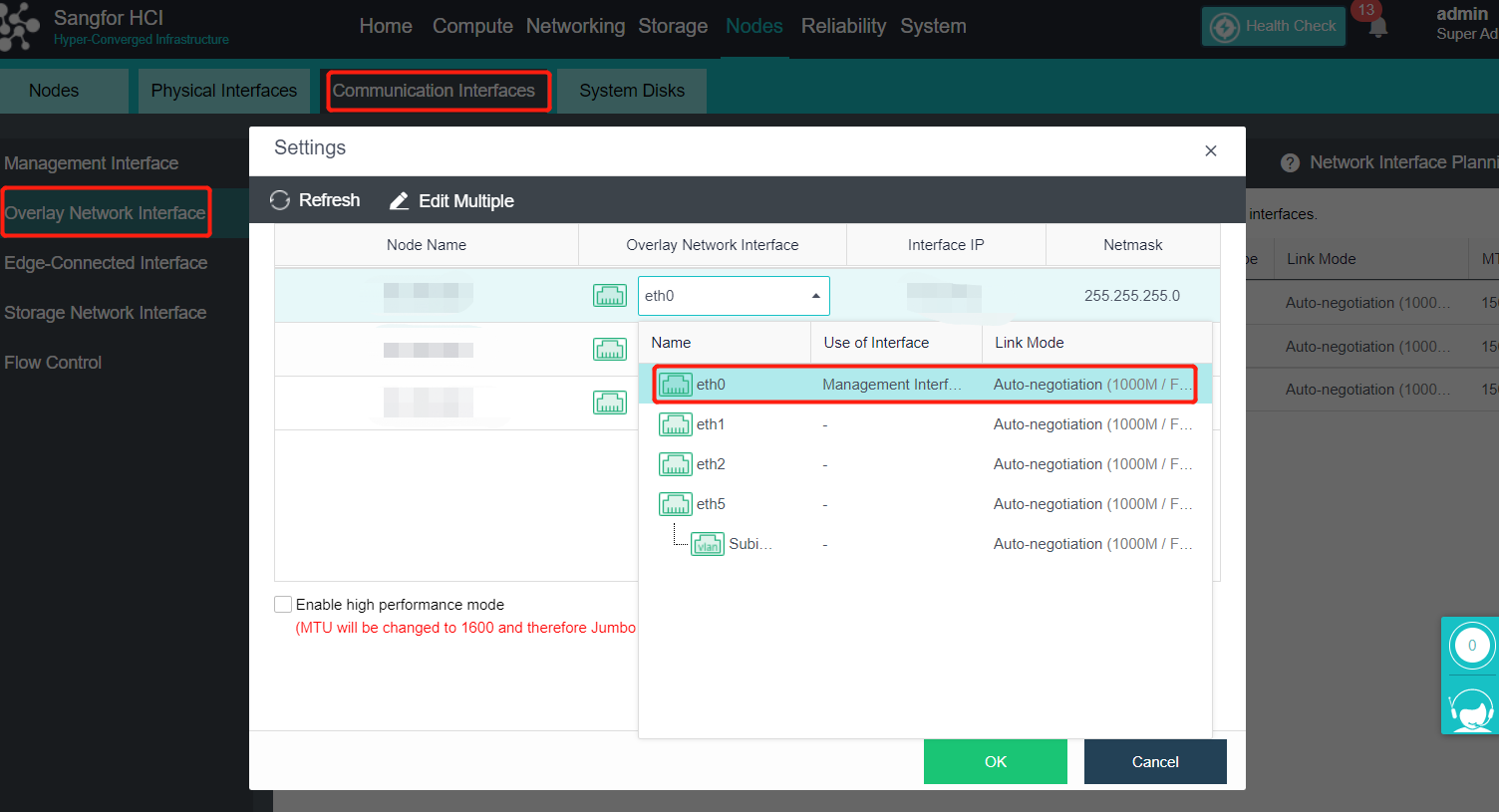

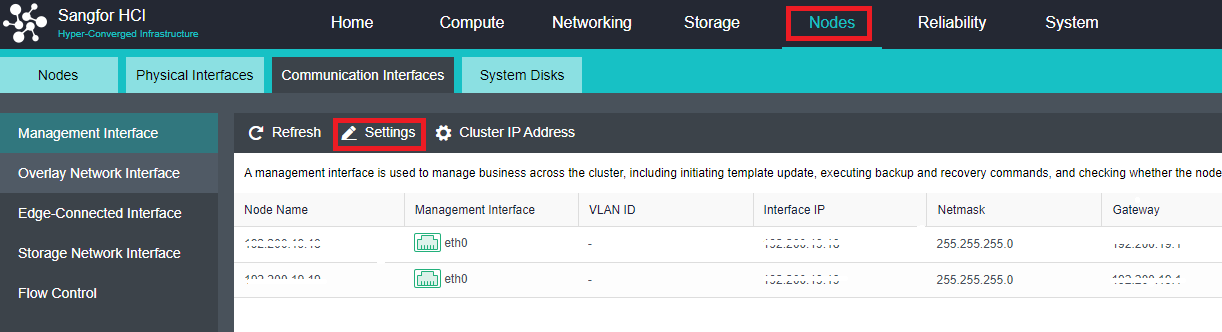

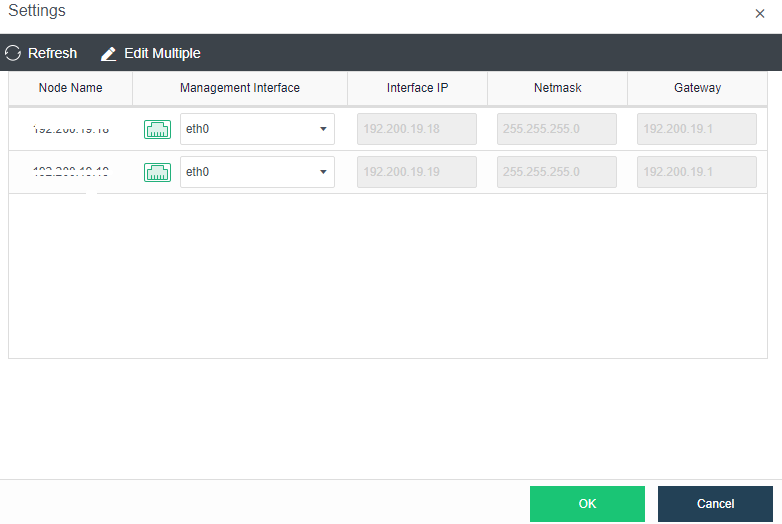

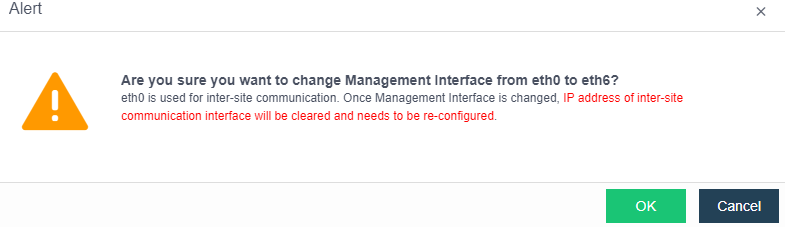

- Click Nodes > Communicate Interface in the HCI console. Among the four interface roles, click Management Interface, click Setting to enter the configuration page, and select the management interface as the added aggregation port.

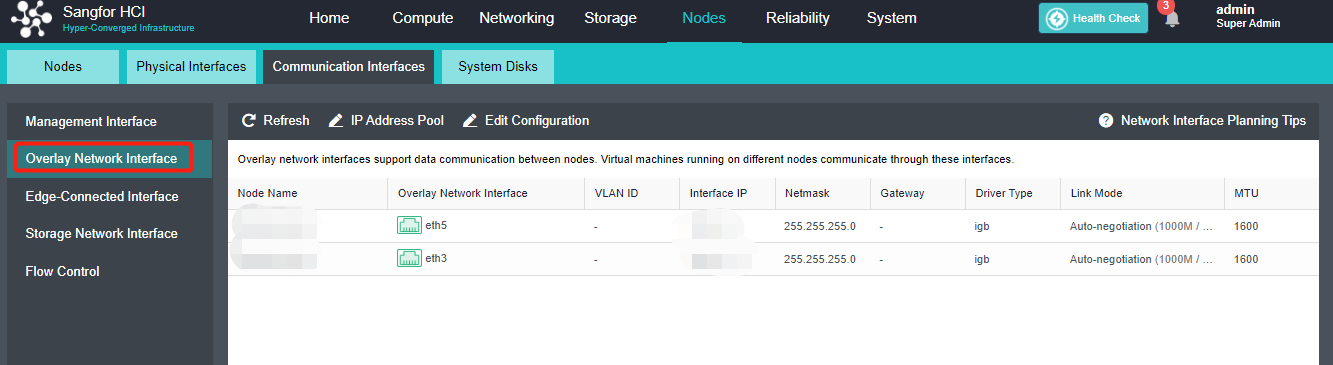

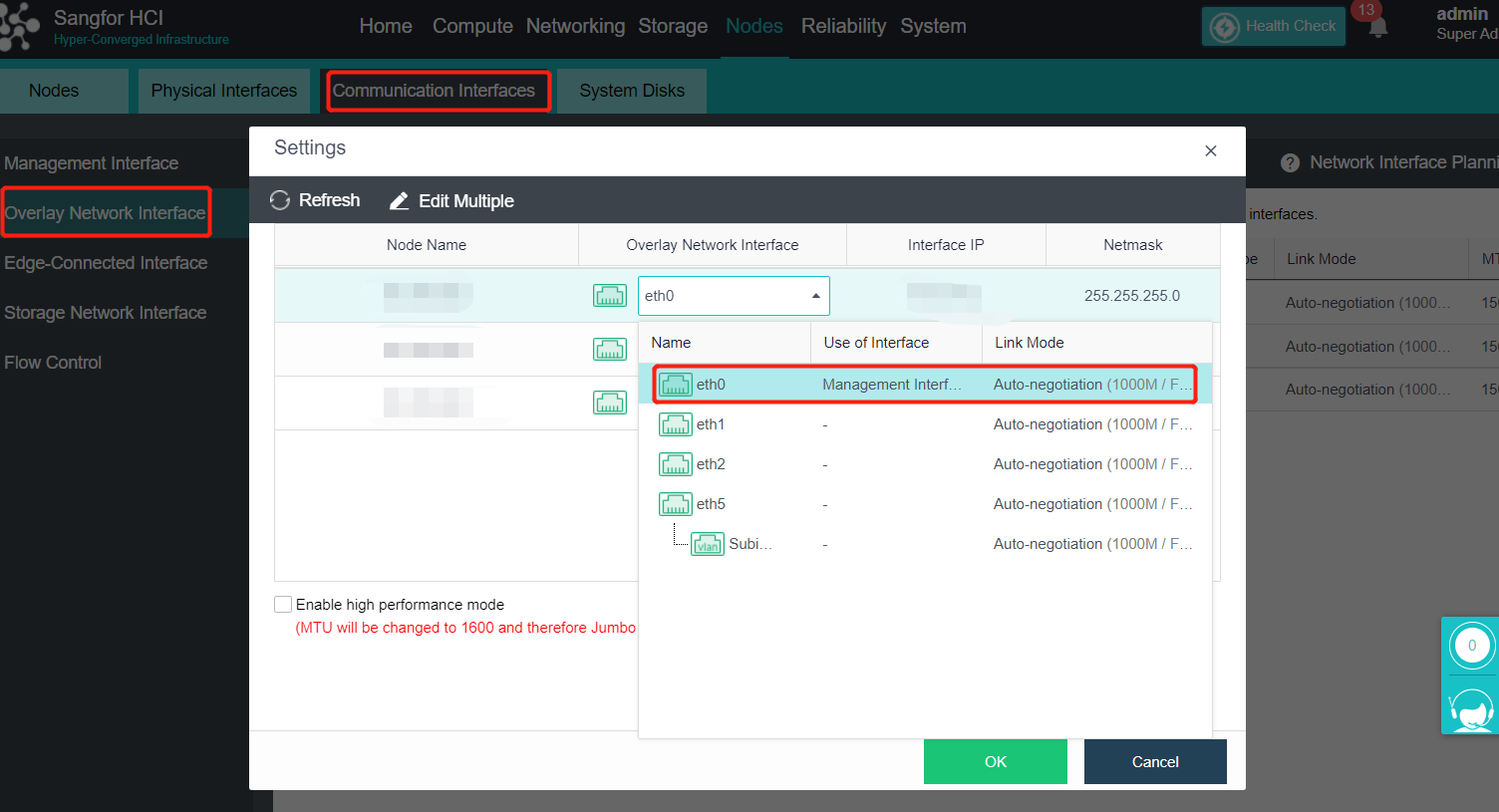

Overlay Network Interface Configuration

Precautions

-

After deploying the HCI cluster, you need to specify an Overlay Network Interface for each node. The Overlay Network Interface shall use Gigabit (or 10 Gigabit) interface and be interconnected by Gigabit (or 10 Gigabit) switch. (When only two nodes exist, the Overlay Network Interface can be connected directly). To improve the interface bandwidth and redundancy of the Overlay Network Interface, it is recommended to configure the aggregation interface as the Overlay Network Interface. At this time, the opposite end switch needs to configure the corresponding aggregation mode.

-

The Overlay Network Interface is configured as a multiplex cluster management port by default. To obtain high network forwarding performance, it is recommended to set the management communication port and Overlay Network Interface (VXLAN) to different interfaces.

-

Direct aggregation of two interfaces with different roles is not supported. Aggregation can be performed after canceling one interface role. For example, eth0 (management interface) and eth1 (VXLAN interface) cannot be aggregated directly. The VXLAN interface can be adjusted to eth2. At this time, eth0 (management interface) and eth1 (no role) can be aggregated.

Steps

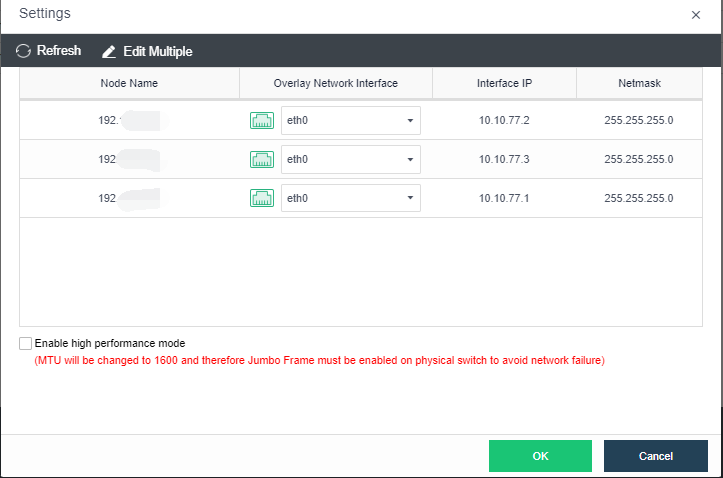

- Click Nodes > Physical Interface on the HCI console, click Add Multiple Aggregate Interfaces, and select two interfaces. The aggregation mode recommends loading according to MAC or IP address.

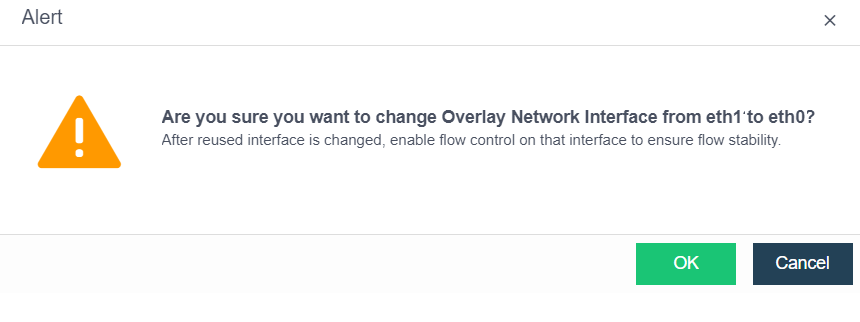

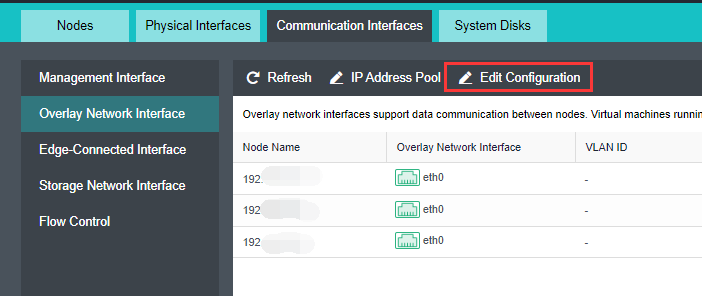

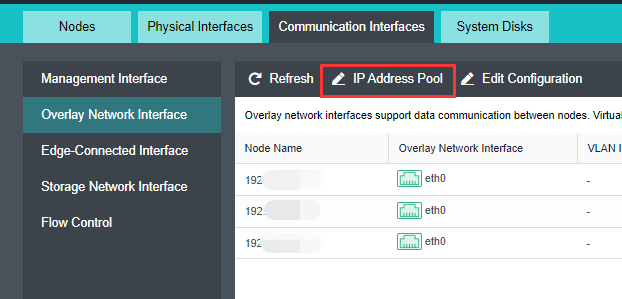

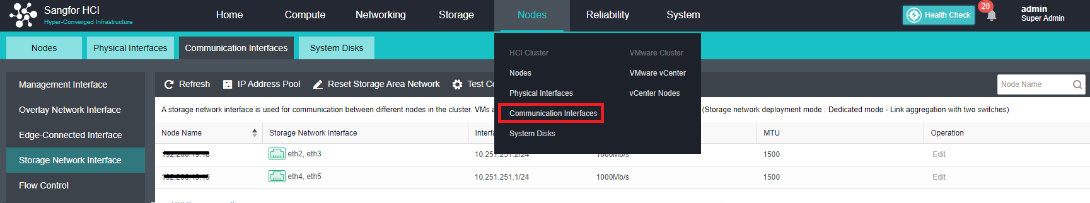

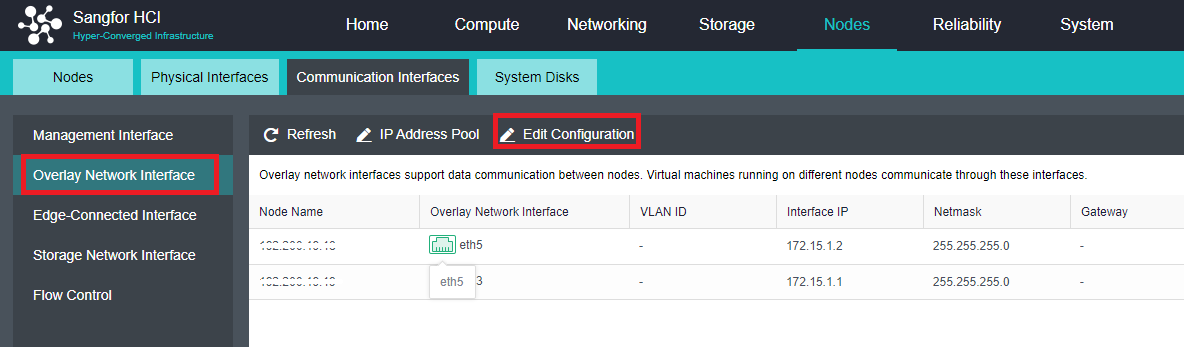

- Click Nodes > Communication Interface in the HCI console. Find the Overlay Network Interface among the four interface roles and click Edit Configuration to enter the configuration page to modify the Overlay Network Interface.

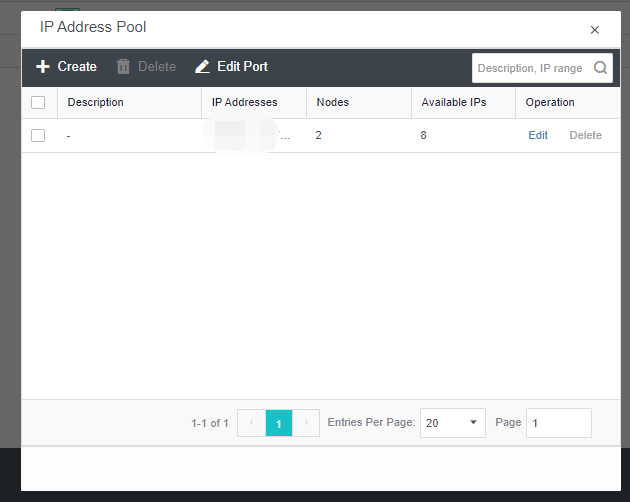

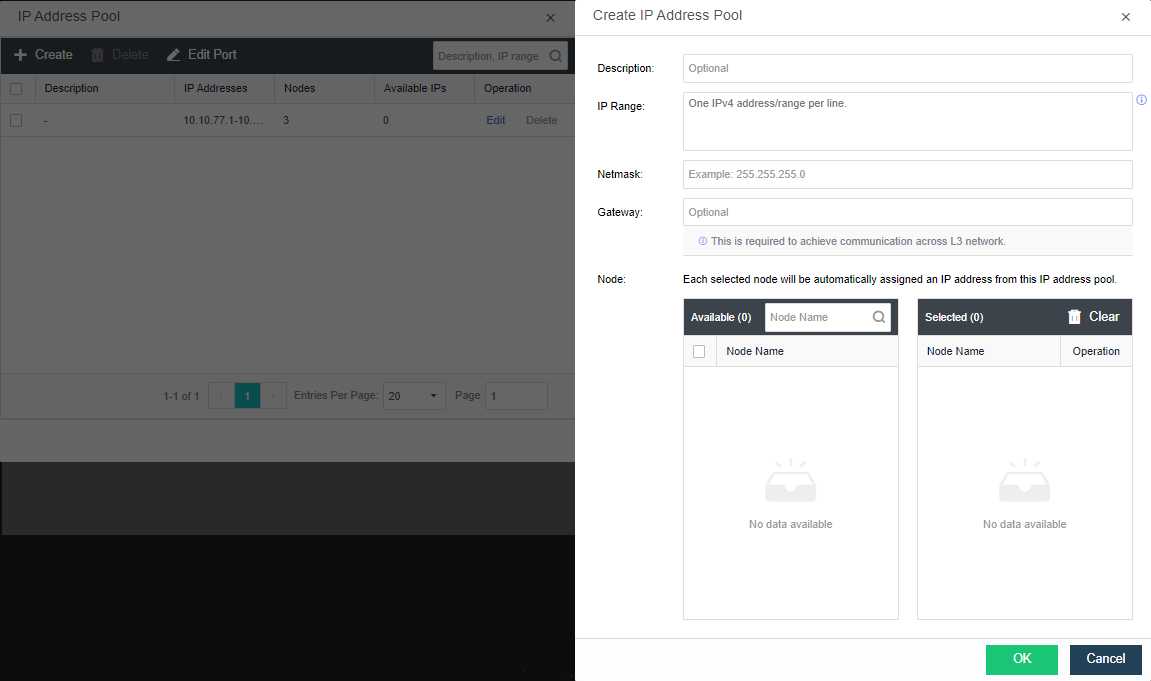

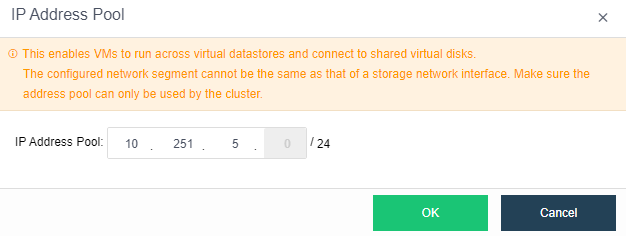

- Click Nodes > Communication Interface in the HCI console. Find the Overlay Network Interface among the four network port roles and click IP address Pool to enter the configuration page to modify the VXLAN IP pool.

-

Create: a VXLAN IP pool can be created. Multiple VXLAN IP pools can exist in a cluster. A node is only allowed to join one VXLAN IP pool.

-

Delete: You can delete the VXLAN IP pools that are no longer in use.

-

Edit Port: The port number used for VXLAN can be modified. The port number supports 8472, 4789 and 4790.

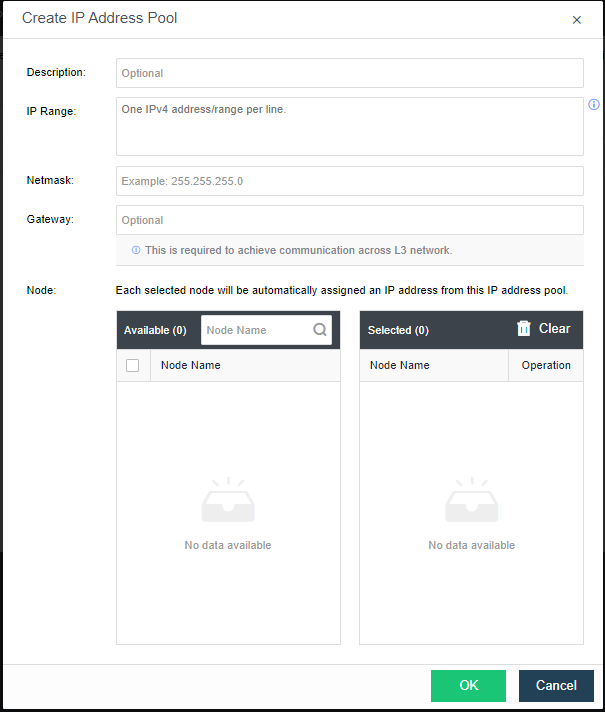

- Click Create to configure the address. Enter the IP pool where the VXLAN IP is located and the netmask. The IP range must be greater than or equal to the number of nodes.

-

Description: Describes the created VXLAN IP pool information.

-

IP Range: The IP address used by the VXLAN node network plane. It supports a single IP setting and range setting and can be set in multiple lines. It is necessary to ensure that all addresses are in the same network segment.

-

Netmask: The mask information of the IP address in the VXLAN IP pool.

-

Gateway: If cross-layer data communication is required, fill in the layer 3 gateway.

-

Node: The node that uses the IP in the VXLAN IP pool.

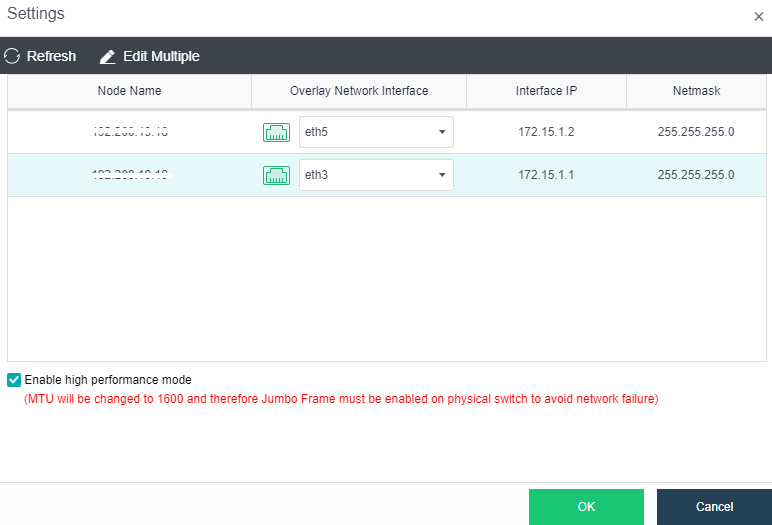

- By checking the Enable high performance mode checkbox, the MTU of the HCI node interface will be set to 1600 bytes so that the data encapsulated by VXLAN will not be fragmented when sent to the physical network. It can significantly improve the forwarding performance of the virtual network. At this time, the opposite physical switch must enable a jumbo frame.

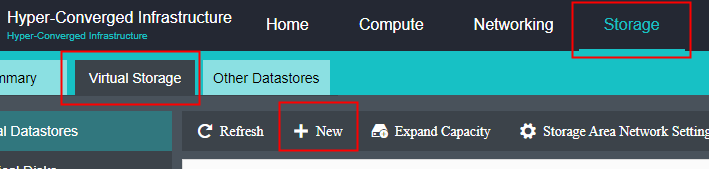

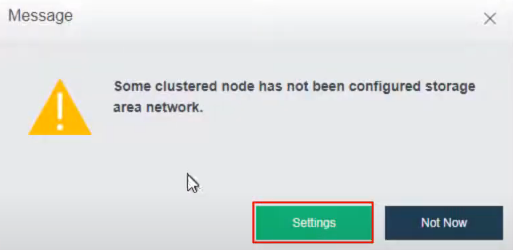

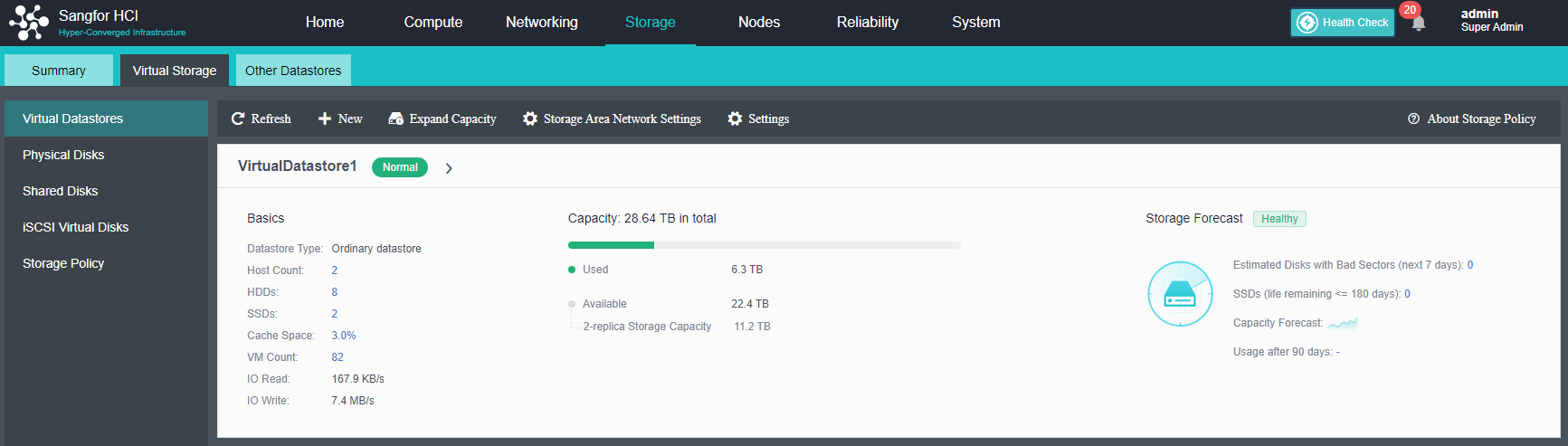

Virtual Storage Configuration

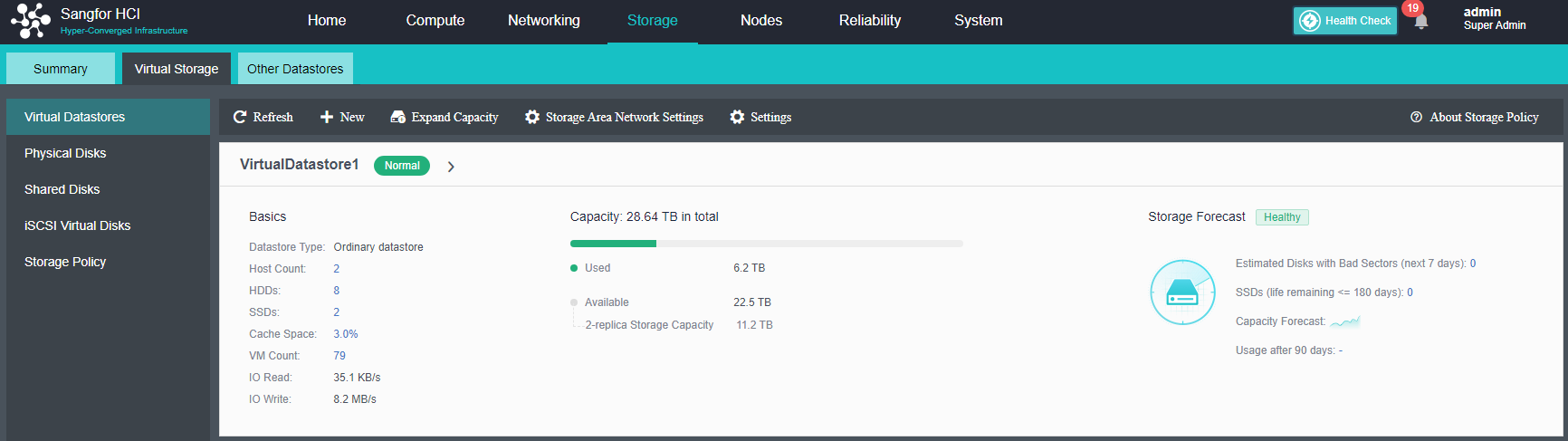

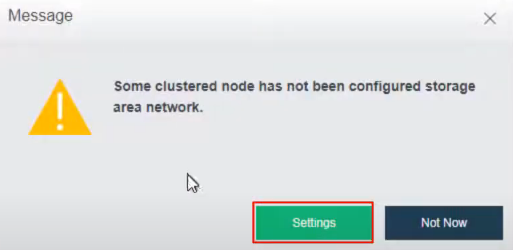

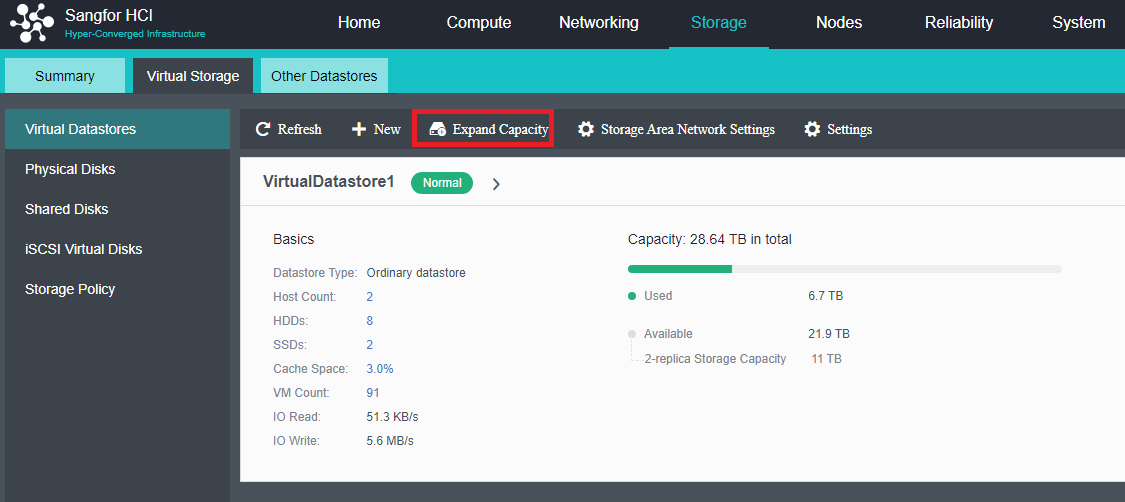

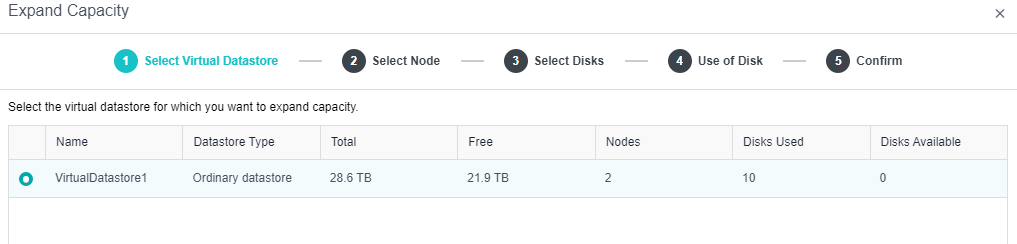

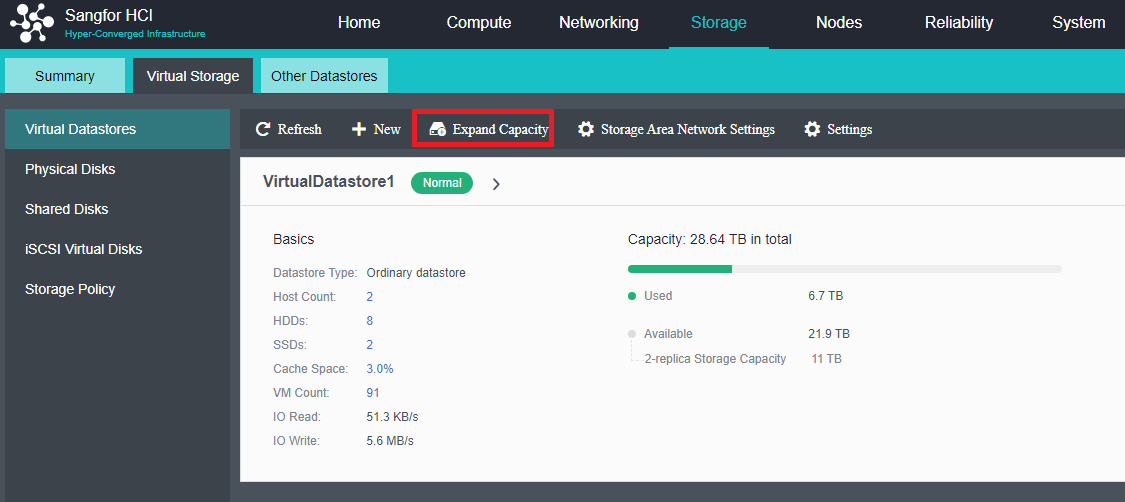

After the HCI node is organized into a cluster, navigate to Storage > Virtual Storage and click New. Because it is the first time to set up virtual storage, the system will automatically prompt to configure the storage private network.

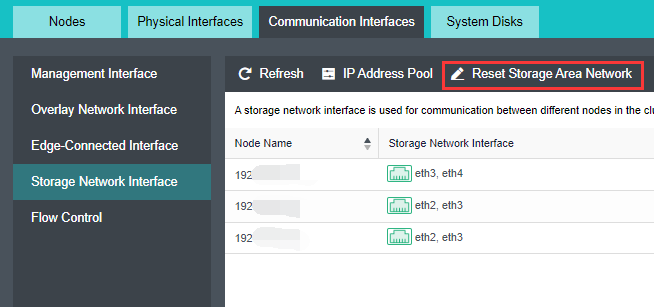

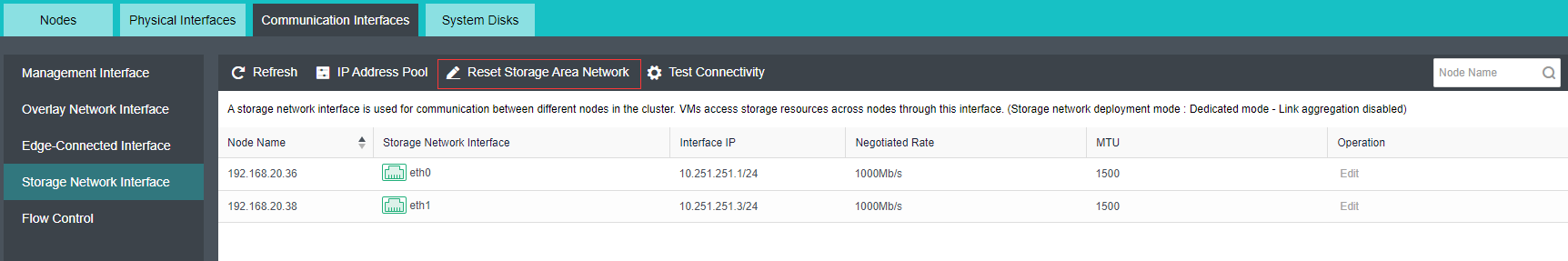

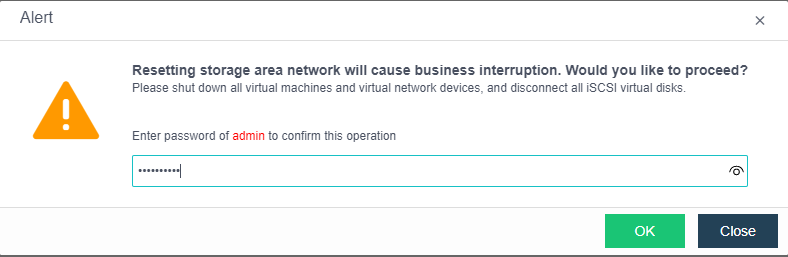

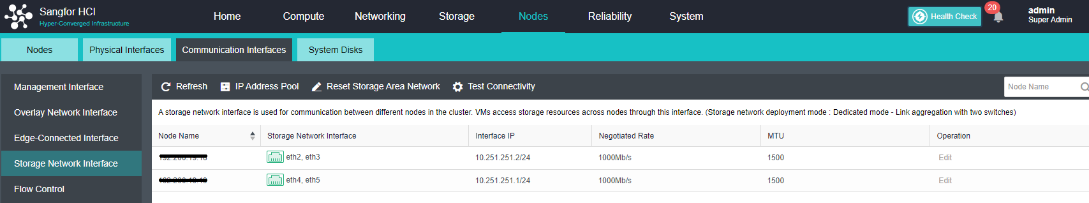

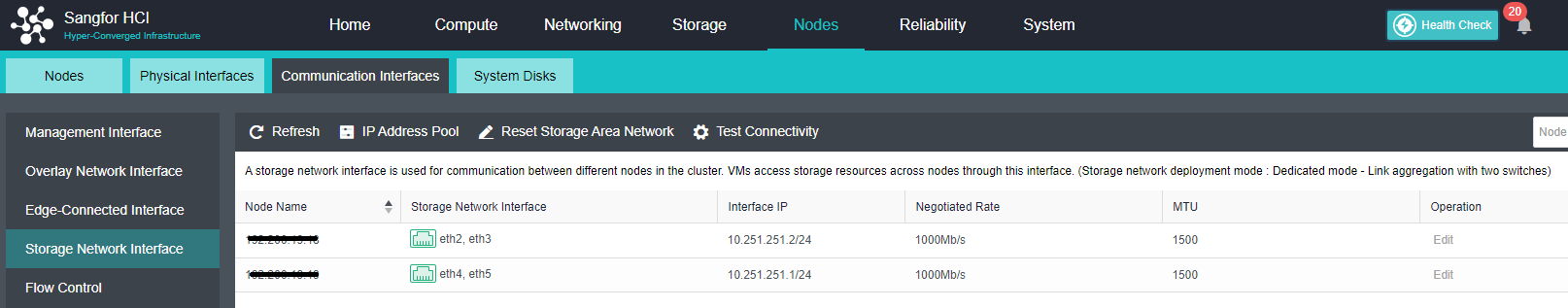

Configure Storage Network Interface

Precautions

-

Upgrade from the lower version to HCI6.7.0, the storage network deployment mode is Link Aggregation with One Switch or Link Aggregation with Two Switches by default.

-

Deploying the new storage network in the standard link aggregation mode is recommended.

-

If the storage area network is configured with standard link aggregation, link aggregation with one switch, and link aggregation with two switches, it is not supported to configure a static route to this network interface. Static routes can be configured in Link aggregation disabled mode.

-

It is forbidden to use Round robin among interfaces for the storage area network interface.

Steps

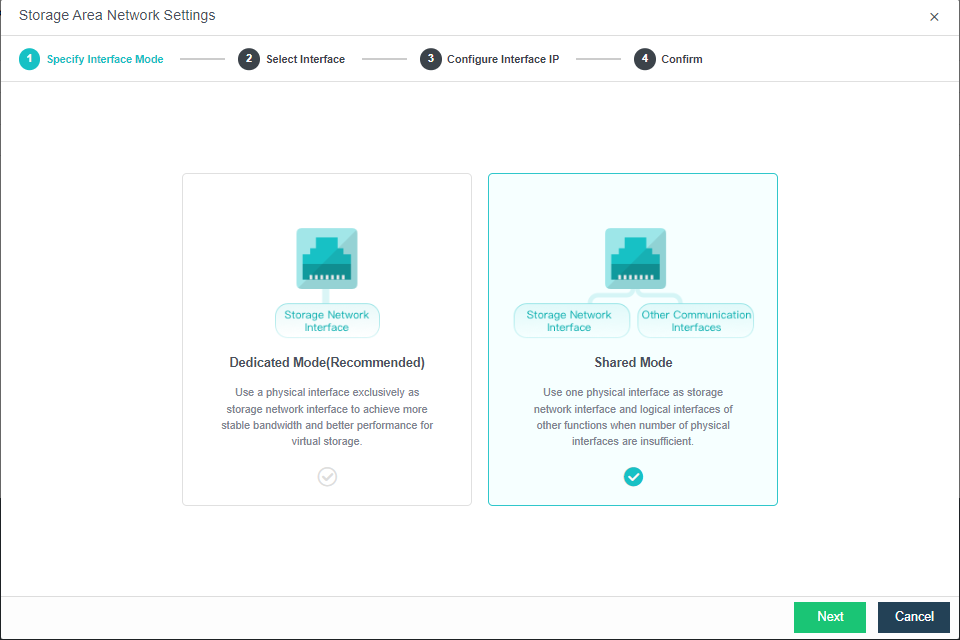

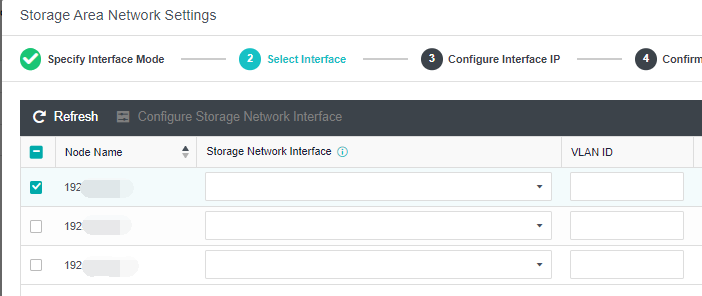

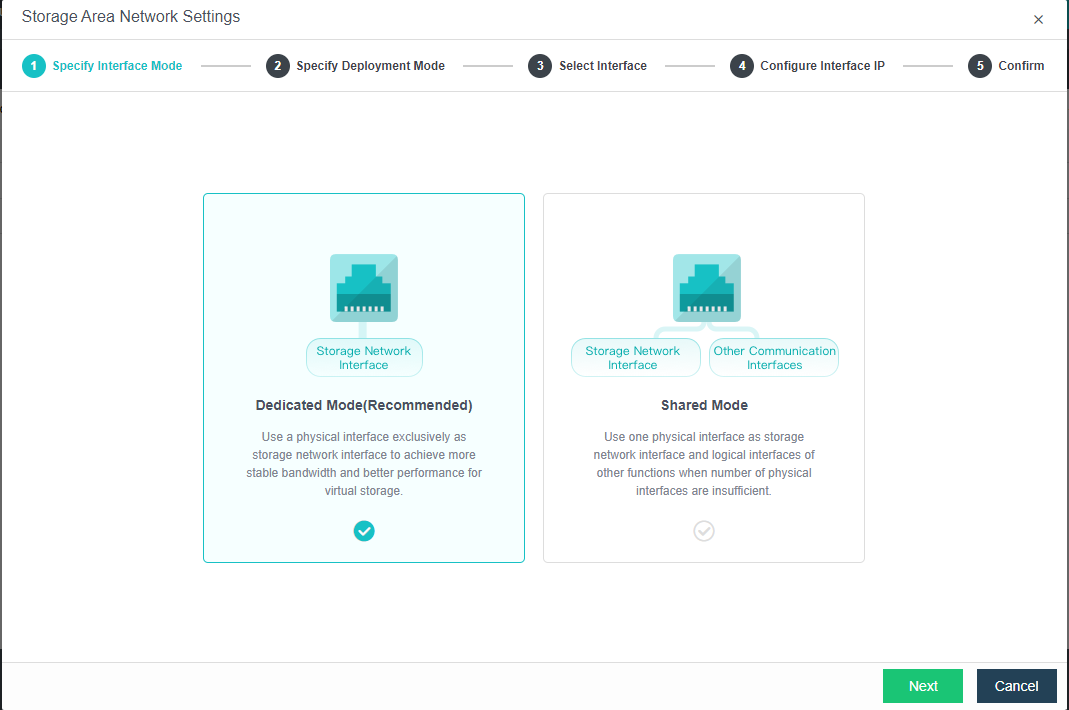

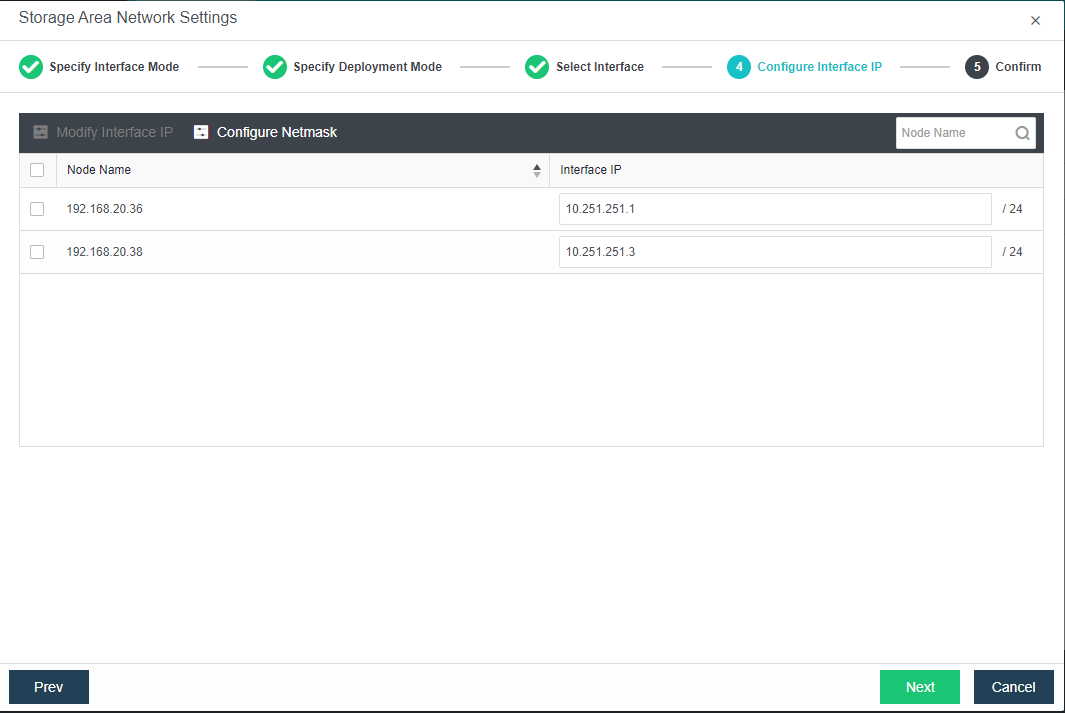

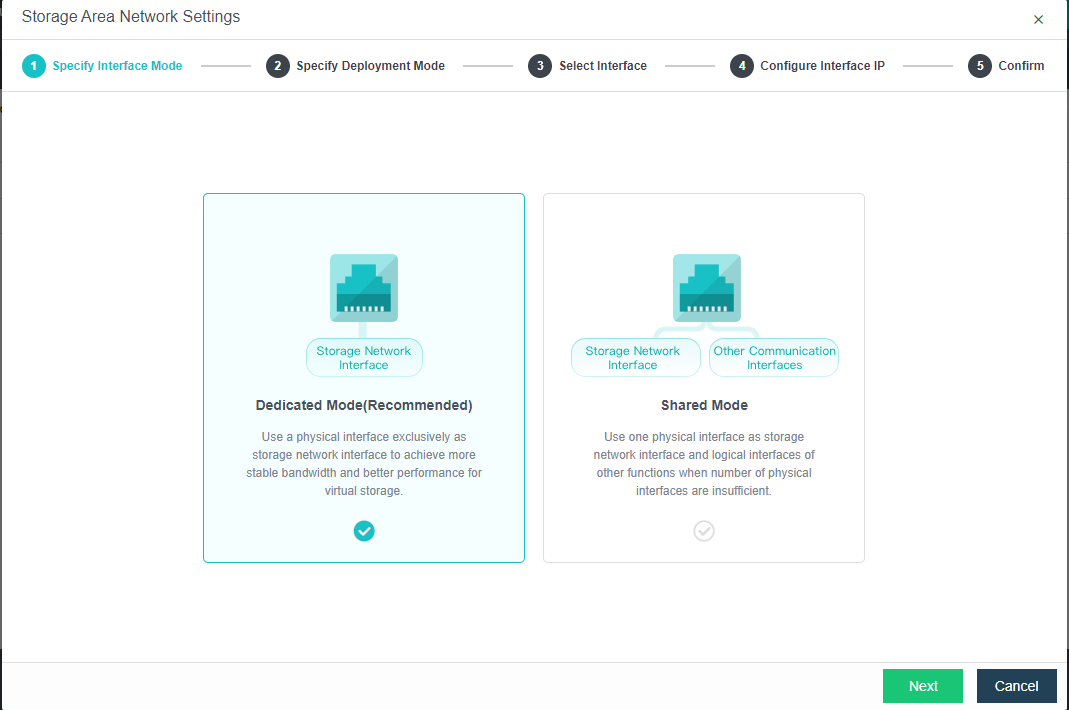

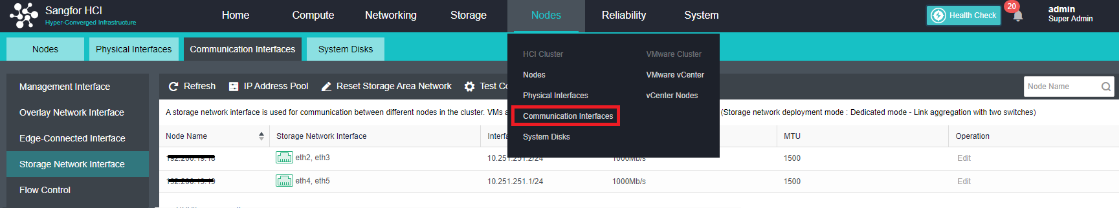

-

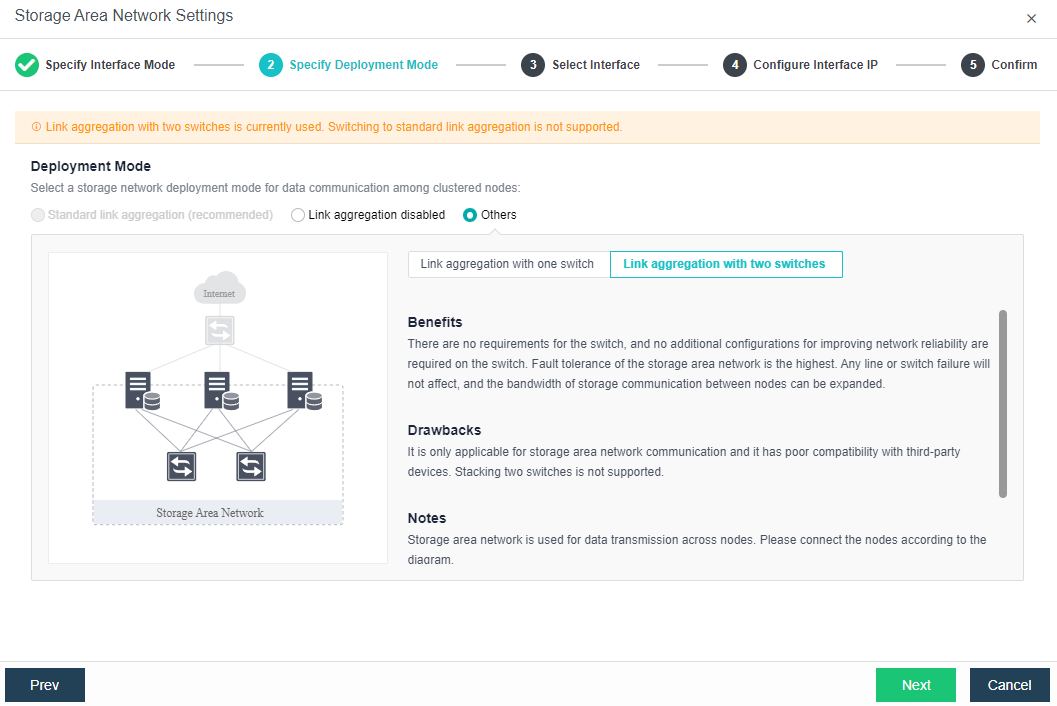

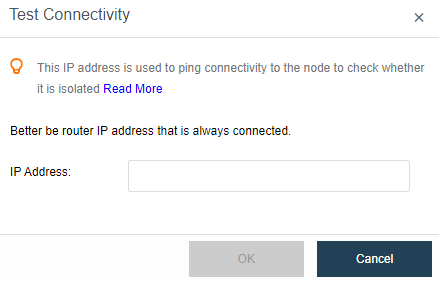

Navigate to Storage under Virtual Storage and click Storage Area Network Settings to configure the storage deployment mode.

By default, eth0 is used as a management interface and communication interface to synchronize configurations on the Sangfor HCI platform. The storage network interface is used to synchronize file data on virtual storage. It is better to use separate interfaces such as the management interface and storage network interface. It is recommended to use Standard Link Aggregation mode to form a storage area network for both standard and stretched clusters.

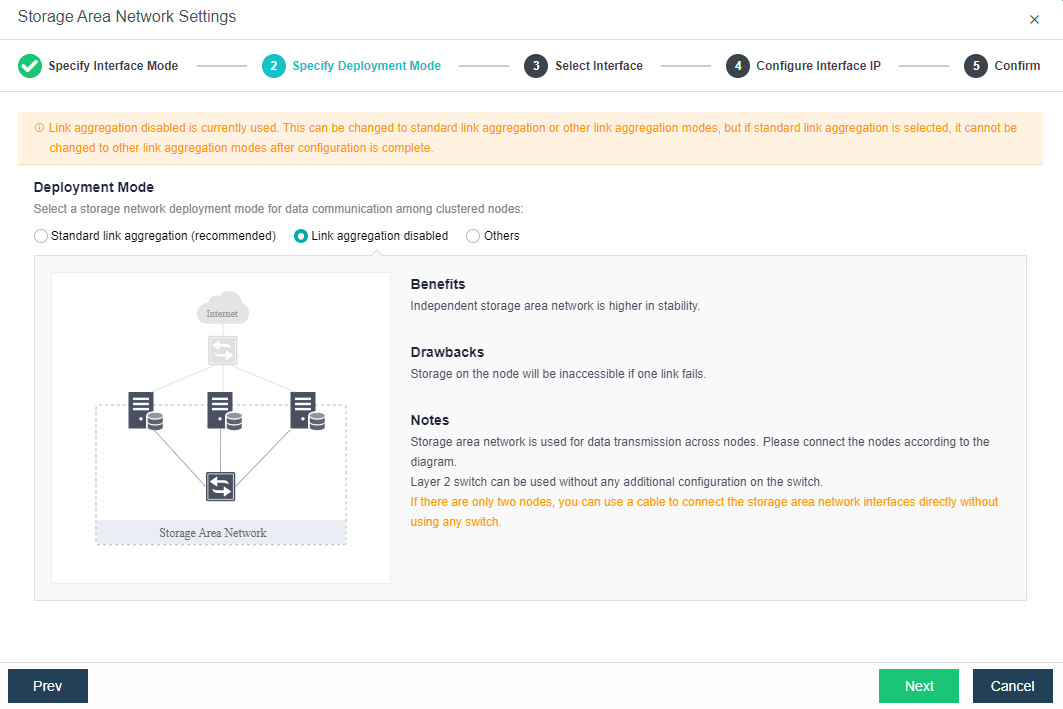

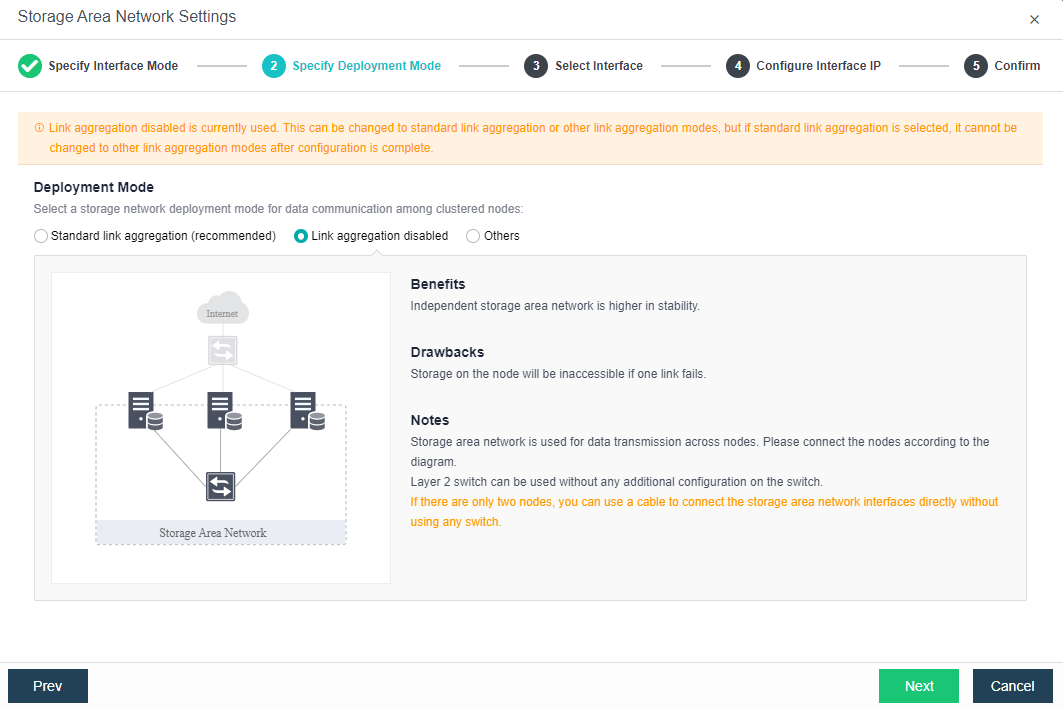

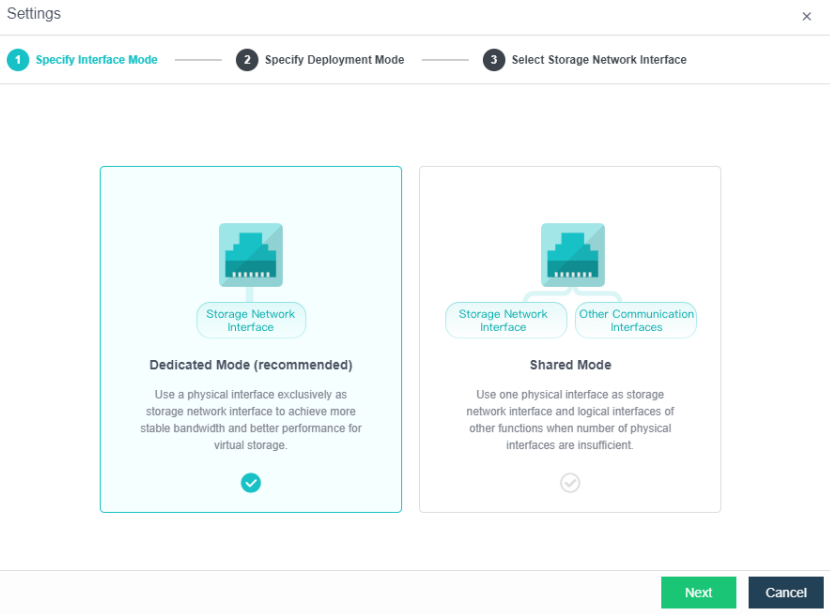

Deployment mode:

a. Standard link aggregation (recommended): By configuring interface aggregation on the node and switch, the node can connect to the third-party storage or third-party server through the virtual storage interface. If two switches are used, the switch must also be configured into stacking mode. Selecting the Layer 4 load balancing as the interface load balance mode is recommended.

b. No link aggregation: The network storing data communication is independent, and there is no requirement for the switch. It can be an ordinary layer 2 switch, and there is no need to make any configuration on the switch. However, when a link fails, the storage on the connected host will be directly unavailable.

c. Others

-

Link Aggregation with One Switch: A single switch is used for storage network link aggregation. There is no requirement for the switch. It can be an ordinary layer 2 switch, and there is no need to make any configuration on the switch. The failure of a single link does not affect storage communication.

-

Link Aggregation with two switches: Two switches are used for storage network link aggregation. There is no requirement for switches. Ordinary layer 2 switches do not need to make any configuration on the switches. They have high fault tolerance. Any line or switch failure will not affect storage communication.

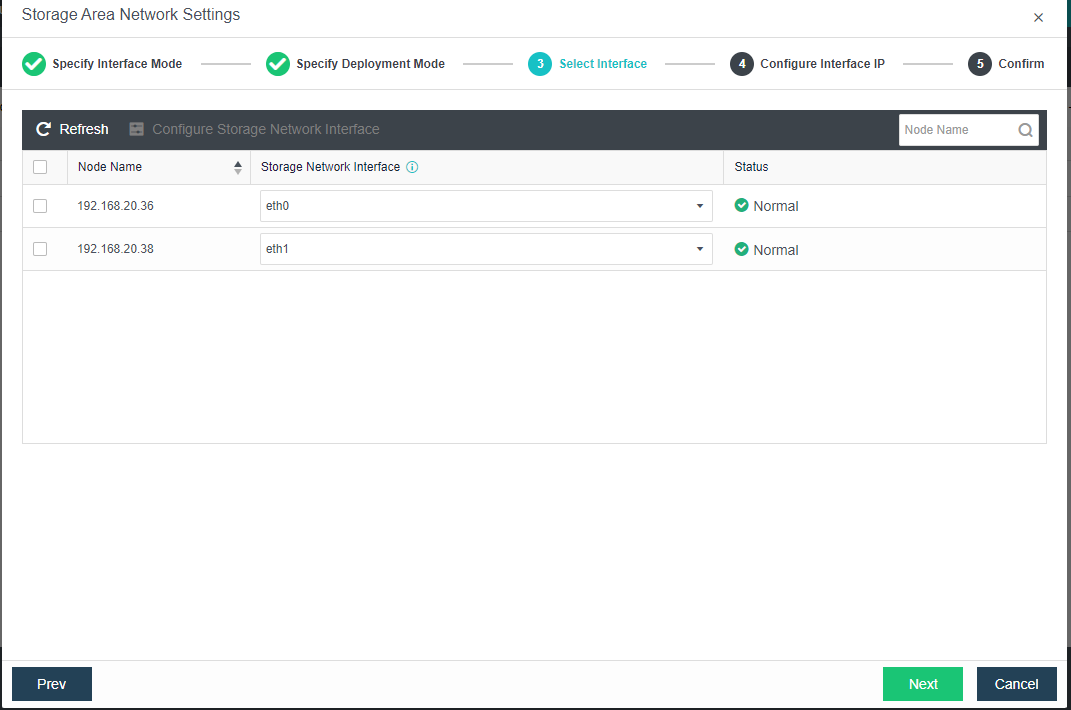

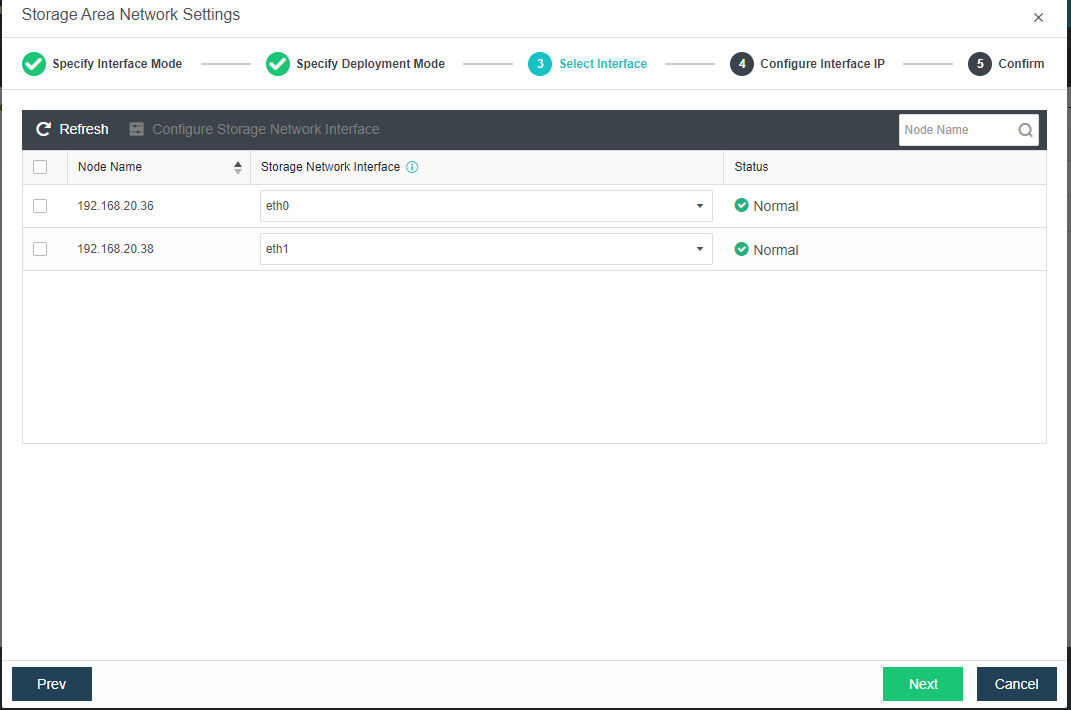

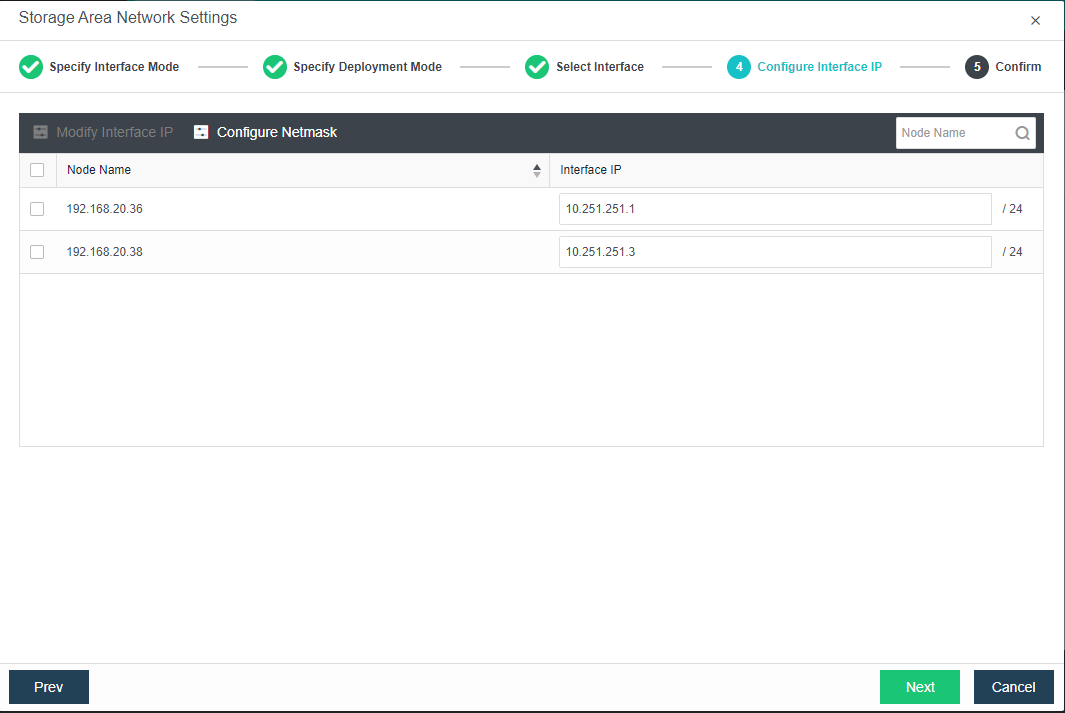

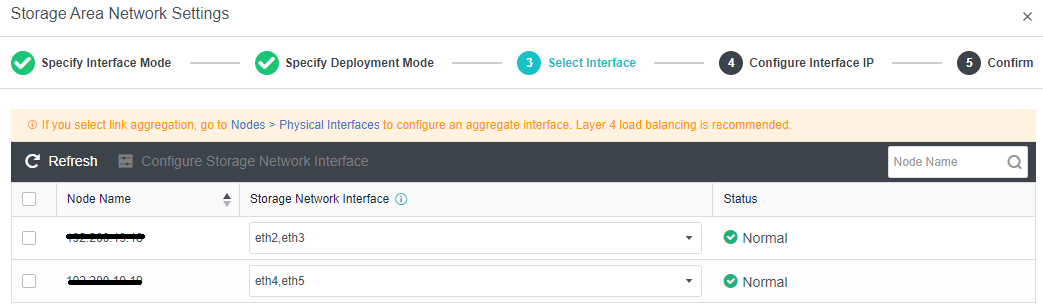

- Each node uses two interfaces for storage communication, and all communication interfaces are connected to the same layer-2 switch. The storage communication network interface between nodes will perform link aggregation by itself (the switch is also required to configure link aggregation). After selecting the storage communication deployment mode, you need to connect according to the schematic diagram of the deployment mode, then select the storage network interface corresponding to each node and configure the communication interface address.

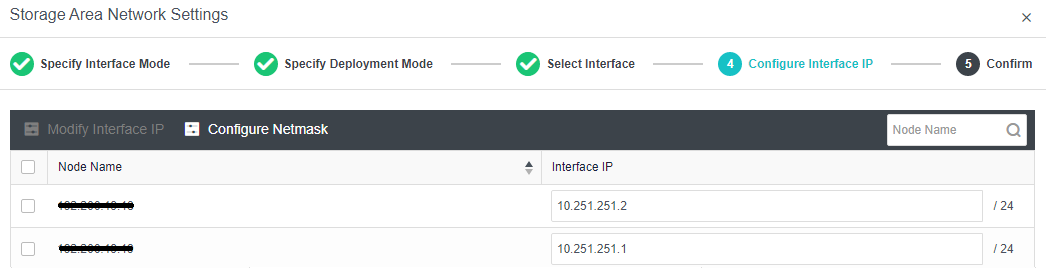

- After the storage network interface IP configuration is complete, the storage private network configuration is complete.

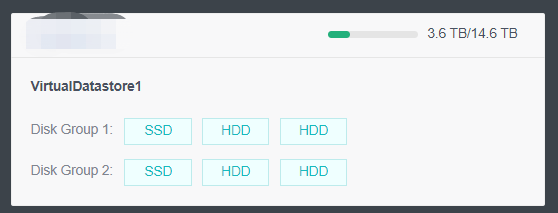

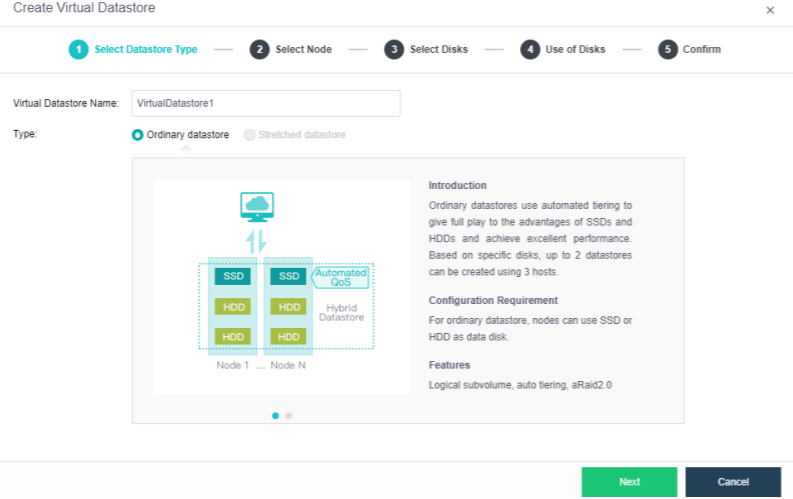

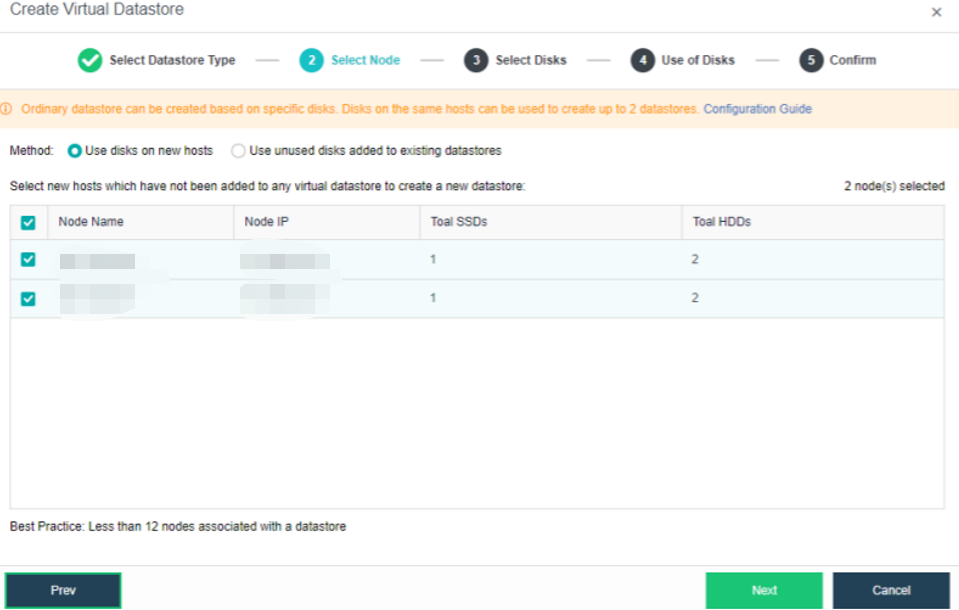

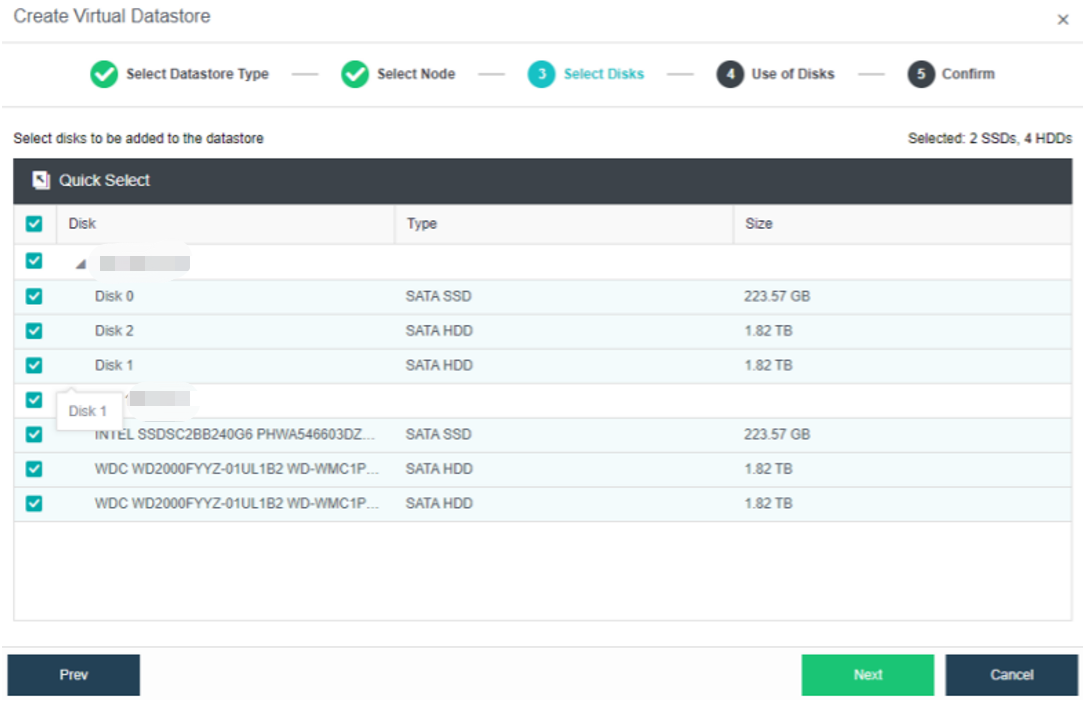

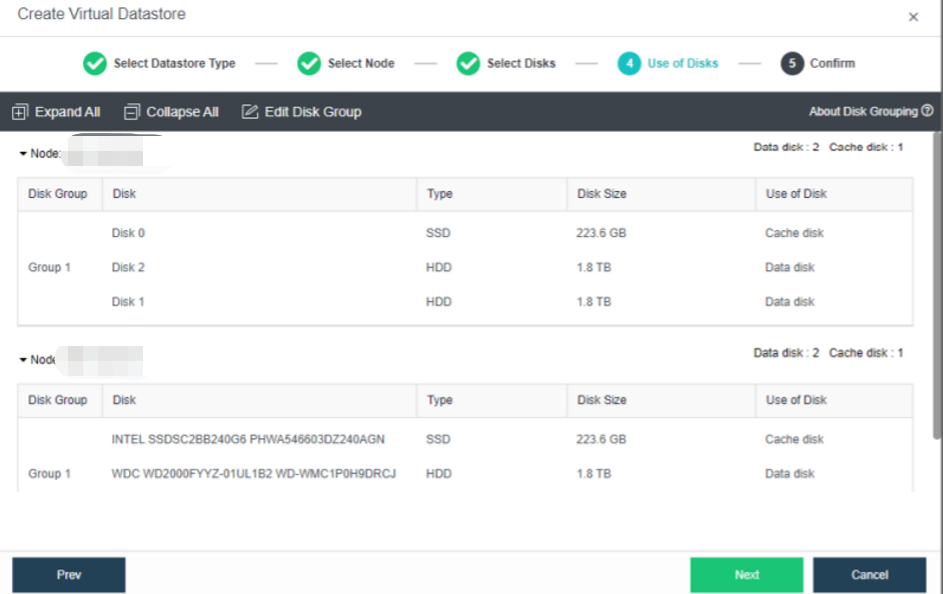

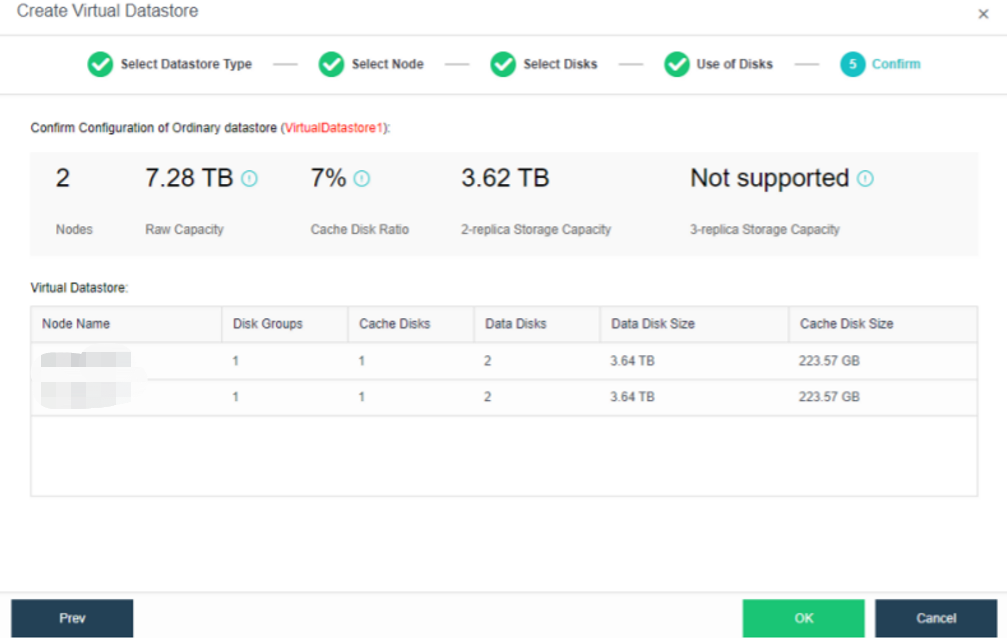

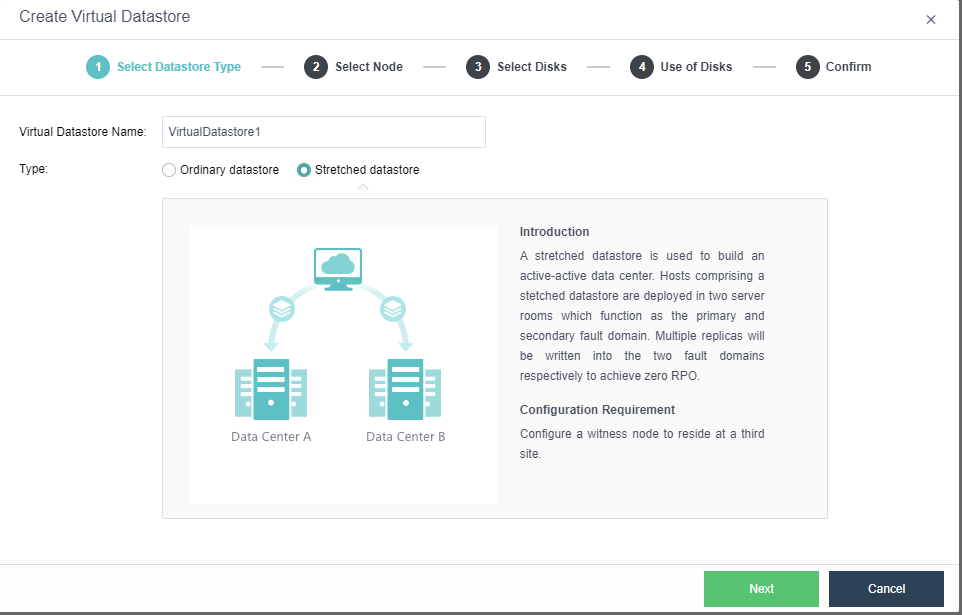

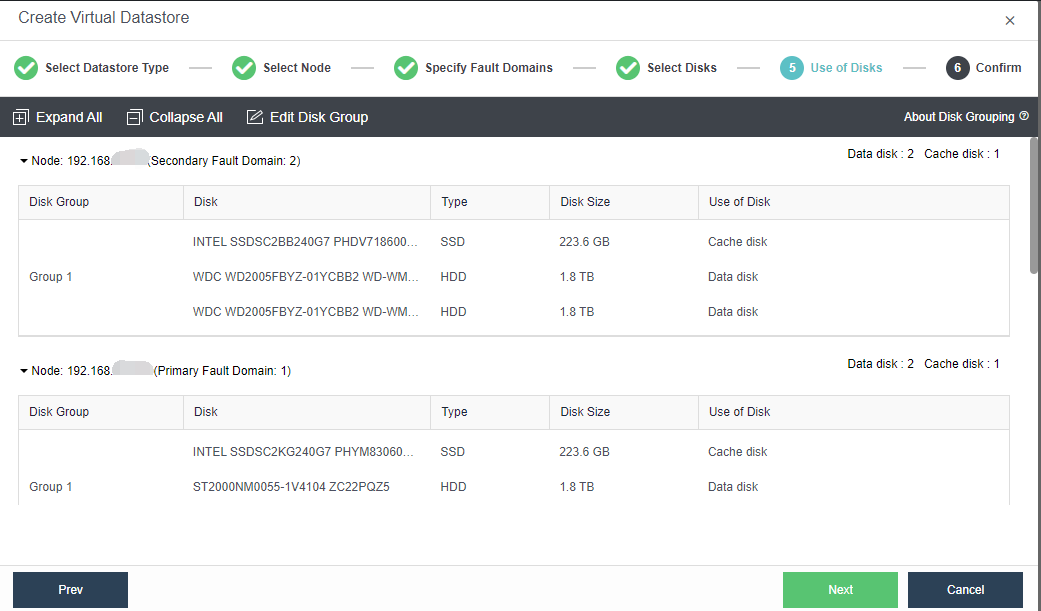

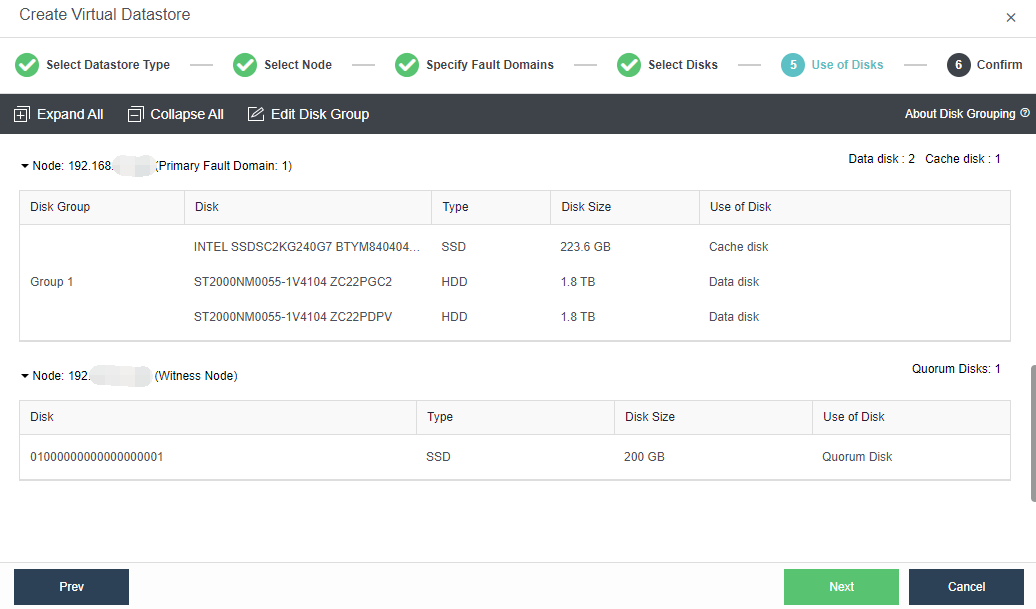

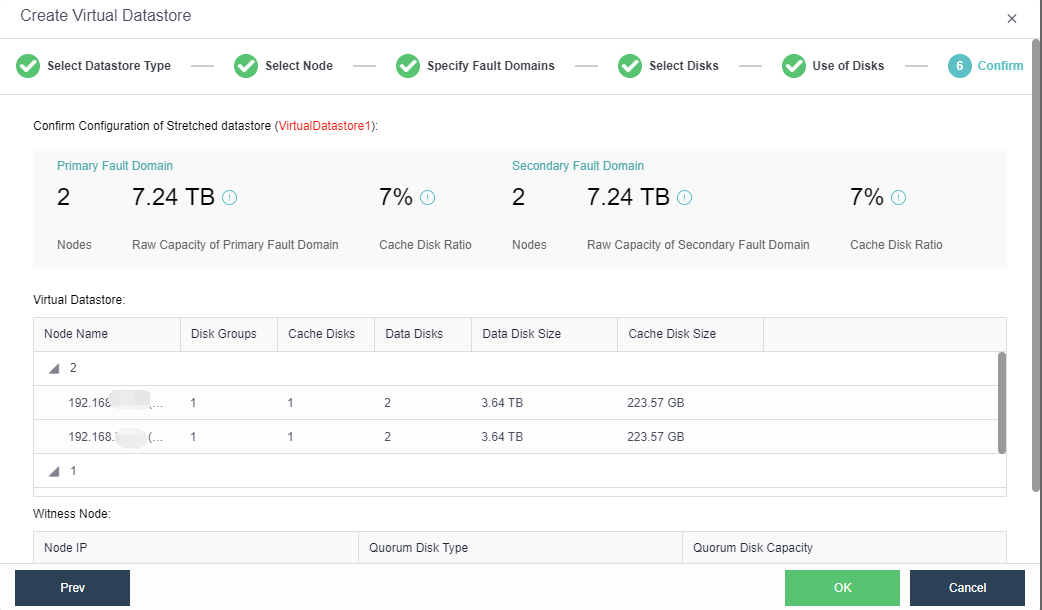

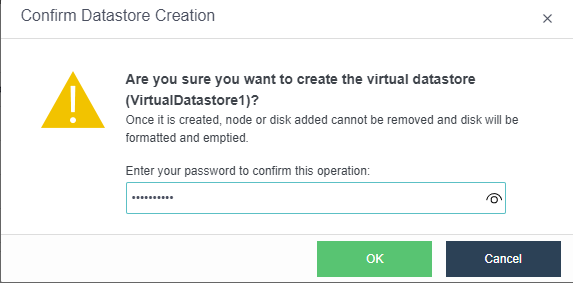

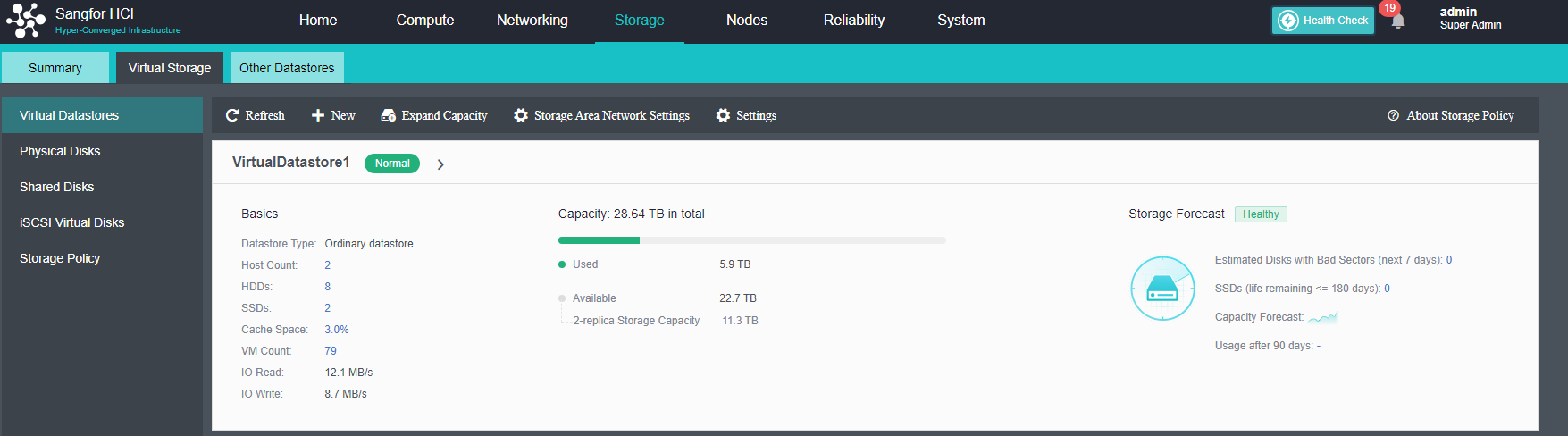

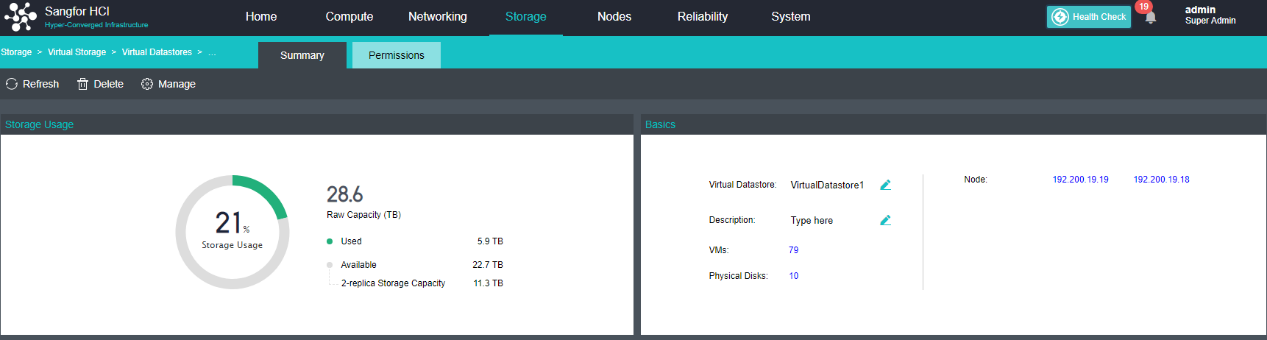

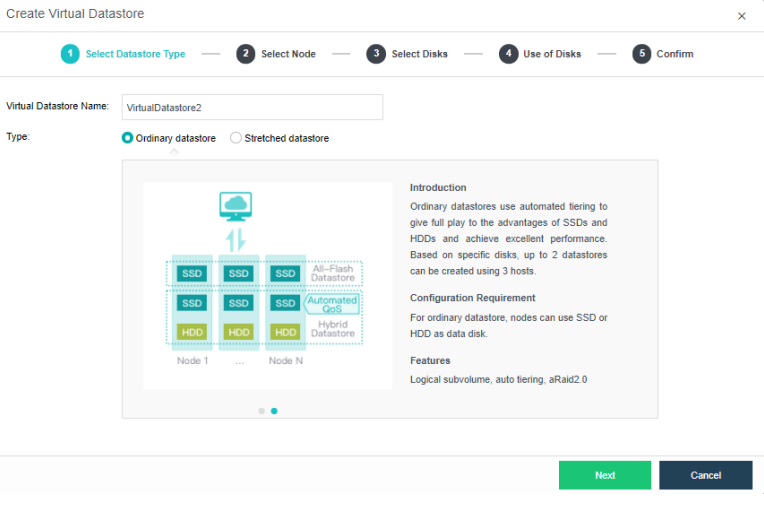

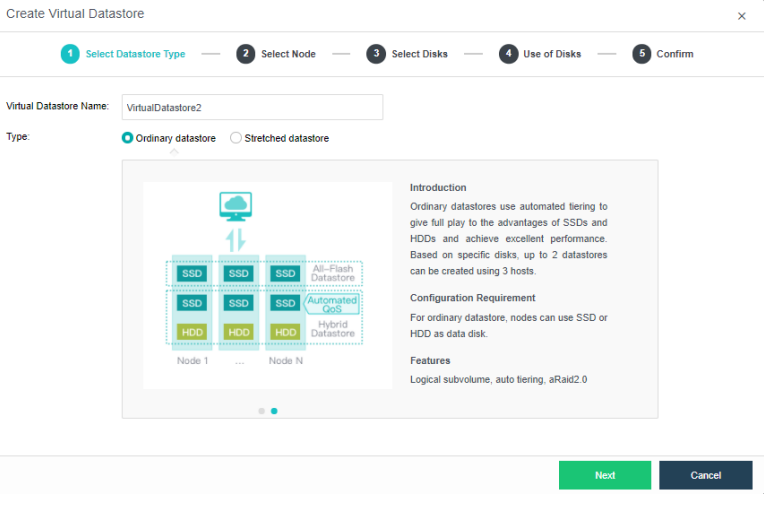

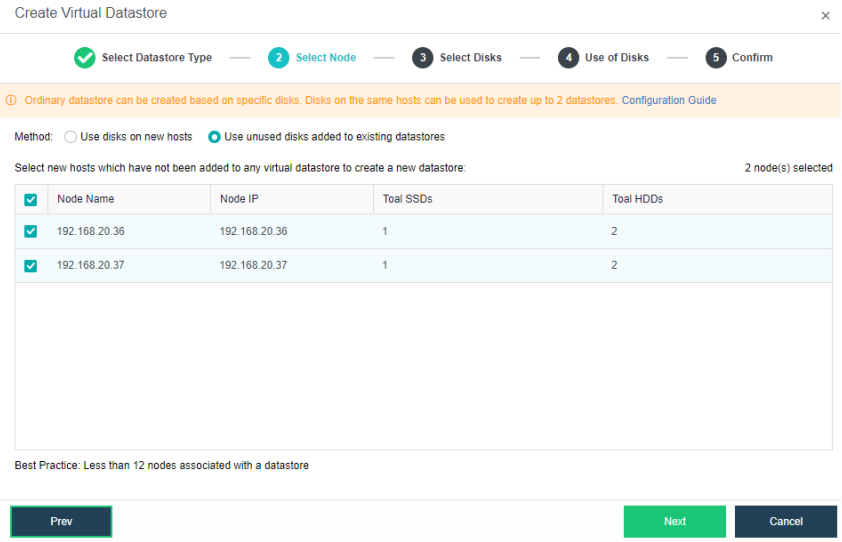

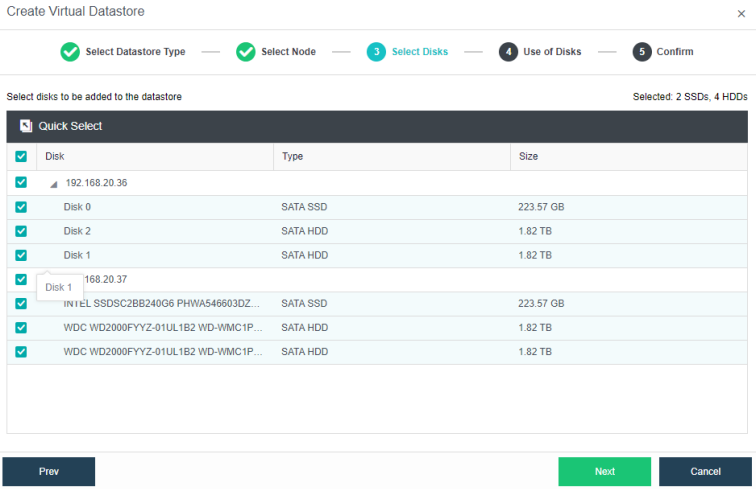

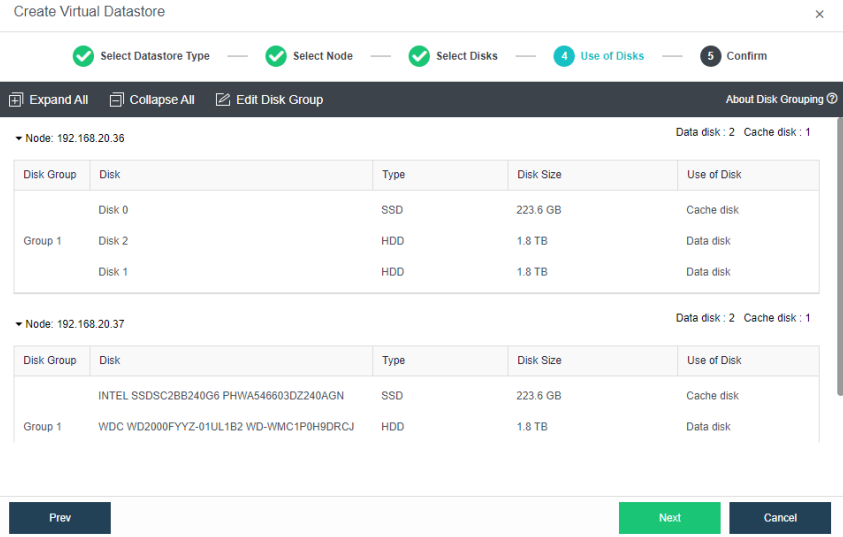

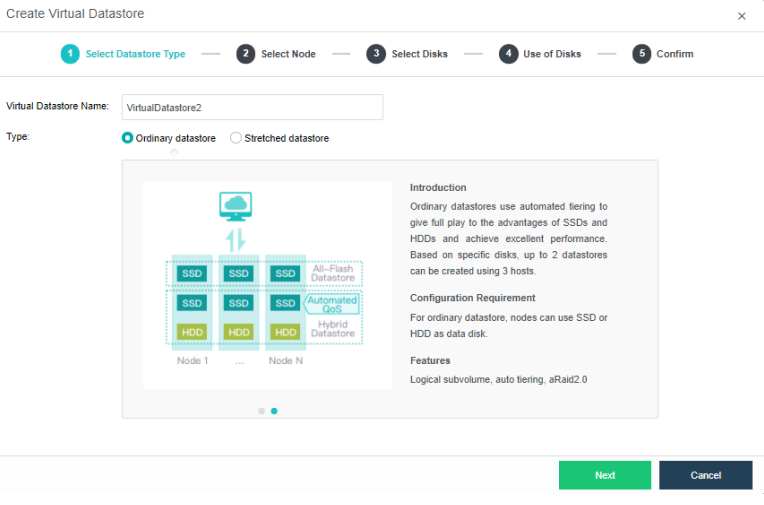

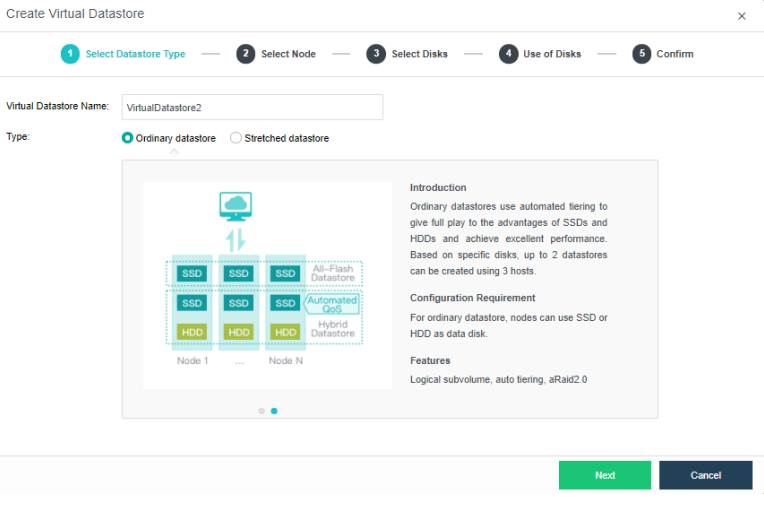

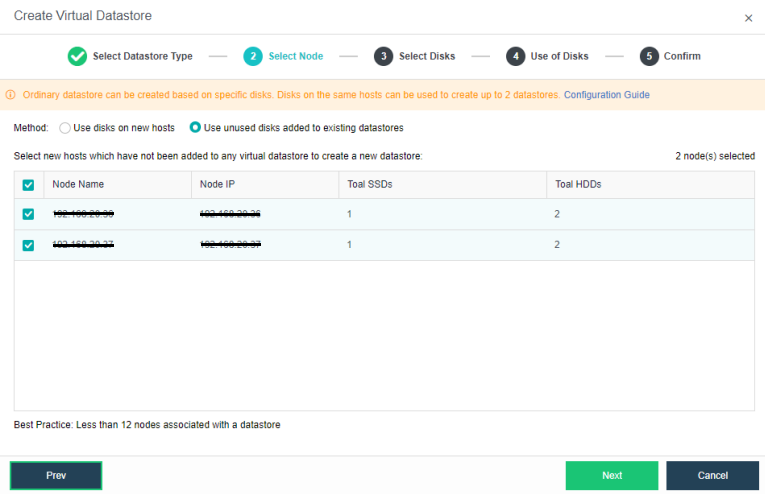

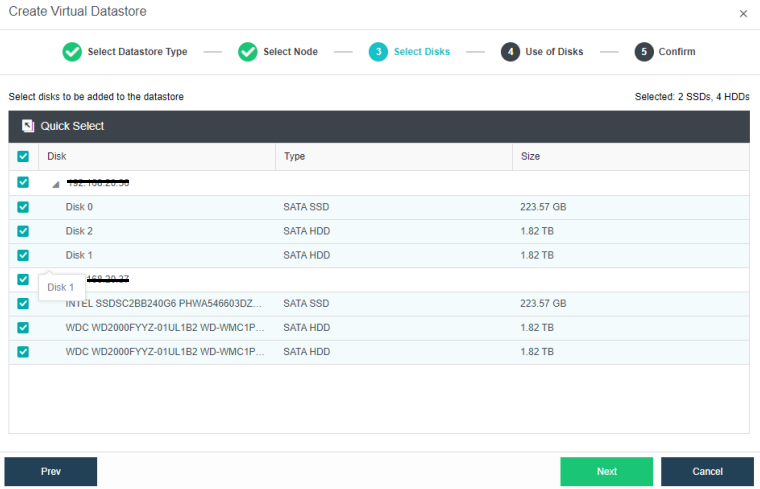

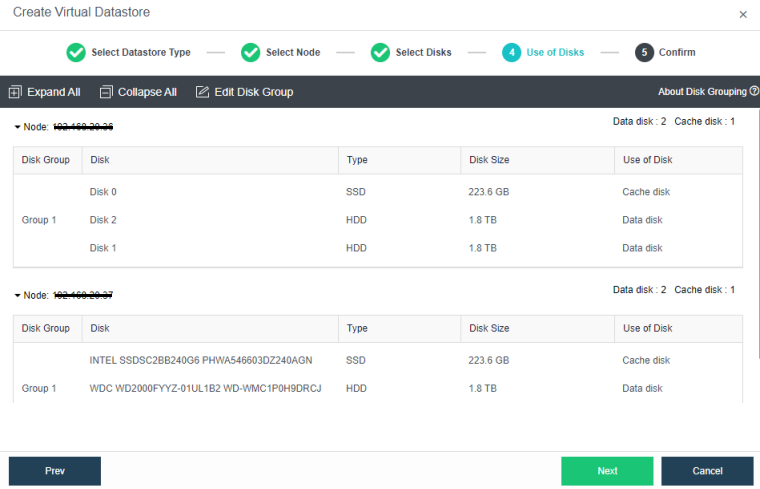

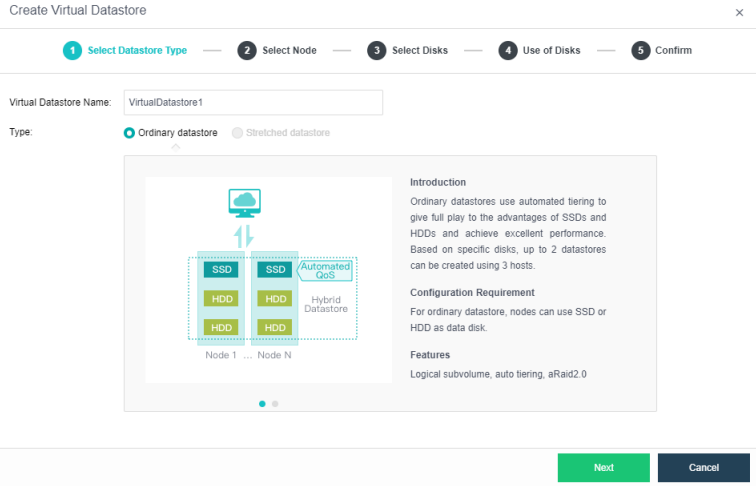

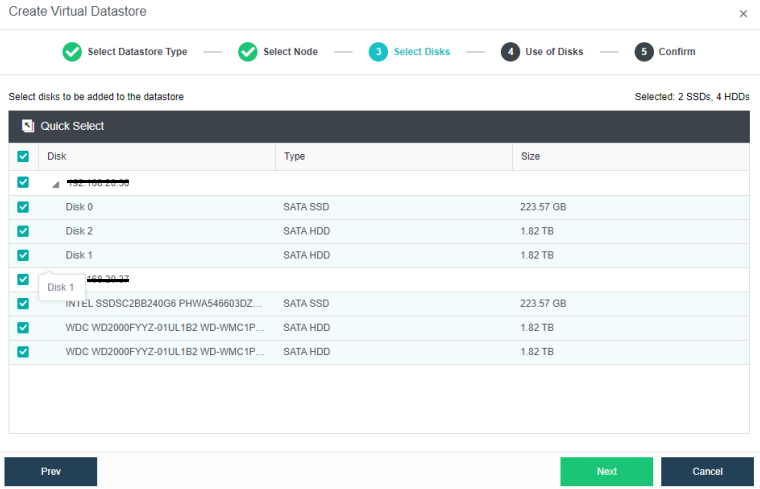

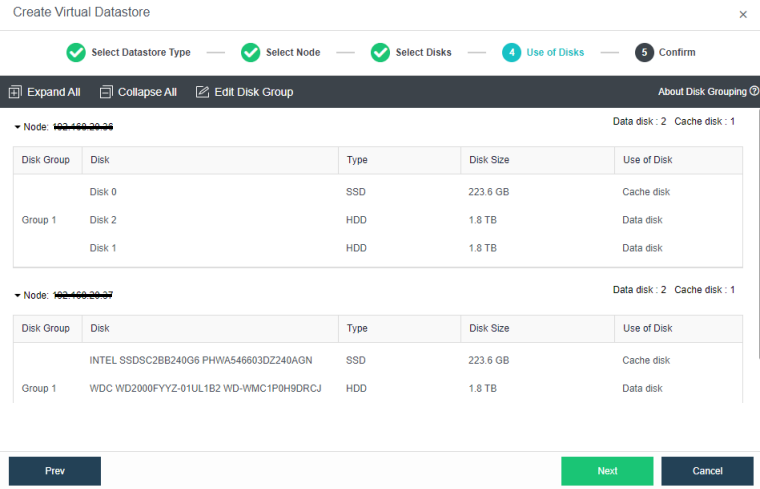

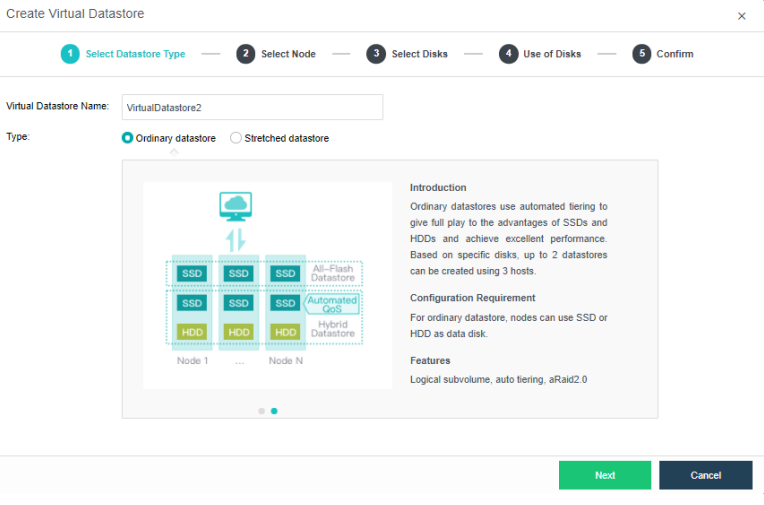

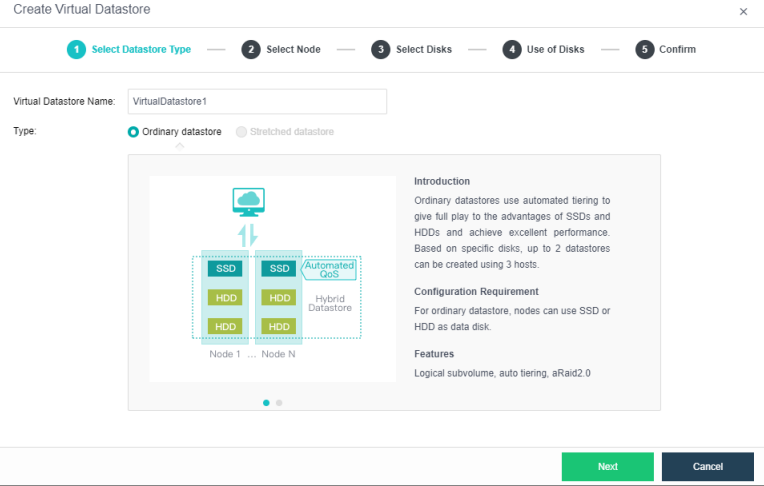

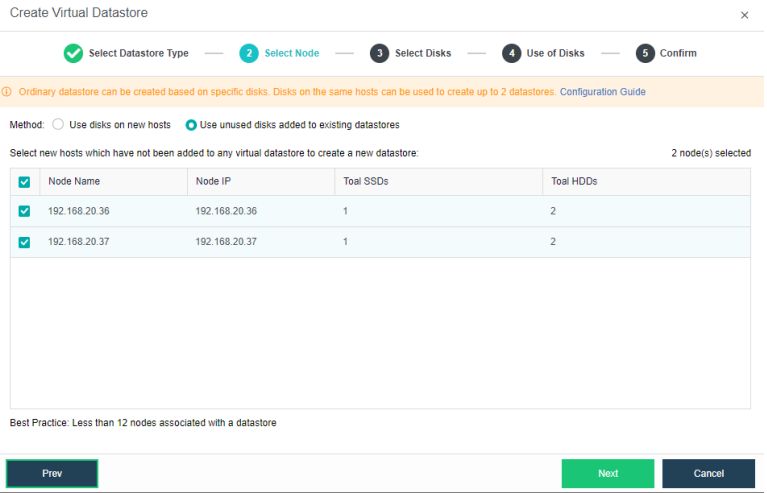

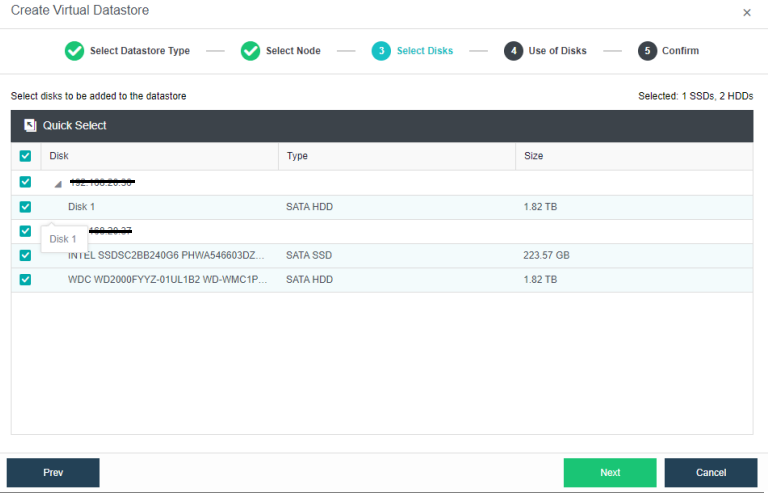

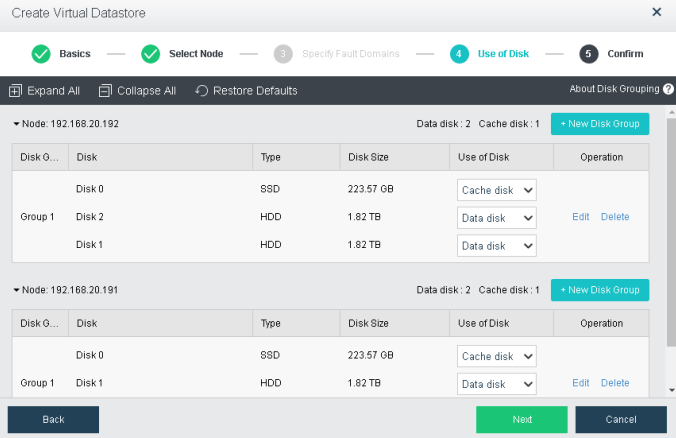

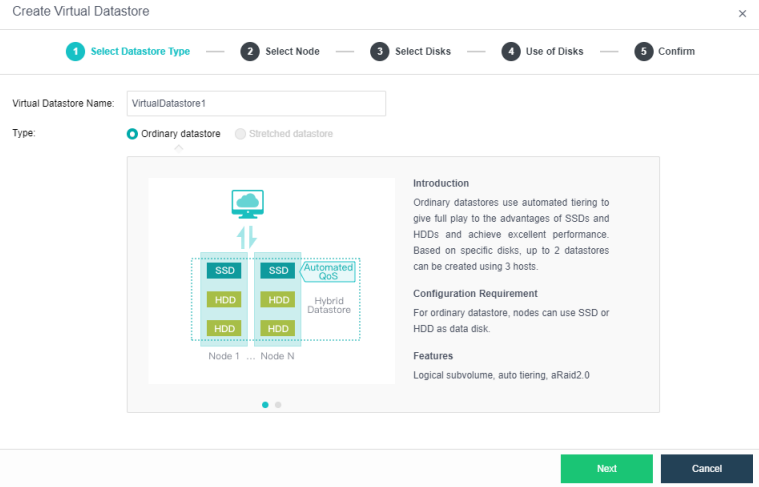

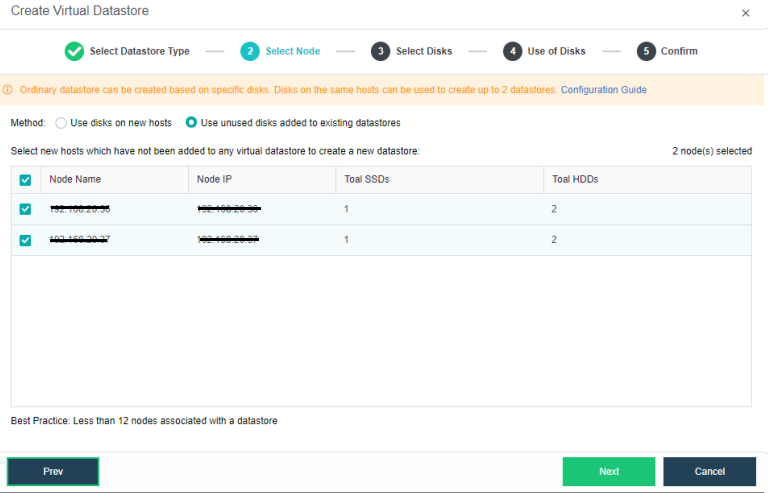

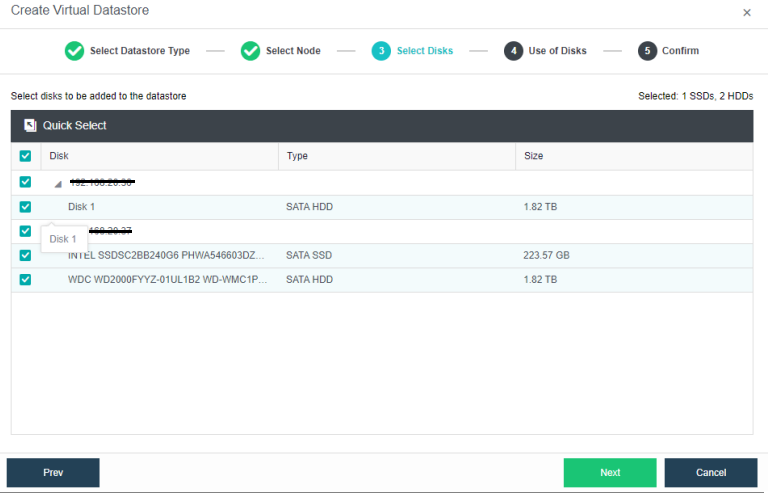

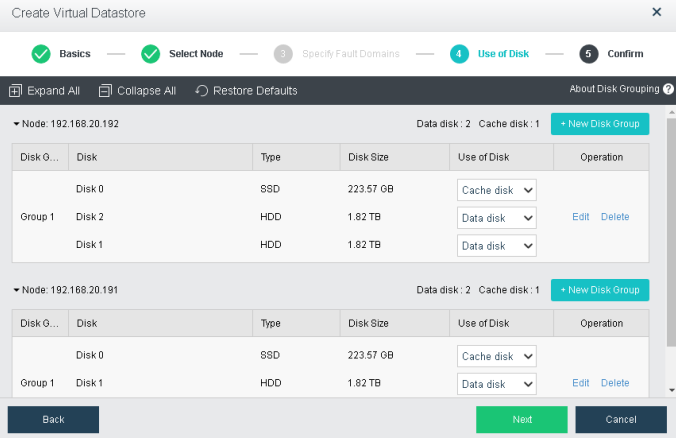

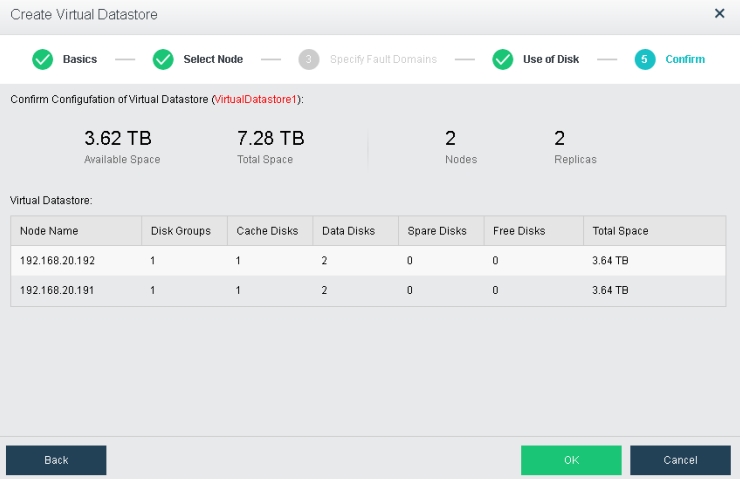

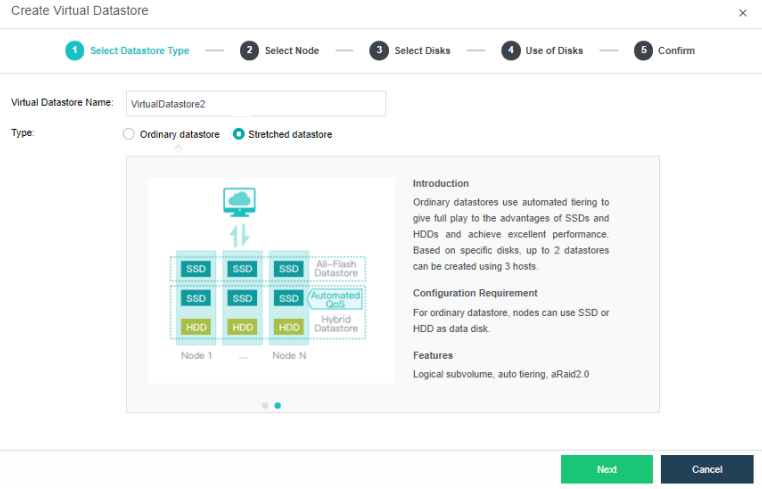

Configuring Datastore Type and Disks

The virtual storage volume is divided into Ordinary datastore and Stretched datastore. When the HCI cluster is planned to be a standard cluster, select Ordinary datastore. When planning an HCI cluster as a stretched cluster, select Stretched datastore.

During virtual storage initialization, there is no need to select the number of replicas. The same virtual datastore can configure two replicas and three replicas.

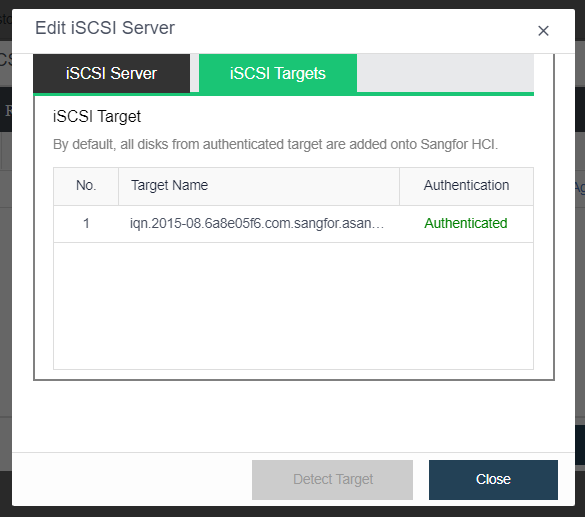

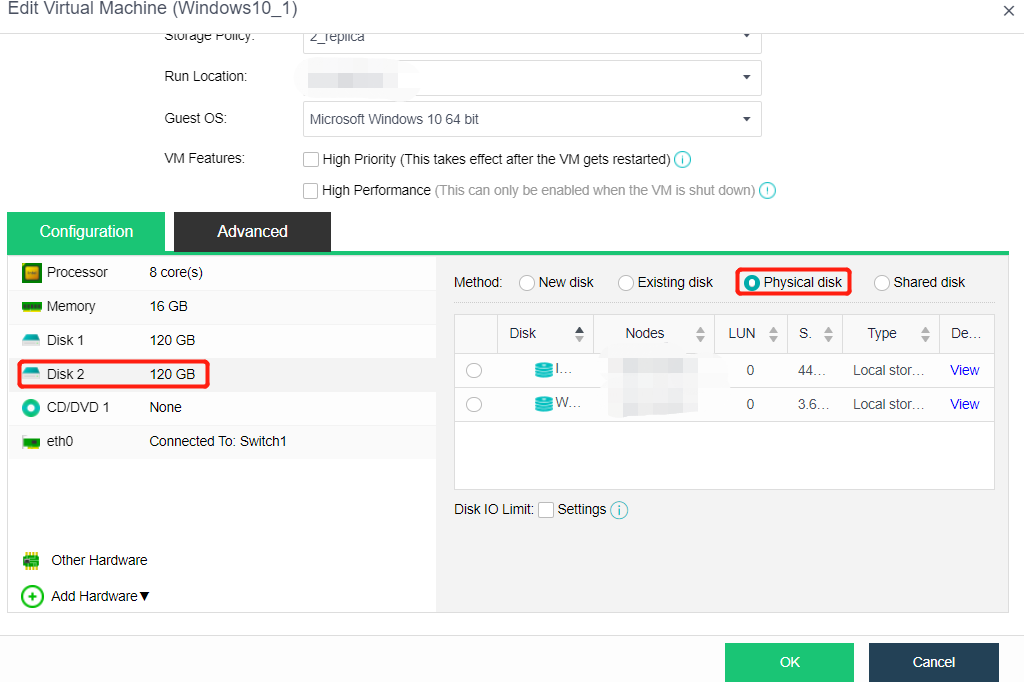

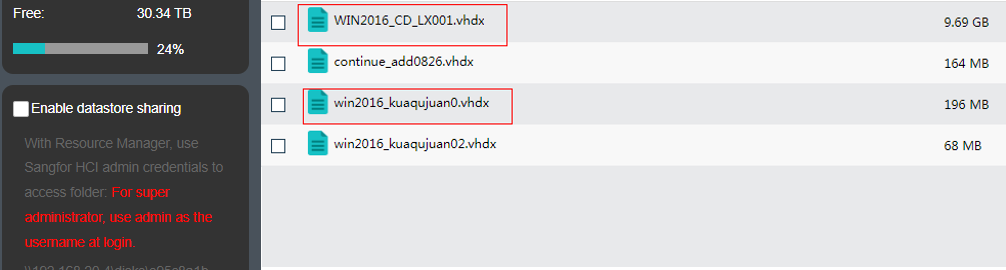

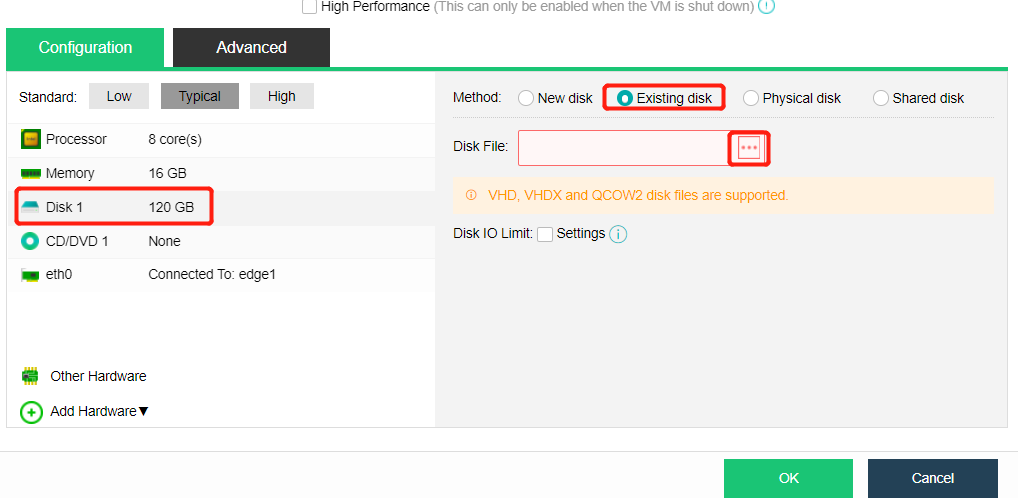

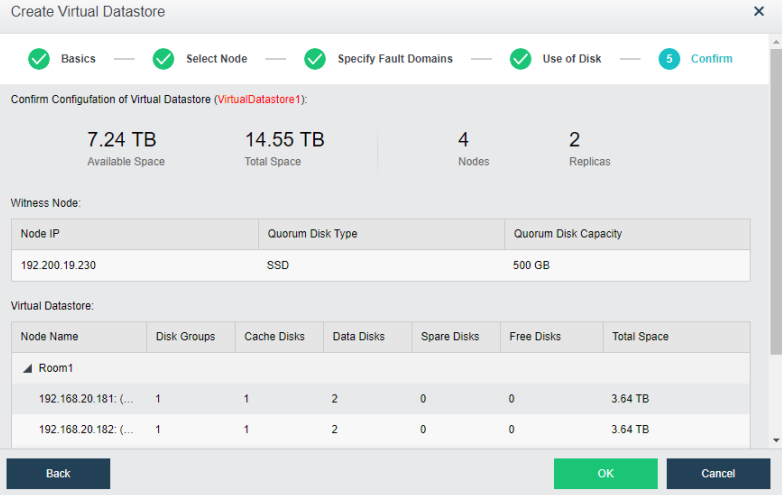

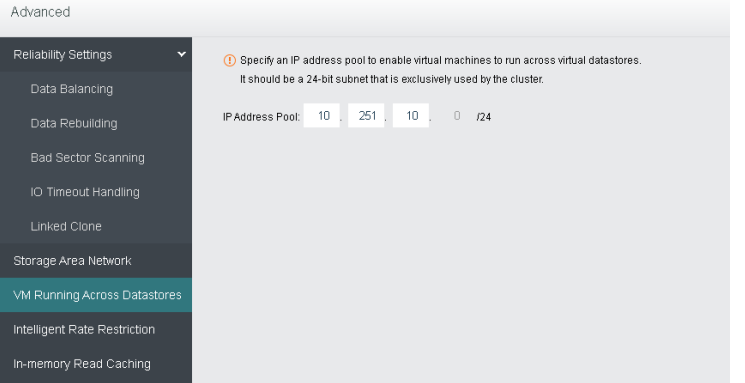

When the number of cluster nodes is three or four, if you need to use three replicas, each node must have two hard disk groups. There is no hard disk group limit when the number of cluster nodes is five or more.